Converged Plantwide Ethernet (CPwE) Design and Implementation Guide

Testing the CPwE Solution

Overview

This chapter describes the test plans and environment used to validate the key concepts outlined in this solution. This chapter covers the following:

• Key test objectives, activities, and exit criteria used to validate the solution

• The test environments in which the tests were conducted

• A summary of the test execution steps, including test scenarios and test cases

For detailed test results, refer to "Complete Test Data"; for analysis of those results, refer to "Test Result Analysis." The intention of providing this level of detail is to allow implementers of IACS networks to estimate the various characteristics of their own networks (e.g., network convergence) and to compare their own test results to determine whether improvements are possible following the guidelines outlined in this Design and Implementation Guide.

Introduction

Test Objective

The objective of the test was to provide input and background for the requirements and solution architecture. The test approach is designed to enable Cisco and Rockwell Automation to provide detailed design and implementation guidance for applying industrial Ethernet to production facilities. The test was also designed to produce results that customers and implementers of IACS networks can use to plan and design their own networks. This test plan is designed to test the key network characteristics that best support automation and control systems. The key plant network design requirements and considerations to be tested include the following:

• Performance/real-time communications (network latency and jitter)

• Availability (e.g., resiliency protocols)

• Adaptability (e.g., topology)

• Security (e.g., remote access)

• Scalability

The testing for the CPwE solution does not cover the following:

• Automation and Control applications and the IACS devices themselves

• Environmental conditions (temperature, shock, etc.) of the network infrastructure or automation and control devices

• Performance and scalability testing of the Manufacturing zone

• Full performance capability of the industrial Ethernet switches. In most well-designed IACS networks that apply the concepts in this guide, the IACS applications generally do not produce a volume of traffic anywhere near the performance thresholds of modern managed network switching infrastructure.

Test Equipment

The test equipment used for the testing falls into two categories: network infrastructure and IACS equipment.

Network Equipment

Table 7-1 lists the network equipment used in this solution testing.

For media interconnecting switches, either Cat 5E copper cabling or multi-mode fiber cabling was used.

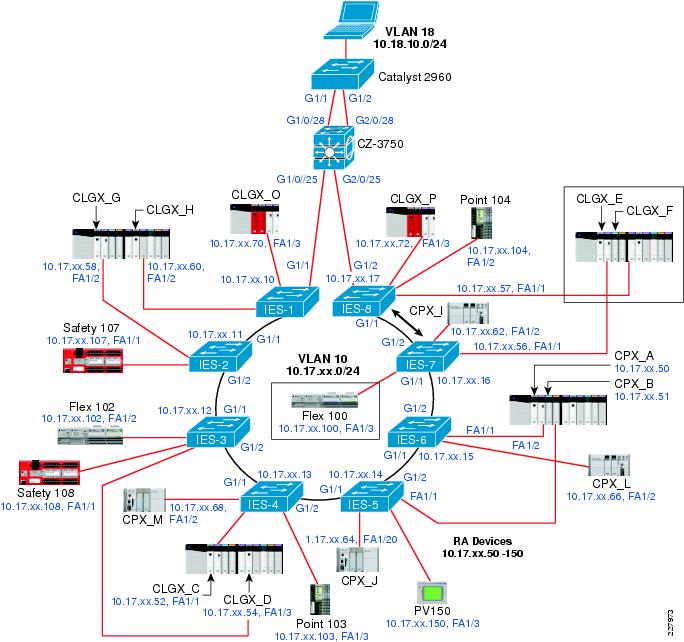

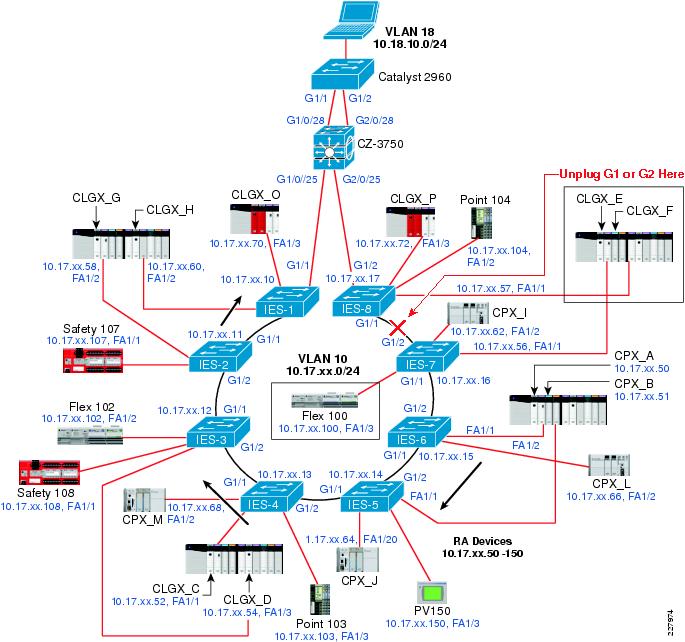

Network Topology

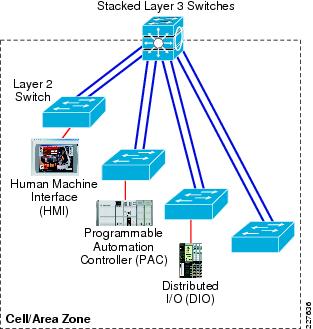

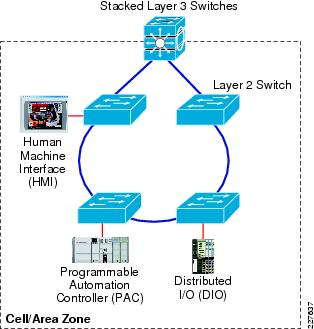

For the purposes of the testing, only resilient network topologies (ring and redundant star) were used. In all cases, a distribution switch was part of the ring or redundant star topology. Figure 7-1 shows the redundant star topology and Figure 7-2 shows the ring topology tested for this solution.

Figure 7-1 Redundant Star Topology

Figure 7-2 Ring Topology

IACS Equipment

Test Approach

To supply the feedback as required by the testing objectives, Cisco and Rockwell Automation designed the following two key tests to be executed:

• Network resiliency tests on a variety of test configurations measuring both application sensitivity to outages and network convergence (defined in the "Test Measurements" section)

• Screw-to-screw tests to measure latency as seen by the IACS application and the impact of network scalability on latency

This section describes each of the above tests in more detail, including the key objectives of each test.

Cisco and Rockwell Automation carried out these tests in each of their labs. For Cisco, the tests were conducted at the San Jose campus location. For Rockwell Automation, the tests were conducted at the Mayfield Heights location. The test environments of each lab were as similar as possible, where both labs were operating with the same level of IACS software, nearly identical IACS equipment, and nearly identical network configurations. The most significant difference was that the Rockwell Automation lab tested with Stratix 8000 IE switches and the Cisco lab tested with IE 3000 switches. As a result, there are some minor differences in the configurations, as noted in Chapter 4 "CPwE Solution Design—Manufacturing and Demilitarized Zones." The test results were very similar and have been integrated into this chapter.

In addition, Cisco and Rockwell Automation collaborated to establish secure remote access for the Rockwell Automation team to access Cisco's San Jose IACS lab. Rockwell Automation was able to securely access IACS applications in Cisco's IACS lab and assist with deployment and maintenance of the IACS equipment in Cisco's lab during the test phase. No particular test cases were developed, but the relevant configurations are supplied here and the design and implementation recommendations in this guide are based on the successful deployment of that feature. Note that Cisco and Rockwell Automation have not and will not produce detailed configurations of the IT portion of the secure remote access as that was deployed on operative Cisco production equipment.

Network Resiliency

The test was designed to measure network convergence under a number of fault and recovery situations in a variety of network configurations. Network convergence is defined as the time it takes the network to identify a failure (or restore) situation, make appropriate changes in switching functions, and reestablish interconnectivity. This test was designed to provide input and verify recommendations with regard to the following:

• Media, in particular the use of fiber versus copper for inter-switch uplinks

• Network topology (ring versus redundant star)

• Network scalability in terms of the number of switches and number of end-devices, in particular the impact of a larger network on network convergence

• Network resiliency protocol, in particular between Spanning Tree versions, EtherChannel, and Flex Links

• Network behavior in a variety of failure/restore scenarios, so customers and implementers can make decisions about how to react and potentially when to restore failed links or devices

This section covers the following:

• A description of the IACS application that was in operation during the testing

• The key measurements collected during the testing, including application sensitivity and network convergence

• A description of the test organization, including test variables, test suites developed, and test cases executed

IACS Application

The IACS application employs the following:

• 14 controllers, each with a dedicated network card

• 6 I/O modules

• 1 PanelView Plus HMI

Each network module was configured for approximately 80 percent utilization. Table 7-3 lists the approximate packets-per-second loading for the types of network modules in the test environment.

Table 7-3 Packets Per-Second Loading

Table 7-4 lists all the controllers, their communication modules, and the relevant network and IACS application data.

The following controllers have the specified tasks:

• CLGX_E: Monitors the status of its own I/O LED and the I/O LEDs of the other controllers. This is handled with GSV instructions locally and with a consumed tag within CLGX_E from each of the other controllers, with the exception of CLGX_F.

• CLGX_F: Contains the screw-to-screw test logic. CLGX_F is not involved in the network resiliency tests.

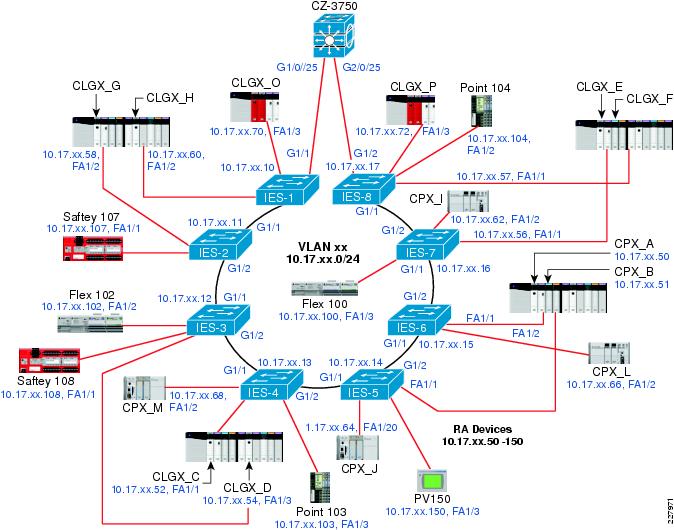

Figure 7-3 depicts the IACS devices and the network infrastructure for the Cell/Area zone in a ring topology that was used for testing network resiliency, application latency, and jitter.

Figure 7-3 IACS Devices and Network Infrastructure for Cell/Area Zone in Ring Topology

Test Measurements

This section describes the key measurements collected during the testing for CPwE, including network convergence and application sensitivity during network convergence.

Network Convergence

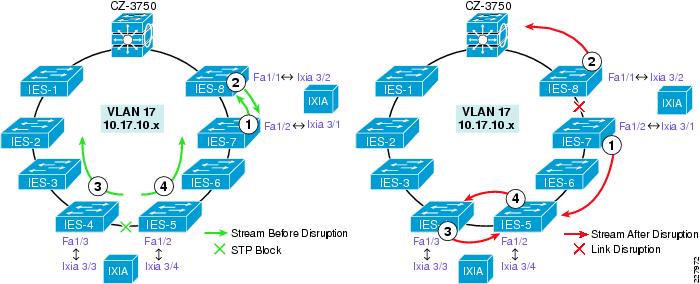

Network convergence is measured in the same manner as in Phase 1. The network traffic generator (see the "Test Equipment" section) generates bidirectional UDP unicast or multicast packet streams from two ports on the network topology. Each port both sends packets to and captures packets from the other port. Two such streams are used to measure network convergence from different points in the topology. The test streams were run for a set period of time, during which a topology change was executed (e.g., pulling an uplink cable or shutting down a port). Each port measures the number of packets received from the sending port. The convergence time is calculated using the following formula:

Convergence in milliseconds = [(Tx - Rx) / packet rate] * 1000 ms/s

Where:

Tx = Packets transmitted

Rx = Packets received

Packet rate = 10,000 packets per second
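
As a minimal sketch of the formula above, the following Python function converts transmitted and received packet counts into a convergence time. The function name `convergence_ms` and the sample packet counts are illustrative assumptions, not values from the test data; only the 10,000 packets-per-second rate comes from the text.

```python
def convergence_ms(tx_packets: int, rx_packets: int,
                   packet_rate_pps: int = 10_000) -> float:
    """Estimate convergence time in milliseconds from packet loss.

    Implements: [(Tx - Rx) / packet rate] * 1000 ms/s
    """
    if packet_rate_pps <= 0:
        raise ValueError("packet rate must be positive")
    if rx_packets > tx_packets:
        raise ValueError("received count cannot exceed transmitted count")
    lost_packets = tx_packets - rx_packets
    # Multiply before dividing to keep the arithmetic exact for typical counts.
    return lost_packets * 1000.0 / packet_rate_pps


# Hypothetical example: 750 packets lost at 10,000 packets per second.
print(convergence_ms(tx_packets=600_000, rx_packets=599_250))  # 75.0
```

At the stated packet rate, each lost packet corresponds to 0.1 ms of outage, which sets the resolution of the measurement.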

Typically, the network resiliency test collects network convergence from four streams of data. Figure 7-4 shows an example of the streams in a ring topology. The same switches and ports were used in the redundant star topologies.

Figure 7-4 Network Resiliency Test Streams - Ring Topology

Table 7-5 lists the network resiliency test streams.

In "Complete Test Data," each test suite has a topology diagram with the test streams indicated.

As noted, two types of test streams were used to calculate network convergence: unicast and multicast UDP. The UDP unicast stream more closely represents peer-to-peer traffic. The UDP multicast stream more closely represents Implicit I/O traffic. For a number of reasons, network convergence based on multicast streams was measured only in redundant star configurations where EtherChannel or Flex Links was the resiliency protocol. Because Spanning Tree does not recover unicast or multicast traffic within the timeframe needed to support either type of I/O traffic (peer-to-peer or Implicit I/O), network convergence for multicast streams was not measured in any of the Spanning Tree test suites.

Application Sensitivity

The tests are designed to measure three types of application flows that are commonly designed into automation and control systems. These flows are used to measure the impact that network disruption and the resulting network convergence have on the application traffic flow.

• HMI (explicit): Communication established between controllers and HMI devices. The flow is designed to tolerate network disruptions of under 20 seconds without being disrupted. This is an example of information/process traffic as described in the "Real-Time Communication, Determinism, and Performance" section.

• Peer-to-peer (implicit unicast): Communication between controllers that is typically passed via UDP unicast packets. This flow was designed to tolerate network disruptions of under 100 ms without being disrupted. This is an example of time-critical traffic as described in the "Real-Time Communication, Determinism, and Performance" section.

• Implicit I/O (implicit multicast): Communication between controllers and I/O devices and drives that is typically passed via UDP multicast packets. This flow was designed to tolerate network disruptions of under 100 ms without being disrupted. This is an example of time-critical traffic as described in the "Real-Time Communication, Determinism, and Performance" section.

These traffic flows represent the type of IACS data and network traffic that is handled by the network infrastructure. If any of the operational devices were to have a communication fault, the IACS application would display the controller and flag at fault. The peer-to-peer and Implicit I/O traffic types are relatively similar in that they both time out at around 100 ms and are both based upon UDP traffic. The difference between them is whether unicast or multicast communication modes are used to communicate the data. In all network resiliency test cases, the IACS application was operational and communicating data. Therefore, the base test case has the following:

• 21 IACS devices on the network

• Producing 80 streams of data (90 multicast groups)

In the current IACS application, only one of the implicit traffic flows, peer-to-peer or Implicit I/O, is operational during a test run. Cisco and Rockwell Automation chose to operate the Implicit I/O traffic flow for the redundant star topologies.

The tests were designed to measure whether or not the application times out when a network fault or restoration is introduced, indicating whether the network converged fast enough to have the application avoid faulting. These test results are included in the appendices.
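
The relationship between convergence time and the three flow types can be sketched as a simple threshold check. This is an illustrative simplification: the tolerance values are the design figures quoted in this section (20 seconds for HMI, 100 ms for peer-to-peer and Implicit I/O), while the names `FLOW_TOLERANCE_MS` and `flows_at_risk` are hypothetical. Actual timeout behavior depends on how the application and its connections are configured.

```python
# Design tolerances quoted in this section, in milliseconds.
# Names and structure are illustrative assumptions.
FLOW_TOLERANCE_MS = {
    "hmi": 20_000,          # explicit, information/process traffic
    "peer_to_peer": 100,    # implicit unicast, time-critical
    "implicit_io": 100,     # implicit multicast, time-critical
}


def flows_at_risk(convergence_time_ms: float) -> list[str]:
    """Return the flows whose design tolerance is not met.

    A flow is designed to ride through disruptions *under* its
    tolerance, so a disruption equal to or longer than the tolerance
    is treated as a potential timeout.
    """
    return [
        flow
        for flow, tolerance_ms in FLOW_TOLERANCE_MS.items()
        if convergence_time_ms >= tolerance_ms
    ]


print(flows_at_risk(50))    # []
print(flows_at_risk(150))   # ['peer_to_peer', 'implicit_io']
```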

Test Organization

This section describes the organization of the network resiliency testing. First, there is a description of the test variables. This is a summary of the key variables that the network resiliency tests took into consideration. Second, there is a description of the test suites. A test suite in this case is a network topology and configuration. For each test suite, a variety of test cases were executed. For each test case, a number of test runs were executed for a variety of inserted MAC addresses to simulate additional end-devices. Each test run was executed with the IACS application in operation and measuring whether any controller lost a connection during the test run.

Test Variables

Table 7-6 outlines the key test variables incorporated into the CPwE testing.

Test Suites

The test suite represents a network topology, resiliency protocol, and uplink media-type. Table 7-7 lists the test suites performed.

Note the naming convention:

• The first letter is for topology, where R is for ring and S is for redundant star.

• The second letter is for resiliency protocol, where M is for MSTP, P is for RPVST+, E is for EtherChannel, and F is for Flex Links.

• The third letter is for uplink media type, where C is for copper (Cat 5E or 6) cabling and F is for fiber.

• The last number is for the number of switches in the topology, 8 or 16.
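
The naming convention above can be decoded mechanically. The following sketch is illustrative only; the names `SuiteName` and `parse_suite_name` are hypothetical and not part of any tooling described in this guide. It decodes a suite name such as RMF8 into its four components.

```python
from dataclasses import dataclass

TOPOLOGIES = {"R": "ring", "S": "redundant star"}
PROTOCOLS = {"M": "MSTP", "P": "RPVST+", "E": "EtherChannel", "F": "Flex Links"}
MEDIA = {"C": "copper", "F": "fiber"}
SWITCH_COUNTS = {8, 16}


@dataclass(frozen=True)
class SuiteName:
    topology: str
    protocol: str
    media: str
    switches: int


def parse_suite_name(name: str) -> SuiteName:
    """Decode a test suite name: topology, protocol, media, switch count."""
    if len(name) < 4:
        raise ValueError(f"suite name too short: {name!r}")
    topo, proto, media, count = name[0], name[1], name[2], name[3:]
    if topo not in TOPOLOGIES or proto not in PROTOCOLS or media not in MEDIA:
        raise ValueError(f"unknown letter code in suite name: {name!r}")
    if not count.isdigit() or int(count) not in SWITCH_COUNTS:
        raise ValueError(f"switch count must be 8 or 16: {name!r}")
    return SuiteName(TOPOLOGIES[topo], PROTOCOLS[proto], MEDIA[media], int(count))


print(parse_suite_name("RMF8"))
# SuiteName(topology='ring', protocol='MSTP', media='fiber', switches=8)
```

The parser only checks the letter codes; it does not check whether a given combination was actually tested. Table 7-7 remains the authoritative list of test suites.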

Test Cases

For each test suite, a variety of test cases were executed. A test case represents a type of network event, either a disruption or restoration. Table 7-8 describes the eight test cases executed. For each test case, a number of test runs were performed with varying numbers of simulated end-devices generated by the network traffic generator.

Note: The root bridge up/down test cases were only executed in the ring topology test suites. In a redundant star, the root bridge down represents a catastrophic failure where the network is no longer viable.

In nearly all cases, some network disruption is expected. The exception was Flex Links, which places a restored connection into standby mode and therefore has no noticeable impact on the traffic flows.

Summary

Table 7-9 lists all the test suites and test cases that were executed for the network resiliency portion of the CPwE test.

Not all test cases were executed for each topology for the following reasons:

• Root bridge up/down test cases were only executed in ring topology test suites. In a redundant star, the root bridge down represents a catastrophic failure where the network is no longer viable.

• Uplink media type only has an impact on the link disruption test cases. Therefore, the Root Bridge and StackMaster tests were not completed for test suite RMF8.

Application-Level Latency and Jitter (Screw-to-Screw)

Screw-to-screw testing exposes how long it takes an I/O packet of data to make a round trip through the IACS hardware and network architecture, in other words application latency. By comparing these test results in various scenarios, the impact of network latency on the application latency is highlighted.

The approach of this test is to operate the screw-to-screw test in each of the test suites identified in the network resiliency test under two scenarios: short-path and long-path. In the short-path scenario, no network faults are in place and the I/O traffic passes between two or three switches, depending on the topology. In the long-path scenario, a fault already in place diverts the I/O traffic over the long path. The resulting differences in application latency between the short-path and long-path scenarios are, in theory, attributable to the additional switches in the network path. The application latency test results are summarized later in this chapter and analyzed in "Test Result Analysis."

This section covers the following:

• Test cases executed for the screw-to-screw test

• IACS application, describing the screw-to-screw test from an application perspective

• Test measurements, describing how the application latency is measured

Test Cases

This screw-to-screw test was conducted on the same test suites identified in the network resiliency test. This section highlights the short-path and long-path scenarios in an 8-switch ring topology.

Short Path

Communication between the relevant I/O and controller is between adjacent switches with an active link, as shown in Figure 7-5.

Figure 7-5 Short Path

Long Path

Communication between the relevant I/O and controller traverses the ring of network switches when the link between adjacent switches is disrupted, as shown in Figure 7-6.

Figure 7-6 Long Path

Table 7-10 lists the data for the screw-to-screw test performed.

Table 7-10 Screw-to-Screw

Note: Tests with Flex Links were not performed as there were no significant path differences.

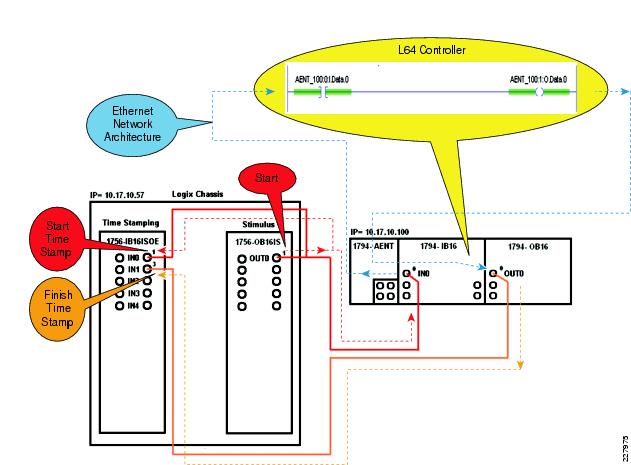

IACS Application

The screw-to-screw test is essentially measuring communication between a controller (CLGX_F) and an EtherNet/IP (EIP) connected I/O device (Flex I/O 100). The communication takes two paths, one via the EIP network modules and the IACS network, the other via direct hardwire connections. This section describes the I/O paths and hardwire path.

I/O Packet Path

The I/O packet of data is in the form of a 24 VDC pulse. The pulse is time stamped when it is first sent and again after it travels through the hardware and architecture, as shown in Figure 7-7.

A 24 VDC pulse is generated from the 1756-OB16IS module. This pulse takes two paths:

• Path 1: The 1756-IB16ISOE module time stamps the 24 VDC pulse when it is first generated by the 1756-OB16IS module (registering on IN0 of the 1756-IB16ISOE module) and again when the pulse has been generated by the 1794-OB16 module. These are the start and finish time stamps, which are compared to determine how long the pulse takes to travel through the hardware and network architecture.

• Path 2: The 1794-IB16 module registers the 24 VDC pulse as an input condition into the L64 controller.

The 24 VDC pulse is registered by the 1794-IB16 module and is sent every 10 ms to the L64 controller in the form of an I/O packet of data via the 1794-AENT module and network architecture.

Once the I/O packet of data arrives in the L64 controller, the 1794-IB16 input point IN0 registers as a value of (1) or a High signal (AENT_100:0:I.Data.0) and, through ladder application code, drives an output instruction (AENT_100:1:O.Data.0). This output packet of data is sent back through the network architecture and the 1794-AENT module every 10 ms and turns on the output point OUT0 on the 1794-OB16 module.

The 24 VDC pulse generated (passed on) by the 1794-OB16 module finishes its travels by registering as a time stamp on the 1756-IB16ISOE module input IN1.

Figure 7-7 I/O Packet Path

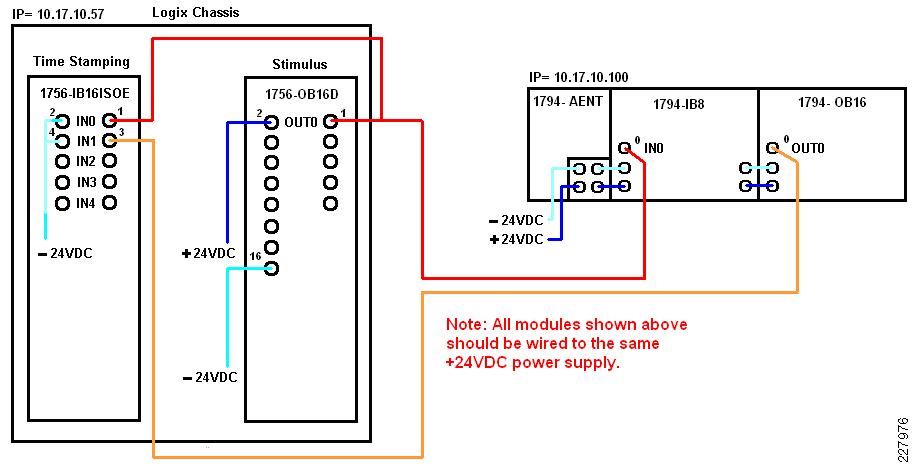

Hardware Wiring

The 1756 and 1794 modules are wired as shown in Figure 7-8.

Figure 7-8 Hardware Wiring

Test Measurements

In this test, the IACS application measures the time it takes to transmit a piece of information from the controller to the I/O module via the EtherNet/IP network versus transmitting the information directly via hardwires between the I/O module and controller. This difference is the SOE Time Stamp Difference Test Data. The time stamp difference is recorded in 1 millisecond (ms) buckets. For example, if the SOE Time Stamp Data value received from the controller is 2.438, the value falls between 2 and 3 ms, so the 2 ms bucket gets a value of (1) added to its field. If the value is 10.913, it falls between 10 and 11 ms, so the 10 ms bucket gets a value of (1) added to its field. Based on this data, minimum, maximum, and average values are calculated. The average time stamp difference represents the average application latency. Each test run collected 300,000 samples and took about 8 to 10 hours.
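
The bucketing described above can be sketched in a few lines of Python. This is an assumption-laden illustration rather than the logic used in the test: the function names `bucket_samples` and `summarize` are hypothetical, and the third sample value (2.9) is invented for the example. The values 2.438 and 10.913 are the ones used in the text.

```python
import math
from collections import Counter


def bucket_samples(samples_ms: list[float]) -> Counter:
    """Count samples per 1 ms bucket; 2.438 falls into the 2 ms bucket."""
    return Counter(math.floor(sample) for sample in samples_ms)


def summarize(samples_ms: list[float]) -> tuple[float, float, float]:
    """Return (minimum, maximum, average) time stamp difference in ms."""
    if not samples_ms:
        raise ValueError("no samples collected")
    average = sum(samples_ms) / len(samples_ms)
    return min(samples_ms), max(samples_ms), average


samples = [2.438, 10.913, 2.9]  # 2.9 is a made-up extra sample
buckets = bucket_samples(samples)
print(buckets[2], buckets[10])  # 2 1
```

This sketch computes the summary statistics from the raw samples. If only the bucket counts were retained, the average could only be approximated to within the 1 ms bucket width.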

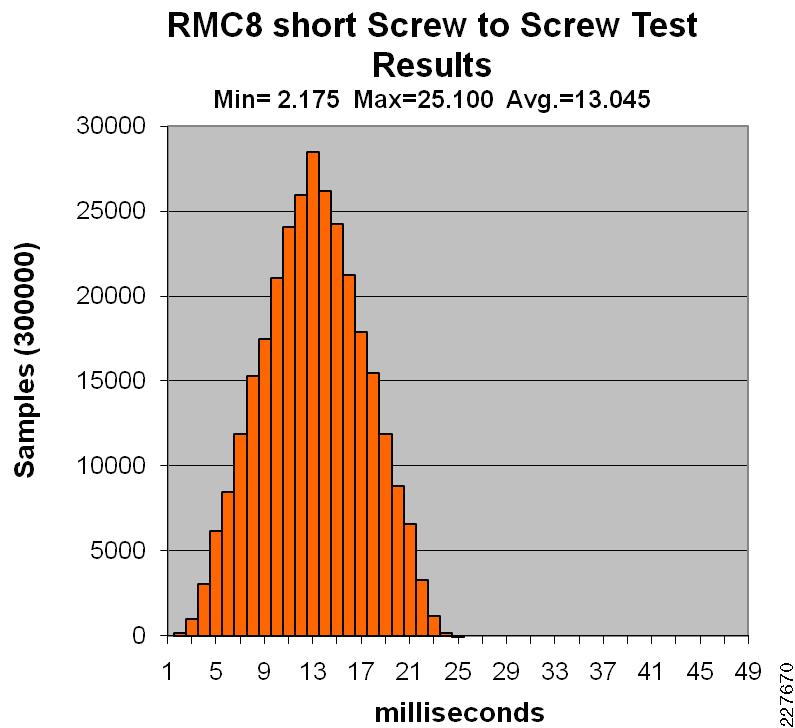

Figure 7-9 shows an example set of test results from a screw-to-screw test run.

Figure 7-9 Test Results from an 8-switch Ring topology with MSTP and copper uplinks, Short-path

Test Execution

This section describes the key steps performed to execute the tests conducted for CPwE. The two key test types are network resiliency and application latency and jitter.

Network Resiliency

This section describes the test case execution for the network resiliency testing. Note that this section describes the test cases for the ring topology. Some minor modifications to these must be done for redundant star topology, notably that the link disrupted (virtually or physically) is between the IES-8 and 3750-stack switches, as all the industrial Ethernet switches were connected to the distribution switch in this test suite.

This section does not describe the setup steps as we followed the design and implementation recommendations for Cell/Area zones described in Chapter 3 "CPwE Solution Design—Cell/Area Zone" and Chapter 5 "Implementing and Configuring the Cell/Area Zone."

Test Cases

Eight test cases were outlined for the network resiliency test. Table 7-11 to Table 7-18 list the steps to execute these test cases. The tables apply to the 8-switch ring, MSTP, copper-uplinks test suite. The steps were varied slightly for the redundant star topology. For example, the link between IES-8 and the distribution switch was disconnected/shut down or reconnected for test cases 1 through 4.

Application-level Latency and Jitter

The application-level latency (screw-to-screw) tests were performed on the test suites discussed above. Two test cases were performed: a short path and a long path. The long-path test case was executed by introducing a failure in the topology and then conducting the test run. An Excel spreadsheet with embedded macros was developed to start the test and collect the application latency statistics. The other IACS devices and applications were in operation during the test. No Ixia traffic generation was introduced or measured during these tests.

Test Results Summary

This section summarizes the key findings from the tests performed, based on the test analysis documented in "Test Result Analysis" and "Complete Test Data." Some of the findings were included in earlier chapters to support key recommendations. The key objectives set forth for this test plan were to test and measure the impact of the following:

• Network scalability in terms of the number of switches and number of end-devices, in particular the impact of a larger network on network convergence

• Media, in particular the use of fiber versus copper for inter-switch uplinks

• Network topology (ring versus redundant star)

• Network resiliency protocol, in particular between Spanning Tree versions, EtherChannel, and Flex Links

• Network behavior in a variety of failure/restore scenarios, so customers and implementers can make decisions about how to react and potentially when to restore failed links or devices, in terms of network convergence, application sensitivity, and overall system latency and jitter

The key observations can be summarized into the following points:

• Scalability (number of switches): The size of the ring slows network convergence in link disruption (physical or software) and stack master down test cases, although, given the variability due to the copper media, this impact is difficult to quantify.

• Scalability (number of switches): The number of switches in a redundant star did not have an impact on the network convergence.

• Scalability (number of switches): The number of switches in the different network topologies tested did not create a large difference in application-level latency (screw-to-screw).

• Scalability (number of endpoints): The number of endpoints tested had an impact on the Spanning Tree topologies, although this impact was less significant than either media or topology and therefore difficult to measure. There was no significant negative impact from the number of endpoints simulated in the Flex Links or EtherChannel test suites.

• Media: Fiber uplink topologies converged significantly faster and with less variability than copper uplink topologies, all other conditions being the same. Signal loss is detected much more quickly with fiber media than with copper.

• Topology: Redundant star topologies converged more quickly than ring topologies. This is because a redundant star has at most two uplinks (three switches) in the path between any two endpoints.

• Resiliency: EtherChannel and Flex Links are faster than Spanning Tree in redundant star topologies. EtherChannel and Flex Links with fiber uplinks converged fast enough to avoid "time-critical" application timeouts (i.e., consistent recovery in less than 100 ms). EtherChannel convergence times approached or exceeded 100 ms in a few cases, so some application timeouts may occur, but none were experienced in the testing. With the exception of ring topologies with copper uplinks, all other topology and resiliency protocol combinations converged fast enough for information/process applications (i.e., the network generally recovers within 1000 ms).

• Resiliency: Flex Links had better network convergence than EtherChannel, especially with multicast traffic, in a range of test cases. This is due to the multicast fast convergence feature in Flex Links.

• Network Behavior: Restoring connections (physically or virtually) and restoring switches to the stack have little impact. Network convergence was often fast enough to avoid time-critical application timeouts. One notable exception was EtherChannel, which had slow network convergence when a switch was restored to the stack.