Table Of Contents

About Cisco Validated Design (CVD) Program

FlexPod Select with Cloudera's Distribution including Apache Hadoop (CDH)

Big Data Challenges and Opportunities

FlexPod Select for Hadoop Benefits

FlexPod Select for Hadoop with Cloudera Architecture

Server Configuration and Cabling

Performing an Initial Setup of Cisco UCS 6296UP Fabric Interconnects

Logging into Cisco UCS Manager

Upgrade Cisco UCS Manager Software to Version 2.1(1e)

Adding a Block of IP Addresses for KVM Console

Editing the Chassis Discovery Policy

Enabling Server and Uplink Ports

Creating Pools for Service Profile Template

Creating Policies for Service Profile Template

Creating Host Firmware Package Policy

Create a Local Disk Configuration Policy

Creating Service Profile Template

Configuring Network Settings for the Template

Configuring Storage Policy for the Template

Configuring vNIC/vHBA Placement for the Template

Configuring Server Boot Order for the Template

Configuring Maintenance Policy for the Template

Configuring Server Assignment for the Template

Configuring Operational Policies for the Template

Cisco UCS 6296UP FI Configuration for NetApp FAS 2220

Configuring VLAN for Appliance Port

Server and Software Configuration

Performing Initial Setup of C-Series Servers

Logging into the Cisco UCS 6200 Fabric Interconnects

Configuring Disk Drives for OS

Installing Red Hat Enterprise Linux Server 6.2 using KVM

Installing and Configuring Parallel Shell

Enable and start the httpd service

Download Java SE 6 Development Kit (JDK)

Services to Configure On Infrastructure Node

DHCP for Cluster Private Interfaces

DNS for Cluster Private Interfaces

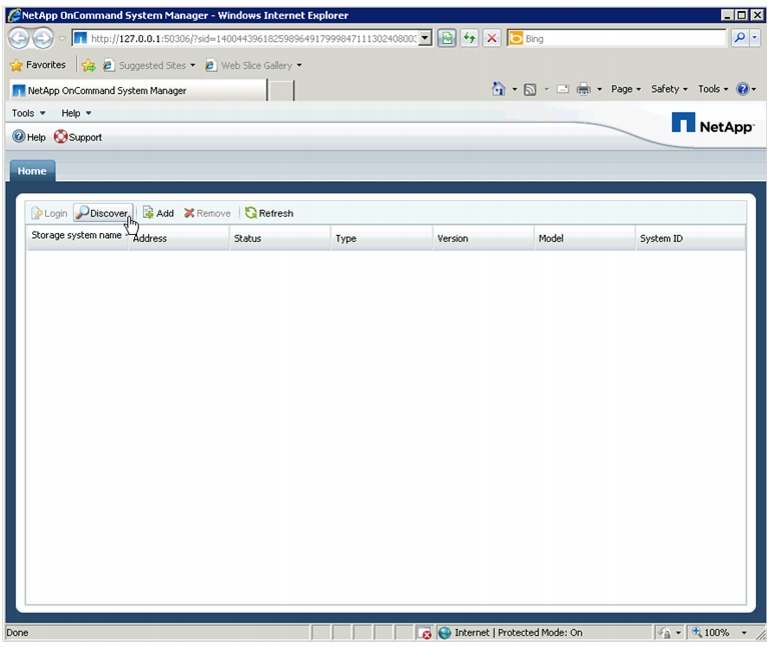

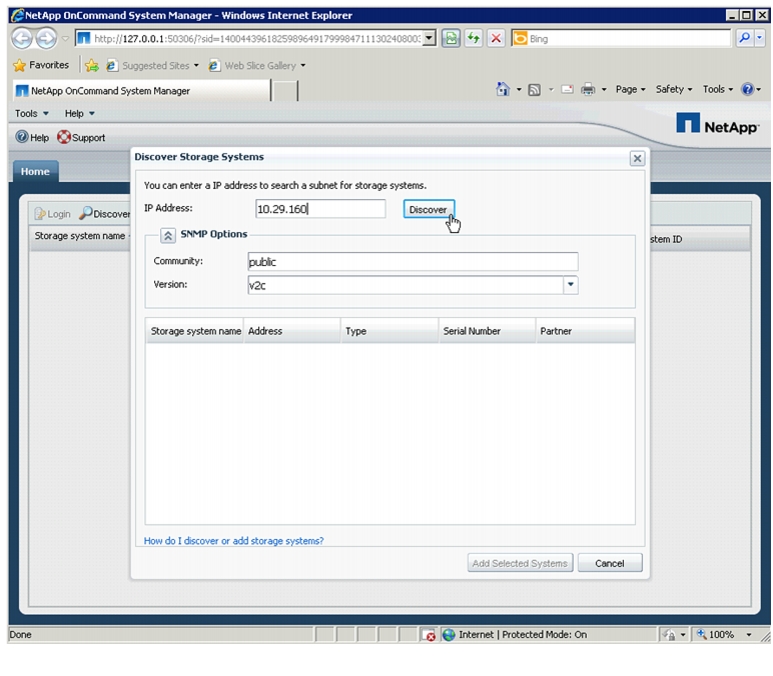

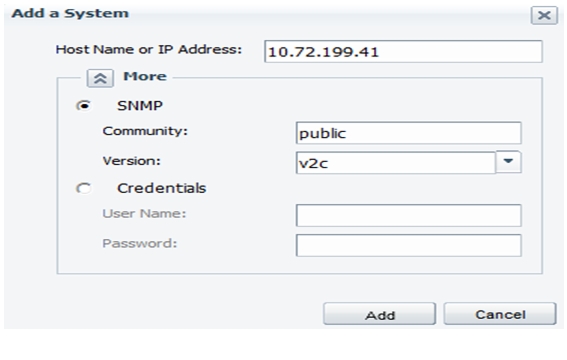

NetApp OnCommand System Manager 2.1

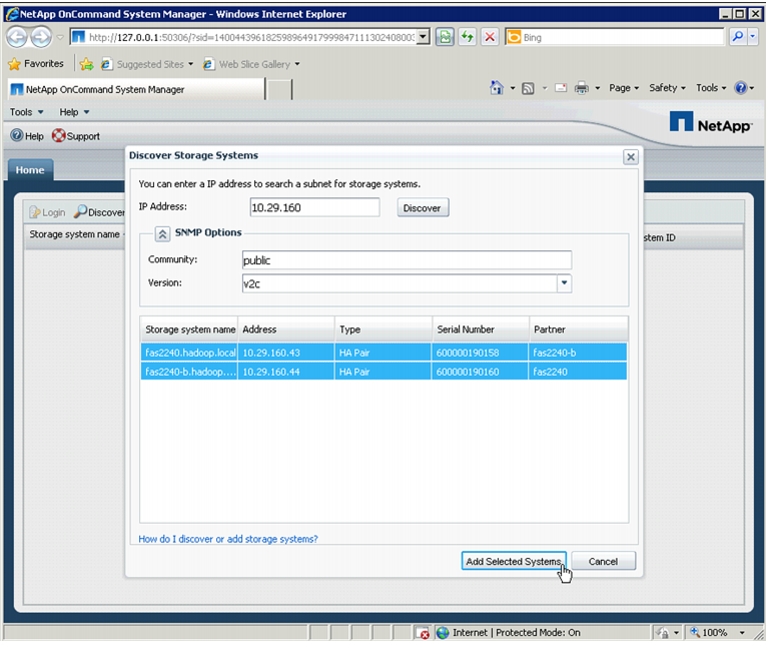

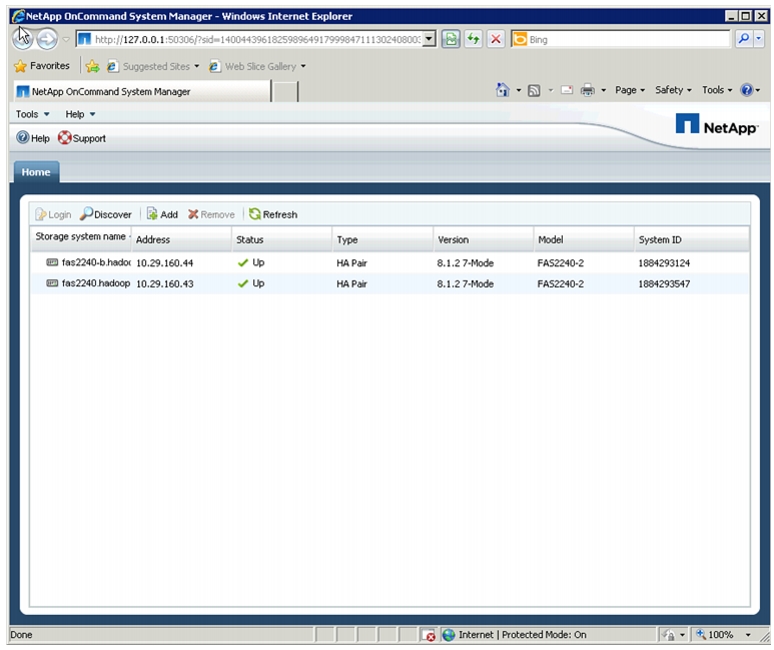

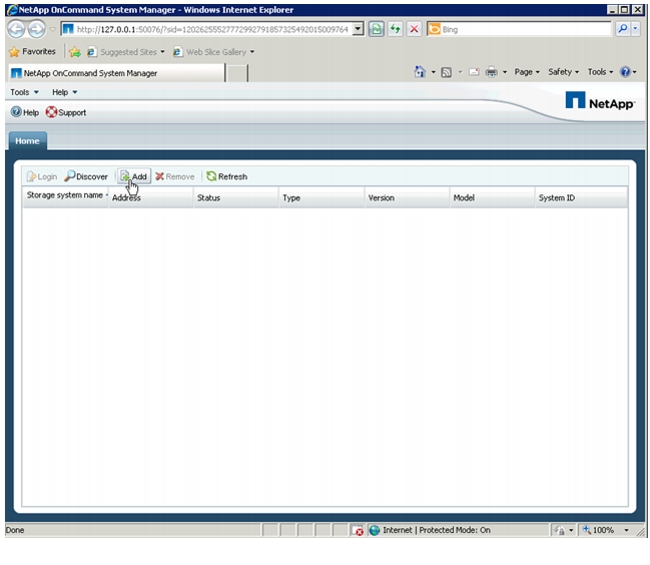

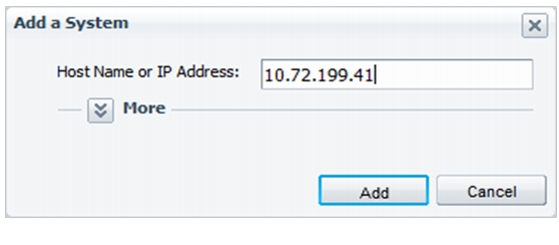

Launch OnCommand System Manager

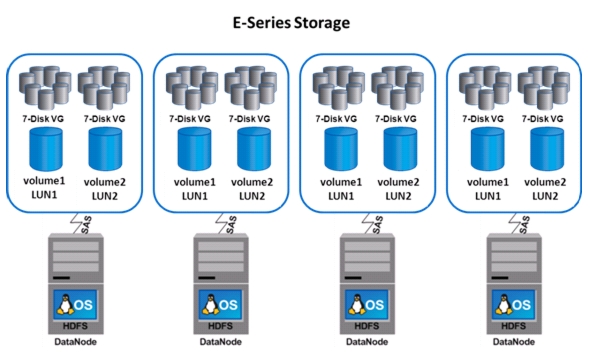

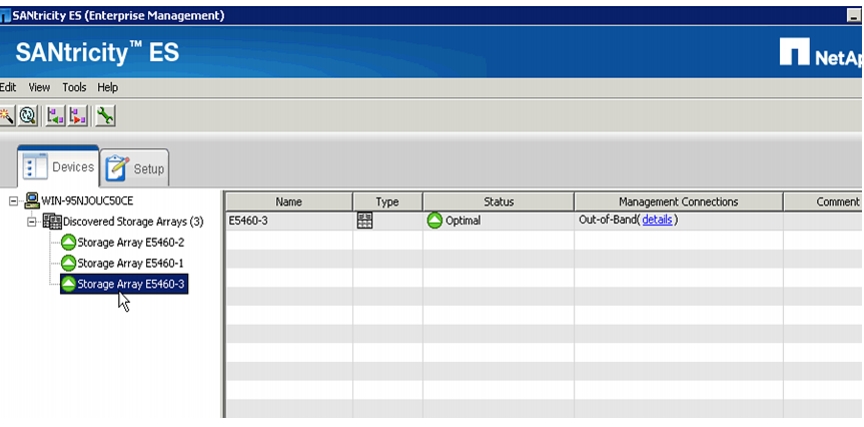

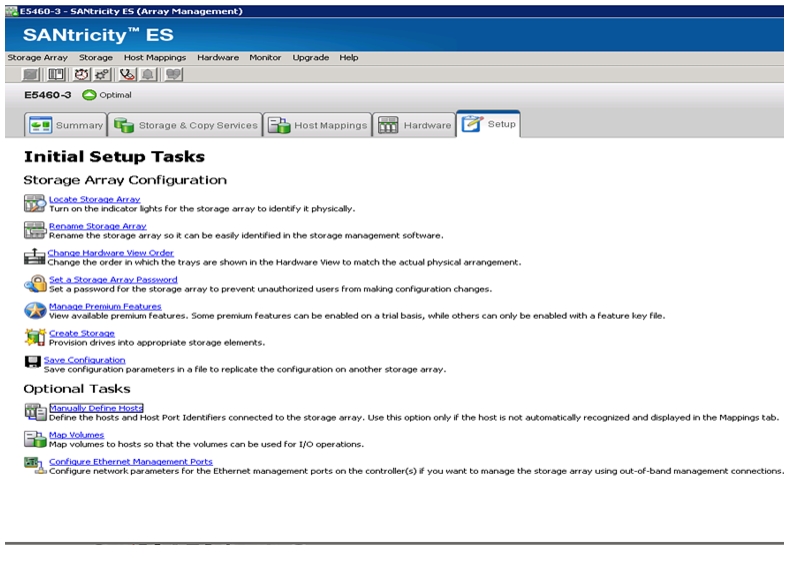

E-Series Configuration & Management

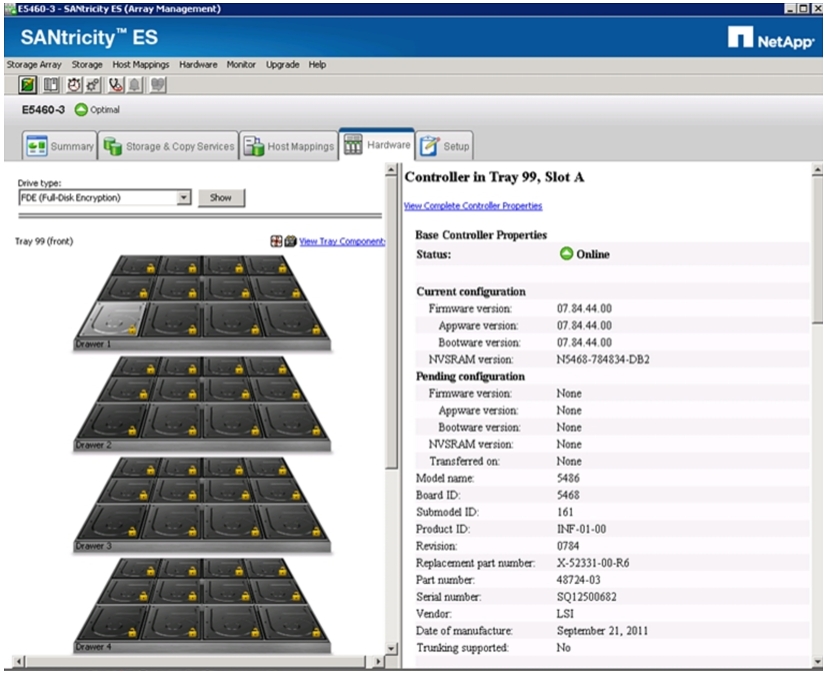

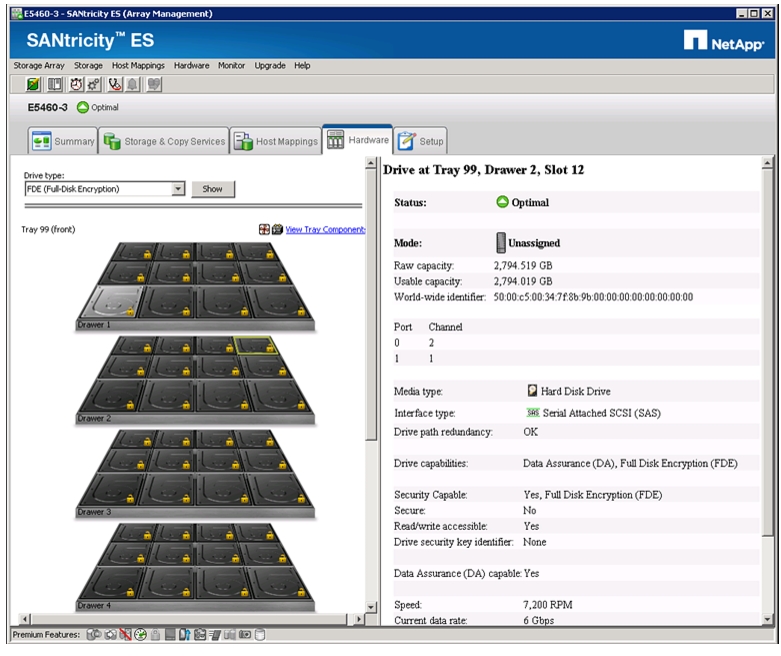

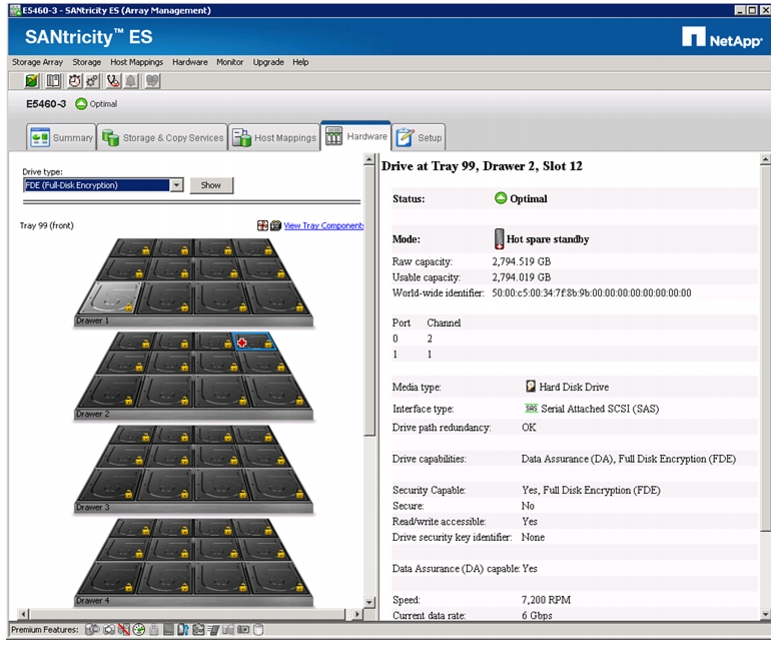

Confirm That All Disks Are in Optimal Status

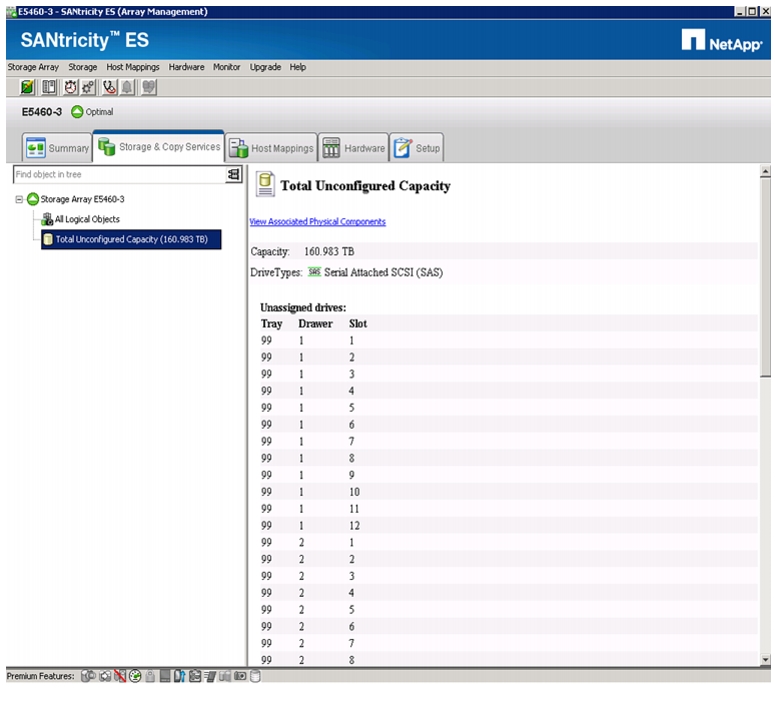

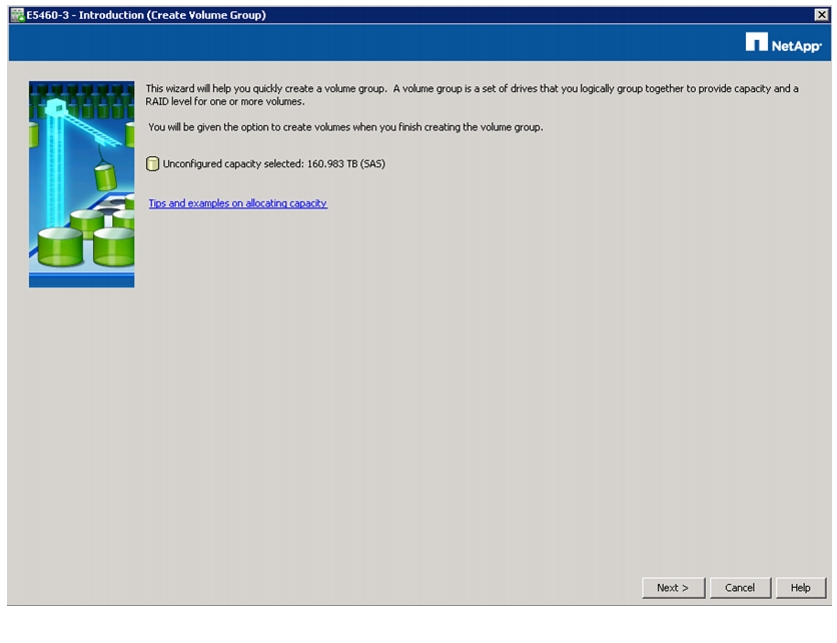

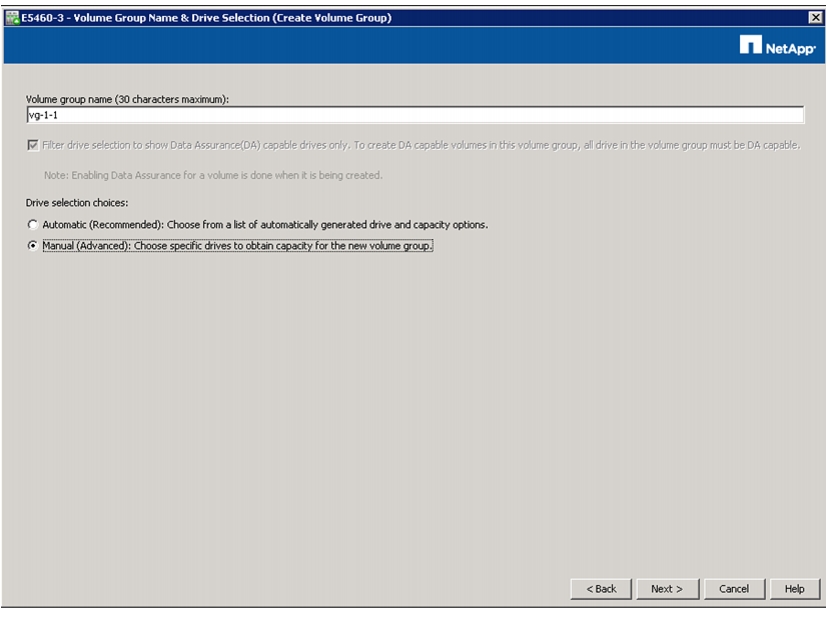

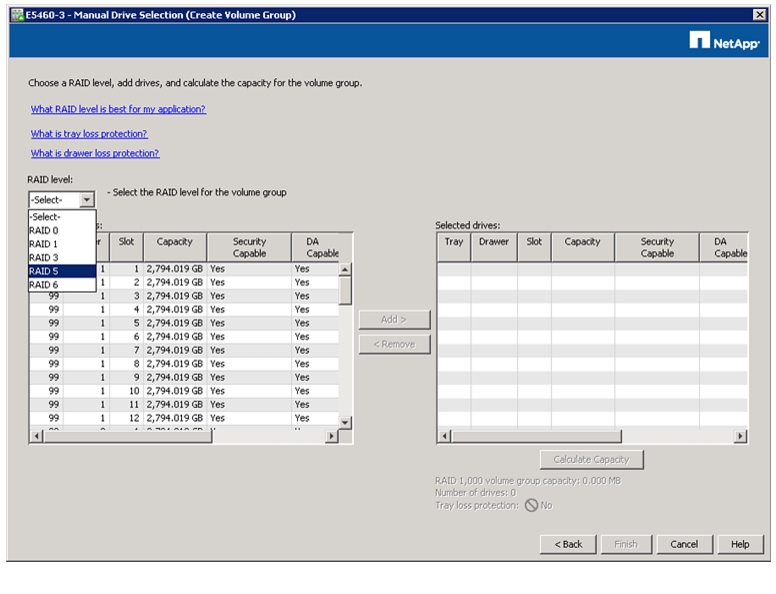

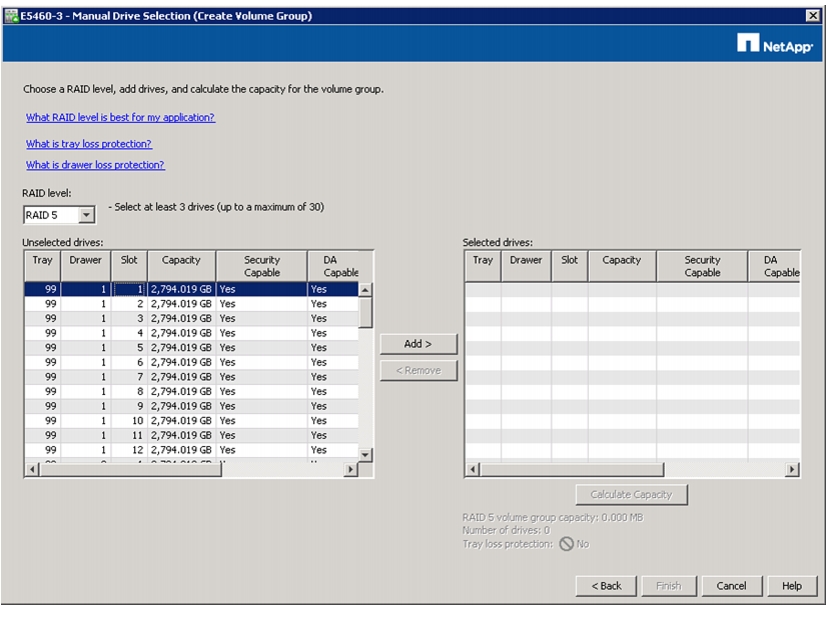

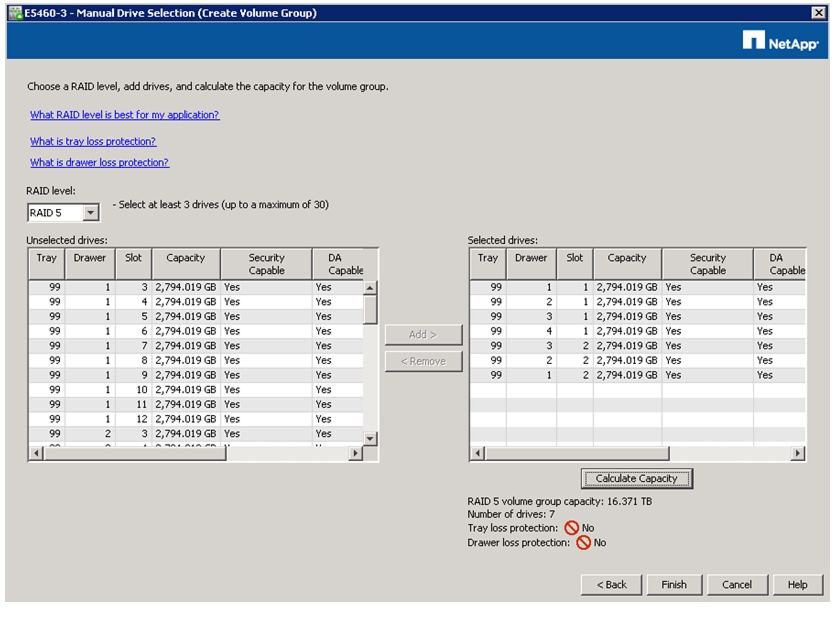

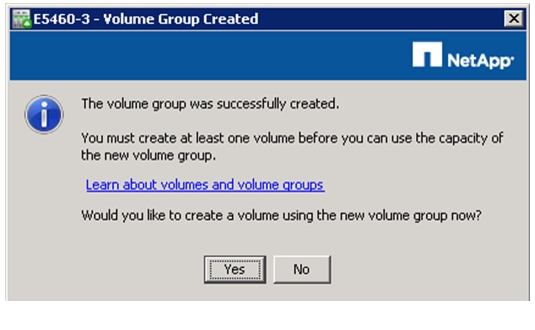

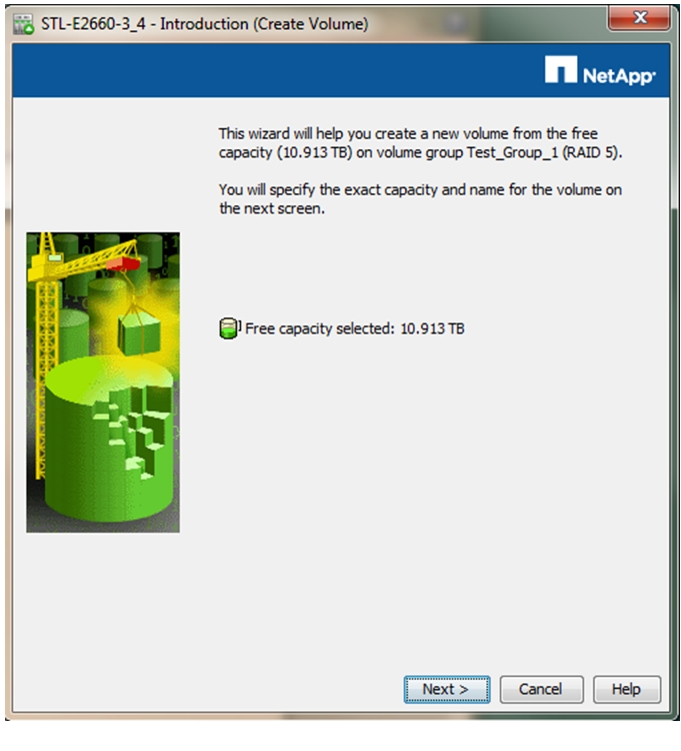

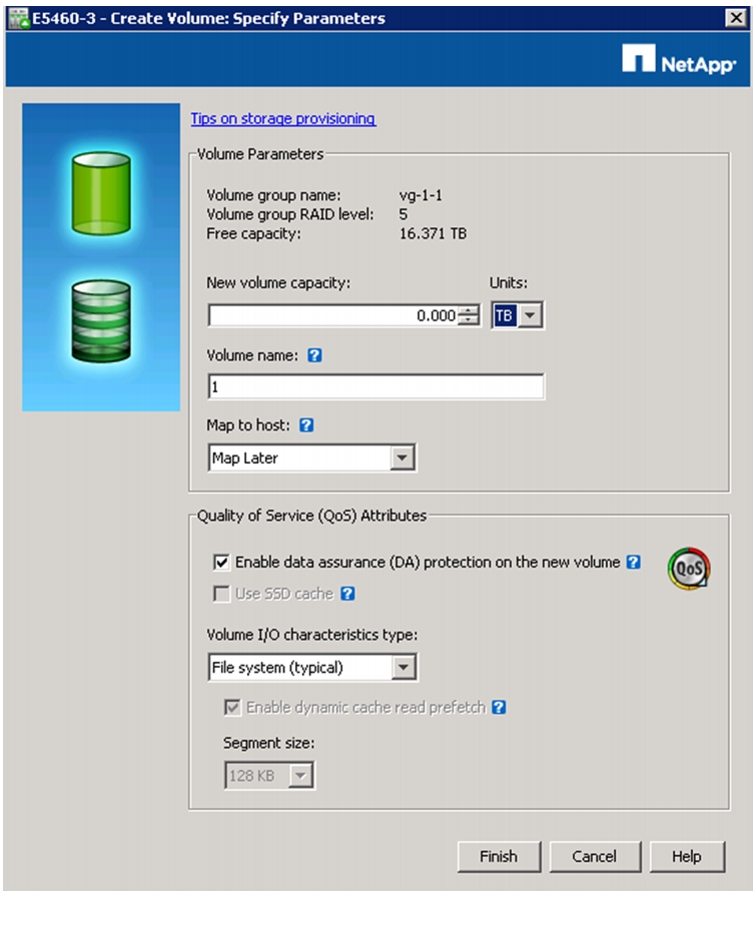

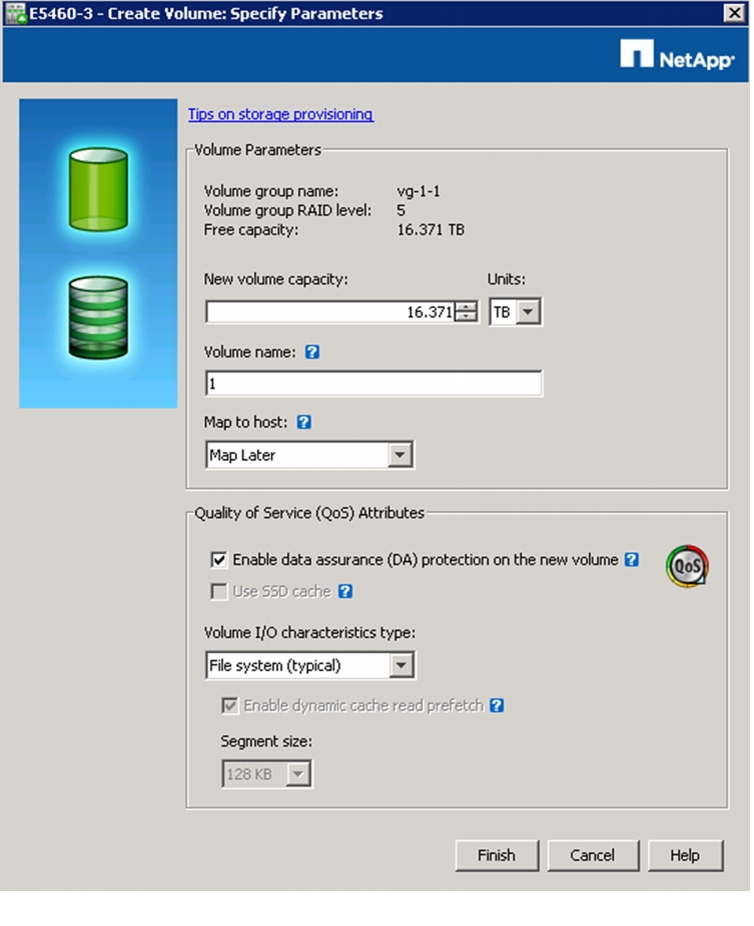

Selecting Drives for Volume Groups and Hot Spares

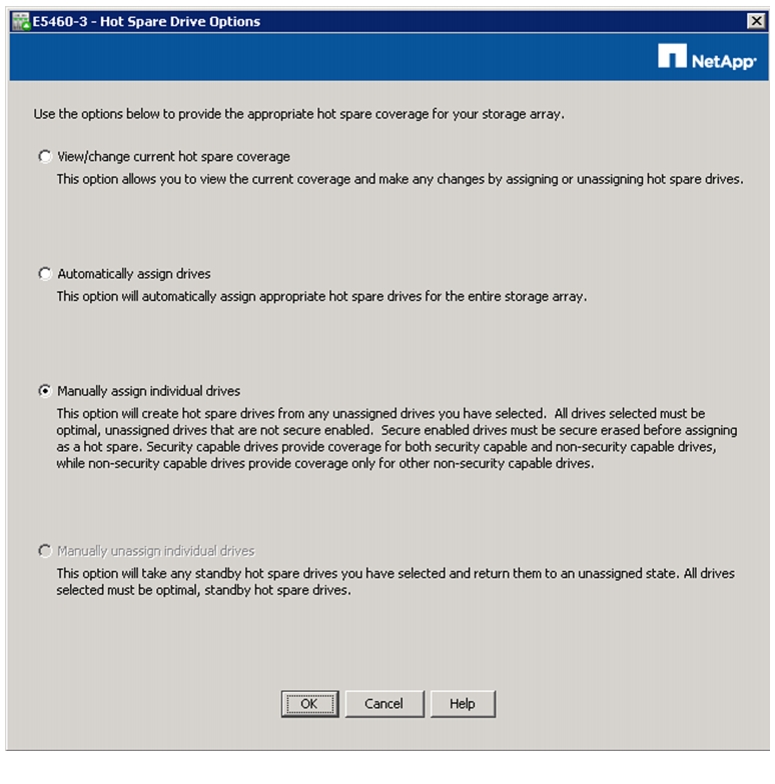

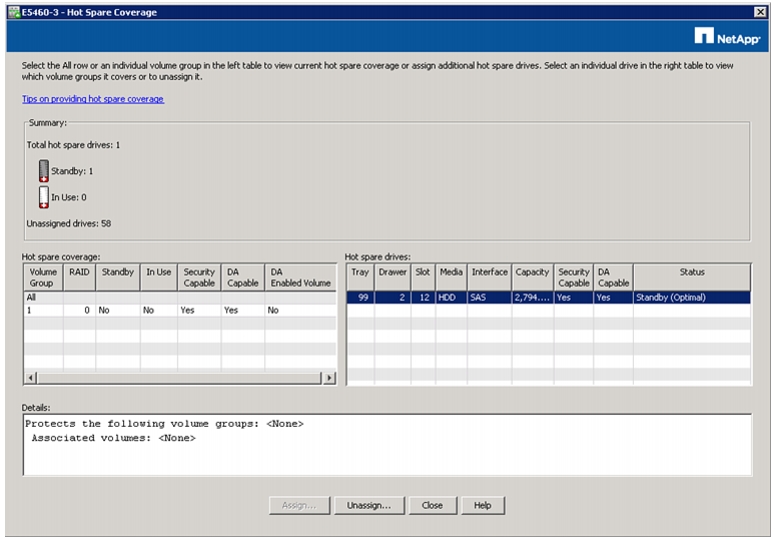

Creating and Assigning Hot Spares

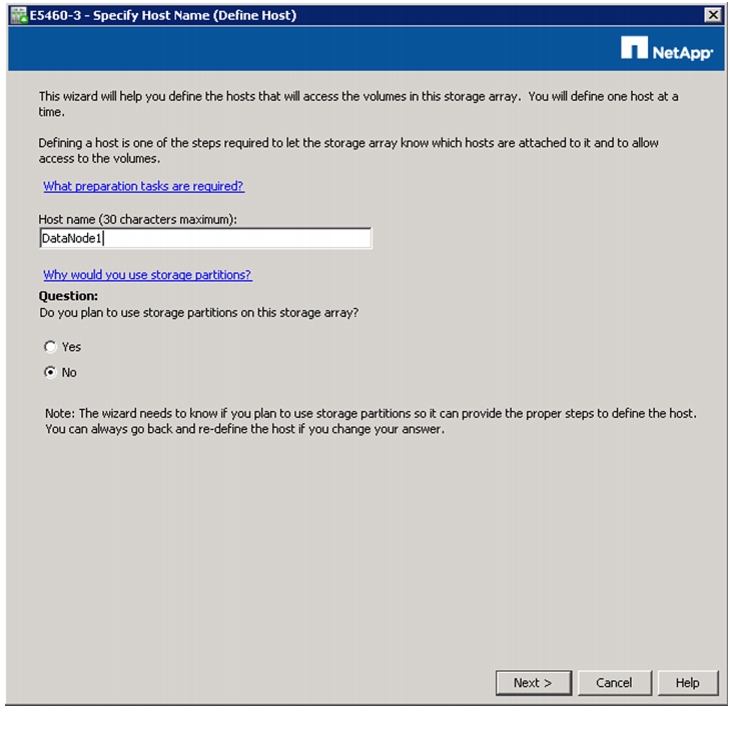

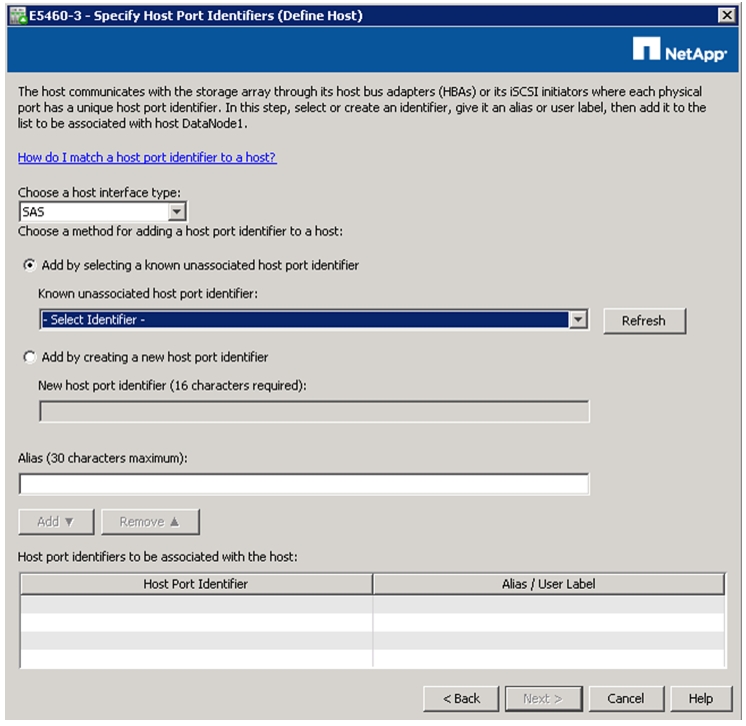

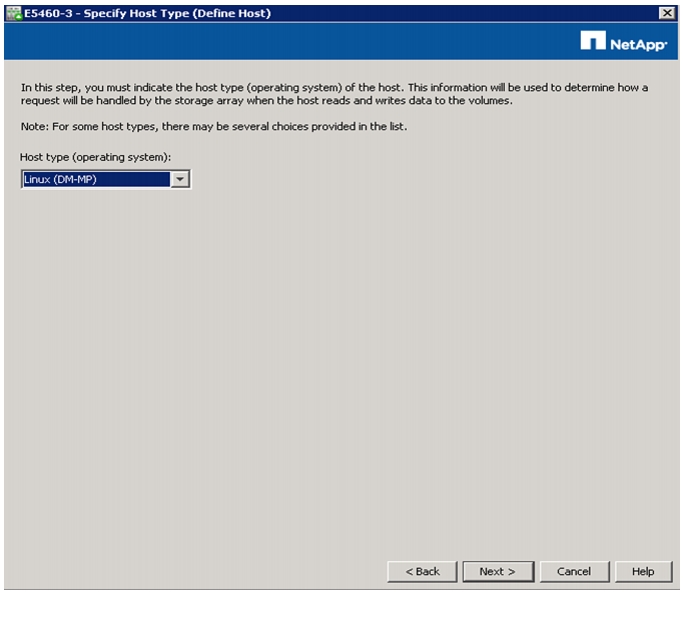

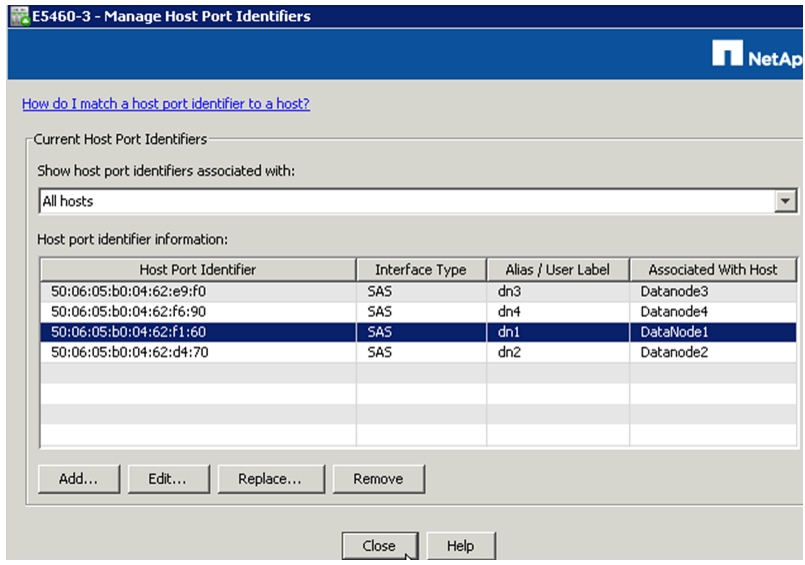

Map Datanodes to E-Series SAS Ports

Identify SAS Port ID Using LSI SAS2Flash Utility

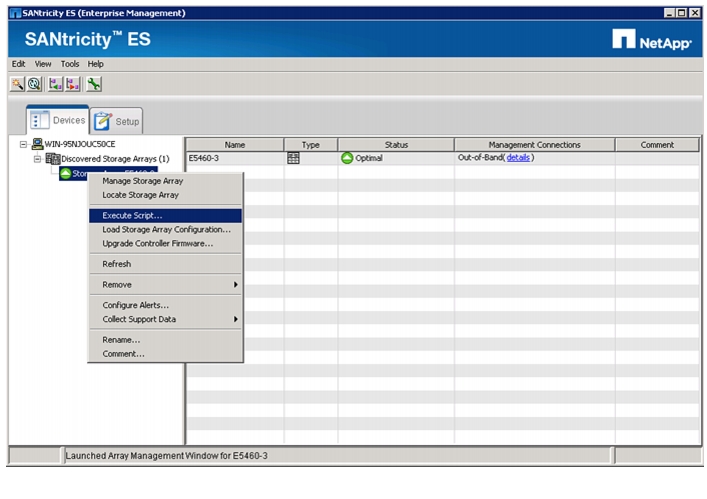

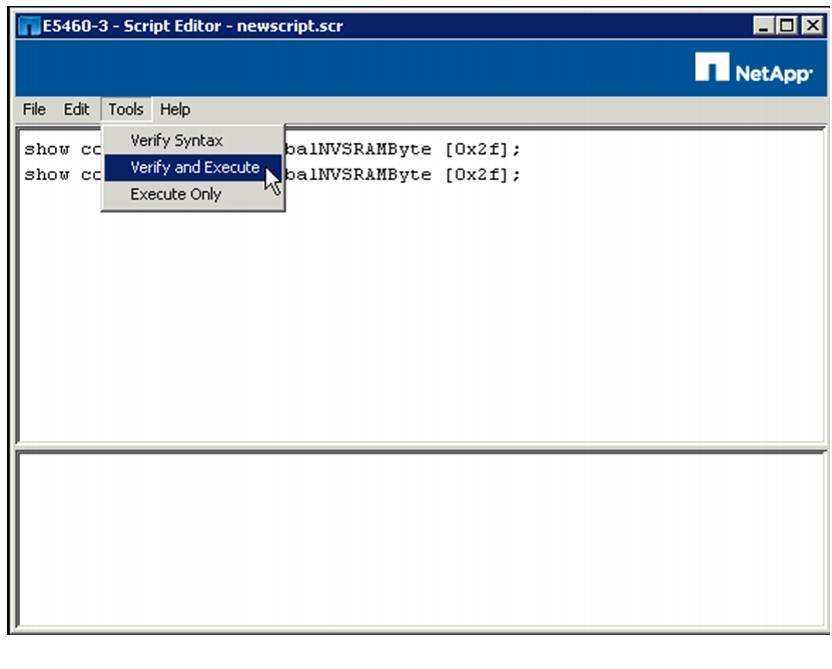

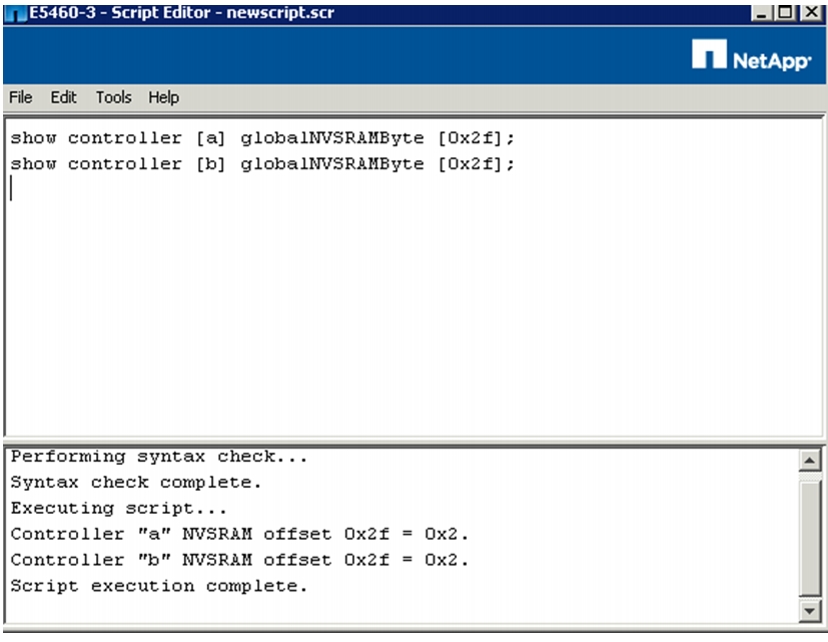

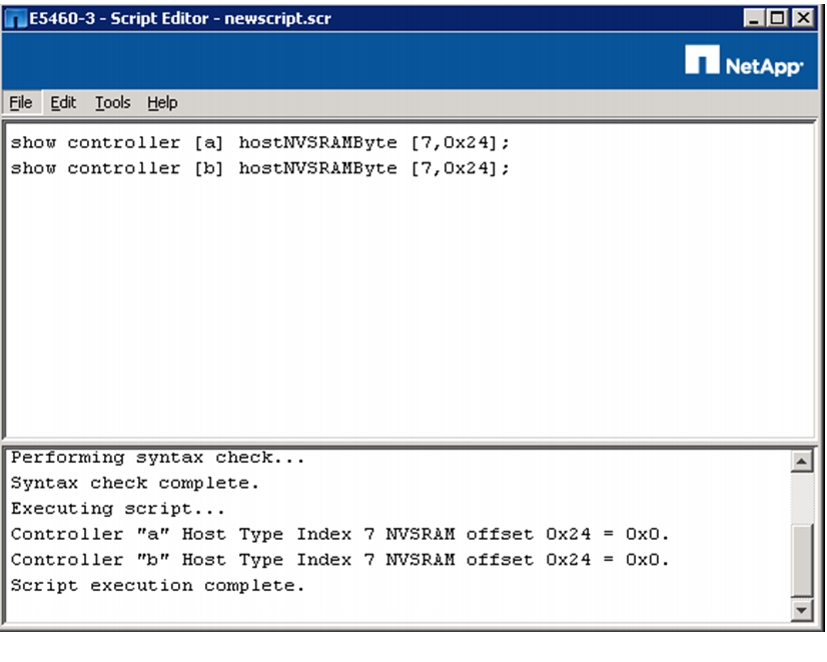

Disable E-Series Auto Volume Transfer

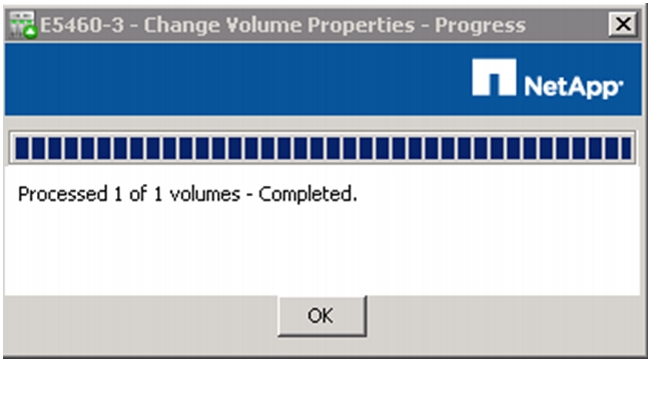

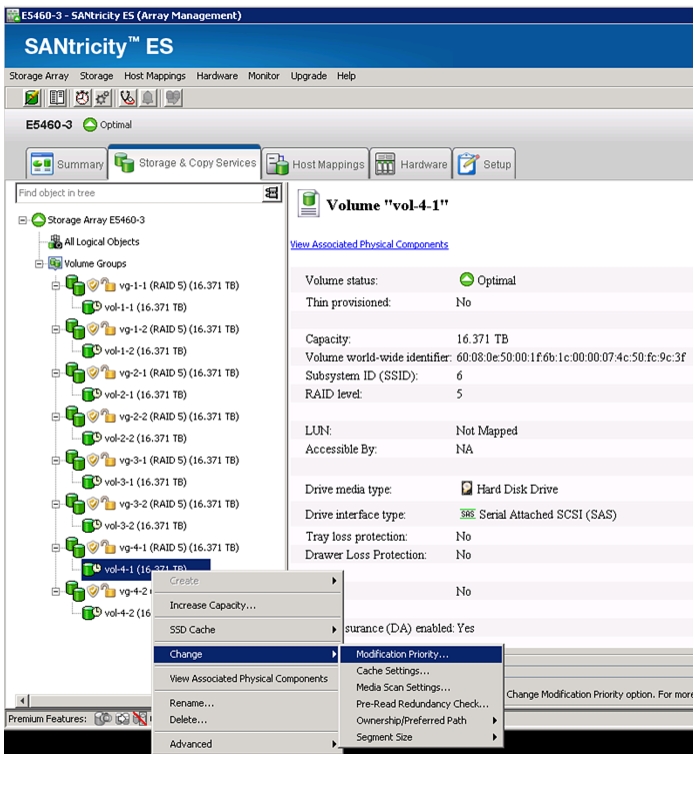

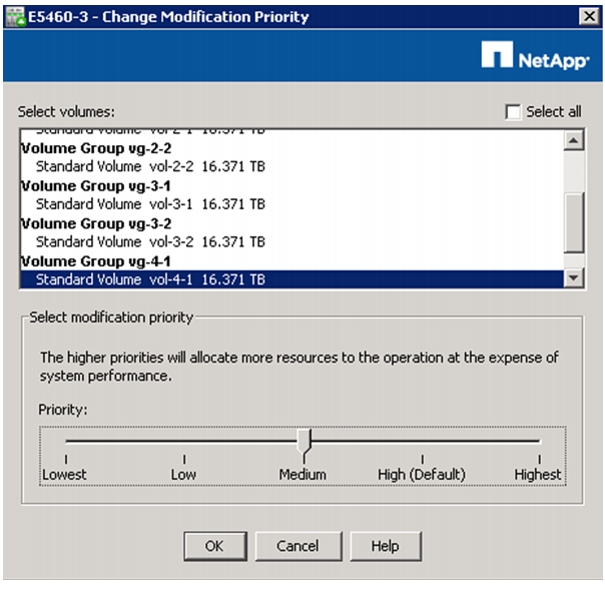

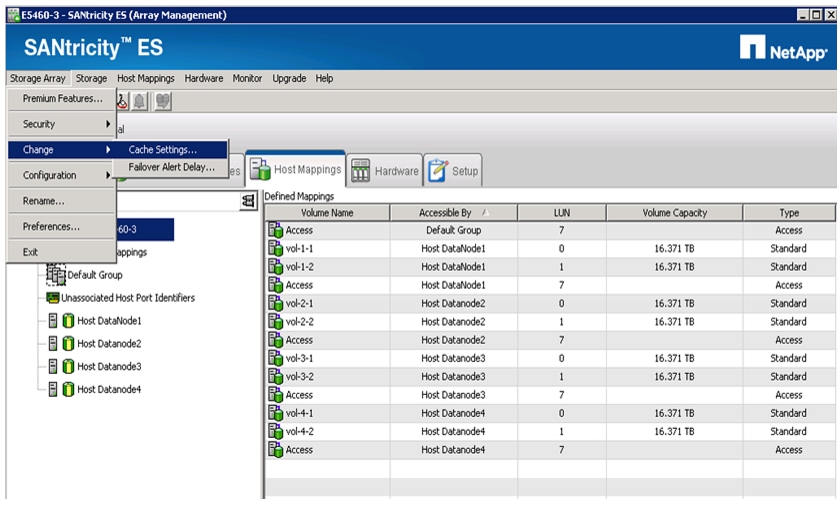

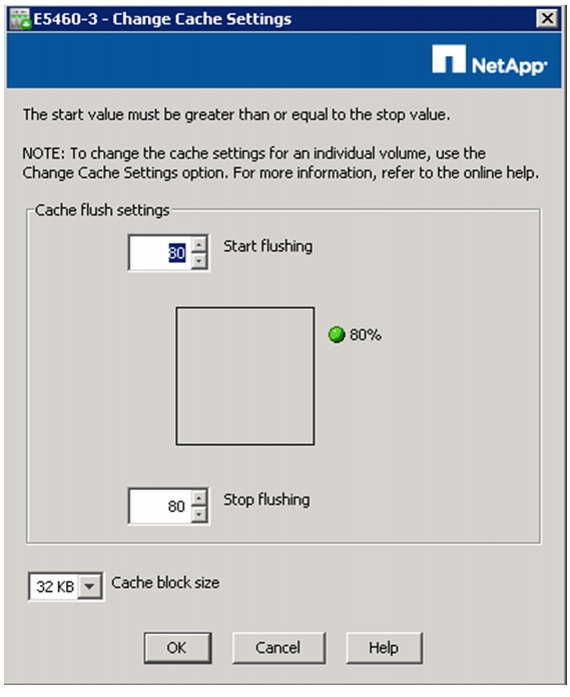

Configure Cache Settings for Array

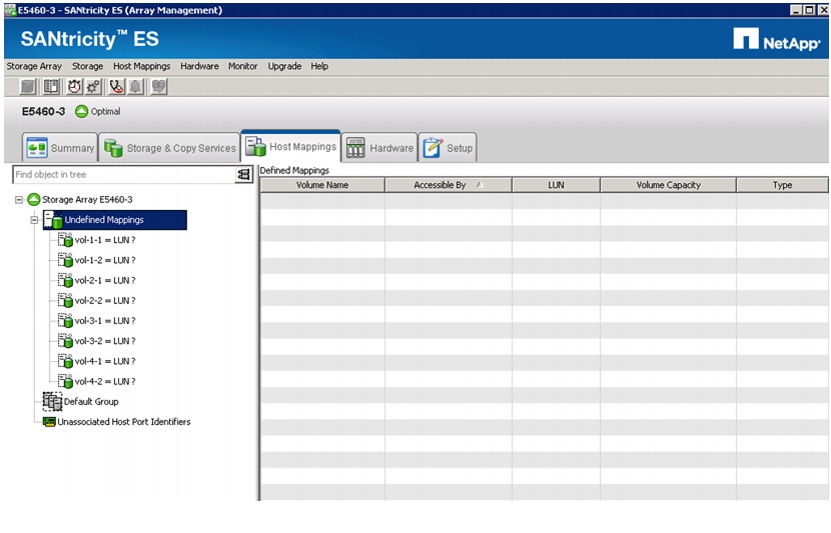

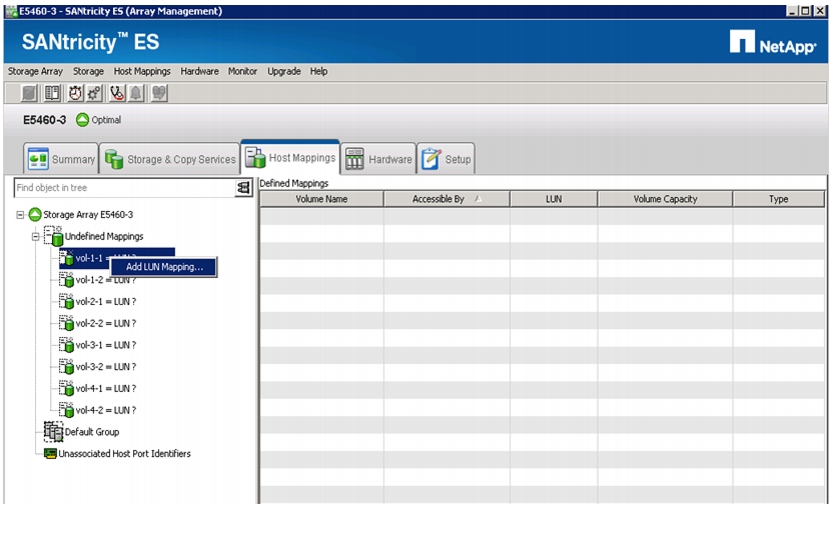

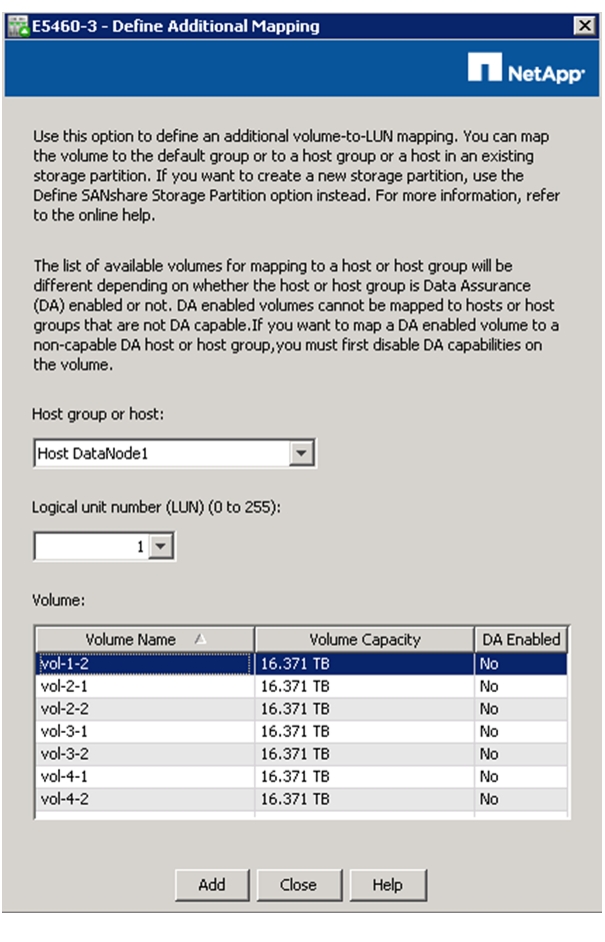

DataNode File Systems on E5460 LUNs

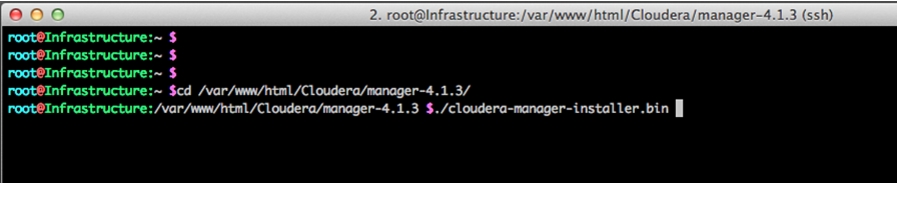

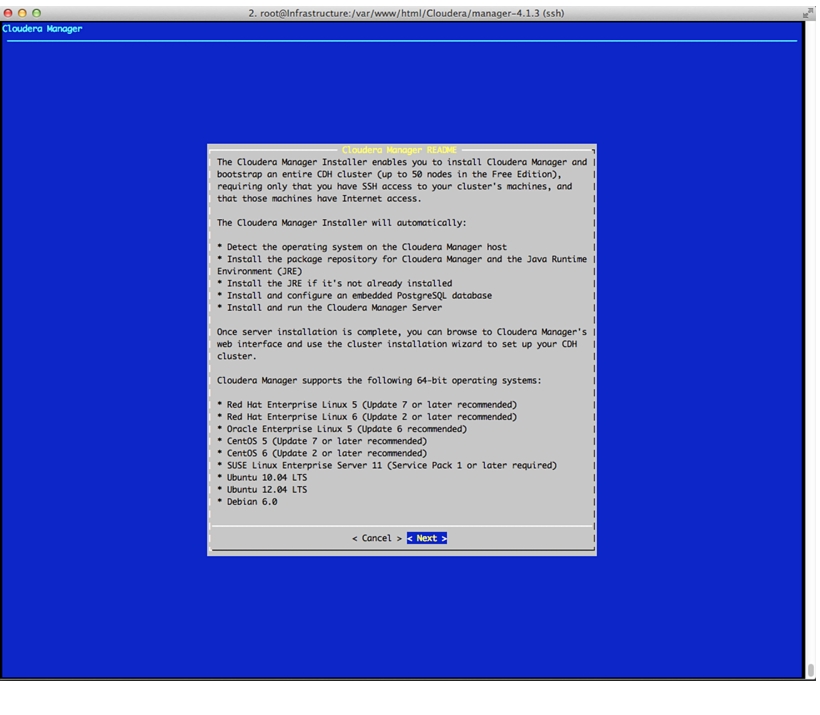

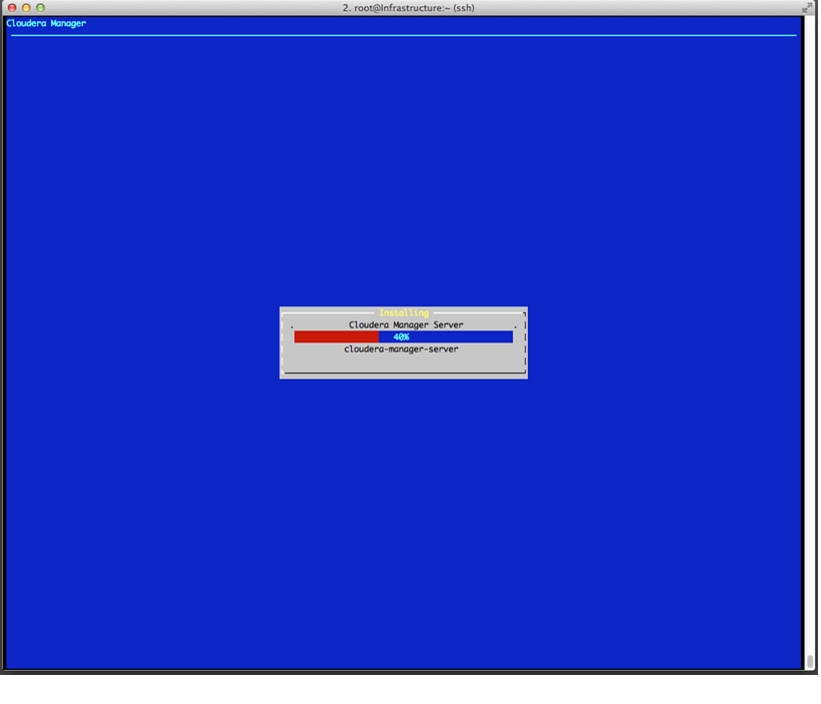

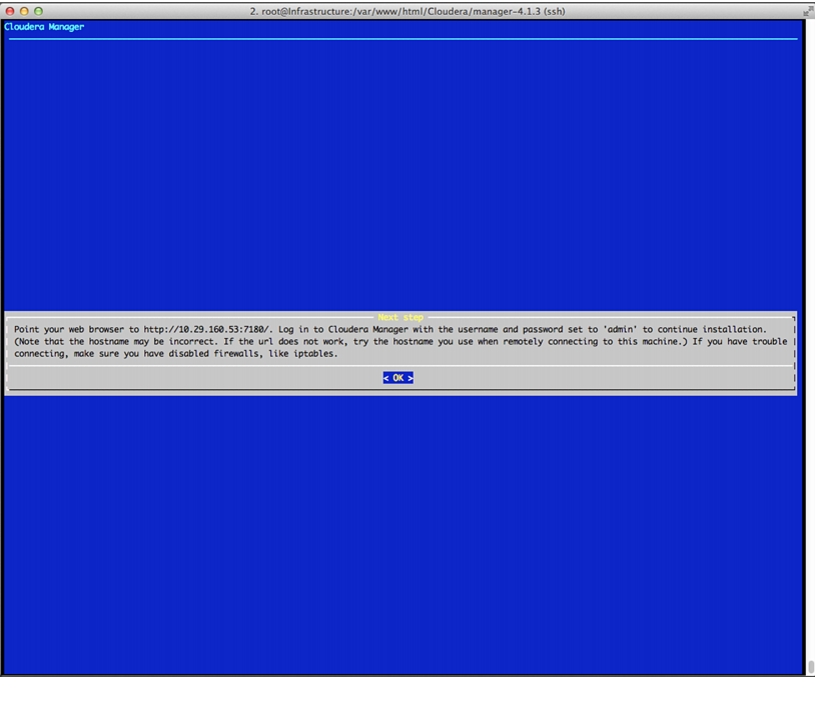

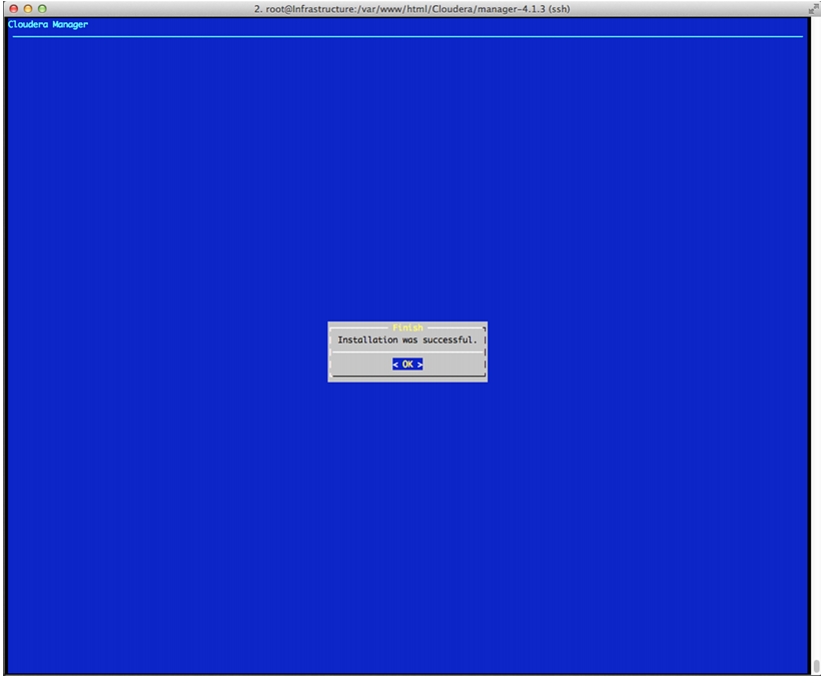

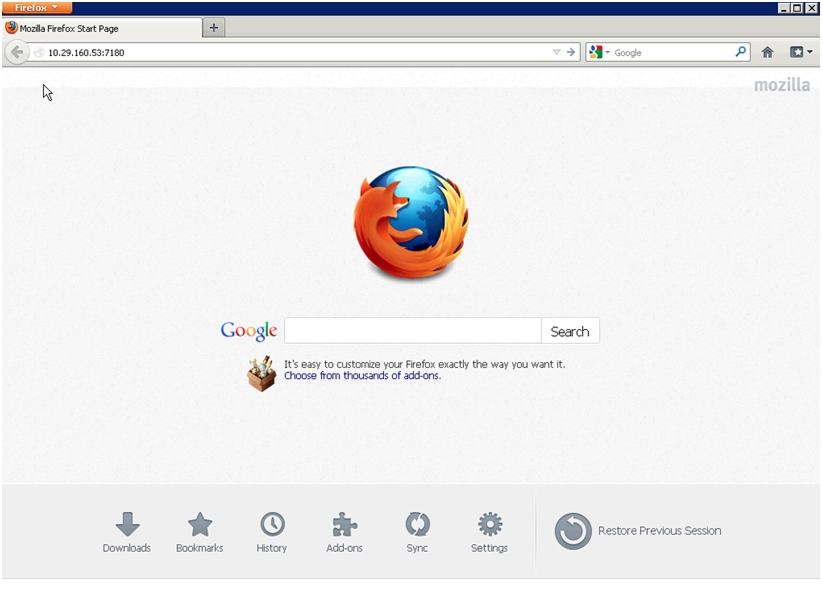

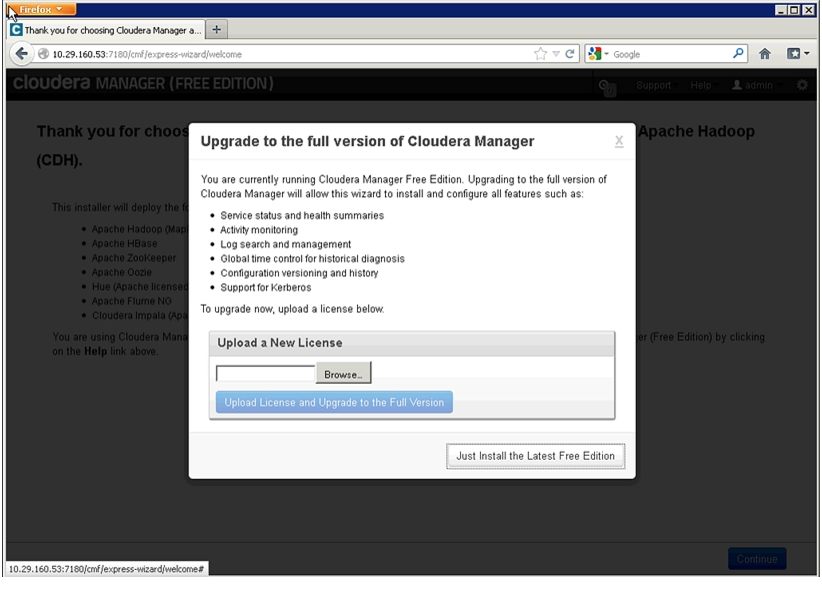

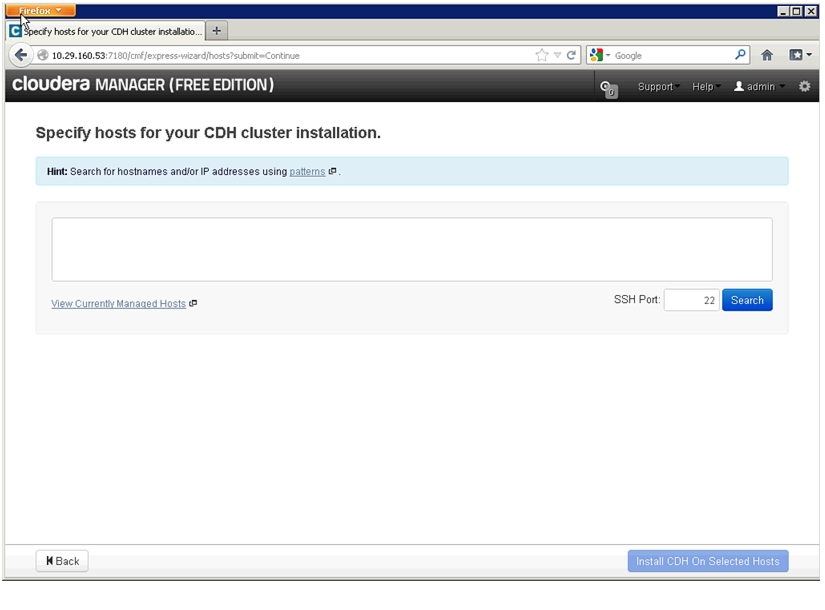

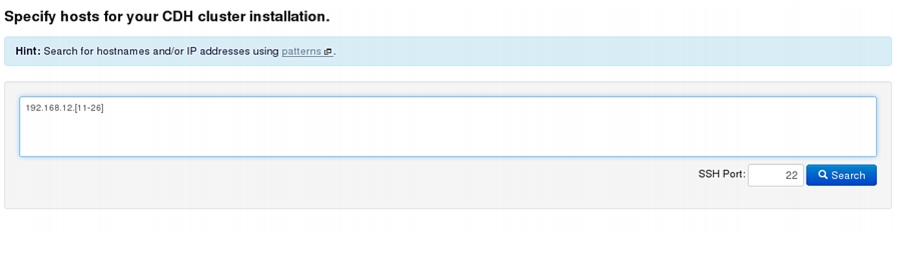

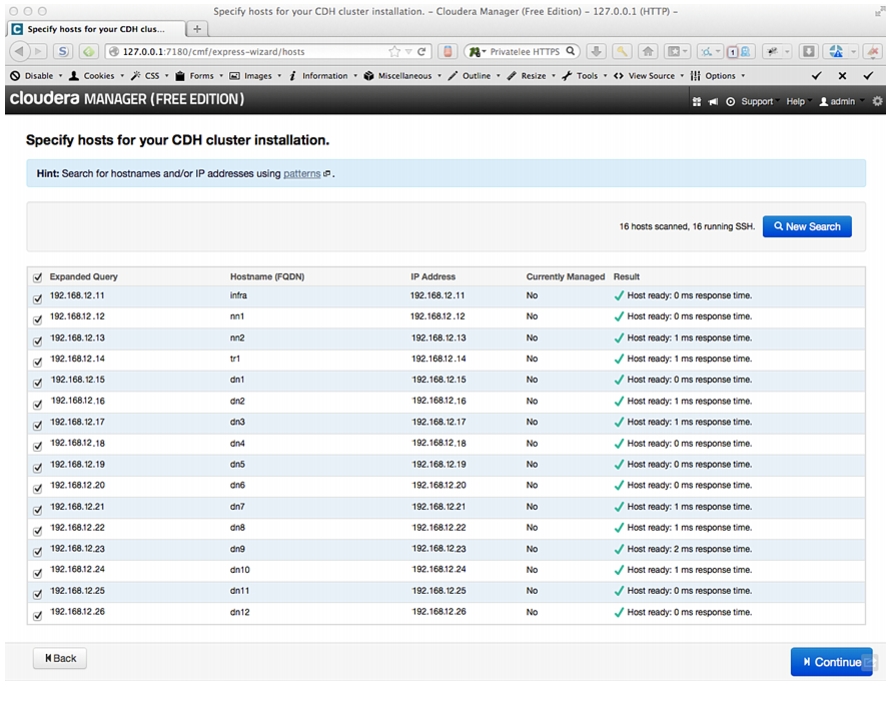

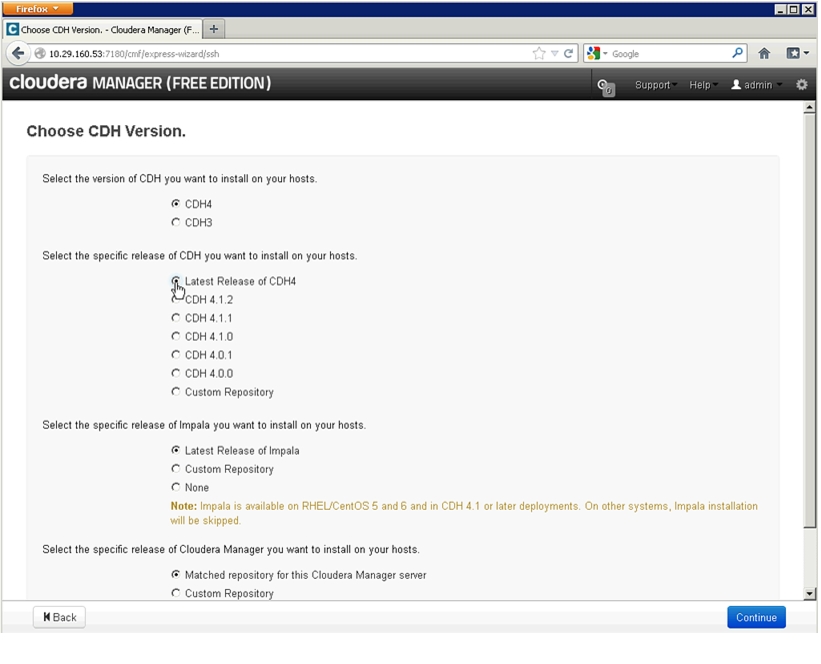

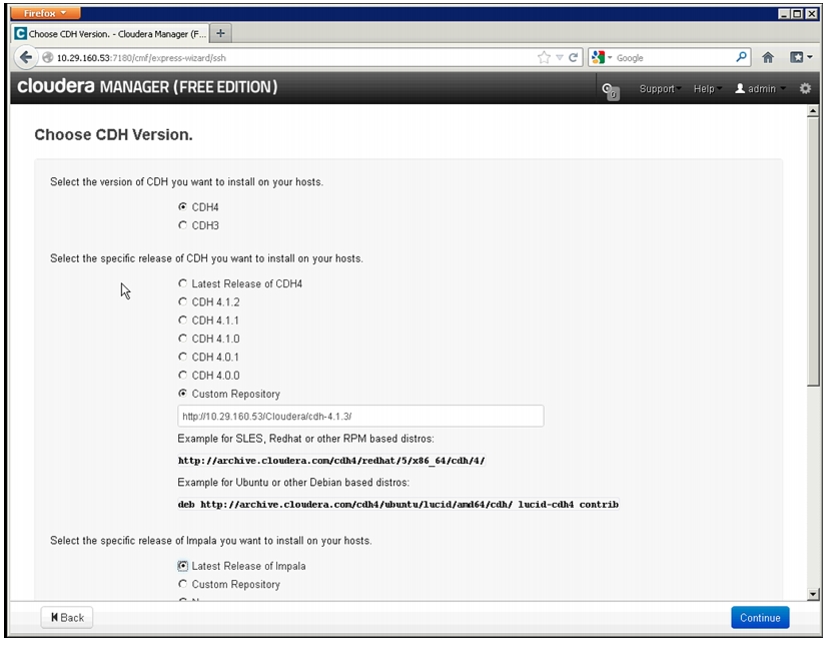

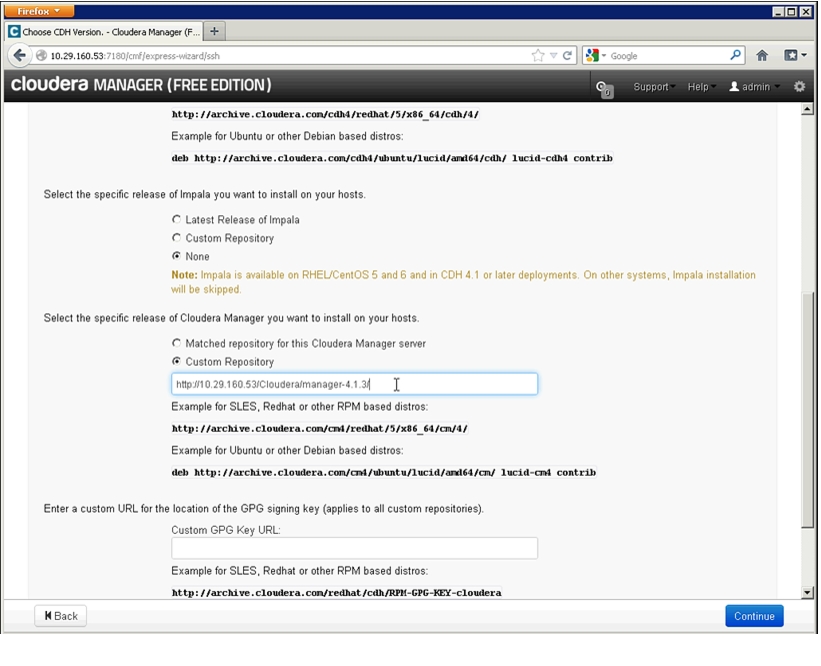

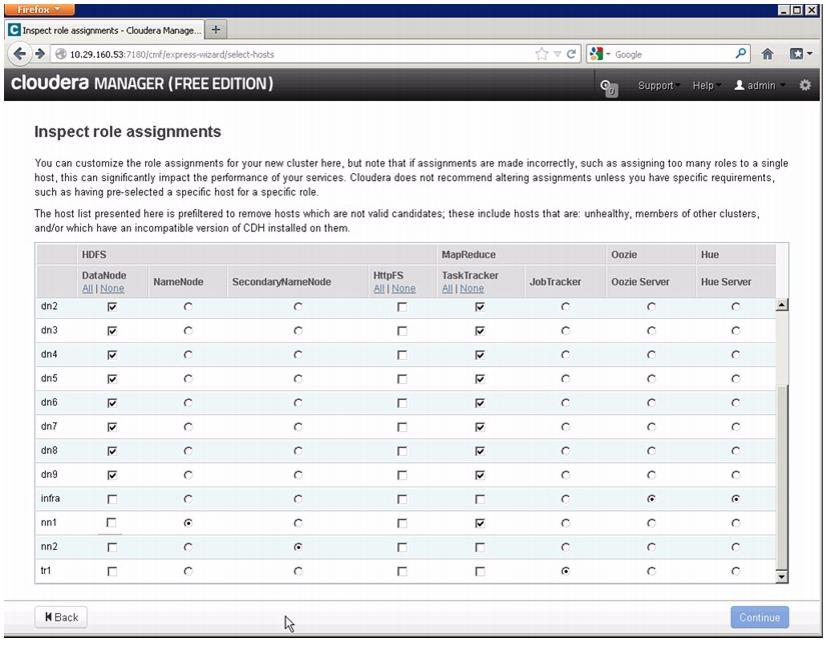

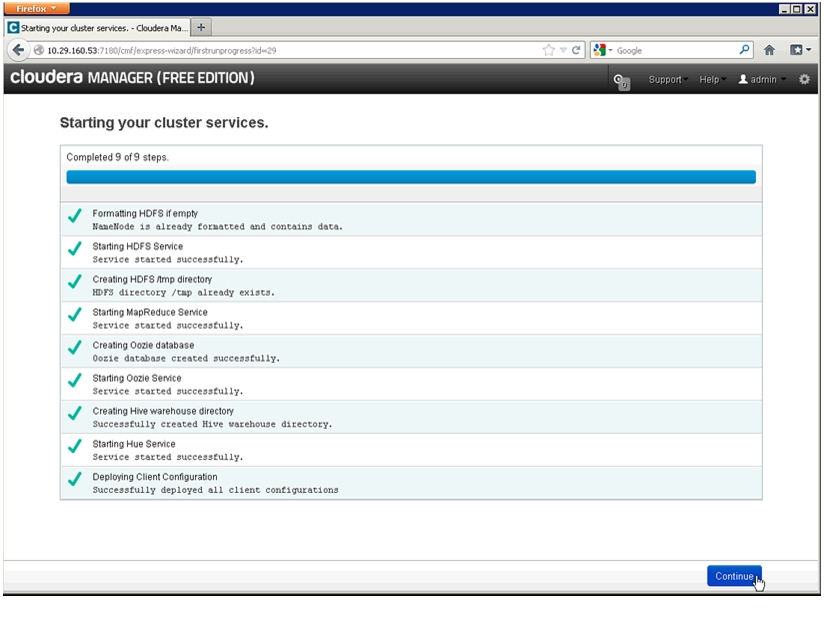

Cloudera Manager and Cloudera Enterprise Core Installation

Install Cloudera Enterprise Core (CDH4)

FlexPod Select with Cloudera's Distribution including Apache Hadoop (CDH)

Last Updated: December 16, 2013

Building Architectures to Solve Business Problems

About the Authors

Raghunath Nambiar, Strategist, Data Center Solutions, Cisco Systems

Raghunath Nambiar is a Distinguished Engineer at Cisco's Data Center Business Group. His current responsibilities include emerging technologies and big data strategy.

Prem Jain, Senior Solutions Architect, Big Data team, NetApp Systems

Prem Jain is a Senior Solutions Architect with the NetApp big data team. Prem's 20+ year career in technology spans solution development for data migration, virtualization, HPC, and big data initiatives. He has architected innovative big data and data migration solutions and authored several reference architectures and technical white papers.

Acknowledgments

The authors acknowledge the contributions of Karthik Kulkarni, Manan Trivedi, and Sindhu Sudhir in developing this document.

About Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information visit:

http://www.cisco.com/go/designzone

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

CCDE, CCENT, Cisco Eos, Cisco Lumin, Cisco Nexus, Cisco StadiumVision, Cisco TelePresence, Cisco WebEx, the Cisco logo, DCE, and Welcome to the Human Network are trademarks; Changing the Way We Work, Live, Play, and Learn and Cisco Store are service marks; and Access Registrar, Aironet, AsyncOS, Bringing the Meeting To You, Catalyst, CCDA, CCDP, CCIE, CCIP, CCNA, CCNP, CCSP, CCVP, Cisco, the Cisco Certified Internetwork Expert logo, Cisco IOS, Cisco Press, Cisco Systems, Cisco Systems Capital, the Cisco Systems logo, Cisco Unity, Collaboration Without Limitation, EtherFast, EtherSwitch, Event Center, Fast Step, Follow Me Browsing, FormShare, GigaDrive, HomeLink, Internet Quotient, IOS, iPhone, iQuick Study, IronPort, the IronPort logo, LightStream, Linksys, MediaTone, MeetingPlace, MeetingPlace Chime Sound, MGX, Networkers, Networking Academy, Network Registrar, PCNow, PIX, PowerPanels, ProConnect, ScriptShare, SenderBase, SMARTnet, Spectrum Expert, StackWise, The Fastest Way to Increase Your Internet Quotient, TransPath, WebEx, and the WebEx logo are registered trademarks of Cisco Systems, Inc. and/or its affiliates in the United States and certain other countries.

All other trademarks mentioned in this document or website are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (0809R)

© 2013 Cisco Systems, Inc. All rights reserved.

FlexPod Select with Cloudera's Distribution including Apache Hadoop (CDH)

Overview

Apache Hadoop, a software framework, is gaining importance in IT portfolios. The FlexPod Select for Hadoop is an extension of the FlexPod initiative. It is built on the Cisco Common Platform Architecture (CPA) for Big Data and targets deployments that need enterprise-class external storage array features. The solution offers a comprehensive analytic stack for big data: compute, storage, connectivity, and an enterprise Hadoop distribution with a full range of services to manage heavy workloads. It is a pre-validated solution for enterprise Hadoop deployments, with breakthroughs in Hadoop stability, operations, and storage efficiency. By integrating all the hardware and software components and using highly reliable products, businesses can meet tight SLAs for data performance while reducing the risk of deploying Hadoop.

Audience

The intended audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to deploy FlexPod Select for Hadoop with Cloudera.

Big Data Challenges and Opportunities

Big data is defined as data that is so high in volume and speed that it cannot be affordably processed and analyzed using traditional relational database tools. Typically, machine-generated data combined with other data sources creates challenges for both businesses and their IT organizations. Data in organizations is growing explosively, and most of that new data is unstructured. As a result, companies and their IT groups face a number of extraordinary issues related to scalability and complexity.

Lines of business are motivated by top-line business benefits to work on previously unsolvable or unaffordable problems involving machine-generated data, often combined with other traditional data sources. They exploit big data to derive competitive advantage, provide better customer experiences, and make decisions faster. Big data can be used in several ways:

- to prevent fraud;
- to improve business logistics by correlating buyer behavior with inventory;
- to correlate patient treatments with their cures;
- to improve homeland security and government intelligence by cross-correlating very large data sets from credit card transactions, RFID scans, video surveillance, and many other sources.

Meeting these big data needs typically requires an Apache Hadoop cluster.

Big data is more about business opportunities than reducing costs. To address these challenges and risks of big data, companies need analytical solutions that meet the following criteria:

•

Provide resilient and reliable storage for Hadoop.

•

Implement high-performance Hadoop clusters.

•

Build on an open, partner-based ecosystem.

•

Allow efficient Hadoop clustering.

•

Scale compute and storage independently and quickly as data grows in volume.

•

Be cost effective.

The FlexPod Select for Hadoop is designed to address these challenges.

FlexPod Select for Hadoop Benefits

The FlexPod Select for Hadoop combines leading-edge technologies from Cisco and NetApp to deliver a solution that exceeds the requirements of emerging big data analytics. Businesses can then manage, process, and unlock the value of the new, large-volume data types that they generate. Designed for enterprises in data-intensive industries with business-critical SLAs, the solution offers pre-sized storage, networking, and compute in a highly reliable, ready-to-deploy Apache Hadoop stack.

The key benefits of this solution are described in Table 1.

FlexPod Select for Hadoop with Cloudera Architecture

This section provides an architectural overview of the FlexPod Select for Hadoop with Cloudera. It covers the solution components and briefly describes their configuration.

Solution Overview

Building upon the success of FlexPod, market leaders Cisco and NetApp deliver the enterprise-class FlexPod Select for Hadoop. It is a pre-validated Hadoop solution with a faster Time to Value (*TtV) that gives enterprises control of, and insights from, big data. The FlexPod Select for Hadoop is based on a highly scalable architecture that can scale from a single rack to multiple racks. It is built using the following components:

*TtV is the time to realize a quantifiable business goal.

Connectivity and Management

•

Cisco UCS 6200 Series Fabric Interconnects provide high-speed, low-latency connectivity for servers. They also provide centralized management for all connected devices with Cisco UCS Manager. Deployed in redundant pairs, they offer full redundancy, active-active performance, and exceptional scalability for the large number of nodes typical of big data clusters. Cisco UCS Manager provides:

- rapid and consistent server integration using service profiles;
- ongoing system maintenance, such as firmware updates across the entire cluster as a single operation;
- advanced monitoring;
- the option to raise alarms and send notifications about the health of the entire cluster.

•

Cisco Nexus 2200 Series Fabric Extenders act as remote line cards for the fabric interconnects, providing highly scalable and cost-effective connectivity for a large number of nodes.

•

Cisco UCS Manager resides within the Cisco UCS 6200 Series Fabric Interconnects. It makes the system self-aware and self-integrating, managing all of the system components as a single logical entity. Cisco UCS Manager can be accessed through an intuitive GUI, a command-line interface (CLI), or an XML API. Cisco UCS Manager uses service profiles to define the personality, configuration, and connectivity of all resources within Cisco UCS. This radically simplifies provisioning, so that it takes minutes instead of days, and allows IT departments to shift their focus from constant maintenance to strategic business initiatives. It also provides the most streamlined, simplified approach commercially available today for updating the firmware of all server components.

Compute

•

Cisco UCS C220M3 Rack-Mount Servers, 2-socket servers based on Intel® Xeon® E5-2600 series processors, optimized for performance and density. Each server is expandable to 512 GB of main memory. It has eight small form factor (SFF), internal, front-accessible, hot-swappable disk drives and two PCIe Gen 3.0 slots.

•

Cisco UCS Virtual Interface Card 1225, unique to Cisco UCS, is a dual-port PCIe Gen 2.0 x8 10-Gbps adapter designed for unified connectivity for Cisco UCS C-Series Rack-Mount Servers.

Storage

–

NetApp E5460 storage array provides increased performance and bandwidth for Hadoop clusters along with higher storage efficiency and scalability.

–

NetApp FAS2220 and the Data ONTAP storage operating system provide high reliability for Hadoop. They offer fewer single points of failure, faster recovery time, and NameNode metadata protection with hardware RAID.

Software

–

Cloudera® Enterprise Core is the Cloudera Distribution for Apache Hadoop (CDH). CDH is a leading Apache Hadoop-based platform that is 100% open source.

–

Cloudera Manager is Cloudera's advanced Hadoop management platform.

–

Red Hat® Enterprise Linux® Server, the leading enterprise Linux distribution.

Configuration Overview

The solution is offered in single-rack and multiple-rack configurations, as described below.

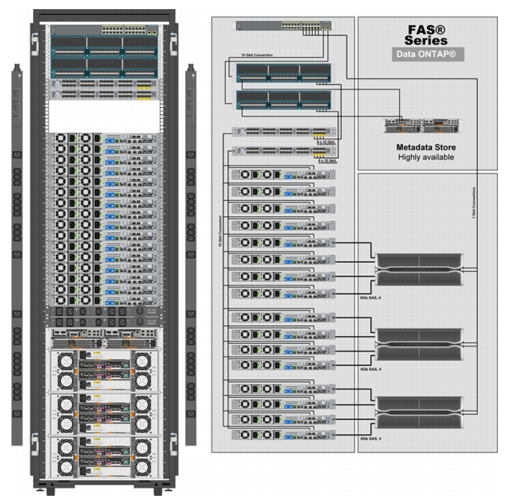

The master rack (single rack) is a standalone solution. The multiple-rack solution consists of a master rack and one or more expansion racks. A single UCS management domain supports up to 9 expansion racks. Deployments requiring more than 10 racks can be implemented by interconnecting multiple domains through Cisco Nexus 6000 or 7000 Series switches and managing them with Cisco UCS Central. Figure 1 shows the FlexPod Select for Hadoop master rack model.

Master Rack

The master rack consists of the following:

•

Two Cisco UCS 6296UP Fabric Interconnects

•

Two Cisco Nexus 2232PP Fabric Extenders

•

Sixteen Cisco UCS C220M3 Rack-Mount Servers

•

One Cisco Catalyst 2960S

•

One NetApp FAS2220

•

Three NetApp E5460

•

Two vertical PDUs

•

Two horizontal PDUs

•

Cisco 42U Rack

Figure 1 Cisco Master Rack

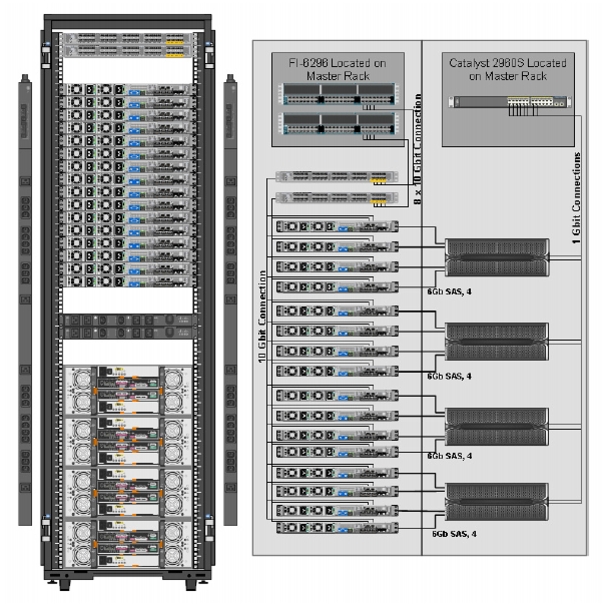

Expansion Rack

Figure 2 shows the FlexPod Select for Hadoop expansion rack model. The expansion rack consists of the following:

•

Two Cisco Nexus 2232PP Fabric Extenders

•

Sixteen Cisco UCS C220M3 Rack-Mount Servers

•

Four NetApp E5460

•

Two vertical PDUs

•

Two horizontal PDUs

•

Cisco 42U Rack

Figure 2 Cisco Expansion Rack

Rack and PDU Configuration

Table 2 shows the rack configurations of the master rack and expansion rack, based on a Cisco 42U rack.

The configuration consists of two vertical PDUs and two horizontal PDUs. The Cisco UCS 6296UP Fabric Interconnects, NetApp E5460s, and NetApp FAS2220 are connected to each of the horizontal PDUs. The Cisco Nexus 2232PP Fabric Extenders and Cisco UCS C220M3 Servers are connected to each of the vertical PDUs for redundancy, thereby ensuring availability during a power source failure.

Note

Please contact your Cisco representative for country-specific information.

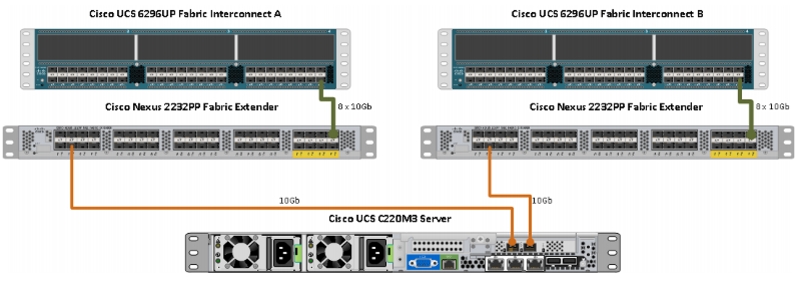

Fabric Configuration

The master rack configuration consists of two Cisco UCS 6296UP Fabric Interconnects and two Cisco Nexus 2232PP Fabric Extenders, forming two fabrics: Fabric A and Fabric B. The Cisco UCS C220M3 Servers 1 to 16 are connected to Fabric A and Fabric B using 10Gb Ethernet through the Cisco Nexus 2232PP Fabric Extenders. Each Fabric Extender has eight uplinks to its fabric interconnect.

The configuration details of the master rack and expansion racks are shown in Figure 1 and Figure 2 respectively.
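The per-fabric port arithmetic implied by this fabric design can be checked with a short Python sketch. The rack, server, and uplink counts come from this document: 8 FEX uplinks per FEX, 16 servers per rack, one Ethernet uplink per fabric interconnect, and up to 10 racks per UCS domain. The following values are assumptions to confirm against the Cisco data sheets:

- the hardware limits: 96 unified ports on a Cisco UCS 6296UP, and 32 host ports plus 8 fabric ports on a Cisco Nexus 2232PP;
- that each server connects one 10Gb port to each fabric.

The `appliance_ports` parameter is a hypothetical placeholder for storage-facing ports.

```python
# Sketch: per-fabric port budget for FlexPod Select for Hadoop.
# From this document: one Nexus 2232PP FEX per fabric per rack, 8 uplinks
# per FEX, 16 C220M3 servers per rack, 1 Ethernet uplink per FI, and up to
# 10 racks (master + 9 expansion) in one UCS domain.
# ASSUMED hardware limits; confirm against the Cisco data sheets.
FI_6296UP_PORTS = 96
FEX_2232PP_HOST_PORTS = 32
FEX_2232PP_FABRIC_PORTS = 8

SERVERS_PER_RACK = 16
FEX_UPLINKS_PER_FEX = 8
ETHERNET_UPLINKS_PER_FI = 1
MAX_RACKS = 10


def fi_ports_used(racks: int, appliance_ports: int = 0) -> int:
    """Ports consumed on ONE fabric interconnect for a given rack count.

    appliance_ports is a hypothetical placeholder for storage-facing ports
    (for example, the NetApp FAS2220); this document does not fix its value.
    """
    if not 1 <= racks <= MAX_RACKS:
        raise ValueError("a single UCS domain supports 1 to 10 racks")
    # Each rack contributes one FEX to this fabric, cabled with 8 links.
    server_ports = racks * FEX_UPLINKS_PER_FEX
    return server_ports + ETHERNET_UPLINKS_PER_FI + appliance_ports


def oversubscription() -> float:
    """Server-facing 10Gb links per FEX divided by 10Gb FEX uplinks."""
    # Assumes each server has exactly one 10Gb link to this fabric's FEX.
    return SERVERS_PER_RACK / FEX_UPLINKS_PER_FEX


# The per-rack cabling must fit on one Nexus 2232PP.
assert SERVERS_PER_RACK <= FEX_2232PP_HOST_PORTS
assert FEX_UPLINKS_PER_FEX <= FEX_2232PP_FABRIC_PORTS

for racks in (1, MAX_RACKS):
    used = fi_ports_used(racks)
    print(f"{racks} rack(s): {used} of {FI_6296UP_PORTS} ports per FI")
print(f"FEX oversubscription: {oversubscription():.0f}:1")
```

Under these assumptions, a full 10-rack domain uses 81 of the 96 ports on each fabric interconnect. Each FEX runs at 2:1 oversubscription: 16 server links share 8 uplinks.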

Storage Configuration

The NetApp E5460 belongs to the NetApp E5400 family of modular data storage systems, which support high-bandwidth datasets requiring high sequential throughput. Each NetApp E5460 is configured with dual SAS controllers and sixty 3TB 7.2K RPM SAS disk drives.

For more information, see:

http://www.netapp.com/us/products/storage-systems/e5400/index.aspx

The NetApp FAS2200 series offers powerful, affordable, flexible data storage for midsized businesses and distributed enterprises. The NetApp FAS2220 has six drives (600GB, 10K RPM, SAS), four 1GbE ports, and two 10GbE ports.

For more information, see:

http://www.netapp.com/us/products/storage-systems/fas2200/
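As a quick sizing aid, the following Python sketch totals servers and raw E5460 disk capacity for a master rack plus a number of expansion racks. It uses the counts given in this document:

- 60 x 3TB drives per E5460;
- three E5460s in the master rack and four in each expansion rack;
- 16 servers per rack;
- up to nine expansion racks per UCS domain.

It reports raw capacity only. RAID volume groups, hot spares, and HDFS replication reduce the usable space and are not modeled.

```python
# Raw (pre-RAID) capacity sketch using counts stated in this document.
DRIVES_PER_E5460 = 60
DRIVE_TB = 3  # 3TB 7.2K RPM drives (decimal TB, raw)
MAX_EXPANSION_RACKS = 9

RACKS = {
    # rack type: (C220M3 servers, E5460 arrays)
    "master": (16, 3),
    "expansion": (16, 4),
}


def raw_tb(arrays: int) -> int:
    """Raw drive capacity of the given number of E5460 arrays."""
    return arrays * DRIVES_PER_E5460 * DRIVE_TB


def cluster_totals(expansion_racks: int) -> tuple[int, int]:
    """Return (servers, raw TB) for one master rack plus N expansion racks."""
    if not 0 <= expansion_racks <= MAX_EXPANSION_RACKS:
        raise ValueError("one UCS domain supports up to 9 expansion racks")
    m_servers, m_arrays = RACKS["master"]
    e_servers, e_arrays = RACKS["expansion"]
    servers = m_servers + expansion_racks * e_servers
    tb = raw_tb(m_arrays) + expansion_racks * raw_tb(e_arrays)
    return servers, tb


for n in (0, MAX_EXPANSION_RACKS):
    servers, tb = cluster_totals(n)
    print(f"master + {n} expansion rack(s): {servers} servers, {tb} TB raw")
```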

Server Configuration and Cabling

Figure 3 illustrates the physical connectivity of Cisco UCS 6296UP Fabric Interconnects, Cisco Nexus 2232PP Fabric Extenders, and Cisco UCS C220M3 Servers.

Figure 3 Cisco Hardware Connectivity

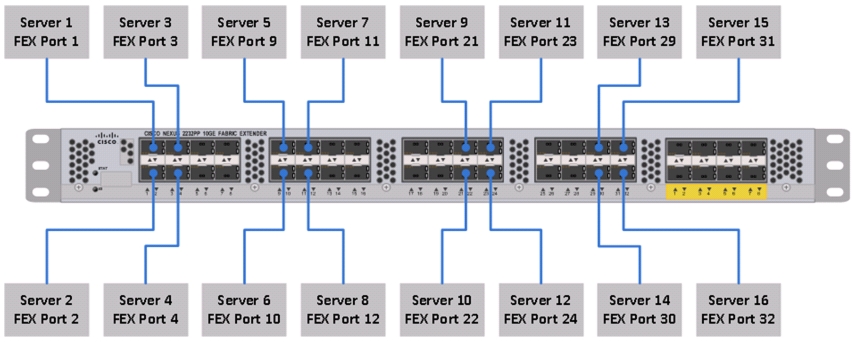

Figure 4 shows the ports of the Cisco Nexus 2232PP Fabric Extender connecting the Cisco UCS C220M3 Servers. Sixteen Cisco UCS C220M3 Servers are used in the master and expansion rack configurations offered by the FlexPod Select for Hadoop.

Figure 4 Connectivity Diagram of Cisco Nexus 2232PP FEX and Cisco UCS C220M3 Servers

Note

The Cisco UCS Manager version used for this deployment is 2.1(1e).

For more information on configuring single-wire management, see:

http://www.cisco.com/en/US/docs/unified_computing/ucs/c-series_integration/ucsm2.1/b_UCSM2-1_C-Integration_chapter_010.html

For more information on physical connectivity illustrations and cluster setup, see:

http://www.cisco.com/en/US/docs/unified_computing/ucs/c-series_integration/ucsm2.1/b_UCSM2-1_C-Integration_chapter_010.html#reference_FE5B914256CB4C47B30287D2F9CE3597

Software Requirements

For this deployment we have used the Cloudera Distribution for Apache Hadoop (CDH), a leading open source distribution of Apache Hadoop.

CDH

The Cloudera Distribution for Apache Hadoop version used is v4.x (CDH4). For more information on Cloudera, see:

RHEL

The supported operating system is Red Hat Enterprise Linux Server 6.2. For more information on Linux support, see:

Software Versions

Table 3 provides the software version details of all the software requirements for this model.

Fabric Configuration

This section provides details for configuring a fully redundant, highly available FlexPod Select for Hadoop. Follow these steps to configure the Cisco UCS 6296UP Fabric Interconnects.

1.

Configure FI A

2.

Configure FI B

3.

Connect to the IP address of FI A using a web browser, and launch Cisco UCS Manager.

4.

Edit the chassis discovery policy.

5.

Enable the server and uplink ports.

6.

Create pools and policies for the service profile template.

7.

Create a service profile (SP) template and 16 service profiles.

8.

Start the discovery process.

9.

Associate the service profiles with the servers.

10.

Configure the FIs for the NetApp FAS2220.

Performing an Initial Setup of Cisco UCS 6296UP Fabric Interconnects

Follow these steps for initial setup of the Cisco UCS 6296 Fabric Interconnects:

Cisco UCS 6296UP FI A

1.

Connect to the console port on the first Cisco UCS 6296 Fabric Interconnect.

2.

At the configuration method prompt, enter console.

3.

If asked to either do a new setup or restore from backup, enter setup to continue.

4.

Enter y to continue to set up a new fabric interconnect.

5.

Enter y to enforce strong passwords.

6.

Enter the password for the admin user.

7.

Enter the same password again to confirm the password for the admin user.

8.

When asked if this fabric interconnect is part of a cluster, enter y to continue.

9.

Enter A for the switch fabric.

10.

Enter the cluster name for the system name.

11.

Enter the Mgmt0 IPv4 address for management port on the fabric interconnect.

12.

Enter the Mgmt0 IPv4 subnet mask for the management port on the fabric interconnect.

13.

Enter the IPv4 address of the default gateway.

14.

Enter the cluster IPv4 address.

15.

To configure DNS, enter y.

16.

Enter the DNS IPv4 address.

17.

Enter y to set up the default domain name.

18.

Enter the default domain name.

19.

Review the settings that were printed to the console, and if they are correct, enter yes to save the configuration.

20.

Wait for the login prompt to make sure the configuration is saved successfully.

Cisco UCS 6296UP FI B

1.

Connect to the console port on the second Cisco UCS 6296 Fabric Interconnect.

2.

At the configuration method prompt, enter console.

3.

The installer detects the presence of the partner fabric interconnect and adds this fabric interconnect to the cluster. Enter y to continue the installation.

4.

Enter the admin password for the first fabric interconnect.

5.

Enter the Mgmt0 IPv4 address for the management port on the subordinate fabric interconnect.

6.

Enter y to save the configuration.

7.

Wait for the login prompt to make sure the configuration is saved successfully.
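The two setup dialogs above ask for four addresses: the Mgmt0 address of each fabric interconnect, a subnet mask, a default gateway, and a shared cluster IPv4 address. The following Python sketch checks a planned set of these values before you run the dialogs. All four addresses must be distinct. This sketch also assumes they all sit in one management subnet and are not the subnet's network or broadcast address. The example addresses are hypothetical, taken from the RFC 5737 documentation range, and are not values from this deployment.

```python
import ipaddress


def check_fi_ip_plan(fi_a: str, fi_b: str, cluster_ip: str,
                     gateway: str, netmask: str) -> list[str]:
    """Return a list of problems found in a planned FI management IP plan."""
    problems = []
    addrs = {
        "FI A Mgmt0": ipaddress.IPv4Address(fi_a),
        "FI B Mgmt0": ipaddress.IPv4Address(fi_b),
        "cluster IPv4": ipaddress.IPv4Address(cluster_ip),
        "default gateway": ipaddress.IPv4Address(gateway),
    }
    # Derive the management subnet from FI A's address and the mask.
    subnet = ipaddress.IPv4Network(f"{fi_a}/{netmask}", strict=False)

    if len(set(addrs.values())) != len(addrs):
        problems.append("addresses are not all distinct")
    for name, addr in addrs.items():
        if addr not in subnet:
            problems.append(f"{name} {addr} is outside {subnet}")
        elif addr in (subnet.network_address, subnet.broadcast_address):
            problems.append(f"{name} {addr} is a network/broadcast address")
    return problems


# Hypothetical example values (RFC 5737 range), not from this design.
print(check_fi_ip_plan("192.0.2.11", "192.0.2.12", "192.0.2.13",
                       "192.0.2.1", "255.255.255.0"))  # []
```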

For more information on configuring Cisco UCS 6200 Series Fabric Interconnect, see:

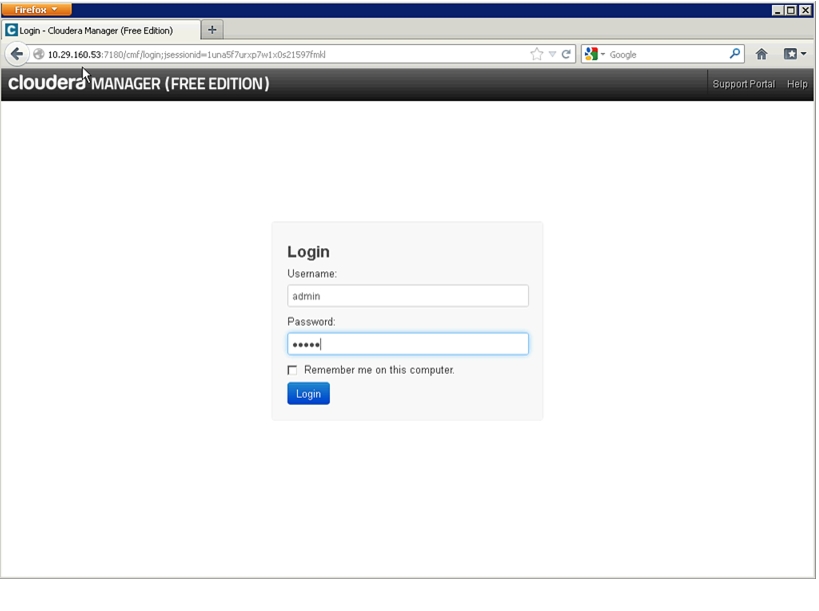

Logging into Cisco UCS Manager

Follow these steps to log into Cisco UCS Manager:

1.

Open a Web browser and type the Cisco UCS 6296UP Fabric Interconnect cluster address.

2.

If a Security Alert dialog box appears, click Yes to accept the security certificate and continue.

3.

In the Cisco UCS Manager launch page, click Launch UCS Manager.

4.

When prompted, enter admin as the user name, enter the administrative password, and click Login to log in to the Cisco UCS Manager GUI.

Upgrade Cisco UCS Manager Software to Version 2.1(1e)

This document assumes the use of Cisco UCS Manager 2.1(1e). For more information on upgrading to the Cisco UCS 2.1 release, see:

That link provides information on upgrading Cisco UCS Manager software and Cisco UCS 6296 Fabric Interconnect software to version 2.1(1e).

Note

Make sure the Cisco UCS C-Series version 2.1(1e) software bundle is loaded on the Fabric Interconnects.

Adding a Block of IP Addresses for KVM Console

Follow these steps to create a block of KVM IP addresses for server access in the Cisco UCS Manager GUI:

1.

Select the LAN tab at the top in the left pane in the UCSM GUI.

2.

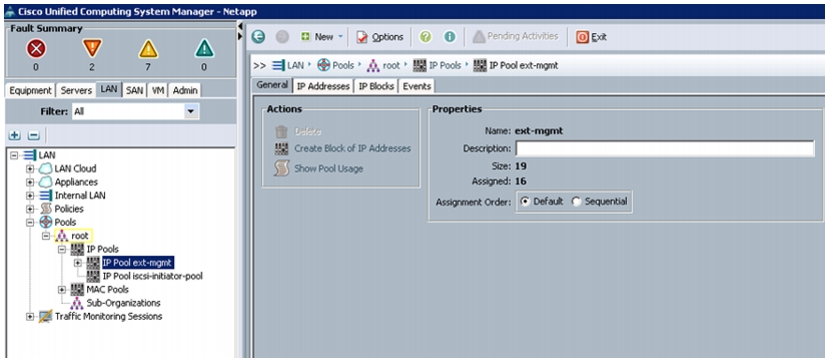

Select Pools > IP Pools > IP Pool ext-mgmt as shown in Figure 5.

Figure 5 Management IP Pool in Cisco UCS Manager

3.

Right-click the IP Pool ext-mgmt.

4.

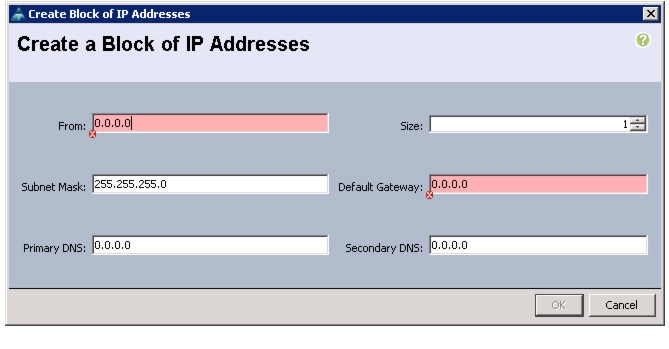

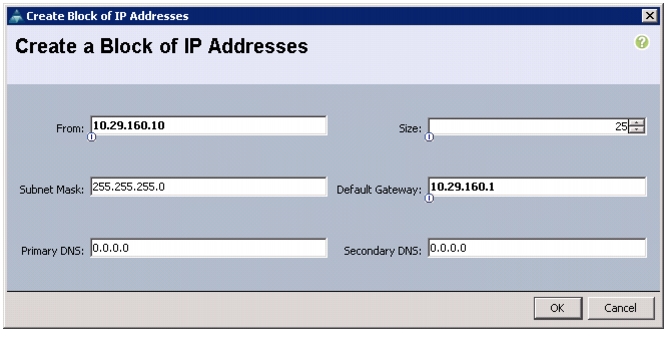

Select Create Block of IP Addresses. Create Block of IP Address window appears as shown in Figure 6.

Figure 6 Creating a Block of IP Addresses

5.

Enter the starting IP address of the block, the number of IP addresses needed, and the subnet mask and default gateway.

Figure 7 Entering the Block of IP Addresses

6.

Click OK to create the IP block.

7.

Click OK in the confirmation message box.
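The KVM block is defined by a starting address and a size. The whole range therefore needs to fit in the management subnet without colliding with addresses already assigned, such as the fabric interconnect Mgmt0 and cluster addresses. The following Python sketch expands and checks such a block. It assumes one KVM address per Cisco UCS C220M3 server, which gives 16 addresses for one rack. All addresses shown are hypothetical.

```python
import ipaddress


def kvm_block(start: str, size: int, netmask: str, gateway: str,
              reserved: set[str]) -> list[ipaddress.IPv4Address]:
    """Expand a 'starting address + size' IP block and sanity-check it."""
    first = ipaddress.IPv4Address(start)
    subnet = ipaddress.IPv4Network(f"{start}/{netmask}", strict=False)
    block = [first + i for i in range(size)]
    taken = {ipaddress.IPv4Address(a) for a in reserved | {gateway}}

    for addr in block:
        # Every address must be a usable host address in the subnet.
        if addr not in subnet or addr in (subnet.network_address,
                                          subnet.broadcast_address):
            raise ValueError(f"{addr} is not a usable host in {subnet}")
        # And must not collide with FI, cluster, or gateway addresses.
        if addr in taken:
            raise ValueError(f"{addr} is already in use")
    return block


# Hypothetical: 16 servers, FI A/B Mgmt0 and cluster IP reserved.
block = kvm_block("192.0.2.101", 16, "255.255.255.0", "192.0.2.1",
                  reserved={"192.0.2.11", "192.0.2.12", "192.0.2.13"})
print(block[0], "-", block[-1])  # 192.0.2.101 - 192.0.2.116
```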

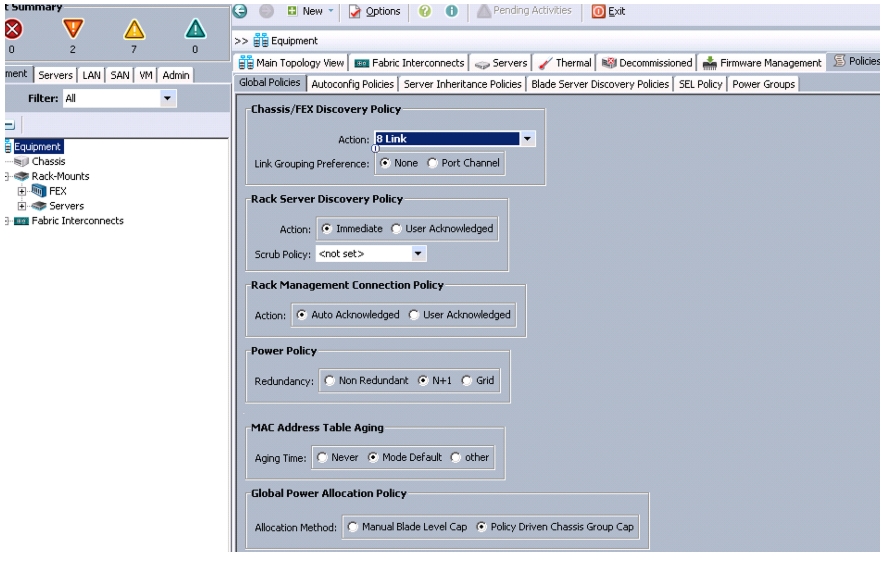

Editing the Chassis Discovery Policy

Setting the discovery policy now simplifies the future addition of Cisco UCS B-Series chassis and of additional fabric extenders for further C-Series connectivity.

To modify the chassis discovery policy, follow these steps:

1.

Navigate to the Equipment tab in the left pane in the UCSM GUI.

2.

In the right pane, select the Policies tab.

3.

Under Global Policies, change the Chassis Discovery Policy to 8-link as shown in Figure 8.

Figure 8 Editing the Chassis Discovery Policy

4.

Click Save Changes in the bottom right corner in the Cisco UCSM GUI.

5.

Click OK.

Enabling Server and Uplink Ports

To enable the server ports and uplink ports, follow these steps:

1.

Select the Equipment tab on the top left corner in the left pane in the UCSM GUI.

2.

Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3.

Expand the Unconfigured Ethernet Ports.

4.

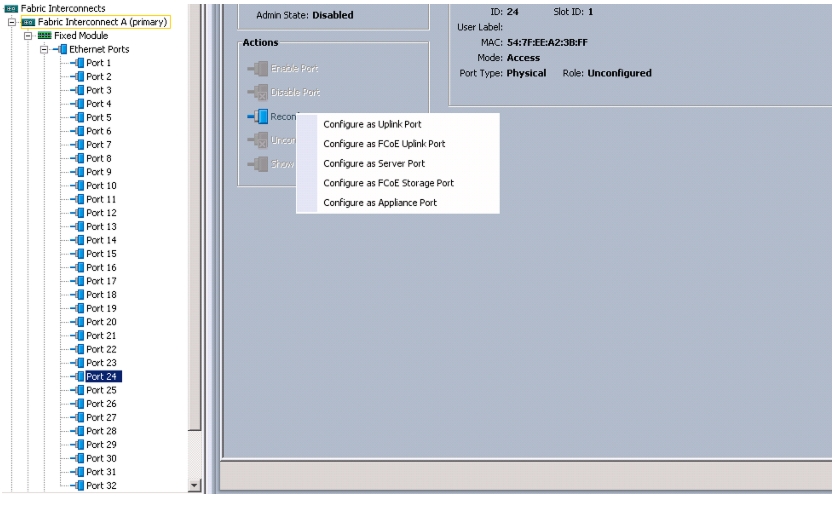

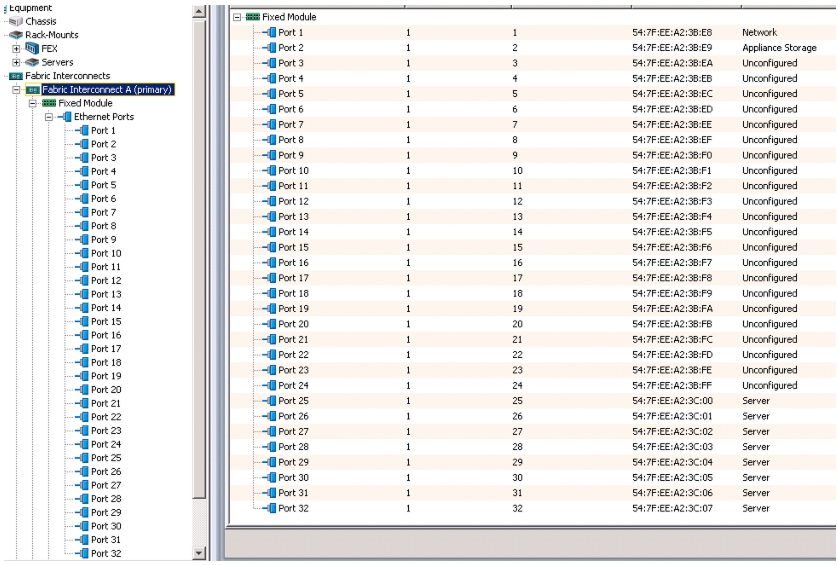

Select the ports that are connected to the Cisco Nexus 2232PP FEXs (8 per FEX), right-click them, and select Reconfigure > Configure as Server Port as shown in Figure 9.

Figure 9 Enabling Server Ports

5.

Select port 1, which is connected to the Cisco Catalyst 2960-S switch, right-click it, and select Reconfigure > Configure as Uplink Port.

6.

Select Show Interface and select 10GB for Uplink Connection.

7.

A pop-up window appears to confirm your selection. Click Yes, then OK to continue.

8.

Select Equipment > Fabric Interconnects > Fabric Interconnect B (subordinate) > Fixed Module.

9.

Expand the Unconfigured Ethernet Ports.

10.

Select the ports that are connected to the Cisco Nexus 2232PP FEXs (8 per FEX), right-click them, and select Reconfigure > Configure as Server Port.

11.

A pop-up window appears to confirm your selection. Click Yes, then OK to continue.

12.

Select port 1, which is connected to the Cisco Catalyst 2960-S switch, right-click it, and select Reconfigure > Configure as Uplink Port.

13.

Select Show Interface and select 10GB for Uplink Connection.

14.

A pop-up window appears to confirm your selection. Click Yes, then OK to continue.

Figure 10 Window Showing Server Ports and Uplink Ports

Creating Pools for Service Profile Template

Creating an Organization

Organizations are used to organize and restrict access to various groups within the IT organization, thereby enabling multi-tenancy of the compute resources. This document does not assume the use of Organizations; however, the necessary steps are provided for future reference.

Follow these steps to configure an organization in the Cisco UCS Manager GUI:

1.

Click New on the top left corner in the right pane in the UCSM GUI.

2.

Select Create Organization from the options.

3.

Enter a name for the organization.

4.

(Optional) enter a description for the organization.

5.

Click OK.

6.

Click OK in the success message box.

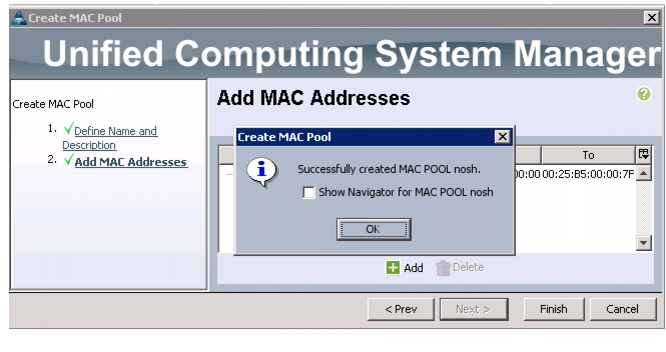

Creating MAC Address Pools

Follow these steps to configure the necessary MAC address pools in the Cisco UCS Manager GUI:

1.

Select the LAN tab in the left pane in the UCSM GUI.

2.

Select Pools > root.

3.

Right-click the MAC Pools under the root organization.

4.

Select Create MAC Pool to create the MAC address pool.

5.

Enter nosh for the name of the MAC pool.

6.

(Optional) enter a description of the MAC pool.

7.

Click Next.

8.

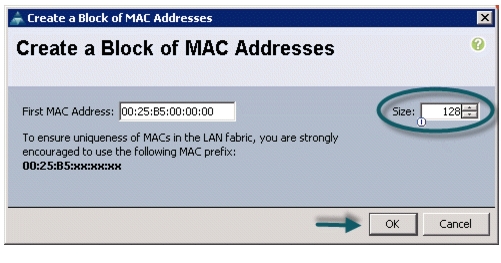

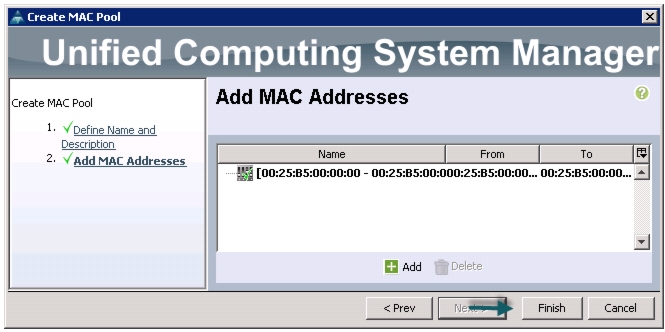

Click Add.

9.

Specify a starting MAC address.

10.

Specify a size for the MAC address pool that is sufficient to support the available server resources. See Figure 11, Figure 12, and Figure 13.

Figure 11 Specifying the First MAC Address and Size

Figure 12 Range of MAC Addresses

Figure 13 Created MAC Pool

11.

Click OK.

12.

Click Finish.

13.

Click OK in the success message box.
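A simple way to size the MAC pool is to multiply the number of servers by the number of vNICs per server. This deployment uses three vNICs per server (eth0, eth1, and eth2, one per VLAN), so one rack of 16 servers needs 48 addresses. The following Python sketch computes the last address of a pool from its first address and that size. The starting MAC uses the 00:25:B5 prefix that Cisco recommends for UCS MAC pools, but the remaining octets are a hypothetical example.

```python
def mac_to_int(mac: str) -> int:
    """Convert 'XX:XX:XX:XX:XX:XX' to a 48-bit integer."""
    return int(mac.replace(":", ""), 16)


def int_to_mac(value: int) -> str:
    """Convert a 48-bit integer back to colon-separated upper-case hex."""
    raw = f"{value:012X}"
    return ":".join(raw[i:i + 2] for i in range(0, 12, 2))


def mac_pool(first: str, servers: int,
             vnics_per_server: int) -> tuple[str, str, int]:
    """Return (first MAC, last MAC, size) for a pool covering all vNICs."""
    size = servers * vnics_per_server
    start = mac_to_int(first)
    last = start + size - 1
    # Keep the whole pool inside the same 3-byte prefix (OUI).
    if last >> 24 != start >> 24:
        raise ValueError("pool would run past the 3-byte prefix")
    return int_to_mac(start), int_to_mac(last), size


# Hypothetical starting MAC; 16 servers x 3 vNICs (eth0, eth1, eth2).
print(mac_pool("00:25:B5:00:00:00", servers=16, vnics_per_server=3))
# ('00:25:B5:00:00:00', '00:25:B5:00:00:2F', 48)
```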

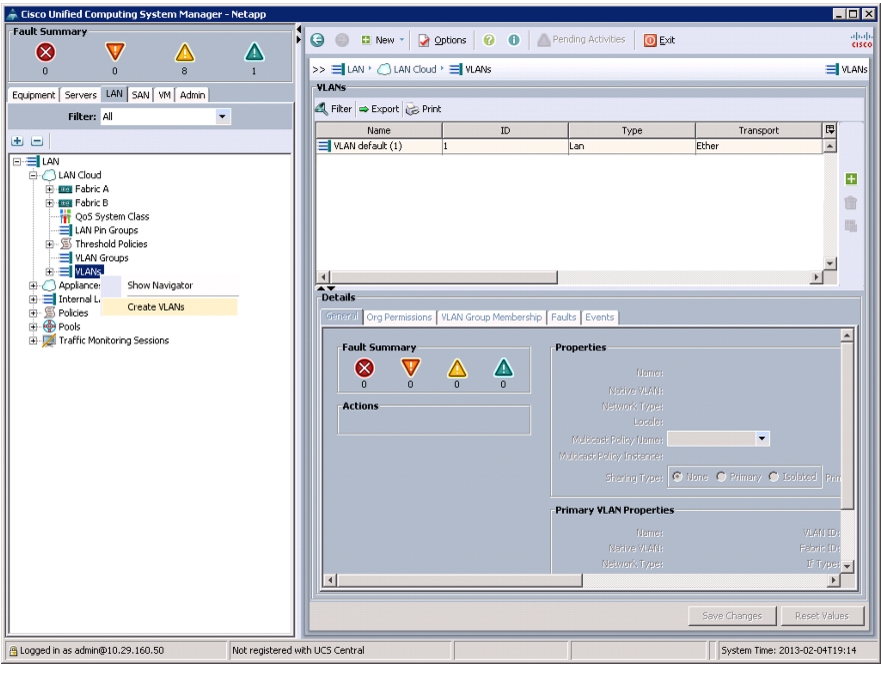

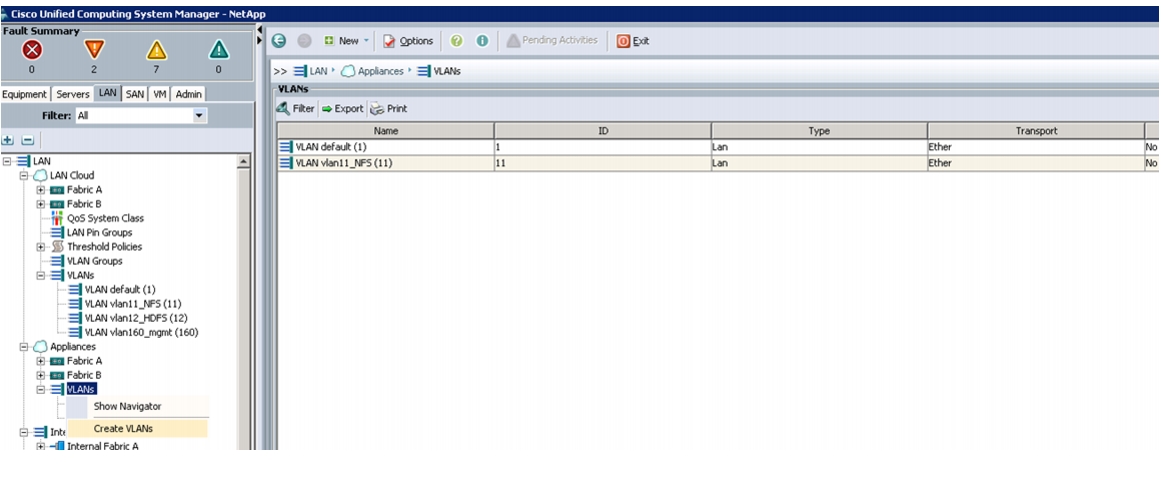

Configuring VLANs

VLANs are configured as shown in Table 4.

For this deployment we are using eth0 (vlan160_mgmt) for management packets, eth1 (vlan11_NFS) for NFS data traffic and eth2 (vlan12_HDFS) for HDFS data traffic.

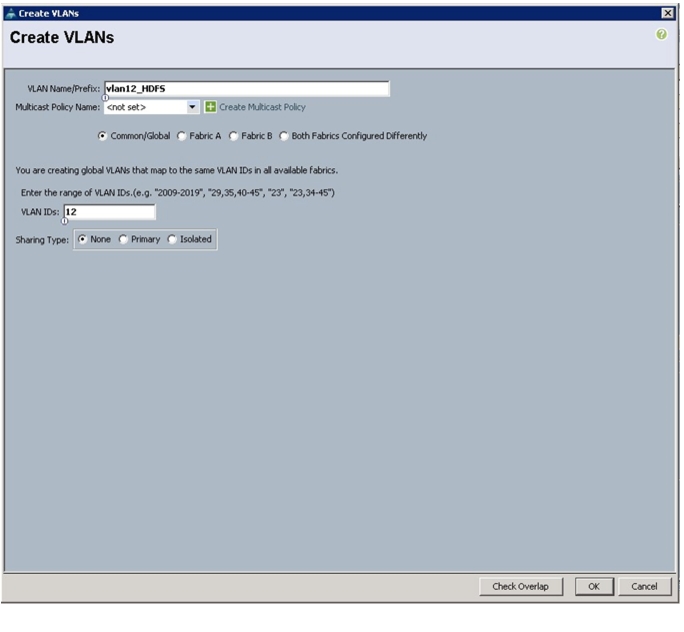

Follow these steps to configure VLANs in the Cisco UCS Manager GUI:

1.

Select the LAN tab in the left pane in the UCSM GUI.

2.

Select LAN > VLANs.

3.

Right-click the VLANs under the root organization.

4.

Select Create VLANs to create the VLAN.

Figure 14 Creating VLANs

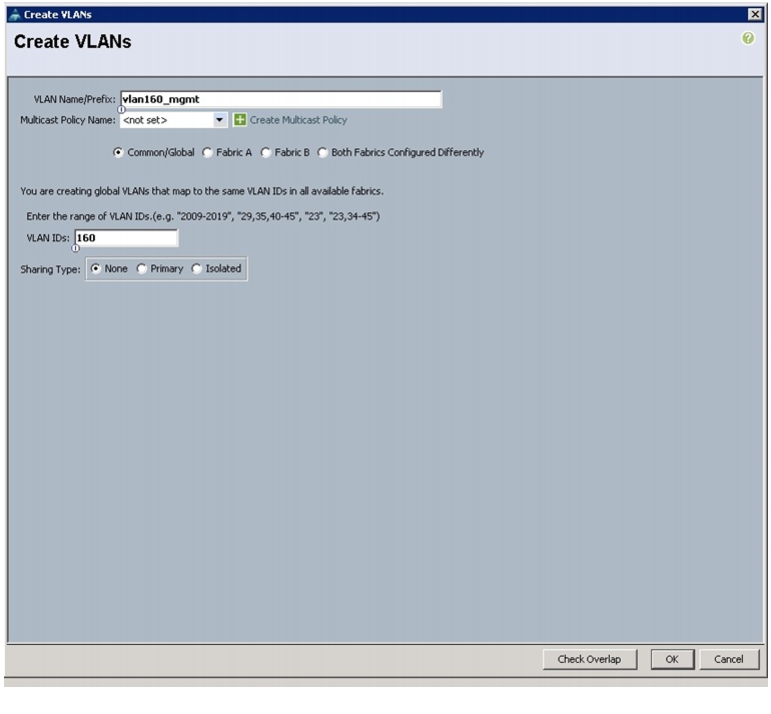

5.

Enter vlan160_mgmt for the VLAN Name.

6.

Select Common/Global for vlan160_mgmt.

7.

In the VLAN IDs field of the Create VLANs dialog box, enter 160.

Figure 15 Creating VLAN for Fabric A

8.

Click OK, and then click Finish.

9.

Click OK in the success message box.

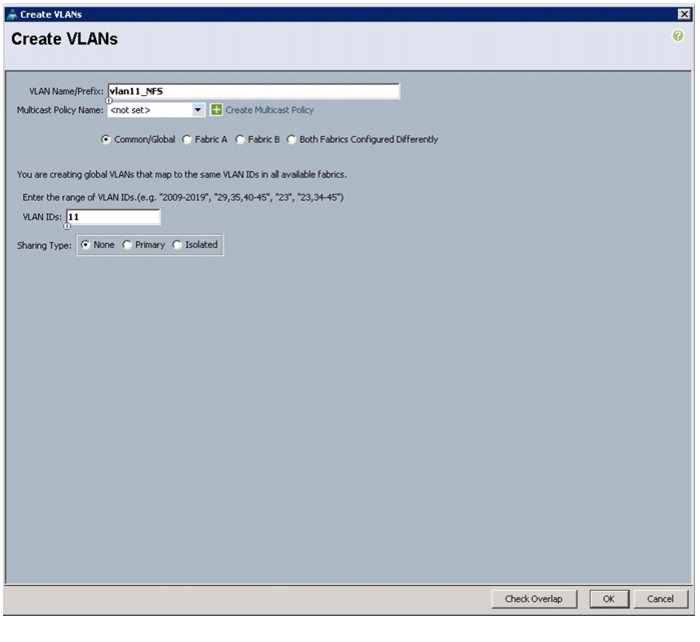

10.

Select the LAN tab in the left pane again.

11.

Select LAN > VLANs.

12.

Right-click the VLANs under the root organization.

13.

Select Create VLANs to create the VLAN.

14.

Enter vlan11_NFS for the VLAN Name.

15.

Select Common/Global for vlan11_NFS.

16.

In the VLAN IDs field of the Create VLANs dialog box, enter 11.

Figure 16 Creating VLAN for Fabric B

17.

Click OK, and then click Finish.

18.

Click OK in the success message box.

19.

Select the LAN tab in the left pane again.

20.

Select LAN > VLANs.

21.

Right-click the VLANs under the root organization.

22.

Select Create VLANs to create the VLAN.

23.

Enter vlan12_HDFS for the VLAN Name.

24.

Select Common/Global for the vlan12_HDFS.

25.

In the VLAN IDs field of the Create VLANs dialog box, enter 12.

Figure 17 Creating Global HDFS VLAN

26.

Click OK, and then click Finish.

Note

All of the VLANs created need to be trunked to the upstream distribution switch connecting the fabric interconnects.
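The VLAN plan in this section can be captured as a small table and checked before it is entered in Cisco UCS Manager and trunked upstream. The following Python sketch uses the names, IDs, and interface mapping from this section. Its checks are illustrative:

- VLAN IDs are unique;
- each interface carries one VLAN;
- IDs fall within 1-4094, since IEEE 802.1Q reserves 0 and 4095.

The sketch prints the VLAN list that the upstream switch ports facing the fabric interconnects would need to allow.

```python
# VLAN plan from this section: name -> (VLAN ID, host interface, purpose).
VLANS = {
    "vlan160_mgmt": (160, "eth0", "management"),
    "vlan11_NFS": (11, "eth1", "NFS data"),
    "vlan12_HDFS": (12, "eth2", "HDFS data"),
}


def validate_vlans(vlans: dict[str, tuple[int, str, str]]) -> list[int]:
    """Check IDs and interface mapping; return sorted IDs to trunk upstream."""
    ids = [vid for vid, _iface, _purpose in vlans.values()]
    ifaces = [iface for _vid, iface, _purpose in vlans.values()]
    if len(set(ids)) != len(ids):
        raise ValueError("duplicate VLAN ID")
    if len(set(ifaces)) != len(ifaces):
        raise ValueError("two VLANs mapped to the same interface")
    for name, (vid, _iface, _purpose) in vlans.items():
        # 802.1Q reserves VLAN IDs 0 and 4095.
        if not 1 <= vid <= 4094:
            raise ValueError(f"{name}: VLAN {vid} is outside 1-4094")
    return sorted(ids)


# VLANs the upstream switch must trunk toward the fabric interconnects.
print("allowed VLANs:", ",".join(map(str, validate_vlans(VLANS))))
```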

Creating Server Pool

A server pool contains a set of servers. These servers typically share the same characteristics. Those characteristics can be their location in the chassis, or an attribute such as server type, amount of memory, local storage, type of CPU, or local drive configuration. You can manually assign a server to a server pool, or use server pool policies and server pool policy qualifications to automate the assignment.

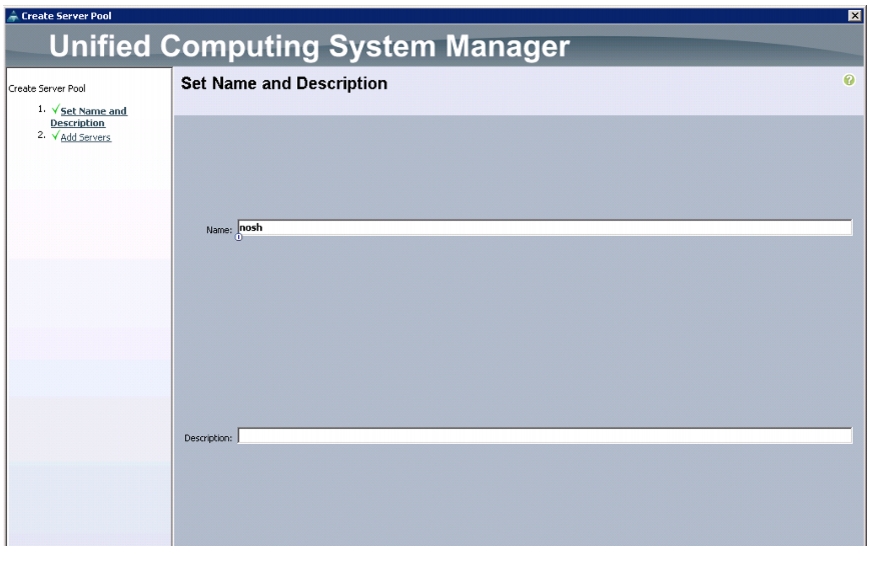

Follow these steps to configure the server pool in the Cisco UCS Manager GUI:

1.

Select the Servers tab in the left pane in the Cisco UCS Manager GUI.

2.

Select Pools > root.

3.

Right-click the Server Pools.

4.

Select Create Server Pool.

5.

Enter nosh for the Server Pool Name.

6.

(Optional) enter a description for the server pool.

Figure 18 Creating Server Pool

7.

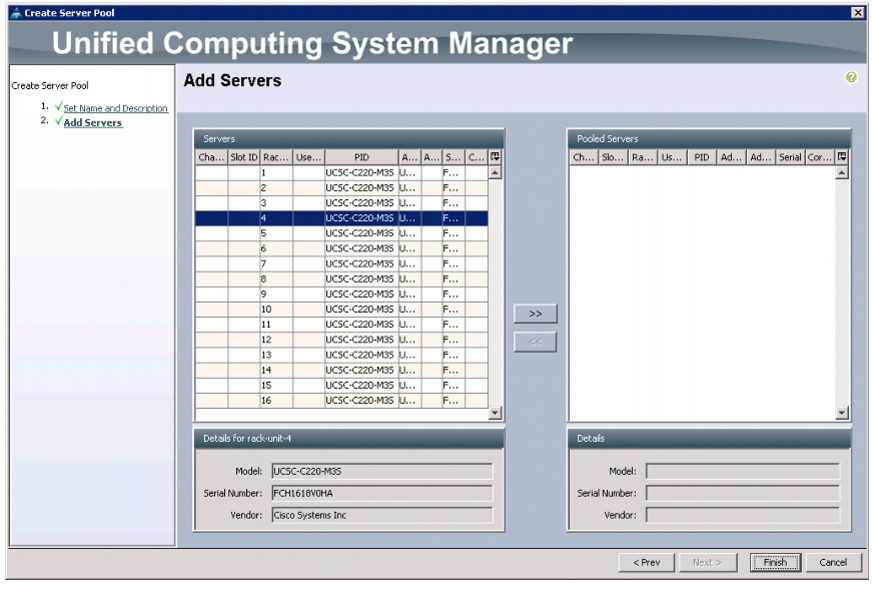

Click Next to add servers.

8.

Select all the Cisco UCS C220M3 servers to be added to the nosh server pool. Click >> to add them to the pool.

Figure 19 Adding Server Pool

9.

Click Finish.

10.

Click OK and then click Finish.

Creating Policies for Service Profile Template

Creating Host Firmware Package Policy

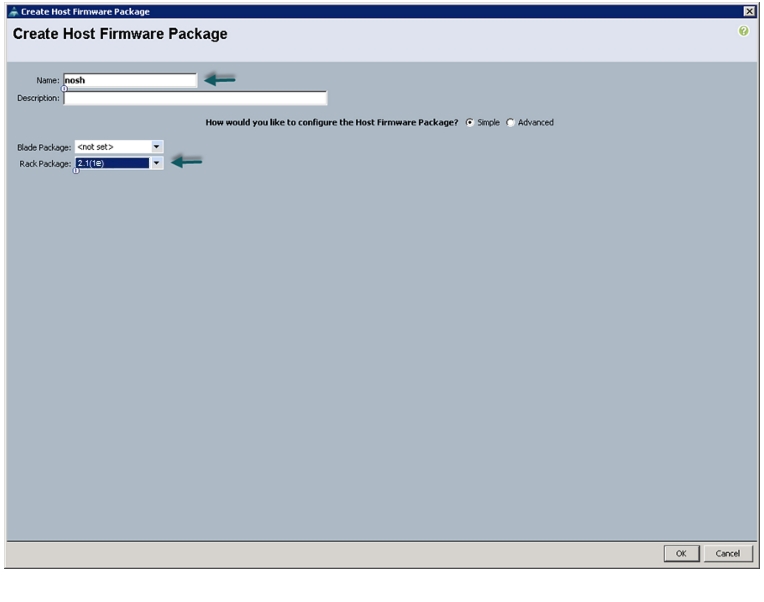

Firmware management policies allow the administrator to select the corresponding packages for a given server configuration. These often include adapter, BIOS, board controller, FC adapters, HBA option ROM, and storage controller properties.

Follow these steps to create a firmware management policy for a given server configuration in the Cisco UCS Manager GUI:

1.

Select the Servers tab in the left pane in the UCSM GUI.

2.

Select Policies > root.

3.

Right-click Host Firmware Packages.

4.

Select Create Host Firmware Package.

5.

Enter nosh as the Host firmware package name.

6.

Select Simple radio button to configure the Host Firmware package.

7.

Select the appropriate Rack package that you have.

Figure 20 Creating Host Firmware Package

8.

Click OK to finish creating the host firmware package.

9.

Click OK.

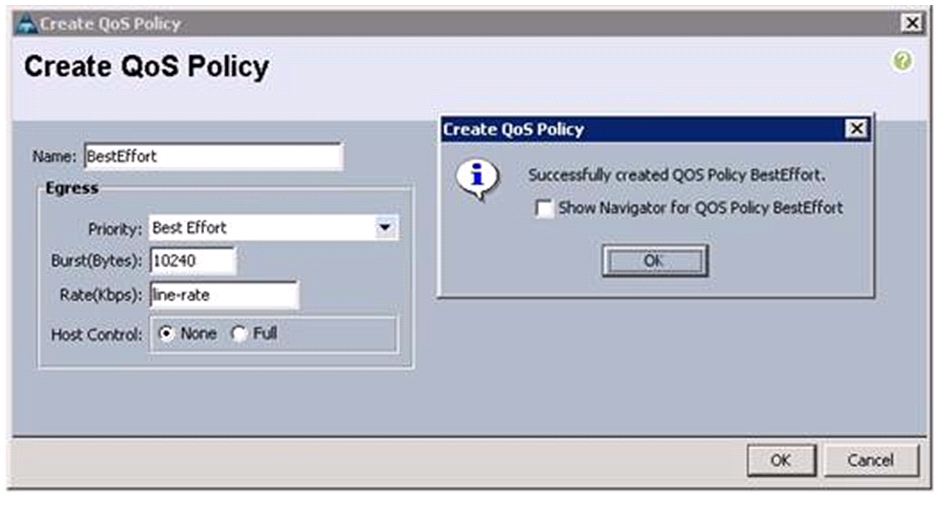

Creating QoS Policies

Follow these steps to create QoS policies for a given server configuration in the Cisco UCS Manager GUI:

BestEffort Policy

1.

Select the LAN tab in the left pane in the UCSM GUI.

2.

Select Policies > root.

3.

Right-click QoS Policies and select Create QoS Policy.

4.

Enter BestEffort as the name of the policy.

5.

Select Best Effort for Priority from the drop down menu.

6.

Keep the Burst (Bytes) field as default, which is 10240.

7.

Keep the Rate (Kbps) field as default, which is line-rate.

8.

Make sure the Host Control radio button is None.

9.

Click OK.

Figure 21 Creating QoS Policy - BestEffort

10.

In the pop-up window, click OK to complete the QoS policy creation.

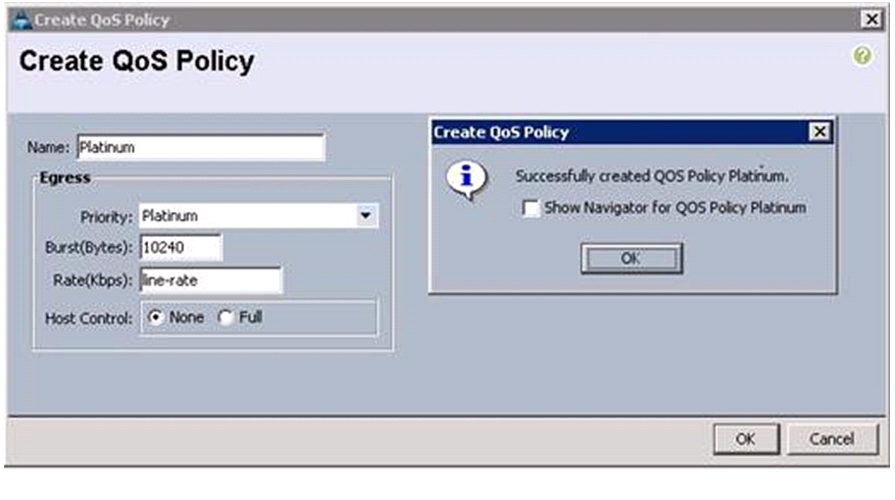

Platinum Policy

1.

Select the LAN tab in the left pane in the UCSM GUI.

2.

Select Policies > root.

3.

Right-click QoS Policies and select Create QoS Policy.

4.

Enter Platinum as the name of the policy.

5.

Select Platinum for Priority from the drop down menu.

6.

Keep the Burst (Bytes) field as default, which is 10240.

7.

Keep the Rate (Kbps) field as default, which is line-rate.

8.

Make sure the Host Control radio button is None.

9.

Click OK.

Figure 22 Creating QoS Policy - Platinum

10.

In the pop-up window, click OK to complete the QoS policy creation.

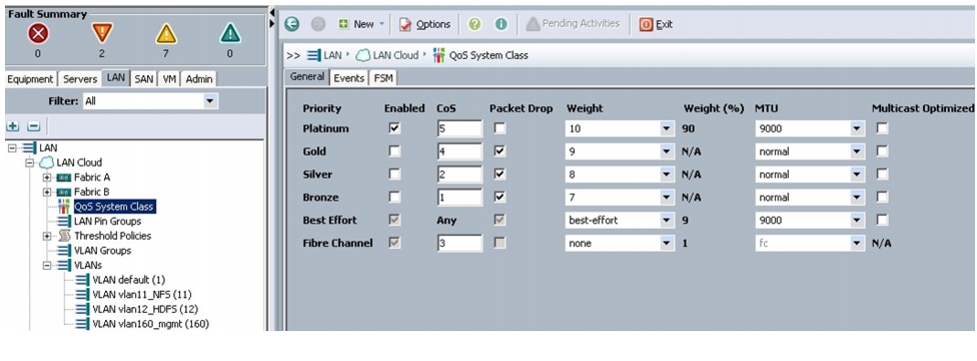

Setting Jumbo Frames

These steps provide details for setting Jumbo frames and enabling the quality of service in the Cisco UCS Fabric:

1.

Select the LAN tab in the left pane in the UCSM GUI.

2.

Select LAN Cloud > QoS System Class.

3.

In the right pane, select the General tab.

4.

In the Platinum row, enter 9000 for MTU.

5.

In the Best Effort row, enter 9000 for MTU.

6.

Check the Enabled check box next to Platinum.

Figure 23 Setting Jumbo Frame in Cisco UCS Fabric

7.

Click Save Changes.

8.

Click OK.
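After the operating system is installed and the 9000-byte vNICs are in place (both covered later in this document), you can check the jumbo-frame path end to end from a node. The sketch below assumes iputils ping on RHEL 6. The function names are hypothetical, and PEER is a placeholder for another node's address on a VLAN whose vNIC MTU is 9000. It relies on the fact that an IPv4 ICMP echo request carries a 20-byte IP header and an 8-byte ICMP header. The largest unfragmented payload for a 9000-byte MTU is therefore 9000 - 28 = 8972 bytes.

```shell
#!/usr/bin/env bash
# Sketch: check that 9000-byte frames cross the fabric without fragmentation.

# An IPv4 ICMP echo request adds a 20-byte IP header and an 8-byte ICMP
# header, so the largest payload that fits in one frame is MTU - 28.
icmp_payload_for_mtu() {
    local mtu=$1
    echo $(( mtu - 20 - 8 ))
}

# Send three pings with fragmentation prohibited (-M do) and a payload sized
# to fill the MTU exactly. "Message too long" or 100% packet loss suggests
# that some hop on the path (vNIC, QoS system class, or the peer) uses a
# smaller MTU. PEER is a placeholder address.
check_jumbo_path() {
    local peer=$1 mtu=${2:-9000}
    ping -c 3 -M do -s "$(icmp_payload_for_mtu "$mtu")" "$peer"
}

# Example (PEER is hypothetical):
#   check_jumbo_path PEER 9000
```

Prohibiting fragmentation is the key design choice here. Without -M do, an oversized ping could be fragmented and still succeed, which would hide an MTU mismatch.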

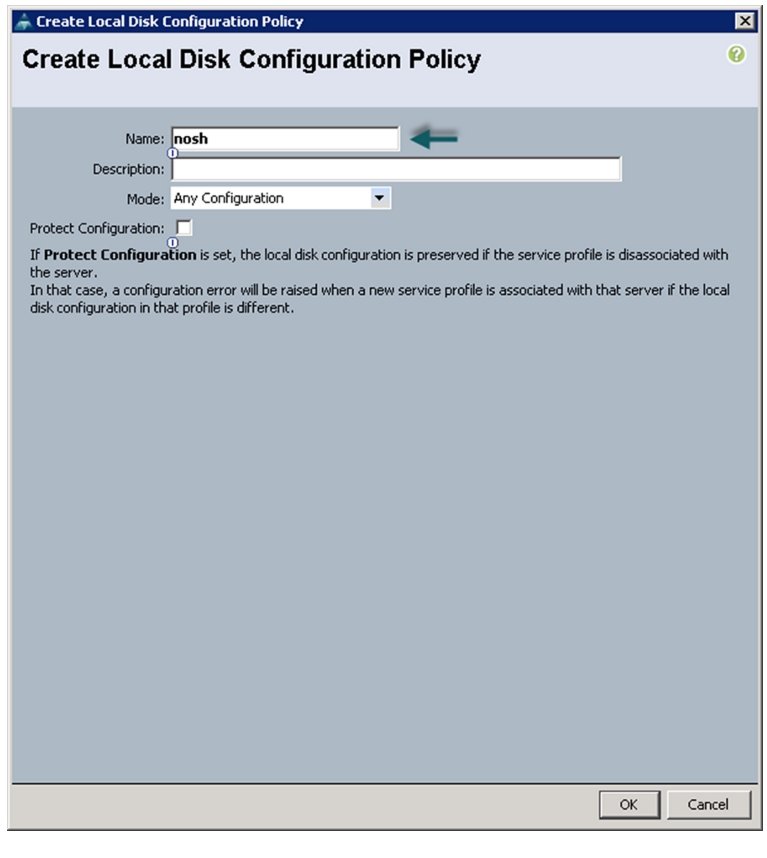

Create a Local Disk Configuration Policy

Follow these steps to create local disk configuration in the Cisco UCS Manager GUI:

1.

Select the Servers tab in the left pane in the UCSM GUI.

2.

Select Policies > root.

3.

Right-click Local Disk Config Policies.

4.

Select Create Local Disk Configuration Policy.

5.

Enter nosh as the local disk configuration policy name.

6.

Change the Mode to Any Configuration. Uncheck the Protect Configuration check box.

Figure 24 Configuring Local Disk Policy

7.

Click OK to create the Local Disk Configuration Policy.

8.

Click OK.

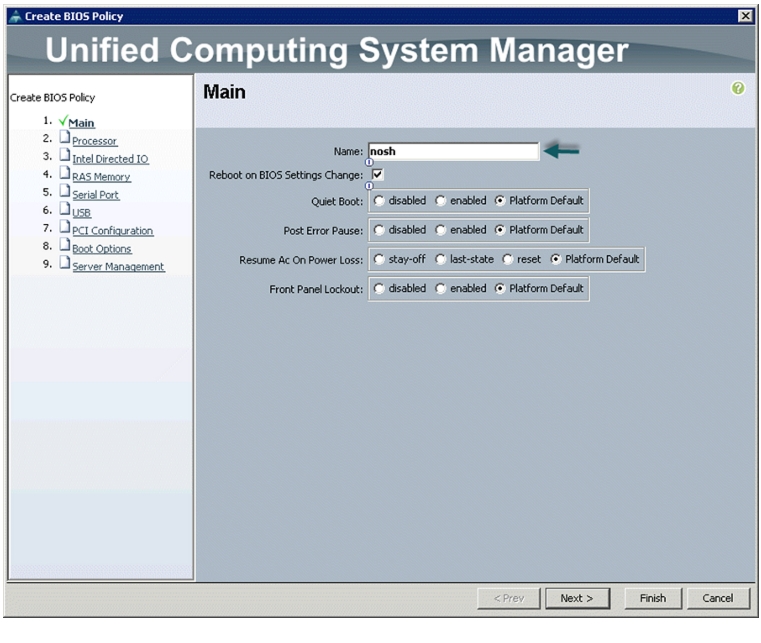

Create a Server BIOS Policy

The BIOS policy feature in Cisco UCS automates the BIOS configuration process.

The traditional method of setting the BIOS is manual and often error-prone. Creating a BIOS policy and assigning it to a server or group of servers makes the BIOS settings consistent and visible across those servers.

Follow these steps to create a server BIOS policy in the Cisco UCS Manager GUI:

1.

Select the Servers tab in the left pane in the UCSM GUI.

2.

Select Policies > root.

3.

Right-click BIOS Policies.

4.

Select Create BIOS Policy.

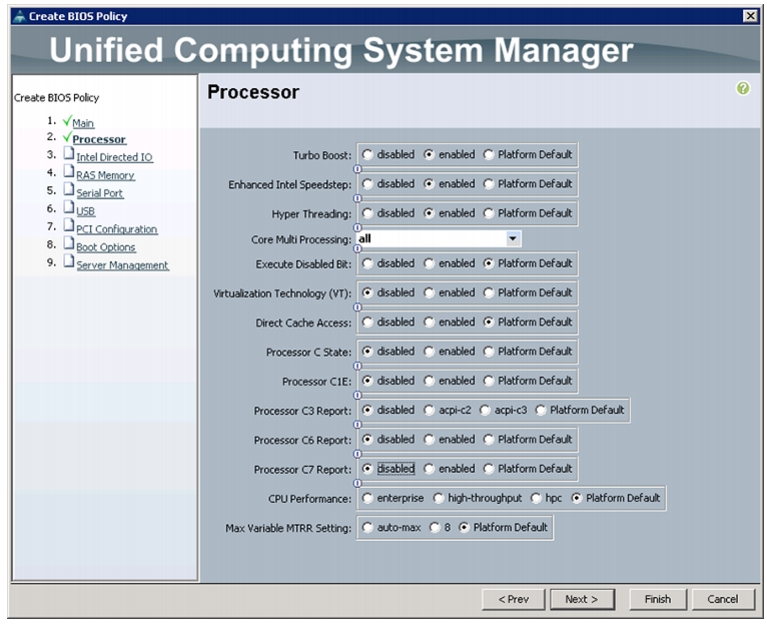

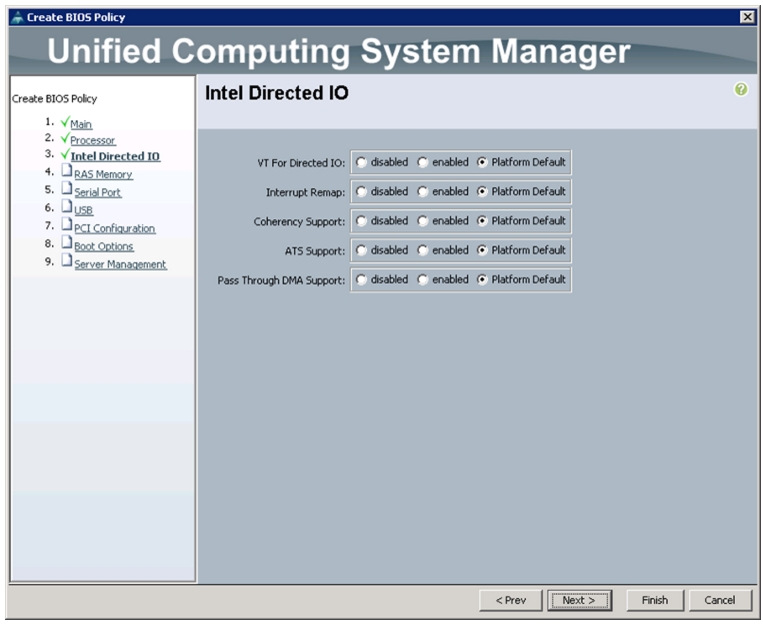

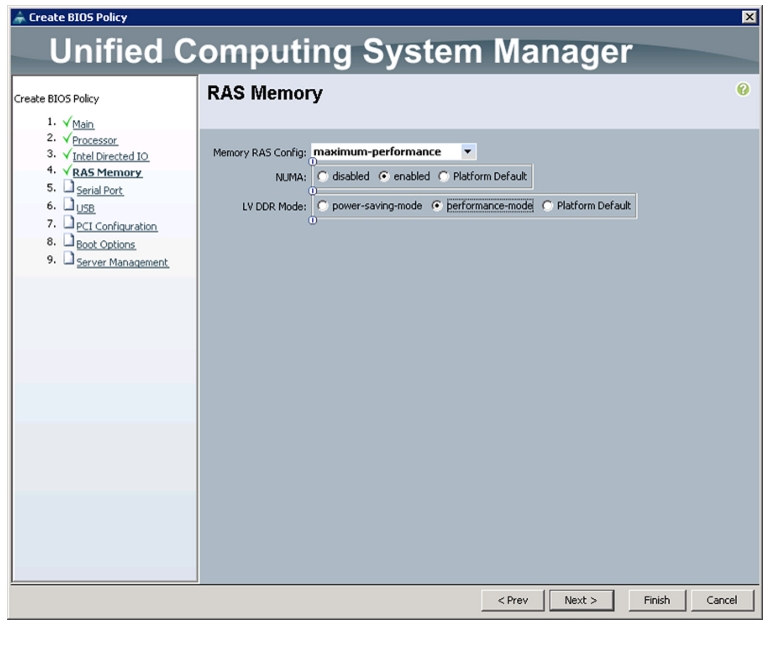

5.

Enter nosh as the BIOS policy name.

6.

Change the BIOS settings as per Figure 25, Figure 26, Figure 27, and Figure 28.

Figure 25 Creating BIOS Policy

Figure 26 Processor Settings

Figure 27 Intel Direct IO Settings

Figure 28 Memory Settings

7.

Click Finish to complete creating the BIOS policy.

8.

Click OK.

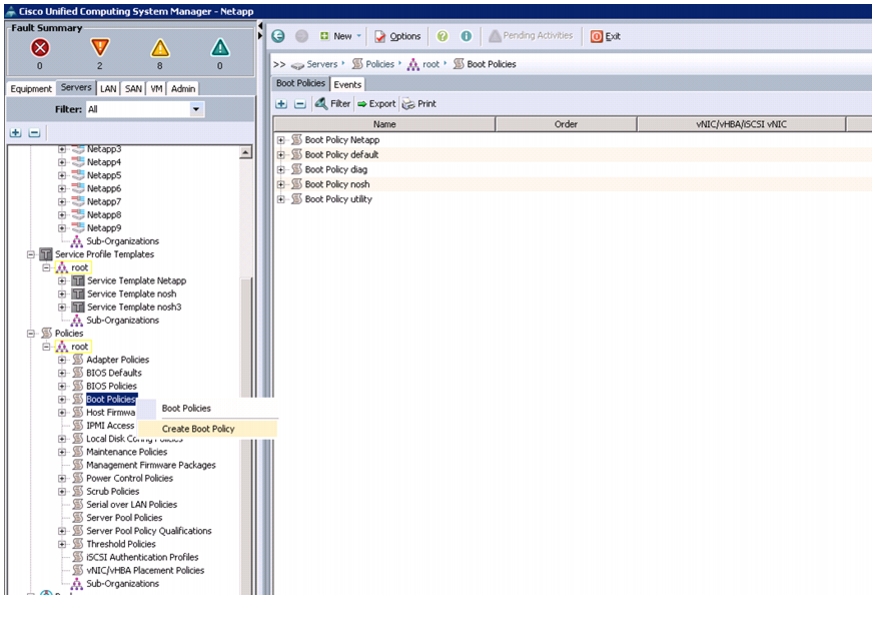

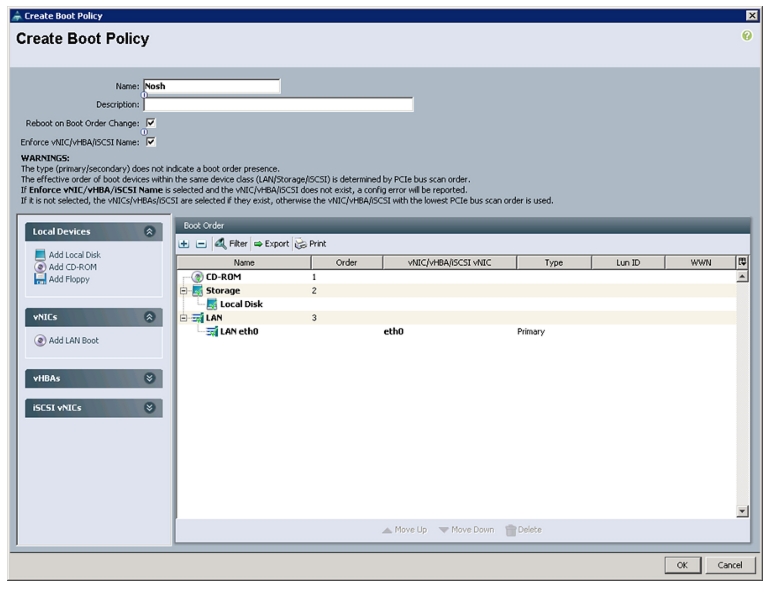

Creating Boot Policies

Follow these steps to create boot policies within the Cisco UCS Manager GUI:

1.

Select the Servers tab in the left pane in the UCSM GUI.

2.

Select Policies > root.

3.

Right-click the Boot Policies.

4.

Select Create Boot Policy.

Figure 29 Creating Boot Policy

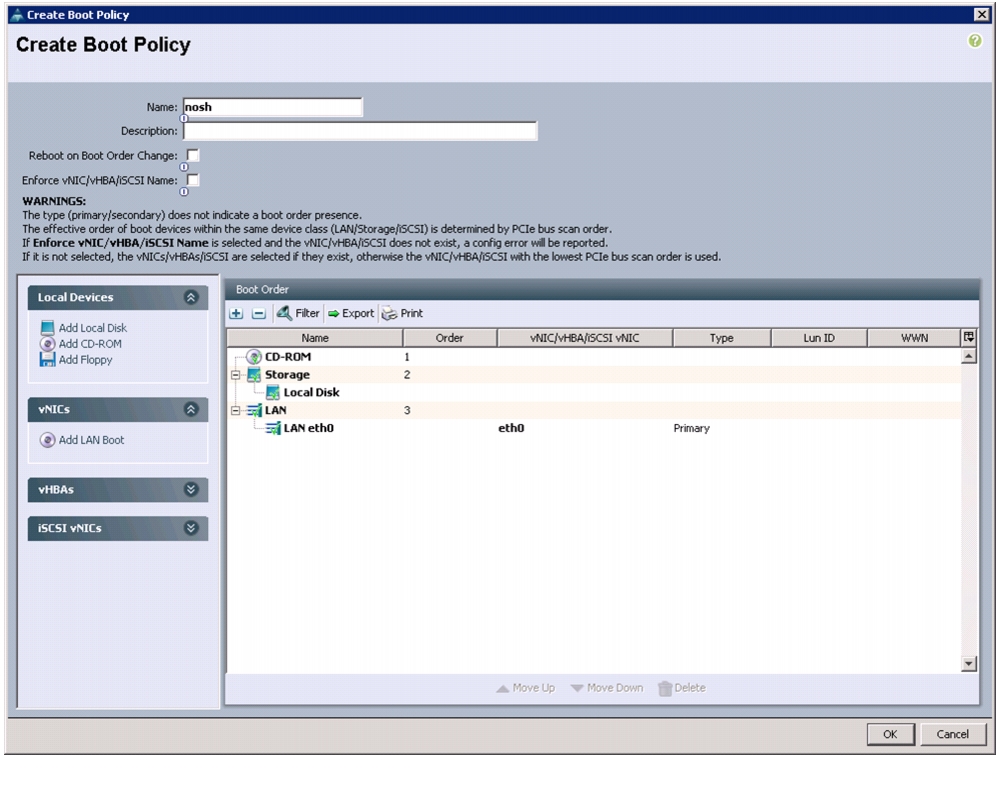

5.

Enter nosh as the boot policy name.

6.

(Optional) enter a description for the boot policy.

7.

Keep the Reboot on Boot Order Change check box unchecked.

8.

Expand Local Devices and select Add CD-ROM.

9.

Expand Local Devices and select Add Local Disk.

10.

Expand vNICs and select Add LAN Boot and enter eth0.

Figure 30 Creating Boot Order

11.

Click OK to add the Boot Policy.

12.

Click OK.

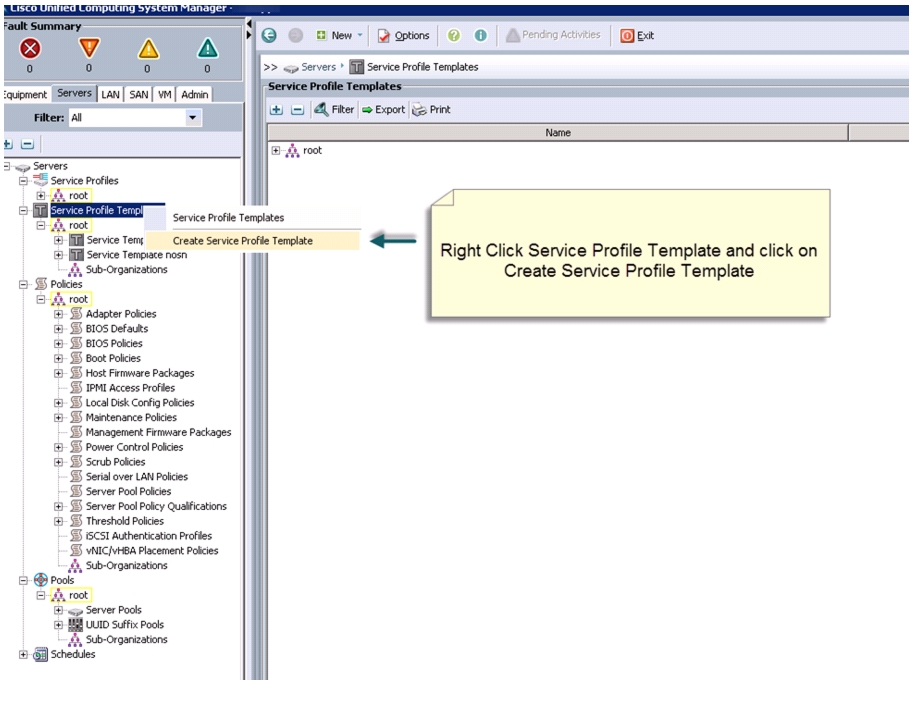

Creating Service Profile Template

To create a service profile template, follow these steps:

1.

Select the Servers tab in the left pane in the UCSM GUI.

2.

Select Service Profile Templates > root.

3.

Right-click root.

4.

Select Create Service Profile Template.

Figure 31 Creating Service Profile Template

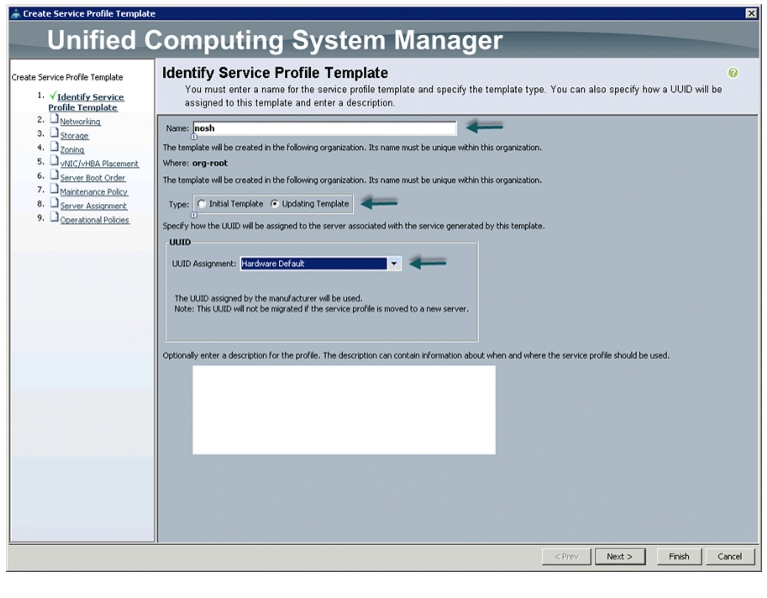

5.

The Create Service Profile Template window appears.

The following steps provide the detailed configuration procedure used to create a service profile template:

a.

Name the service profile template as nosh. Select the Updating Template radio button.

b.

In the UUID section, select Hardware Default as the UUID pool.

Figure 32 Identifying Service Profile Template

c.

Click Next to continue to the next section.

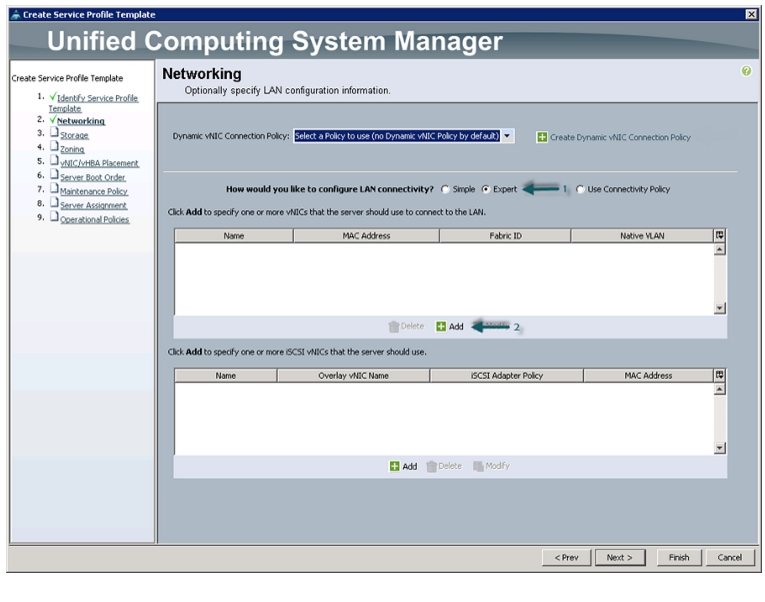

Configuring Network Settings for the Template

In the Networking window, follow these steps to create vNICs:

1.

Keep the Dynamic vNIC Connection Policy field as default.

2.

Select the Expert radio button for the option How would you like to configure LAN connectivity?

3.

Click Add to add a vNIC to the template.

Figure 33 Adding vNICs

4.

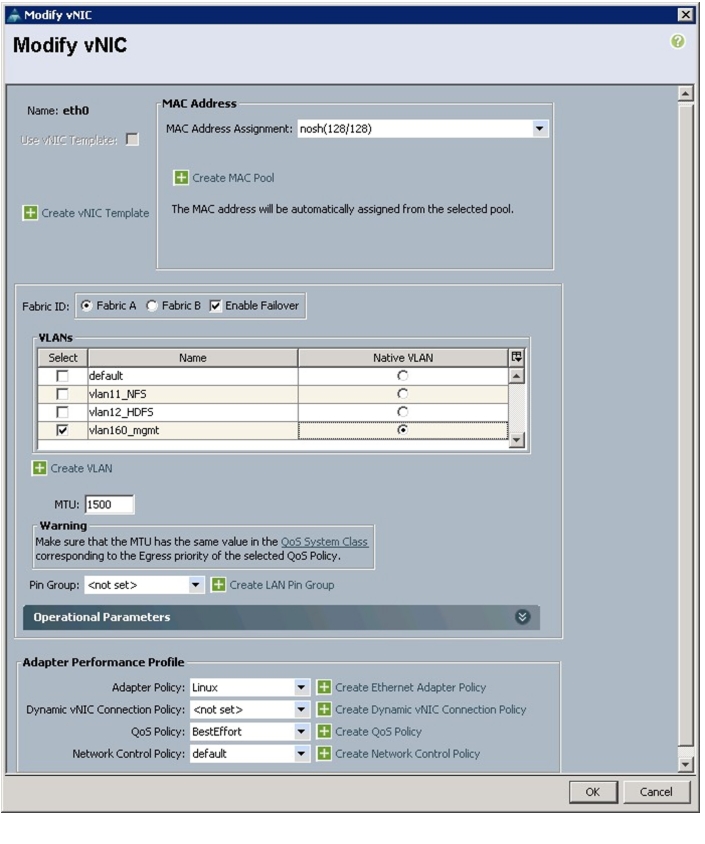

The Create vNIC window displays. Name the vNIC as eth0.

5.

Select nosh for the MAC Address Assignment pool.

6.

Select the Fabric A radio button and check the Enable failover check box for the Fabric ID.

7.

Check the vlan160_mgmt check box for VLANs and select the Native VLAN radio button.

8.

Select MTU size as 1500.

9.

Select adapter policy as Linux.

10.

Keep the Dynamic vNIC Connection Policy as <not set>.

11.

Select QoS Policy as BestEffort.

12.

Keep the Network Control Policy as Default.

Figure 34 Creating Management vNIC

13.

Click OK.

14.

Click Add to add another vNIC to the template.

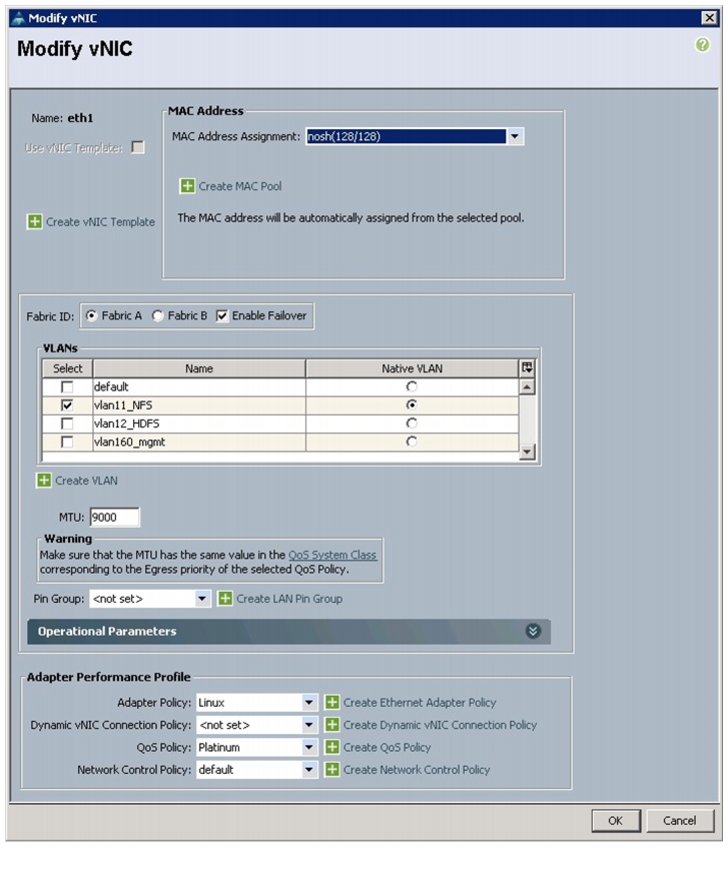

15.

The Create vNIC window appears. Name the vNIC as eth1.

16.

Select nosh for the MAC Address Assignment pool.

17.

Select the Fabric A radio button and check the Enable failover check box for the Fabric ID.

18.

Check the Default and vlan11 check boxes for VLANs and select the vlan11 radio button for Native VLAN.

19.

Select MTU size as 9000.

20.

Select Adapter Policy as Linux.

21.

Keep the Dynamic vNIC Connection Policy as <not set>.

22.

Select QoS Policy as Platinum.

23.

Keep the Network Control Policy as Default.

Figure 35 Creating NFS vNIC

24.

Click OK.

25.

Click Add to add another vNIC to the template.

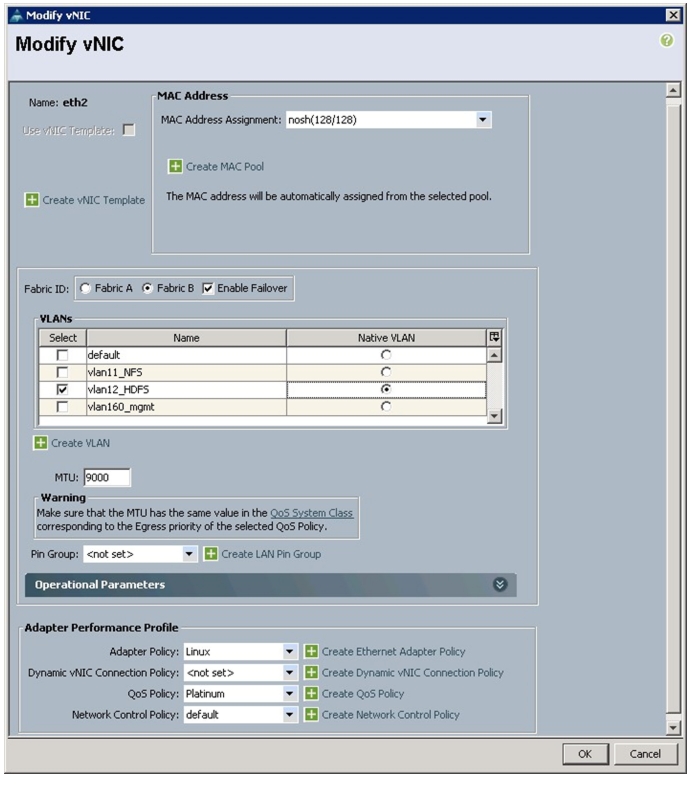

26.

The Create vNIC window appears. Name the vNIC as eth2.

27.

Select nosh for the MAC Address Assignment pool.

28.

Select the Fabric B radio button and check the Enable failover check box for the Fabric ID.

29.

Check the vlan12_HDFS check box for VLANs and select the Native VLAN radio button.

30.

Select MTU size as 9000.

31.

Select adapter policy as Linux.

32.

Keep the Dynamic vNIC Connection Policy as <not set>.

33.

Select QoS Policy as Platinum.

34.

Keep the Network Control Policy as Default.

Figure 36 Creating HDFS vNIC

35.

Click OK.

36.

Click Next to continue to the next section.
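After RHEL is installed, you can confirm that the template's MTU settings reached the operating system by reading each interface's MTU from sysfs. The following is a sketch only. It assumes the vNICs enumerate in Linux as eth0, eth1, and eth2, in the order set in the vNIC/vHBA Placement section. The helper names are hypothetical. Whether the Linux MTU follows the vNIC setting automatically depends on the driver, so a mismatch may only mean that MTU=9000 still has to be set in the interface configuration file.

```shell
#!/usr/bin/env bash
# Sketch: compare the MTU Linux reports for each vNIC with the template values.

# MTU per vNIC as set in the service profile template
# (eth0 management, eth1 NFS, eth2 HDFS).
expected_mtu() {
    case $1 in
        eth0) echo 1500 ;;
        eth1|eth2) echo 9000 ;;
        *) return 1 ;;
    esac
}

# check_mtu IFACE EXPECTED [NET_DIR]
# NET_DIR defaults to /sys/class/net; it is a parameter only so the function
# can be tried against a scratch directory. Returns 0 on match, 1 on
# mismatch, 2 if the interface is missing.
check_mtu() {
    local iface=$1 expected=$2 netdir=${3:-/sys/class/net}
    local mtu_file="$netdir/$iface/mtu" actual
    if [[ ! -r $mtu_file ]]; then
        echo "$iface: not found"
        return 2
    fi
    actual=$(<"$mtu_file")
    if [[ $actual == "$expected" ]]; then
        echo "$iface: ok (MTU $actual)"
        return 0
    fi
    echo "$iface: MTU $actual, expected $expected"
    return 1
}

# Check eth0..eth2; returns non-zero if any interface is missing or wrong.
check_all_vnics() {
    local netdir=${1:-/sys/class/net} rc=0 iface
    for iface in eth0 eth1 eth2; do
        check_mtu "$iface" "$(expected_mtu "$iface")" "$netdir" || rc=1
    done
    return "$rc"
}
```

Running check_all_vnics with pssh on every node gives a quick cluster-wide view of any interface that still uses the default MTU.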

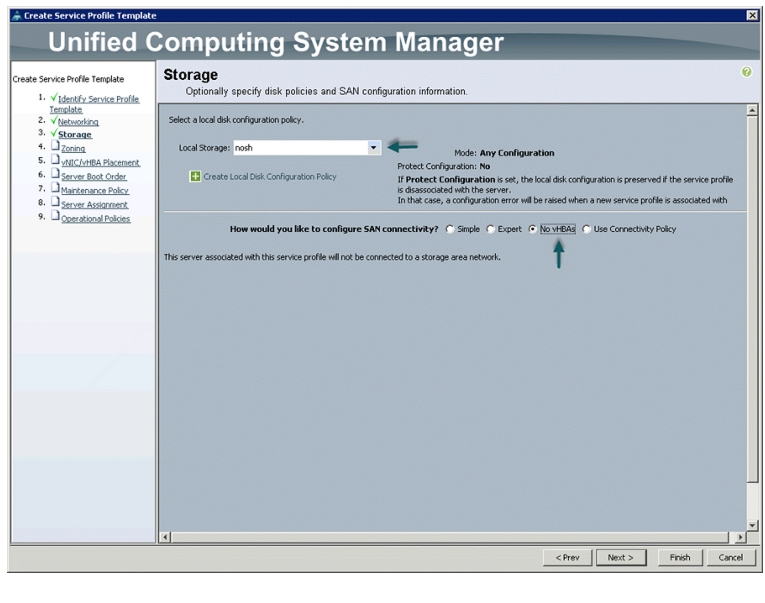

Configuring Storage Policy for the Template

In the Storage window, follow these steps to configure storage:

1.

Select nosh for the local disk configuration policy.

2.

Select the No vHBAs radio button for the option How would you like to configure SAN connectivity?

Figure 37 Storage Settings

3.

Click Next to continue to the next section.

4.

Click Next in the Zoning Window to go to the next section.

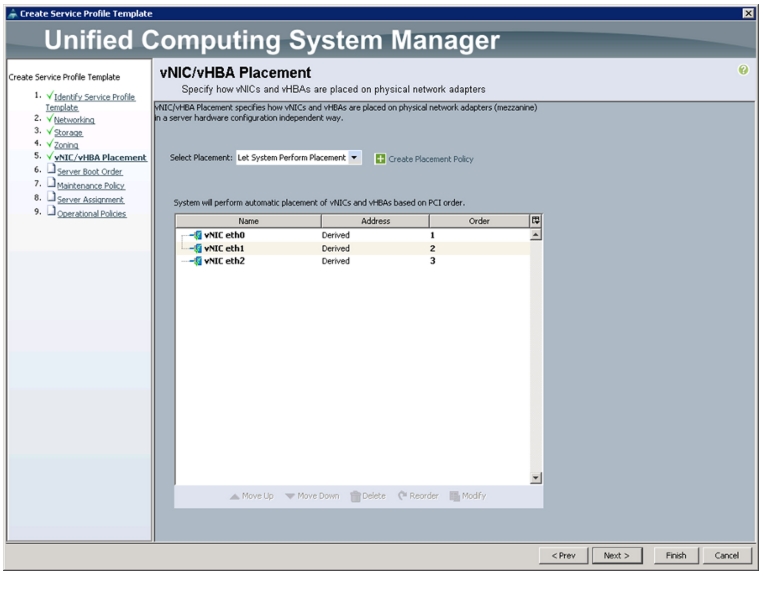

Configuring vNIC/vHBA Placement for the Template

In the vNIC/vHBA Placement Section, follow these steps to configure placement policy:

1.

Select the Default Placement Policy option for Select Placement field.

2.

Select eth0, eth1, and eth2 and assign the vNICs in the following order:

–

eth0

–

eth1

–

eth2

3.

Review the table to make sure that all of the vNICs were assigned in the appropriate order.

Figure 38 Creating vNIC and vHBA Policy

4.

Click Next to continue to the next section.

Configuring Server Boot Order for the Template

In the Server Boot Order Section, follow these steps to set the boot order for servers:

1.

Select nosh for the Boot Policy Name field.

2.

Check the Reboot on Boot Order Change check box.

3.

Check the Enforce vNIC/vHBA/iSCSI Name check box.

4.

Review the table to make sure that all of the boot devices were created and identified. Verify that the boot devices are in the correct boot sequence.

Figure 39 Creating Boot Policy

5.

Click OK.

6.

Click Next to continue to the next section.

Configuring Maintenance Policy for the Template

In the Maintenance Policy window, follow these steps to apply maintenance policy:

1.

Keep the Maintenance Policy at no policy used by default.

2.

Click Next to continue to the next section.

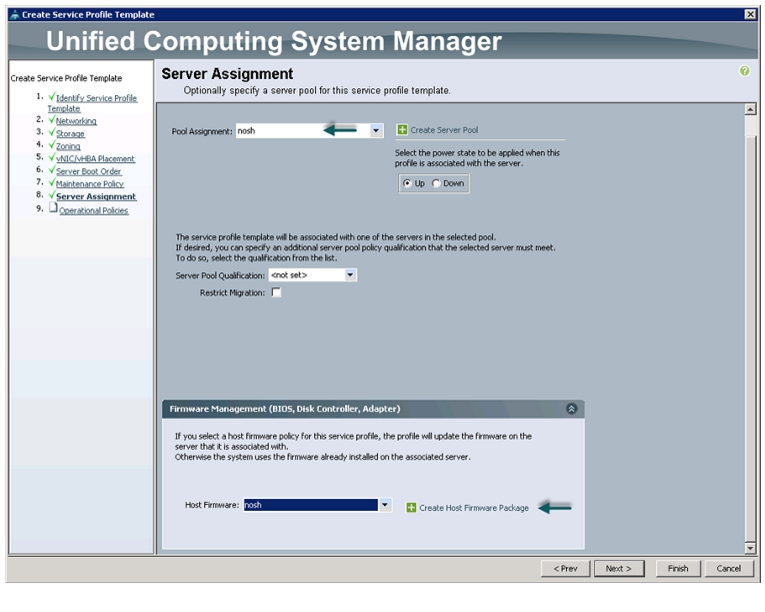

Configuring Server Assignment for the Template

In the Server Assignment window, follow these steps to assign servers to the pool:

1.

Select nosh for the Pool Assignment field.

2.

Keep the Server Pool Qualification field at default.

3.

Select nosh for the Host Firmware Package.

Figure 40 Assigning Server Pool for Service Profile Template

4.

Click Next to continue to the next section.

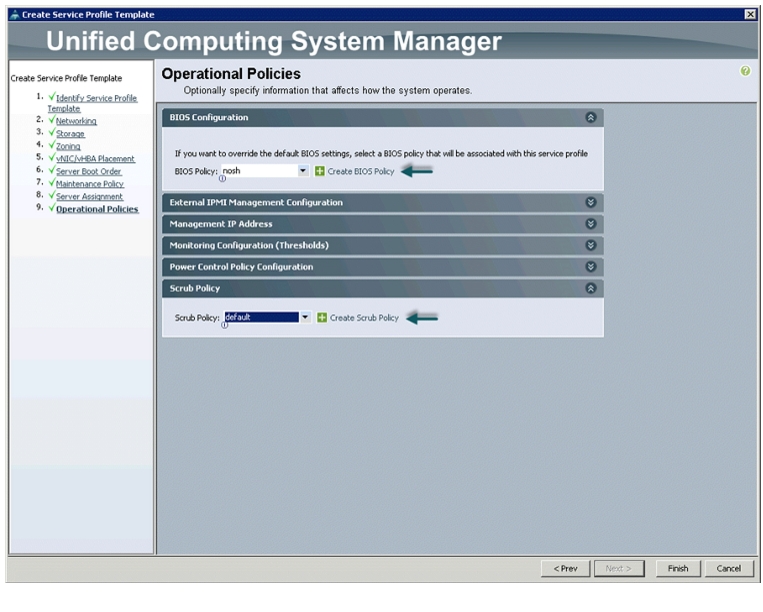

Configuring Operational Policies for the Template

In the Operational Policies window, follow these steps:

1.

Select nosh in the BIOS Policy field.

Figure 41 Creating Operational Policies

2.

Click Finish to create the Service Profile template.

3.

Click OK in the pop-up window to exit the wizard.

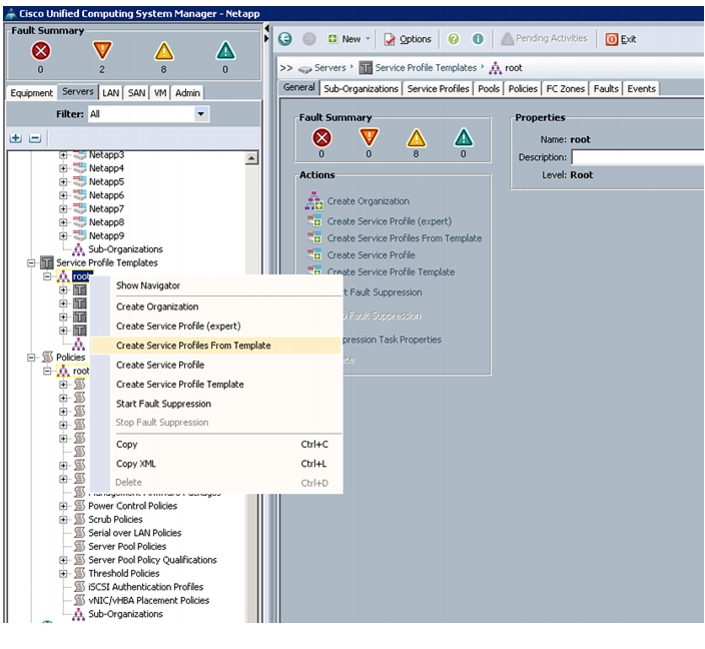

Select the Servers tab in the left pane in the UCSM GUI.

1.

Select Service Profile Templates > root.

2.

Right-click the nosh service profile template under root.

3.

Select Create Service Profiles From Template.

Figure 42 Creating Service Profile

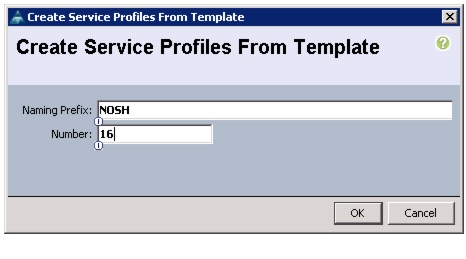

4.

The Create Service Profile from Template window appears.

Figure 43 Creating Service Profile from Template

5.

Now connect the power cable to the servers.

6.

The servers will then be discovered by Cisco UCS Manager.

7.

The service profiles will be associated with the servers automatically.

8.

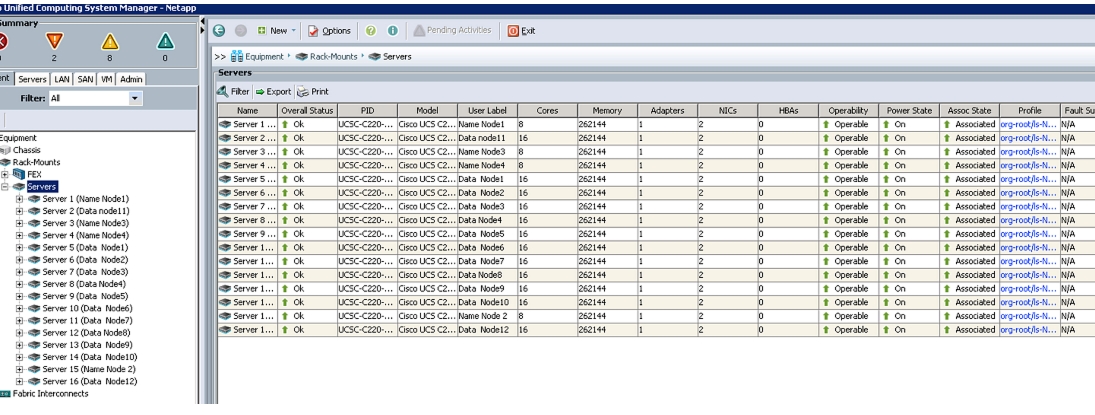

The final Cisco UCS Manager window is shown in Figure 44.

Figure 44 UCS Manager Showing Sixteen Nodes

Cisco UCS 6296UP FI Configuration for NetApp FAS 2220

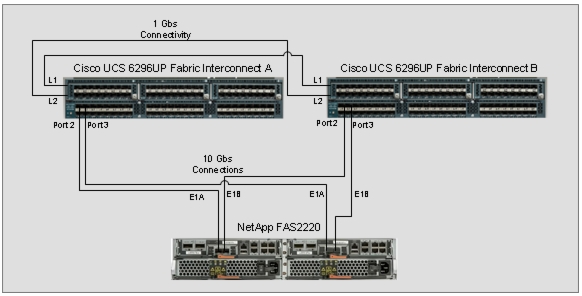

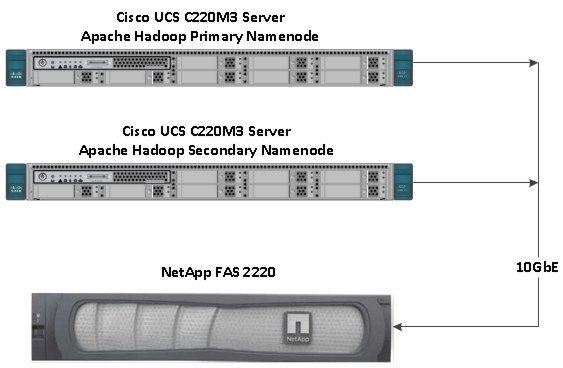

The Cisco UCS 6296UP Fabric Interconnects are deployed in pairs with L1 to L1 and L2 to L2 connectivity for redundancy. The NetApp FAS 2220 has one storage controller. FAS controller port E1A is connected to FI A port 2, and port E1B is connected to FI B port 2. Both FI ports are configured as appliance ports with 10-Gbps connectivity, as shown in Figure 45.

Figure 45 Cisco UCS 6296UP FIs and NetApp FAS 2220 Connectivity

Configuring VLAN for Appliance Port

Follow these steps to configure the VLAN for the appliance cloud:

1.

Select the LAN tab in the left pane in the UCSM GUI.

2.

Select LAN > Appliances > VLANs.

3.

Right-click VLANs under the root organization.

4.

Select Create VLANs to create the VLAN.

Figure 46 Creating VLANs for Appliance Cloud

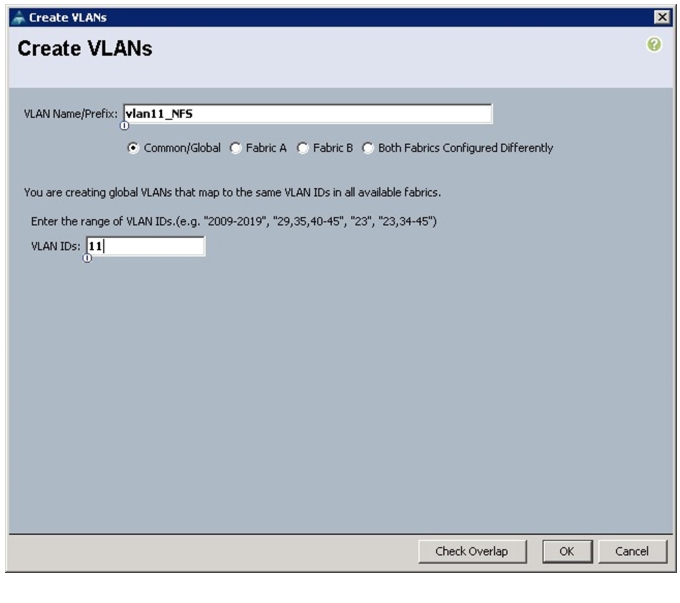

5.

Enter vlan11_NFS for the VLAN Name.

6.

Select the Common/Global radio button.

7.

Enter 11 for VLAN ID.

Figure 47 Creating VLAN for Fabric A

8.

Click OK and then, click Finish.

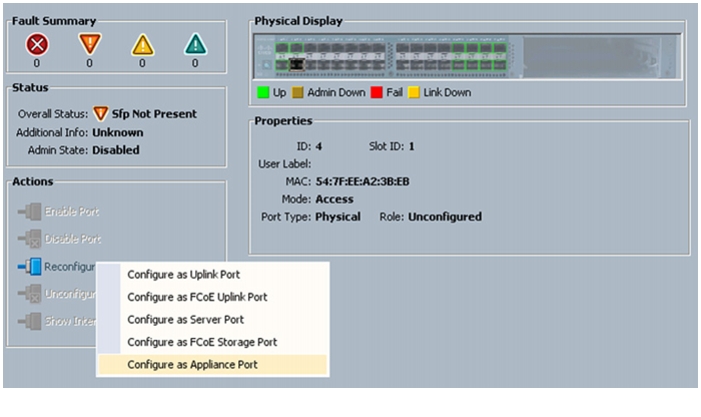

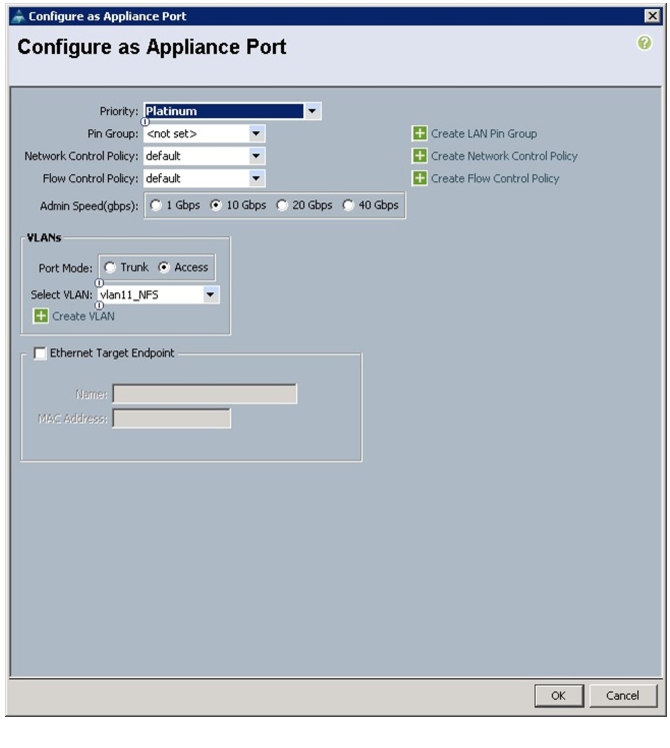

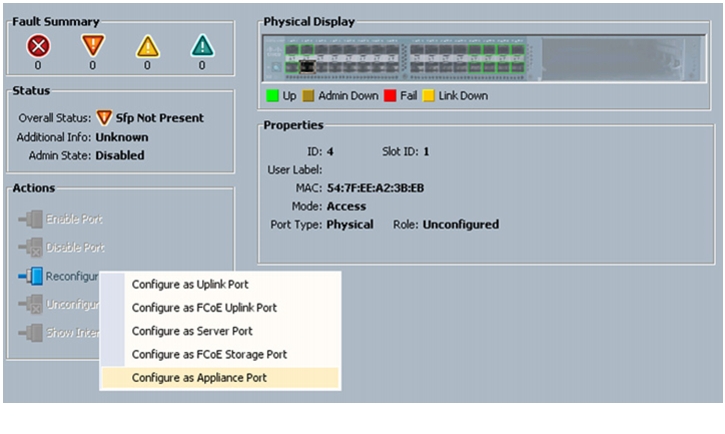

Configure Appliance Port

Follow these steps to configure appliance ports:

1.

Select the Equipment tab in the left pane in the UCSM GUI.

2.

Select Equipment > Fabric Interconnects > Fabric Interconnect A (primary) > Fixed Module.

3.

Expand the Unconfigured Ethernet Ports.

4.

Select the port number 2, and select Reconfigure > Configure as an Appliance Port.

Figure 48 Configuring Fabric A Appliance Port

5.

A confirmation message box appears. Click Yes, then OK to continue.

6.

Select Platinum for the Priority.

7.

Keep the Pin Group as <not set>.

8.

Keep the Network Control Policy as Default.

9.

Keep the Flow Control Policy as Default.

10.

Select the 10Gbps radio button for the Admin Speed.

11.

Select the Access radio button for the Port Mode.

12.

Select vlan11_NFS from the dropdown menu for the Select VLAN.

Figure 49 Configuring Appliance Port

13.

Click OK.

14.

In the message box that appears, click OK.

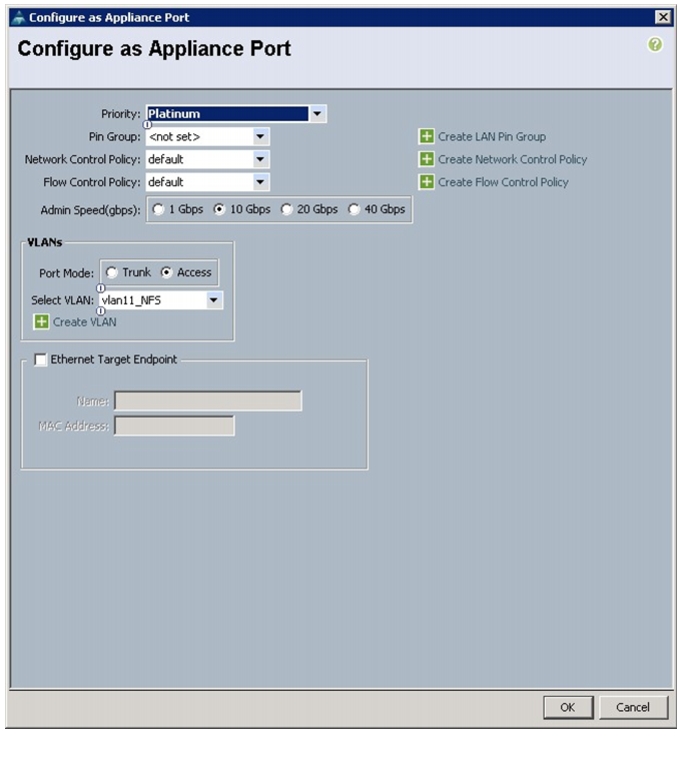

15.

Select Equipment > Fabric Interconnects > Fabric Interconnect B (secondary) > Fixed Module.

16.

Expand the Unconfigured Ethernet Ports.

17.

Select the port number 2, and select Reconfigure > Configure as an Appliance Port.

Figure 50 Configuring Fabric B Appliance Port

18.

A confirmation message box appears. Click Yes, then OK to continue.

19.

Select Platinum for the Priority.

20.

Keep the Pin Group as <not set>.

21.

Keep the Network Control Policy as Default.

22.

Keep the Flow Control Policy as Default.

23.

Select the 10Gbps radio button for the Admin Speed.

24.

Select the Access radio button for the Port Mode.

25.

Select vlan11_NFS from the dropdown menu for the Select VLAN.

Figure 51 Configuring Appliance Port

26.

Click OK.

27.

In the message box that appears, click OK.

Server and Software Configuration

Service profile template creation is explained in the "Fabric Configuration" section.

The following sections provide a detailed configuration procedure of the Cisco UCS C-Series Servers. These steps should be followed precisely because a failure to do so could result in an improper configuration.

Performing Initial Setup of C-Series Servers

These steps provide details for initial setup of the Cisco UCS C-Series Servers. It is important to get the systems to a known state with the appropriate firmware package.

Logging into the Cisco UCS 6200 Fabric Interconnects

To log into the Cisco UCS Manager application through Cisco UCS 6200 Series Fabric Interconnect, follow these steps:

1.

Log in to the Cisco UCS 6200 fabric interconnects and launch the Cisco UCS Manager application.

2.

In the UCSM GUI, select the Servers tab.

3.

Select Servers, right-click on Servers and Open KVM Console.

4.

Navigate to the Actions section and click KVM Console.

Configuring Disk Drives for OS

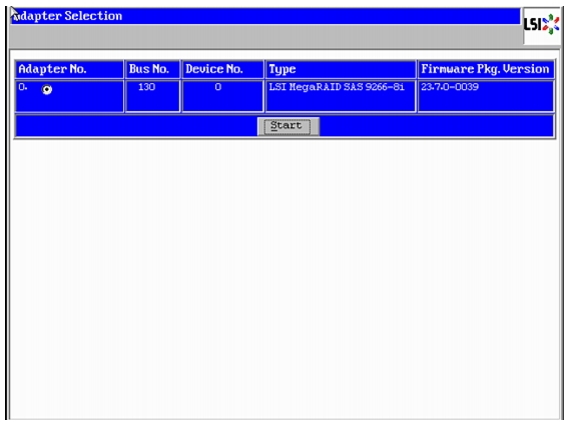

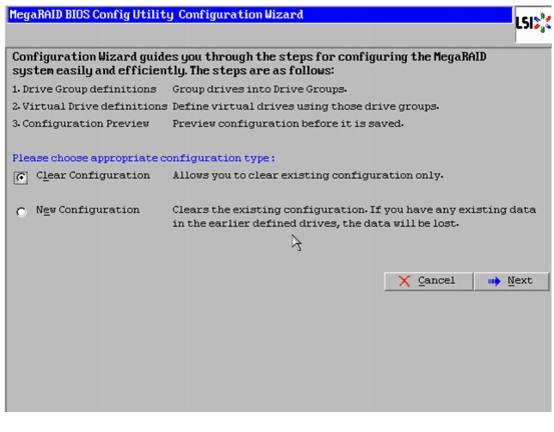

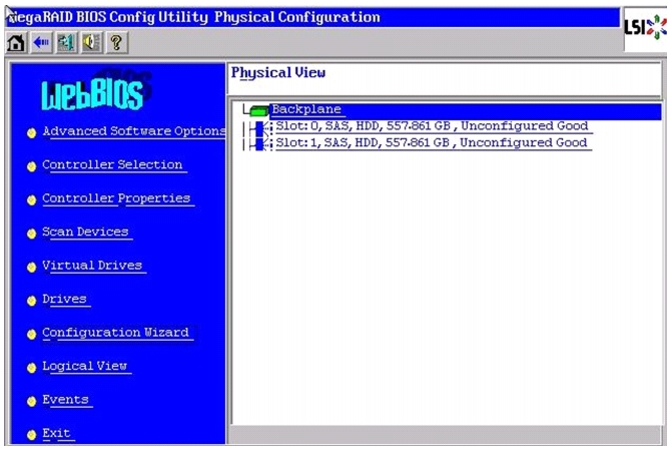

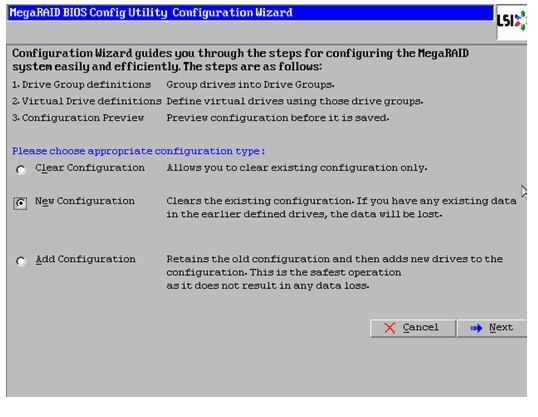

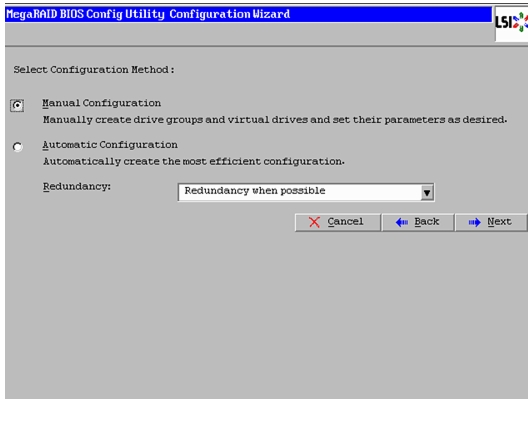

There are several ways to configure RAID: using the LSI WebBIOS Configuration Utility embedded in the MegaRAID BIOS, booting DOS and running MegaCLI commands, or using third-party tools that have MegaCLI integrated. For this deployment, the LSI WebBIOS Configuration Utility is used to configure a RAID1 volume of two disk drives for the operating system:

1.

Once the server has booted and the MegaRAID controller has been detected, the message "Press <Ctrl><H> for WebBIOS" appears on the screen. Press Ctrl+H immediately. The Adapter Selection window appears.

2.

Click Start to continue.

Figure 52 RAID Configuration for LSI MegaRAID SAS Controllers

3.

Click Configuration Wizard.

4.

In the Configuration Wizard window, select the configuration type as Clear Configuration and click Next to clear the existing configuration.

Figure 53 Clearing Existing Configuration

5.

Click Yes when asked to confirm clear configuration.

6.

In the Physical View, make sure all the drives are Unconfigured Good.

Figure 54 Physical View of Unconfigured Drives

7.

Click Configuration Wizard.

8.

In the Configuration Wizard window, select the configuration type as New Configuration and click Next.

Figure 55 Selecting New Configuration

9.

Select the configuration method to be Manual Configuration to have control over all attributes of the new storage configuration such as drive groups, virtual drives, and to set their parameters.

Figure 56 Selecting Manual Configuration

10.

Click Next.

11.

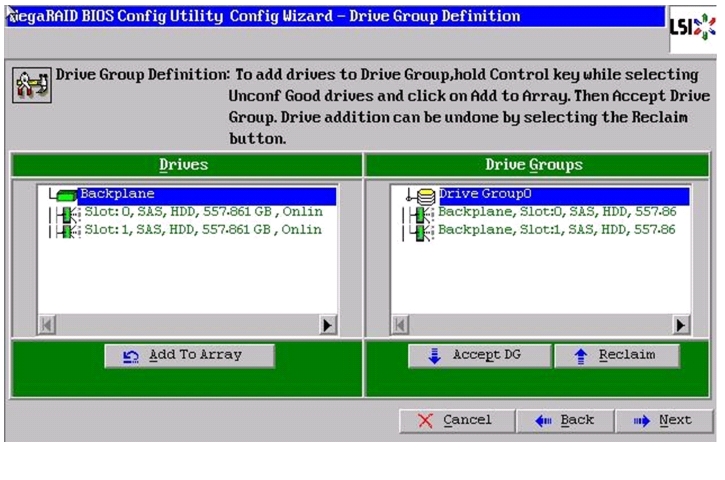

The Drive Group Definition window appears. In this window select the two drives to create drive groups.

12.

Click Add to Array to move the drives to a proposed drive group configuration in the Drive Groups pane. Click Accept DG and then, click Next.

Figure 57 Moving Drives to Drive Groups

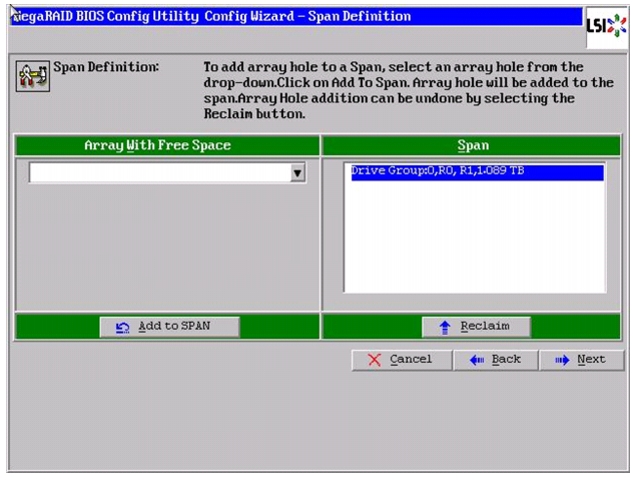

13.

In the Span Definition window, click Add to SPAN and then click Next.

Figure 58 Adding Arrayhole to Span

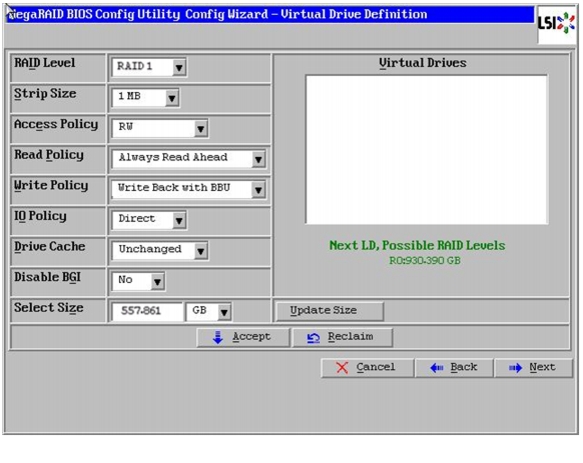

14.

In the Virtual Drive Definition window, follow these steps to configure the Read Normal read policy and the Write Back with BBU write policy:

a.

Change Strip Size to 1MB. A larger strip size produces higher read performance. If your server regularly performs random read requests, select a smaller strip size.

b.

From the Read Policy drop-down list, choose Read Normal.

c.

From the Write Policy drop down list, choose Write Back with BBU.

d.

Make sure the RAID Level is set to RAID1.

Figure 59 Defining Virtual Drive

e.

Click Accept to accept the changes to the virtual drive definitions.

f.

Click Next.

15.

After you finish the virtual drive definitions, click Next. The Configuration Preview window appears.

16.

Review the virtual drive configuration in the Configuration Preview window and click Accept to save the configuration.

17.

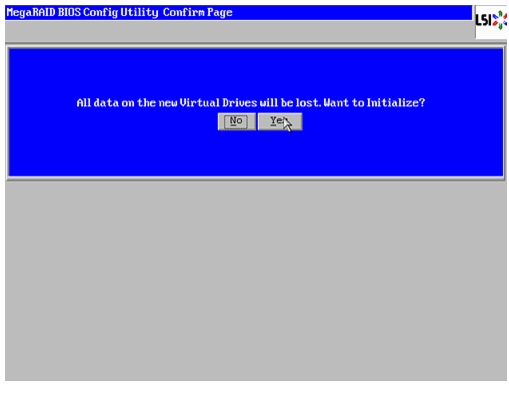

Click Yes to save the configuration.

18.

Click Yes when asked to confirm initialization.

Figure 60 Confirmation to Initialize

19.

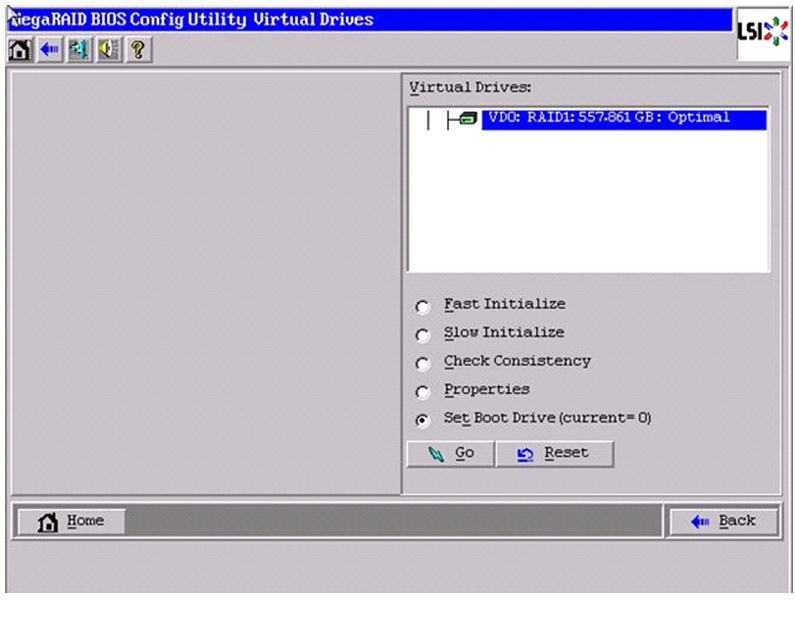

Set VD0 as the Boot Drive and click Go.

Figure 61 Setting Boot Drive

20.

Click Home.

21.

Review the configuration and click Exit.

Installing Red Hat Enterprise Linux Server 6.2 using KVM

One of the options to install RHEL is explained in this section.

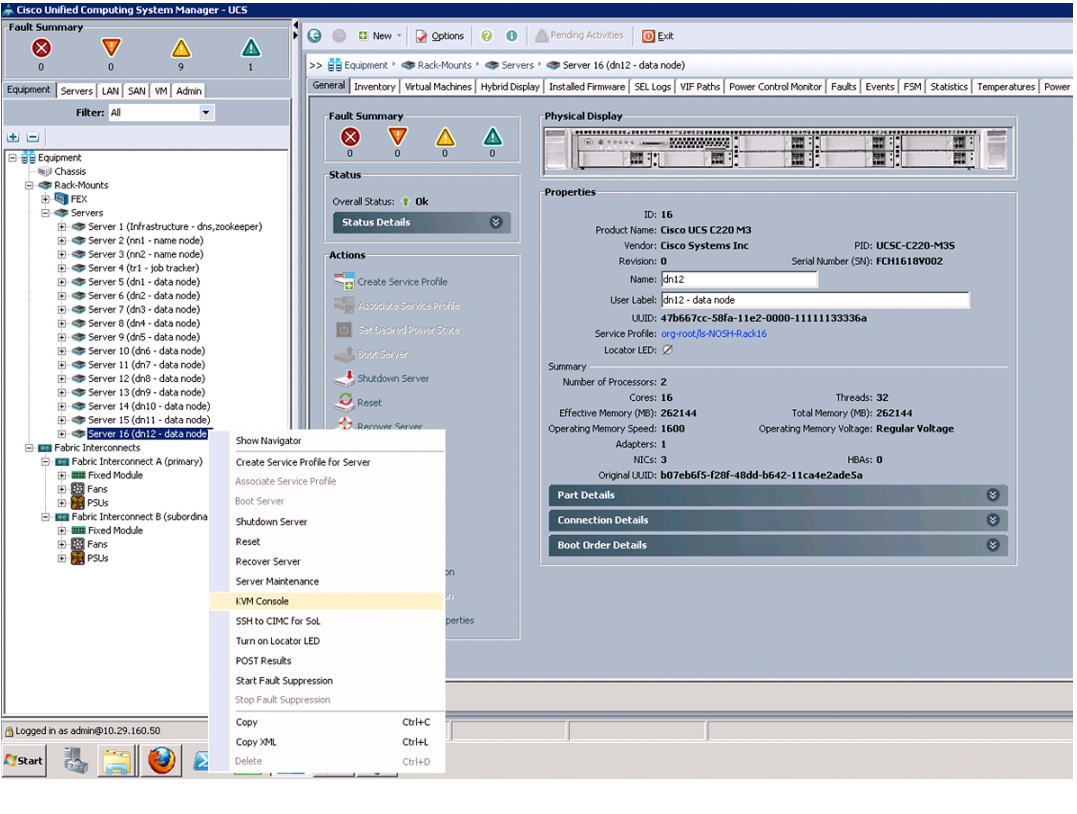

You can install Red Hat Enterprise Linux Server 6.2 using the KVM console of Cisco UCS Manager. To open the KVM console, follow these steps:

1.

Log in to Cisco UCS Manager.

2.

Select the Equipment tab and navigate to the servers.

3.

Right-click on the server and select KVM Console.

Figure 62 Launching KVM Console

To install Red Hat Linux Server 6.2, follow these steps:

1.

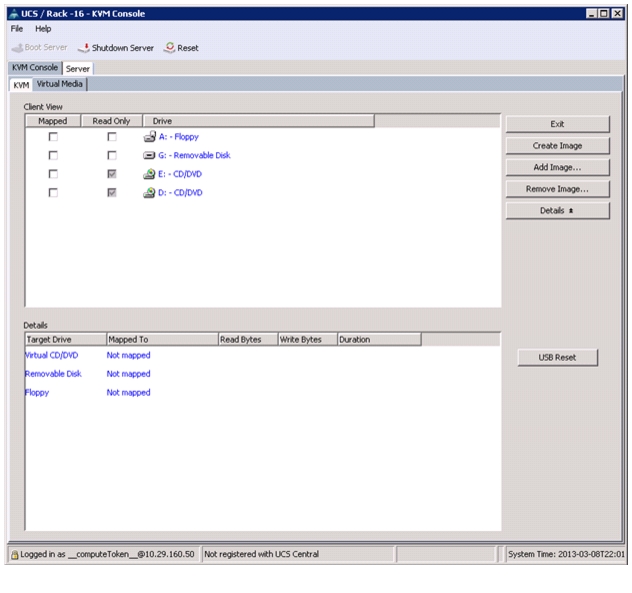

In the KVM window, select the Virtual Media tab.

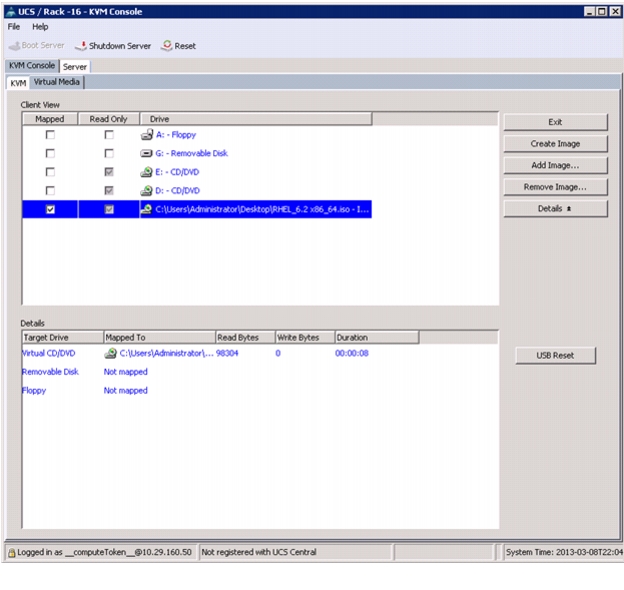

Figure 63 Adding ISO Image

2.

Click Add Image in the window that appears.

3.

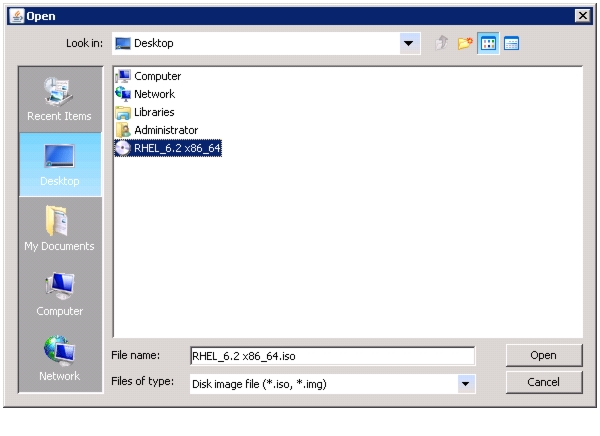

Browse to the Red Hat Enterprise Linux Server 6.2 installer ISO image file.

Note

The Red Hat Enterprise Linux 6.2 DVD is assumed to be on the client machine. If not, create an ISO Image of Red Hat Enterprise Linux DVD using software such as ImgBurn or MagicISO.

Figure 64 Selecting the Red Hat Enterprise Linux ISO Image

4.

Click Open to add the image to the list of virtual media.

5.

Check the check box for Mapped, next to the entry corresponding to the image you just added.

Figure 65 Mapping the ISO Image

6.

In the KVM window, select the KVM tab to monitor during boot.

7.

In the KVM window, select the Boot Server button in the upper left corner.

8.

Click OK.

9.

Click OK to reboot the system.

10.

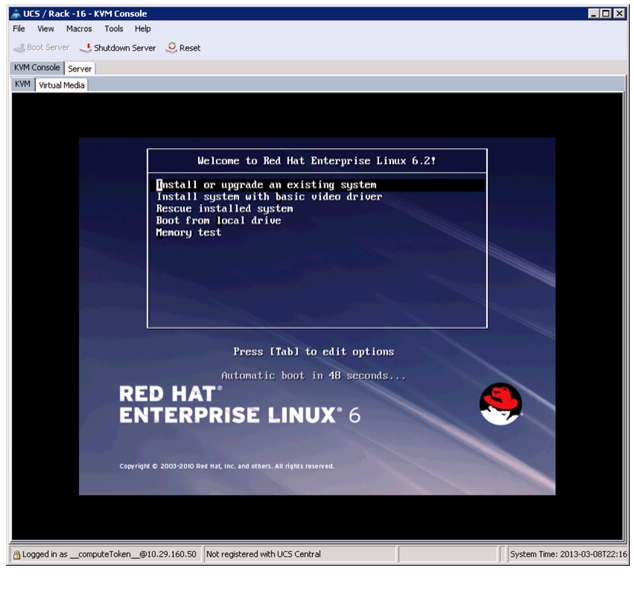

On reboot, the machine detects the presence of the Red Hat Enterprise Linux Server 6.2 install media.

11.

Select the Install or Upgrade an Existing System option.

Figure 66 Selecting the RHEL Installation Option

12.

Skip the media test, because the installation is from an ISO image, and click Next to continue.

13.

Select Language for the Installation and click Next.

14.

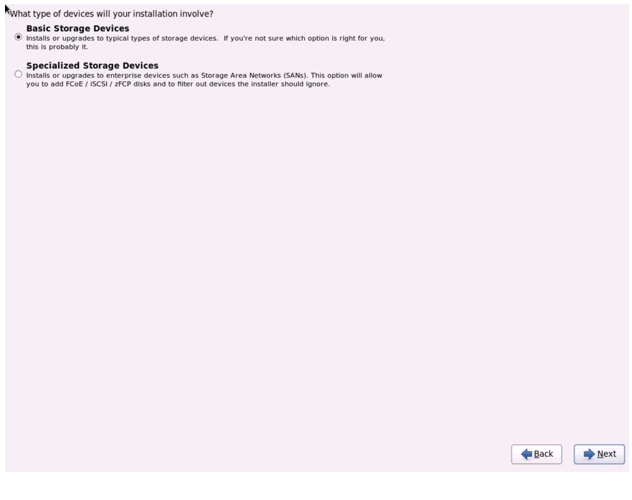

Select Basic Storage Devices and click Next.

Figure 67 Selecting Storage Device Type

15.

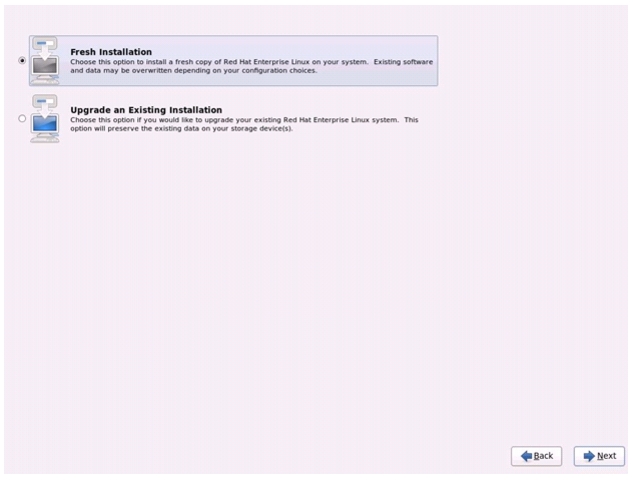

Select Fresh Installation and click Next.

Figure 68 Selecting Installation Type

16.

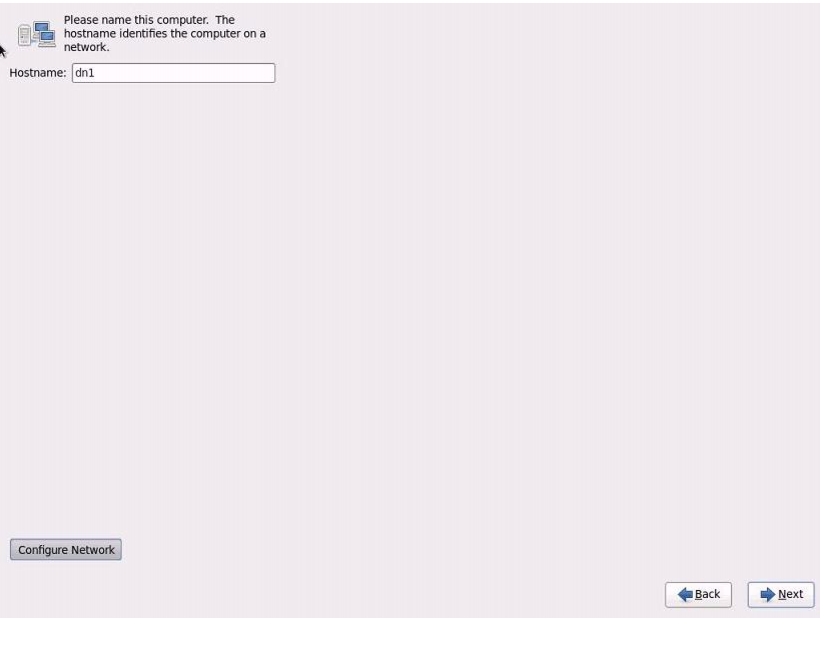

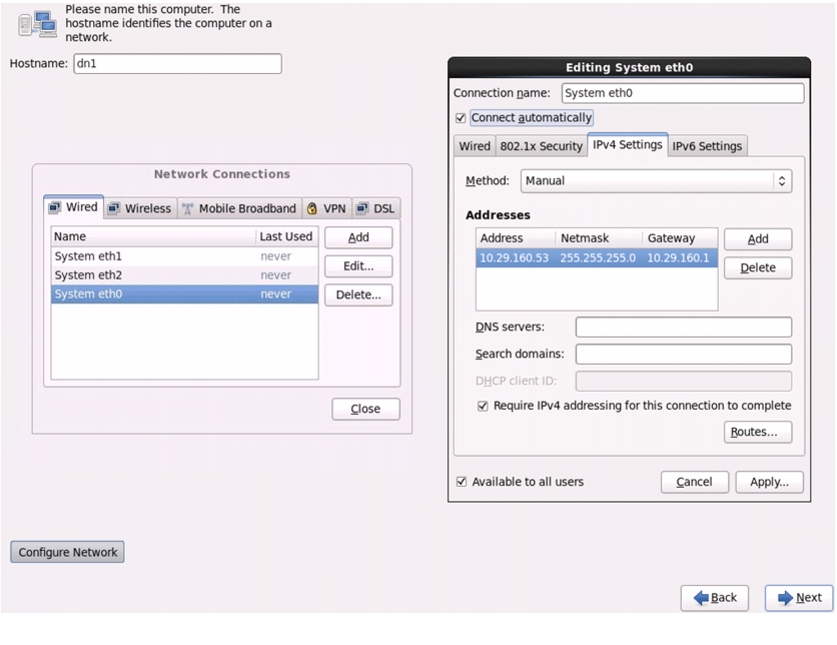

Enter the Host name of the server and click Configure Network.

Figure 69 Entering the Host Name

17.

The Network Connections window appears.

18.

In the Network Connections window, select the Wired tab.

19.

Select the interface System eth0, and click Edit.

20.

The Editing System eth0 window appears.

21.

Check the Connect automatically check box.

22.

For the field Method, select Manual from the drop down list.

23.

Click Add and enter IP Address, Netmask and Gateway.

24.

Click Apply.

Figure 70 Configuring Network Connections

25.

Select the appropriate time zone and click Next.

26.

Enter the root Password and click Next.

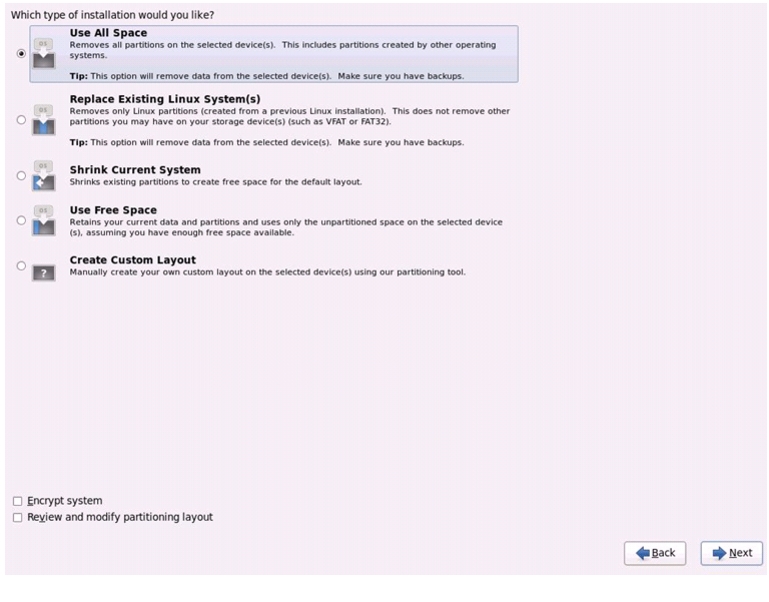

27.

Select Use All Space and click Next.

Figure 71 Selecting RHEL Install Type

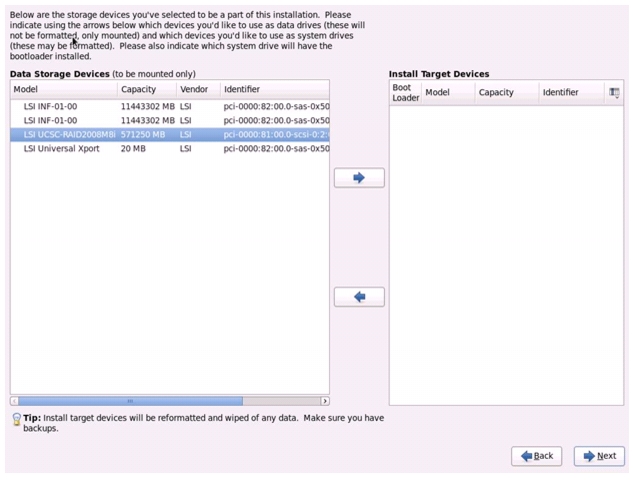

28.

Select the appropriate boot device. In this example, LSI UCSC-RAID 2008M-8i is selected. Click the arrow button to move the selected boot device to the Install Target Devices pane on the right, and then click Next.

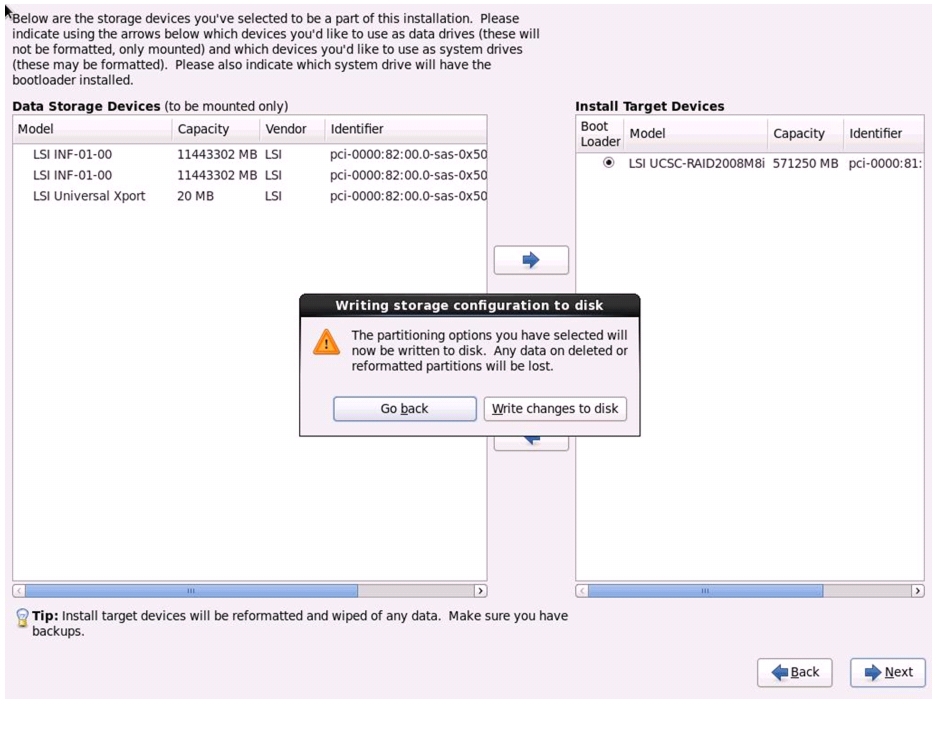

Figure 72 Selecting the Data Storage Device

29.

Click Write changes to disk, and then click Next.

Figure 73 Writing Partitioning Options into the Disk

30.

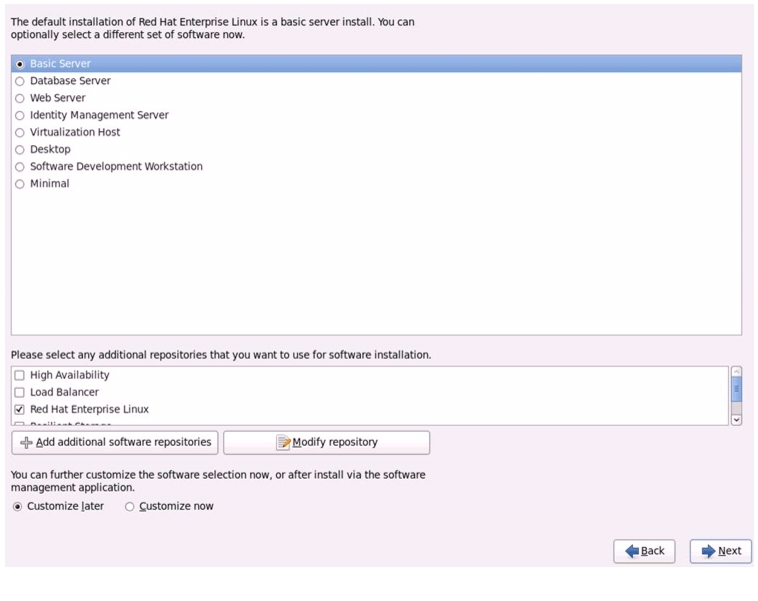

Select Basic Server Installation and click Next.

Figure 74 Selecting RHEL Installation Option

31.

After the installer is finished loading, press Enter to continue with the install.

Figure 75 Installation Process in Progress
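The installation steps above configure only eth0. The NFS (eth1) and HDFS (eth2) interfaces can be configured after installation with RHEL 6 ifcfg files. The sketch below writes one such file per call. write_ifcfg is a hypothetical helper. The host addresses and 255.255.255.0 netmasks in the example are placeholders; this document gives only the 10.29.160.1 gateway and the 10.29.160.x and 192.168.11.x subnets. NM_CONTROLLED=no is set because NetworkManager is disabled on all nodes later in this document.

```shell
#!/usr/bin/env bash
# Sketch: write a static RHEL 6 interface file (ifcfg-DEV).
# write_ifcfg DEV IPADDR NETMASK MTU [GATEWAY] [DIR]
# DIR defaults to /etc/sysconfig/network-scripts.
write_ifcfg() {
    local dev=$1 ip=$2 mask=$3 mtu=$4 gw=${5:-}
    local dir=${6:-/etc/sysconfig/network-scripts}
    {
        echo "DEVICE=$dev"
        echo "BOOTPROTO=none"
        echo "ONBOOT=yes"
        # NetworkManager is disabled later, so let the network service manage it.
        echo "NM_CONTROLLED=no"
        echo "IPADDR=$ip"
        echo "NETMASK=$mask"
        # Should match the vNIC MTU in the service profile template.
        echo "MTU=$mtu"
        # Only pass a gateway for the management interface (eth0).
        if [[ -n $gw ]]; then
            echo "GATEWAY=$gw"
        fi
    } > "$dir/ifcfg-$dev"
}

# Example (placeholder addresses and netmasks):
#   write_ifcfg eth0 10.29.160.57 255.255.255.0 1500 10.29.160.1
#   write_ifcfg eth1 192.168.11.57 255.255.255.0 9000
#   service network restart
```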

Post OS Install Configuration

Infrastructure Node

This section describes the steps needed to implement an infrastructure node for the cluster. The infrastructure node may provide some or all of the following services to the namenodes and datanodes in the cluster:

•

NTP - time server

•

DNS resolution for cluster-private hostnames

•

DHCP IP address assignment for cluster-private NICs

•

Local mirror of one or more software repositories and/or distributions

•

Management server

Note

This section assumes that only the default basic server install has been done.

Installing and Configuring Parallel Shell

Parallel-SSH

Parallel SSH is used to run commands on several hosts at the same time. It takes a file of host names and a set of common SSH options as arguments, and executes the given command in parallel on the listed nodes.
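To make the idea concrete, here is a minimal sketch of what pssh does conceptually. It reads a hosts file, skips blank and comment lines, starts the command once per host in the background, and waits for all of the jobs. It is not pssh itself, and run_on_all and RUNNER are hypothetical names for illustration. pssh also provides per-host timeouts, a parallelism limit, and output options, and pssh is the tool the rest of this document uses.

```shell
#!/usr/bin/env bash
# Sketch of the idea behind pssh (not pssh itself): run one command on every
# host listed in a hosts file, in parallel, and report overall success.

# Per-host transport; ssh by default. It is a variable only so the sketch can
# be tried without real hosts (hypothetical, not a pssh setting).
RUNNER=${RUNNER:-ssh}

# run_on_all HOSTS_FILE COMMAND [ARGS...]
run_on_all() {
    local hosts_file=$1
    shift
    local host pids=() rc=0 pid
    while read -r host _; do
        # Skip blank lines and comment lines such as the header of pssh.hosts.
        [[ -z $host || $host == \#* ]] && continue
        # One background job per host. stdin comes from /dev/null so the remote
        # command cannot consume the hosts file; each output line is prefixed
        # with its host, and pipefail keeps the runner's exit status.
        ( set -o pipefail
          "$RUNNER" "$host" "$@" < /dev/null 2>&1 | sed "s/^/[$host] /" ) &
        pids+=("$!")
    done < "$hosts_file"
    # Wait for every job; any failure makes the whole run fail.
    for pid in "${pids[@]}"; do
        wait "$pid" || rc=1
    done
    return "$rc"
}
```

Unlike pssh, this sketch starts every host at once with no timeout, which is why a real tool is preferable for a 16-node cluster.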

The tool can be downloaded from https://code.google.com/p/parallel-ssh/

Fetch and install this tool via the following commands:

cd /tmp/
curl https://parallel-ssh.googlecode.com/files/pssh-2.3.1.tar.gz -O -L
tar xzf pssh-2.3.1.tar.gz
cd pssh-2.3.1
python setup.py install

To make use of pssh, you need to create a file containing only the IP addresses of the nodes in the cluster. The following was used for the contents of the /root/pssh.hosts file on all of the nodes and will need to be customized to fit your implementation:

# /root/pssh.hosts - cluster node IPs or names
10.29.160.53
10.29.160.54
10.29.160.55
10.29.160.56
10.29.160.57
10.29.160.58
10.29.160.59
10.29.160.60
10.29.160.61
10.29.160.62
10.29.160.63
10.29.160.64
10.29.160.65
10.29.160.66
10.29.160.67
10.29.160.68

This file is used with pssh by specifying the -h option on the command line. For example, the following command will execute the hostname command on all of the nodes listed in the /root/pssh.hosts file:

pssh -h /root/pssh.hosts -A hostname

For information on the -A option and other pssh options, use one or both of the following commands:

pssh --help
man pssh

Create Local Redhat Repo

If your infrastructure node and your cluster nodes have Internet access, you may be able to skip this section.

To create a repository from the RHEL DVD or ISO on the infrastructure node (in this deployment, 10.29.160.53 is the infrastructure node), create a directory with all the required RPMs, run the createrepo command, and then publish the resulting repository.

1.

Create the directories where the local copies of the software installation packages will reside. In this example, they are created under the /var/www/html/ directory.

mkdir -p /var/www/html/Cloudera/
mkdir -p /var/www/html/JDK/
mkdir -p /var/www/html/RHEL/6.2/

2.

Then mount the RHEL DVD. This can be done by loading the DVD disc into a DVD drive attached to the server or by mounting the .iso image as in this example.

mount /rhel-server-6.2-x86_64-dvd.iso /mnt -t iso9660 -o ro,loop=/dev/loop1

3.

Next, copy the contents of the DVD to the /var/www/html/RHEL/6.2/ directory and then verify that the contents copied match their source.

(cd /mnt/; tar -c -p -b 128 -f - .) | (cd /var/www/html/RHEL/6.2/; tar -x -p -b 128 -f -)
diff -r /mnt/ /var/www/html/RHEL/6.2/

4.

Now create a .repo file for the yum command.

cat > /var/www/html/RHEL/6.2/rhel62copy.repo << 'EOF'
[rhel6.2]
name=Red Hat Enterprise Linux 6.2
baseurl=http://10.29.160.53/RHEL/6.2/
gpgcheck=0
enabled=1
EOF

Note

Based on this repo file yum requires httpd to be running on the infrastructure node for the other nodes to access the repository. Steps to install and configure httpd are in the following section.

5.

Copy rhel62copy.repo to all the nodes of the cluster.

pscp -h /root/pssh.hosts \
/var/www/html/RHEL/6.2/rhel62copy.repo /etc/yum.repos.d/

6.

Creating the Red Hat Repository Database.

Install the createrepo package. Use it to regenerate the repository database(s) for the local copy of the RHEL DVD contents. Then purge the yum caches.

yum -y install createrepo
cd /var/www/html/RHEL/6.2/
createrepo .
yum clean all

7.

Update Yum on all nodes.

pssh -h /root/pssh.hosts "yum clean all"

Install Required Packages

This section assumes that only the default basic server install has been done.

Table 5 provides a list of packages that are required.

Table 5 Required list of packages

| Package | Description |
|---|---|
| xfsprogs | Utilities for managing XFS filesystem |
| jdk | Java SE Development Kit 6, Update 39 (JDK 6u39) or more recent |
| Utilities | dnsmasq, httpd, lynx |

Note

Installation of Java and JDK is detailed in a separate section.

Create the following script install_packages.sh to install required packages:

Script install_packages.sh

#!/bin/bash
yum -y install dnsmasq httpd lynx
# get and install xfsprogs from local repo on Infrastructure node
cd /tmp/
curl http://10.29.160.53/RHEL/6.2/Packages/xfsprogs-3.1.1-6.el6.x86_64.rpm -O -L
rpm -i /tmp/xfsprogs-3.1.1-6.el6.x86_64.rpm

Copy script install_packages.sh to all nodes and run the script on all nodes:

chmod +x /root/install_packages.sh
pscp -h /root/pssh.hosts /root/install_packages.sh /root/
pssh -h /root/pssh.hosts "/root/install_packages.sh"

Disable SELinux

Execute the following commands to disable SELinux on all the nodes:

pssh -h /root/pssh.hosts "setenforce 0"
pssh -h /root/pssh.hosts "sed -i -e 's/=enforcing/=disabled/g;' \
/etc/selinux/config"

Disable Unwanted Services

Execute the following commands as a script to disable and turn off unwanted services on all nodes:

Script disable_services.sh

$ cat disable_services.sh
#!/bin/bash
# disable/shut down services we do not need
for X in bluetooth certmonger cgconfig cgred cpuspeed cups dnsmasq \
ebtables fcoe fcoe-target ip6tables iptables iscsi iscsid ksm ksmtuned \
libvirt-guests libvirtd postfix psacct qpidd rhnsd rhsmcertd \
sendmail smartd virt-who vsftpd winbind wpa_supplicant ypbind NetworkManager
do
    /sbin/service $X stop
    /sbin/chkconfig $X off
done

Copy script disable_services.sh to all nodes and run the script on all nodes:

chmod +x /root/disable_services.sh
pscp -h /root/pssh.hosts /root/disable_services.sh /root/
pssh -h /root/pssh.hosts "/root/disable_services.sh"

Enable and start the httpd service

Before starting the httpd service, you may need to edit the server configuration file (/etc/httpd/conf/httpd.conf) to change one or more of the following settings:

•

Listen

•

ServerName

•

ExtendedStatus

•

server-status
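Listen and ServerName belong in httpd.conf itself. For the two status settings, one option is a drop-in file under /etc/httpd/conf.d/, which the stock RHEL 6 httpd.conf includes. The sketch below assumes Apache 2.2 access-control syntax, which matches the httpd version shipped with RHEL 6. It uses 10.29.160.0/24 only as an example management subnet. write_status_conf is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Sketch: enable mod_status for the repository web server via a conf.d drop-in.
# Apache 2.2 access-control syntax (Order/Deny/Allow), as used by RHEL 6 httpd.
# write_status_conf ALLOWED_NET [OUTFILE]
write_status_conf() {
    local allowed=$1 out=${2:-/etc/httpd/conf.d/server-status.conf}
    cat > "$out" <<EOF
# Report per-request detail on the status page
ExtendedStatus On
# Serve the status page only to the allowed network
<Location /server-status>
    SetHandler server-status
    Order deny,allow
    Deny from all
    Allow from $allowed
</Location>
EOF
}

# Example (10.29.160.0/24 is an assumed management subnet):
#   write_status_conf 10.29.160.0/24
#   service httpd configtest
```

Running service httpd configtest before service httpd start catches syntax errors in the drop-in without taking the repository offline.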

Ensure that httpd is able to read the repo files:

chcon -R -t httpd_sys_content_t /var/www/html/RHEL/6.2/

Perform the following commands to enable and start the httpd service:

chkconfig httpd on
service httpd start

JDK Installation

Download Java SE 6 Development Kit (JDK)

Using a web browser, click on the following link:

http://www.oracle.com/technetwork/java/index.html

and download the latest Java™ SE 6 Development Kit (JDK™6).

Once the JDK6 package has been downloaded, place it in the /var/www/html/JDK/ directory.

Install JDK6 on All Nodes

Create the following script install_jdk.sh to install JDK:

Script install_jdk.sh

#!/bin/bash
# Copy and install JDK
cd /tmp/
curl http://10.29.160.53/JDK/jdk-6u41-linux-x64.bin -O -L
sh ./jdk-6u41-linux-x64.bin -noregister

Copy script install_jdk.sh to all nodes and run the script on all nodes:

chmod +x /root/install_jdk.sh
pscp -h /root/pssh.hosts /root/install_jdk.sh /root/
pssh -h /root/pssh.hosts "/root/install_jdk.sh"

Local Cloudera Software Repos

This section deals with making local mirrors of the Cloudera repositories for:

•

Cloudera Manager, version 4.x (cm4)

•

Cloudera Enterprise Core, version 4.x (CDH4)

•

Cloudera Impala - beta, version 0.x (impala)

These instructions deal with mirroring the latest releases only.

Note

The Hadoop distribution vendor (Cloudera) may change the layout or accessibility of their repositories. It is possible that any such changes could render one or more of these instructions invalid. For more information on Cloudera, see: http://www.cloudera.com/

Cloudera Manager Repo

The following commands will mirror the latest release of Cloudera Manager, version 4.x:

cd /var/www/html/Cloudera/
curl http://archive.cloudera.com/cm4/redhat/6/x86_64/cm/cloudera-manager.repo -O -L
reposync --config=./cloudera-manager.repo --repoid=cloudera-manager
createrepo --baseurl http://10.29.160.53/Cloudera/cloudera-manager/ \
${PWD}/cloudera-manager

Cloudera Manager Installer

The following commands will mirror the latest release of the Cloudera Manager Installer utility:

cd /var/www/html/Cloudera/cloudera-manager/
curl http://archive.cloudera.com/cm4/installer/latest/cloudera-manager-installer.bin -O -L
chmod uog+rx cloudera-manager-installer.bin

Cloudera Enterprise Core Repo

The following commands will mirror the latest release of Cloudera Enterprise Core, version 4.x:

cd /var/www/html/Cloudera/
curl http://archive.cloudera.com/cdh4/redhat/6/x86_64/cdh/cloudera-cdh4.repo -O -L
reposync --config=./cloudera-cdh4.repo --repoid=cloudera-cdh4
createrepo --baseurl http://10.29.160.53/Cloudera/cloudera-cdh4 ${PWD}/cloudera-cdh4

Next, create a .repo file that points the nodes at the local Cloudera Manager mirror. The following commands create the file and install it in /etc/yum.repos.d/:

cat > cloudera-manager/cloudera-manager.repo << 'EOF'
[cloudera-manager]
name=Cloudera Manager
baseurl=http://10.29.160.53/Cloudera/cloudera-manager/
gpgcheck=0
enabled=1
priority=1
EOF
chmod uog-wx cloudera-manager/cloudera-manager.repo
cp -pv cloudera-manager/cloudera-manager.repo /etc/yum.repos.d/

Cloudera Impala Repo

The following commands will mirror the latest release of Cloudera Impala - beta, version 0.x:

cd /var/www/html/Cloudera/
curl http://beta.cloudera.com/impala/redhat/6/x86_64/impala/cloudera-impala.repo -O -L
reposync --config=./cloudera-impala.repo --repoid=cloudera-impala
createrepo --baseurl http://10.29.160.53/Cloudera/cloudera-impala \
${PWD}/cloudera-impala

At this point, the Cloudera repositories for CDH4, CM, Impala and the CM Installer should be mirrored locally on the infrastructure node.

For more information, refer to the Cloudera Installation Guide.
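The commands above create a .repo file only for the Cloudera Manager mirror. If the nodes should also install from the local CDH4 and Impala mirrors, you can apply the same pattern to those mirrors. The sketch below is one way to do that. It assumes that each mirror's repo ID matches its directory name under /var/www/html/Cloudera/, as the reposync --repoid values above suggest. write_local_repo and MIRROR_BASE are hypothetical names. The priority=1 line is copied from the cloudera-manager.repo file and has an effect only if the yum priorities plugin is installed.

```shell
#!/usr/bin/env bash
# Sketch: write a yum .repo file for one of the local Cloudera mirrors served
# by httpd on the infrastructure node. Assumes the repo ID equals the mirror's
# directory name under /var/www/html/Cloudera/.
MIRROR_BASE=${MIRROR_BASE:-http://10.29.160.53/Cloudera}

# write_local_repo REPO_ID NAME [OUTDIR]
write_local_repo() {
    local id=$1 name=$2 outdir=${3:-/etc/yum.repos.d}
    # Same fields as cloudera-manager.repo; priority only takes effect
    # if the yum priorities plugin is installed.
    cat > "$outdir/$id.repo" <<EOF
[$id]
name=$name
baseurl=$MIRROR_BASE/$id/
gpgcheck=0
enabled=1
priority=1
EOF
}

# Example:
#   write_local_repo cloudera-cdh4 "Cloudera CDH4"
#   write_local_repo cloudera-impala "Cloudera Impala"
#   pscp -h /root/pssh.hosts /etc/yum.repos.d/cloudera-cdh4.repo /etc/yum.repos.d/
```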

Services to Configure On Infrastructure Node

The following services are optional, but you may also want to configure them on the infrastructure node.

DHCP for Cluster Private Interfaces

If DHCP service is needed, it can be provided by one of the following services:

•

dnsmasq

•

dhcp
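If you choose dnsmasq, note that disable_services.sh stops and disables dnsmasq on every host in /root/pssh.hosts. That includes the infrastructure node (10.29.160.53), so dnsmasq must be re-enabled there with chkconfig dnsmasq on and service dnsmasq restart. A minimal DHCP configuration might look like the sketch below. The address range is a placeholder, because this document does not list cluster-private address pools. write_dnsmasq_dhcp is a hypothetical helper, and the default output path assumes conf-dir=/etc/dnsmasq.d is enabled in /etc/dnsmasq.conf.

```shell
#!/usr/bin/env bash
# Sketch: dnsmasq DHCP settings for one cluster-private subnet.
# write_dnsmasq_dhcp RANGE_START RANGE_END NETMASK [OUTFILE]
# The default OUTFILE is read only if conf-dir=/etc/dnsmasq.d is enabled.
write_dnsmasq_dhcp() {
    local start=$1 end=$2 mask=$3 out=${4:-/etc/dnsmasq.d/cluster-dhcp.conf}
    cat > "$out" <<EOF
# dnsmasq hands out leases only on subnets covered by a dhcp-range, so the
# 10.29.160.0/24 management network is not affected by this range.
dhcp-range=$start,$end,$mask,12h
# Domain handed to DHCP clients; matches /etc/resolv.conf.
domain=hadoop.local
EOF
}

# Example (placeholder range on an assumed 192.168.12.0/24 subnet):
#   write_dnsmasq_dhcp 192.168.12.100 192.168.12.200 255.255.255.0
#   chkconfig dnsmasq on; service dnsmasq restart
```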

DNS for Cluster Private Interfaces

Hostname resolution for cluster private interfaces may be provided by one or more of the following mechanisms on the infrastructure node:

•

/etc/hosts file propagated to all nodes in the cluster

•

dnsmasq

•

bind

The configuration described in this document used both the /etc/hosts file and the dnsmasq service to provide DNS services. The FAS2220 is the main user of the DNS service in this configuration.
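By default, dnsmasq answers queries from the infrastructure node's own /etc/hosts, so most of the DNS work here is keeping that file accurate. The datanode entries in the /etc/hosts file listed below follow a fixed pattern: datanode N is at 10.29.160.(56+N). Because of that pattern, those entries can be generated instead of typed. The sketch below reproduces those lines. gen_datanode_hosts is a hypothetical helper, and the pattern is inferred from the listing rather than stated in the original.

```shell
#!/usr/bin/env bash
# Sketch: regenerate the datanode lines of the CISCO eth0 (VLAN160) block of
# /etc/hosts. Pattern inferred from the listing: datanode N is 10.29.160.(56+N),
# and the zero-padded dnNN-0 alias appears only when it differs from dnN-0.
# gen_datanode_hosts FIRST LAST
gen_datanode_hosts() {
    local first=$1 last=$2 n short padded line
    for (( n = first; n <= last; n++ )); do
        short="dn${n}-0"
        padded=$(printf 'dn%02d-0' "$n")
        line="10.29.160.$(( 56 + n )) ${short}.hadoop.local ${short} datanode${n}-0 datanode-${n}-0"
        if [[ $padded != "$short" ]]; then
            line+=" $padded"
        fi
        echo "$line"
    done
}

# Example: gen_datanode_hosts 1 12 > /tmp/datanode-hosts.txt
```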

The following was used for the contents of the /etc/resolv.conf file on all of the nodes and will need to be customized to fit your implementation:

domain hadoop.local
search hadoop.local
nameserver 10.29.160.53

Once configured, the /etc/resolv.conf file can be pushed to all nodes via the following command:

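```shell
pscp -h /root/pssh.hosts -A /etc/resolv.conf /etc/resolv.conf
```

Files are copied with pscp, which is installed with the pssh package (as pscp.pssh or parallel-scp on some distributions); pssh itself only runs commands on the remote nodes.

The following was used for the contents of the /etc/nsswitch.conf file on all of the nodes and may need to be customized to fit your implementation: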

```
# /etc/nsswitch.conf - for all nodes
passwd:     files
shadow:     files
group:      files
#hosts:     db files nisplus nis dns
hosts:      files dns
ethers:     files
netmasks:   files
networks:   files
protocols:  files
rpc:        files
services:   files
automount:  files nisplus
aliases:    files nisplus
netgroup:   nisplus
publickey:  nisplus
bootparams: nisplus [NOTFOUND=return] files
```

Once configured, the /etc/nsswitch.conf file can be pushed to all nodes with the following command:

```shell
pscp -h /root/pssh.hosts -A /etc/nsswitch.conf /etc/nsswitch.conf
```

The following was used for the contents of the /etc/hosts file on all of the nodes and will need to be customized to fit your implementation:

```
# /etc/hosts file for all nodes
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4 localhost-stack
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
10.29.160.1 gateway
#
# NTAP FAS2220 unit
# 0.0.0.0 fas2220-e0P
10.29.160.43 fas2220-e0M.hadoop.local fas2220-e0M
#10.29.160.45 fas2220-e0a.hadoop.local fas2220-e0a
# 0.0.0.0 fas2220-e0b
# 0.0.0.0 fas2220-e0
# 0.0.0.0 fas2220-e0d
192.168.11.43 fas2220-e1a.hadoop.local fas2220-e1a
192.168.11.45 fas2220.hadoop.local fas2220-e1b fas2220
#192.168.11.45 vif-a
#
# NTAP E-Series E5460 units
10.29.160.33 e5460-2-A.hadoop.local e5460-2-A
10.29.160.34 e5460-2-B.hadoop.local e5460-2-B
10.29.160.37 e5460-1-A.hadoop.local e5460-1-A
10.29.160.38 e5460-1-B.hadoop.local e5460-1-B
10.29.160.35 e5460-3-A.hadoop.local e5460-3-A
10.29.160.36 e5460-3-B.hadoop.local e5460-3-B
#
# CISCO eth0 mappings - VLAN160
10.29.160.53 infra.hadoop.local infra infra-0.hadoop.local infra-0 mailhost infrastructure-0
10.29.160.54 nn1-0.hadoop.local nn1-0 namenode1-0 namenode-1-0 nn01-0
10.29.160.55 nn2-0.hadoop.local nn2-0 namenode2-0 namenode-2-0 nn02-0
10.29.160.56 tr1-0.hadoop.local tr1-0 tracker1-0 tracker-1-0 tr01-0
10.29.160.57 dn1-0.hadoop.local dn1-0 datanode1-0 datanode-1-0 dn01-0
10.29.160.58 dn2-0.hadoop.local dn2-0 datanode2-0 datanode-2-0 dn02-0
10.29.160.59 dn3-0.hadoop.local dn3-0 datanode3-0 datanode-3-0 dn03-0
10.29.160.60 dn4-0.hadoop.local dn4-0 datanode4-0 datanode-4-0 dn04-0
10.29.160.61 dn5-0.hadoop.local dn5-0 datanode5-0 datanode-5-0 dn05-0
10.29.160.62 dn6-0.hadoop.local dn6-0 datanode6-0 datanode-6-0 dn06-0
10.29.160.63 dn7-0.hadoop.local dn7-0 datanode7-0 datanode-7-0 dn07-0
10.29.160.64 dn8-0.hadoop.local dn8-0 datanode8-0 datanode-8-0 dn08-0
10.29.160.65 dn9-0.hadoop.local dn9-0 datanode9-0 datanode-9-0 dn09-0
10.29.160.66 dn10-0.hadoop.local dn10-0 datanode10-0 datanode-10-0
10.29.160.67 dn11-0.hadoop.local dn11-0 datanode11-0 datanode-11-0
10.29.160.68 dn12-0.hadoop.local dn12-0 datanode12-0 datanode-12-0
#
# CISCO eth1 mappings - VLAN11
192.168.11.11 infra-1 infra-1 infrastructure-1
192.168.11.12 nn1-1.hadoop.local nn1-1 namenode1-1 nn01-1
192.168.11.13 nn2-1.hadoop.local nn2-1 namenode2-1 nn02-1
192.168.11.14 tr1-1.hadoop.local tr1-1 tracker1-1 tracker-1-1 tr01-1
192.168.11.15 dn1-1.hadoop.local dn1-1 dn01-1
192.168.11.16 dn2-1.hadoop.local dn2-1 dn02-1
192.168.11.17 dn3-1.hadoop.local dn3-1 dn03-1
192.168.11.18 dn4-1.hadoop.local dn4-1 dn04-1
192.168.11.19 dn5-1.hadoop.local dn5-1 dn05-1
192.168.11.20 dn6-1.hadoop.local dn6-1 dn06-1
192.168.11.21 dn7-1.hadoop.local dn7-1 dn07-1
192.168.11.22 dn8-1.hadoop.local dn8-1 dn08-1
192.168.11.23 dn9-1.hadoop.local dn9-1 dn09-1
192.168.11.24 dn10-1.hadoop.local dn10-1
192.168.11.25 dn11-1.hadoop.local dn11-1
192.168.11.26 dn12-1.hadoop.local dn12-1
#
# eth2 mappings - VLAN12
192.168.12.11 infra-2.hadoop.local infra-2 infrastructure-2
192.168.12.12 nn1-2.hadoop.local nn1-2 namenode1-2 nn01-2
192.168.12.13 nn2-2.hadoop.local nn2-2 namenode2-2 nn02-2
192.168.12.14 tr1-2.hadoop.local tr1-2 tracker1-2 tracker-1-2 tr01-2
192.168.12.15 dn1-2.hadoop.local dn1-2 dn01-2
192.168.12.16 dn2-2.hadoop.local dn2-2 dn02-2
192.168.12.17 dn3-2.hadoop.local dn3-2 dn03-2
192.168.12.18 dn4-2.hadoop.local dn4-2 dn04-2
192.168.12.19 dn5-2.hadoop.local dn5-2 dn05-2
192.168.12.20 dn6-2.hadoop.local dn6-2 dn06-2
192.168.12.21 dn7-2.hadoop.local dn7-2 dn07-2
192.168.12.22 dn8-2.hadoop.local dn8-2 dn08-2
192.168.12.23 dn9-2.hadoop.local dn9-2 dn09-2
192.168.12.24 dn10-2.hadoop.local dn10-2
192.168.12.25 dn11-2.hadoop.local dn11-2
192.168.12.26 dn12-2.hadoop.local dn12-2
```

When configured, the /etc/hosts file can be pushed to all nodes with the following command:

```shell
pscp -h /root/pssh.hosts -A /etc/hosts /etc/hosts
```

The following was used for the contents of the /etc/dnsmasq.conf file on the infrastructure node. If you choose to use the dnsmasq service, it will need to be customized to fit your implementation:

```
# Configuration file for dnsmasq.
#
# Format is one option per line, legal options are the same
# as the long options legal on the command line. See
# "/usr/sbin/dnsmasq --help" or "man 8 dnsmasq" for details.
domain-needed
bogus-priv
filterwin2k
no-resolv
local=/hadoop.local/
address=/doubleclick.net/127.0.0.1
address=/www.google-analytics.com/127.0.0.1
address=/google-analytics.com/127.0.0.1
interface=eth0
interface=eth1
interface=eth2
bind-interfaces
expand-hosts
domain=hadoop.local,10.29.160.0/24,local
domain=hadoop.local,192.168.11.0/24,local
domain=hadoop.local,192.168.12.0/24,local
#
dhcp-range=tag:mgmt,10.29.160.54,10.29.160.68,255.255.255.0,24h
dhcp-range=tag:csco_eth1,192.168.11.12,192.168.11.39,255.255.255.0,24h
dhcp-range=tag:csco_eth2,192.168.12.12,192.168.12.39,255.255.255.0,24h
#
dhcp-range=tag:data11,192.168.11.40,192.168.11.49,255.255.255.0,24h
dhcp-range=tag:data12,192.168.12.40,192.168.12.49,255.255.255.0,24h
#
# NTAP
# E-Series E5460 units
dhcp-host=net:mgmt,00:08:E5:1F:69:34,10.29.160.33,e5460-3-a
dhcp-host=net:mgmt,00:80:E5:1F:83:08,10.29.160.34,e5460-3-b
#
dhcp-host=net:mgmt,00:08:E5:1F:69:F4,10.29.160.35,e5460-2-a
dhcp-host=net:mgmt,00:08:E5:1F:9F:2C,10.29.160.36,e5460-2-b
#
dhcp-host=net:mgmt,00:08:E5:1F:6B:1C,10.29.160.37,e5460-1-a
dhcp-host=net:mgmt,00:08:E5:1F:67:A8,10.29.160.38,e5460-1-b
#
# NTAP
# FAS2220 unit
dhcp-host=net:mgmt,00:a0:98:30:58:1d,10.29.160.43,fas2220-e0M
dhcp-host=net:mgmt,00:a0:98:30:58:18,10.29.160.45,fas2220-e0a
dhcp-host=net:data11,00:a0:98:1a:19:6c,192.168.11.43,fas2220-e1a
dhcp-host=net:data11,00:a0:98:1a:19:6d,192.168.11.45,fas2220
#
# CISCO
# management (eth0)
# name nodes and tracker nodes
dhcp-host=net:mgmt,00:25:B5:02:20:6F,10.29.160.53,infra-0
dhcp-host=net:mgmt,00:25:B5:02:20:5F,10.29.160.54,nn1-0
dhcp-host=net:mgmt,00:25:B5:02:20:0F,10.29.160.55,nn2-0
dhcp-host=net:mgmt,00:25:B5:02:20:FF,10.29.160.56,tr1-0
dhcp-host=net:mgmt,00:25:B5:02:20:BF,10.29.160.57,dn1-0
dhcp-host=net:mgmt,00:25:B5:02:20:8E,10.29.160.58,dn2-0
dhcp-host=net:mgmt,00:25:B5:02:20:7E,10.29.160.59,dn3-0
dhcp-host=net:mgmt,00:25:B5:02:20:2E,10.29.160.60,dn4-0
dhcp-host=net:mgmt,00:25:B5:02:20:1E,10.29.160.61,dn5-0
dhcp-host=net:mgmt,00:25:B5:02:20:DE,10.29.160.62,dn6-0
dhcp-host=net:mgmt,00:25:B5:02:20:CE,10.29.160.63,dn7-0
dhcp-host=net:mgmt,00:25:B5:02:20:9D,10.29.160.64,dn8-0
dhcp-host=net:mgmt,00:25:B5:02:20:4D,10.29.160.65,dn9-0
dhcp-host=net:mgmt,00:25:B5:02:20:3D,10.29.160.66,dn10-0
dhcp-host=net:mgmt,00:25:B5:02:21:0D,10.29.160.67,dn11-0
#
# 10GbE cluster members (eth1)
# name nodes and tracker nodes
#
dhcp-host=net:data11,00:25:B5:02:20:9F,192.168.11.11,infra-1
dhcp-host=net:data11,00:25:B5:02:20:4F,192.168.11.12,nn1-1
dhcp-host=net:data11,00:25:B5:02:20:3F,192.168.11.13,nn2-1
dhcp-host=net:data11,00:25:B5:02:21:0F,192.168.11.14,tr1-1
dhcp-host=net:data11,00:25:B5:02:20:EF,192.168.11.15,dn1-1
dhcp-host=net:data11,00:25:B5:02:20:AF,192.168.11.16,dn2-1
dhcp-host=net:data11,00:25:B5:02:20:6E,192.168.11.17,dn3-1
dhcp-host=net:data11,00:25:B5:02:20:5E,192.168.11.18,dn4-1
dhcp-host=net:data11,00:25:B5:02:20:0E,192.168.11.19,dn5-1
dhcp-host=net:data11,00:25:B5:02:20:FE,192.168.11.20,dn6-1
dhcp-host=net:data11,00:25:B5:02:20:BE,192.168.11.21,dn7-1
dhcp-host=net:data11,00:25:B5:02:20:8D,192.168.11.22,dn8-1
dhcp-host=net:data11,00:25:B5:02:20:7D,192.168.11.23,dn9-1
dhcp-host=net:data11,00:25:B5:02:20:2D,192.168.11.24,dn10-1
dhcp-host=net:data11,00:25:B5:02:20:1D,192.168.11.25,dn11-1
dhcp-host=net:data11,00:25:B5:02:20:DD,192.168.11.26,dn12-1
#
# 10GbE cluster members (eth2)
# name nodes and tracker nodes
#
dhcp-host=net:data12,00:25:B5:02:20:8F,192.168.12.11,infra-2
dhcp-host=net:data12,00:25:B5:02:20:7F,192.168.12.12,nn1-2
dhcp-host=net:data12,00:25:B5:02:20:2F,192.168.12.13,nn2-2
dhcp-host=net:data12,00:25:B5:02:20:1F,192.168.12.14,tr1-2
dhcp-host=net:data12,00:25:B5:02:20:DF,192.168.12.15,dn1-2
dhcp-host=net:data12,00:25:B5:02:20:CF,192.168.12.16,dn2-2
dhcp-host=net:data12,00:25:B5:02:20:9E,192.168.12.17,dn3-2
dhcp-host=net:data12,00:25:B5:02:20:4E,192.168.12.18,dn4-2
dhcp-host=net:data12,00:25:B5:02:20:3E,192.168.12.19,dn5-2
dhcp-host=net:data12,00:25:B5:02:21:0E,192.168.12.20,dn6-2
dhcp-host=net:data12,00:25:B5:02:20:EE,192.168.12.21,dn7-2
dhcp-host=net:data12,00:25:B5:02:20:AE,192.168.12.22,dn8-2
dhcp-host=net:data12,00:25:B5:02:20:6D,192.168.12.23,dn9-2
dhcp-host=net:data12,00:25:B5:02:20:5D,192.168.12.24,dn10-2
dhcp-host=net:data12,00:25:B5:02:20:0D,192.168.12.25,dn11-2
dhcp-host=net:data12,00:25:B5:02:20:FD,192.168.12.26,dn12-2
dhcp-vendorclass=set:csco_eth1,Linux
dhcp-vendorclass=set:csco_eth2,Linux
# DHCP option 26 is the interface MTU (9000 = jumbo frames)
dhcp-option=26,9000
# Set the NTP time server address to the infrastructure node
dhcp-option=option:ntp-server,10.29.160.53
dhcp-lease-max=150
dhcp-leasefile=/var/lib/misc/dnsmasq.leases
dhcp-authoritative
local-ttl=5
```

Once the /etc/dnsmasq.conf file has been configured, the dnsmasq service must be enabled and started with the following commands:

```shell
chkconfig dnsmasq on
service dnsmasq restart
```

NTP

If needed, the infrastructure node can act as a time server for all nodes in the cluster using one of the following methods:

• ntp service

• cron or at job that pushes the time to the rest of the nodes in the cluster

The configuration described in this document used the ntp service running on the infrastructure node to provide time services for the other nodes in the cluster.
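Once ntpd is running, ntpq -p lists its peers and marks the peer currently selected for synchronization with a * in the first column. The following sketch reads that output on stdin and prints the selected peer. The function name is hypothetical. The infrastructure node synchronizes to its undisciplined local clock, so its selected peer typically appears as LOCAL(0). On the other nodes the selected peer should be 10.29.160.53. ntpd may take several minutes after startup before it selects a peer.

```shell
#!/usr/bin/env bash
# Sketch (hypothetical helper): read "ntpq -p" output on stdin and print the
# peer that ntpd has selected for synchronization, i.e. the line whose tally
# code in the first column is '*'. Returns 0 if such a peer exists, 1 otherwise.
ntp_sys_peer() {
  awk '
    /^\*/ { print substr($1, 2); found = 1; exit }
    END   { exit !found }
  '
}

# Example:
#   ntpq -p | ntp_sys_peer || echo "ntpd has not selected a peer yet"
```

To look at all nodes at once, the raw output can be collected with pssh -h /root/pssh.hosts -i 'ntpq -p', where -i prints each host's output inline.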

The following was used for the contents of the /etc/ntp.conf file on the infrastructure node and may need to be customized to fit your implementation should you choose to use the ntp service:

```
# /etc/ntp.conf - infrastructure node NTP config
# For more information about this file, see the man pages ntp.conf(5),
# ntp_acc(5), ntp_auth(5), ntp_clock(5), ntp_misc(5), ntp_mon(5).
driftfile /var/lib/ntp/drift
# Permit time synchronization with our time source, but do not
# permit the source to query or modify the service on this system.
restrict default kod nomodify notrap nopeer noquery
restrict -6 default kod nomodify notrap nopeer noquery
# Permit all access over the loopback interface.
restrict 127.0.0.1
restrict -6 ::1
# Hosts on local network are less restricted.
#restrict 192.168.1.0 mask 255.255.255.0 nomodify notrap
# Use public servers from the pool.ntp.org project.
# Please consider joining the pool (http://www.pool.ntp.org/join.html).
#server 0.rhel.pool.ntp.org
#server 1.rhel.pool.ntp.org
#server 2.rhel.pool.ntp.org
#broadcast 192.168.1.255 autokey      # broadcast server
#broadcastclient                      # broadcast client
#broadcast 224.0.1.1 autokey          # multicast server
#multicastclient 224.0.1.1            # multicast client
#manycastserver 239.255.254.254       # manycast server
#manycastclient 239.255.254.254 autokey # manycast client
# Undisciplined Local Clock. This is a fake driver intended for backup
# and when no outside source of synchronized time is available.
server 127.127.1.0 # local clock
fudge 127.127.1.0 stratum 10
includefile /etc/ntp/crypto/pw
keys /etc/ntp/keys
```

The following was used for the contents of the /etc/ntp.conf file on the other nodes and may need to be customized to fit your implementation should you choose to use the ntp service:

```
# /etc/ntp.conf - all other nodes
server 10.29.160.53
driftfile /var/lib/ntp/drift
restrict default kod nomodify notrap nopeer noquery
restrict -6 default kod nomodify notrap nopeer noquery
restrict 127.0.0.1
restrict -6 ::1
includefile /etc/ntp/crypto/pw
keys /etc/ntp/keys
```

Once all of the /etc/ntp.conf files have been configured, the ntpd service must be enabled and started by executing the following commands on the infrastructure node and then on all of the other nodes:

```shell
chkconfig ntpd on
service ntpd restart
pssh -h /root/pssh.hosts -A chkconfig ntpd on
pssh -h /root/pssh.hosts -A service ntpd restart
```

System Tunings

/etc/sysctl.conf

The following should be appended to the /etc/sysctl.conf file on all of the nodes:

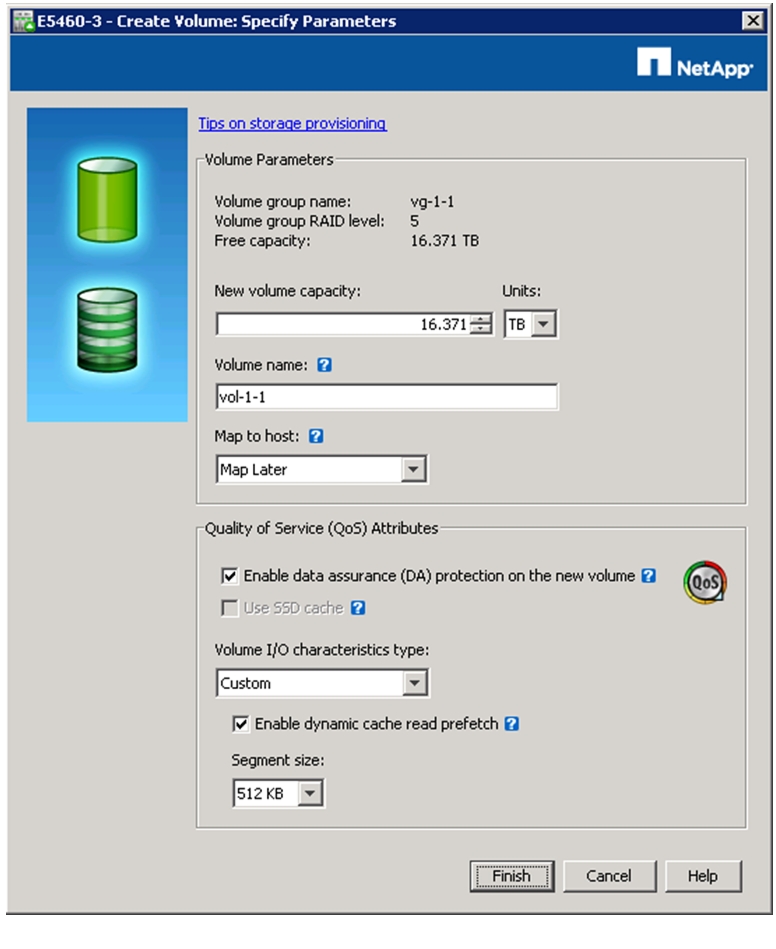

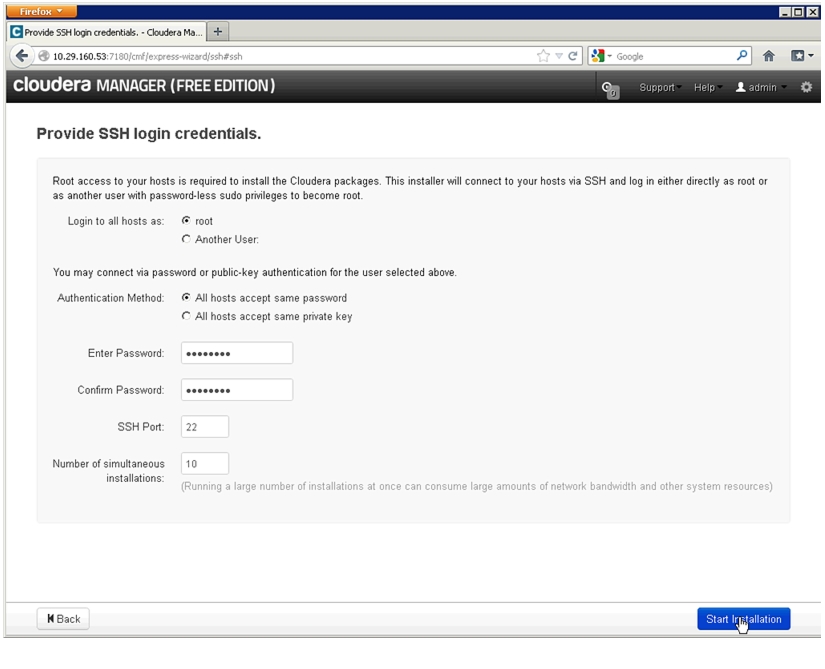

```
# ----------
# /etc/sysctl.conf -- append to the file on all nodes
# BEGIN: Hadoop tweaks
#
sunrpc.tcp_slot_table_entries=128
net.core.rmem_default=262144
net.core.rmem_max=16777216
net.core.wmem_default=262144
net.core.wmem_max=16777216
net.ipv4.tcp_window_scaling=1
fs.file-max=6815744
fs.xfs.rotorstep=254
vm.dirty_background_ratio=1
#
# END: Hadoop tweaks
# ----------
```

This can be accomplished via the following commands: