Table Of Contents

About Cisco Validated Design (CVD) Program

FlexPod Data Center with Oracle RAC on Oracle VM

Oracle Database 11g Release 2 RAC on FlexPod with Oracle Direct NFS Client

Cisco Unified Computing System

Cisco UCS B200 M3 Blade Server

Cisco UCS Virtual Interface Card 1240

Cisco UCS 6248UP Fabric Interconnect

NetApp Storage Technologies and Benefits

NetApp OnCommand System Manager 2.1

Advantage of Using Oracle VM for Oracle RAC Database

Oracle Database 11g Release 2 RAC

Oracle Database 11g Direct NFS Client

Hardware and Software used for this Solution

Cisco UCS Networking and NetApp NFS Storage Topology

Cisco UCS Manager Configuration Overview

High Level Steps for Cisco UCS Configuration

Configuring Fabric Interconnects for Blade Discovery

Configuring LAN and SAN on UCS Manager

Set Jumbo Frames in Both the Cisco UCS Fabrics

Configure vNIC and vHBA Templates

Configure Ethernet Uplink Port Channels

Create Local Disk Configuration Policy (Optional)

Service Profile creation and Association to UCS Blades

Create Service Profile Template

Create Service Profiles from Service Profile Templates

Associating Service Profile to Servers

Nexus 5548UP Configuration for FCoE Boot and NFS Data Access

Cisco Nexus 5548 A and Cisco Nexus 5548 B

Cisco Nexus 5548 A and Cisco Nexus 5548 B

Add Individual Port Descriptions for Troubleshooting

Cisco Nexus 5548 A and Cisco Nexus 5548 B

Cisco Nexus 5548 A and Cisco Nexus 5548 B

Configure Virtual Port Channels

Create VSANs, Assign and Enable Virtual Fibre Channel Ports

Create Device Aliases for FCoE Zoning

NetApp Storage Configuration Overview

Storage Configuration for FCoE Boot

Create and Configure Aggregate, Volumes and Boot LUNs

Create and Configure Initiator Group (igroup) and LUN mapping

Create and Configure Volumes and LUNs for Guest VMs

Create and Configure Initiator Group (igroup) and Mapping of LUN for Guest VM

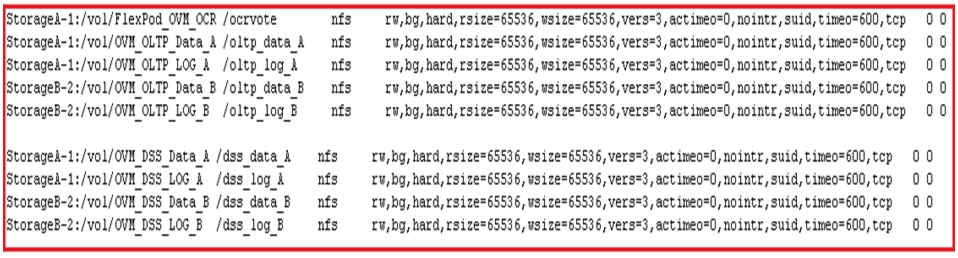

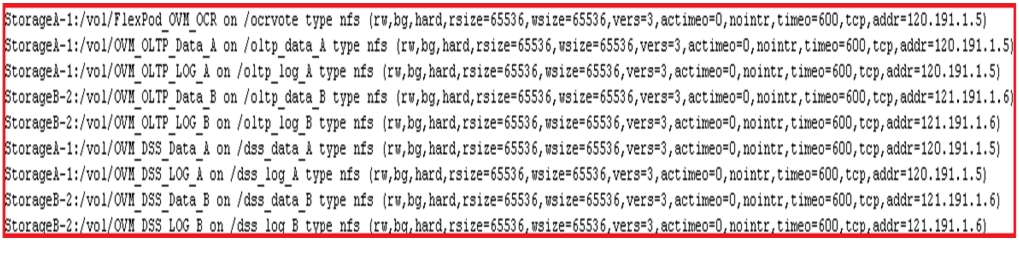

Storage Configuration for NFS Storage Network

Create and Configure Aggregate, Volumes

Create and Configure VIF Interface (Multimode)

VIF Configuration on Controller A

VIF Configuration on Controller B

Check the NetApp Configuration

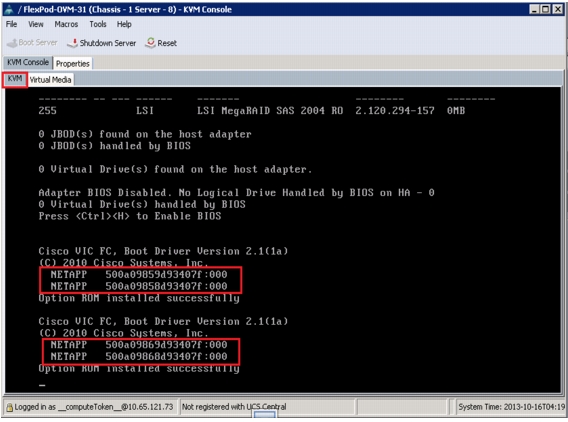

UCS Servers and Stateless Computing via FCoE Boot

Quick Summary for Boot from SAN Configuration

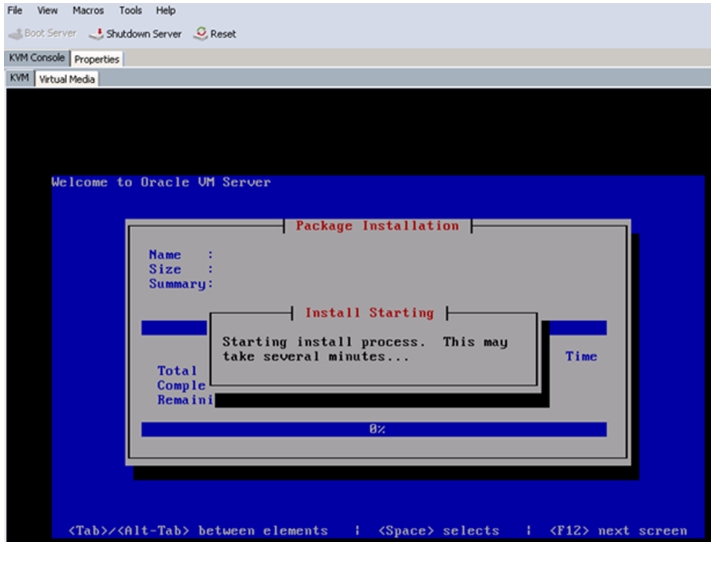

Oracle VM Server Install Steps and Recommendations

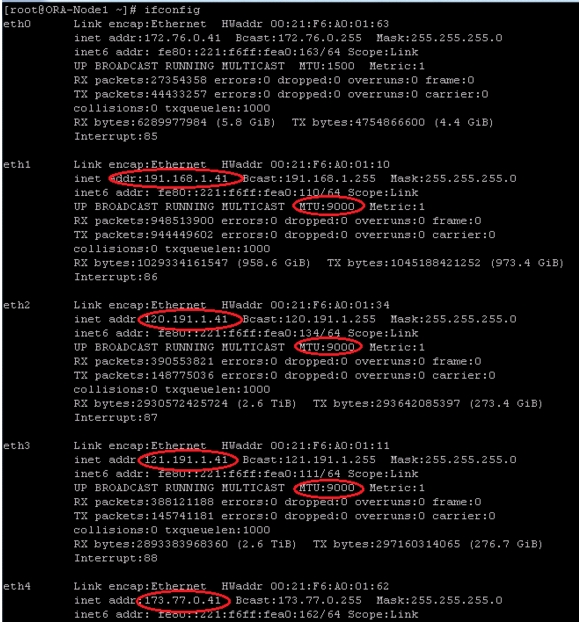

Oracle VM Server Network Architecture

Oracle VM Manager Installation

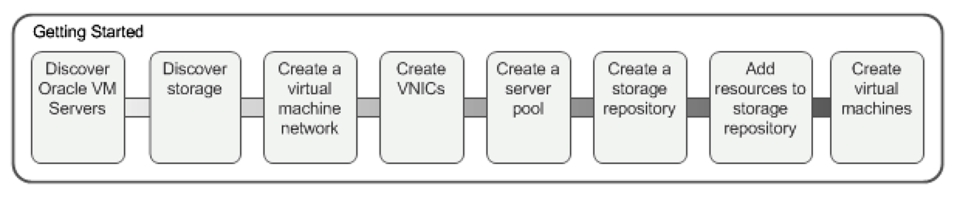

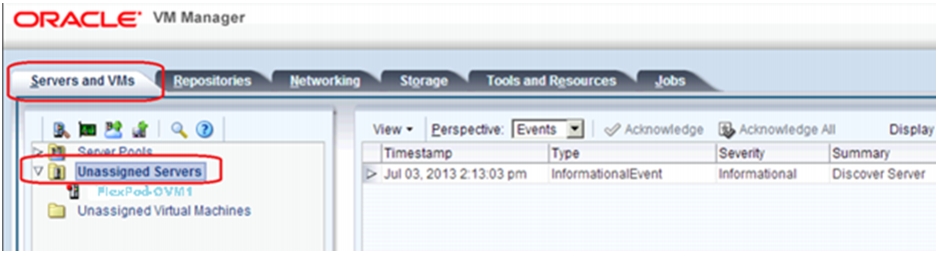

Oracle VM Server Configuration Using Oracle VM Manager

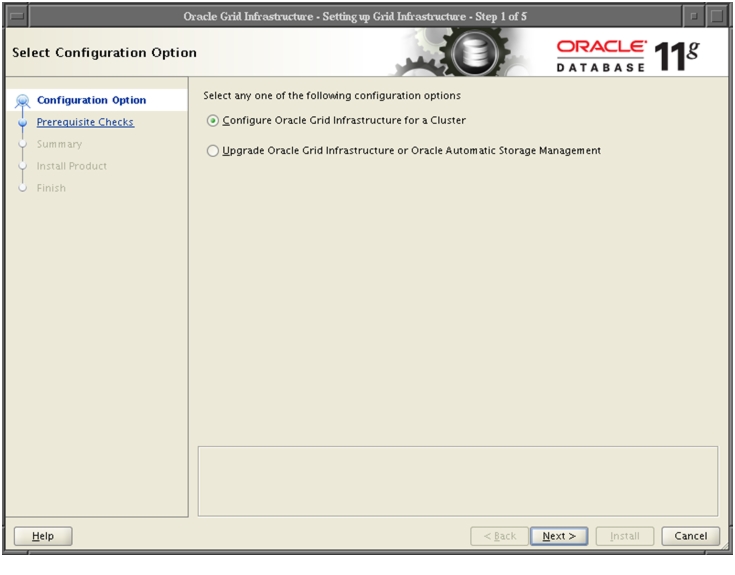

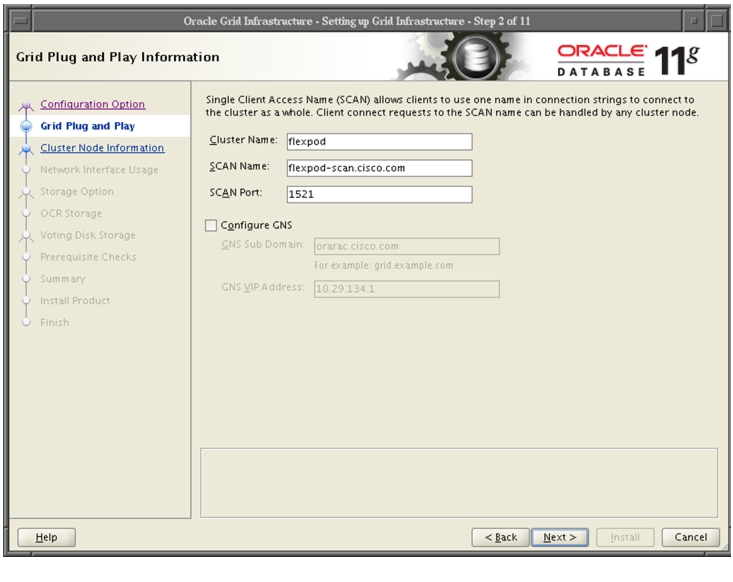

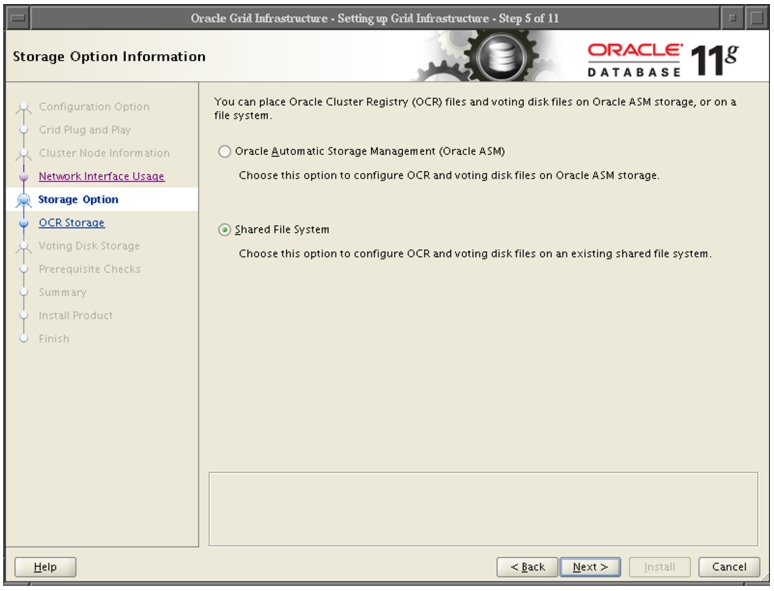

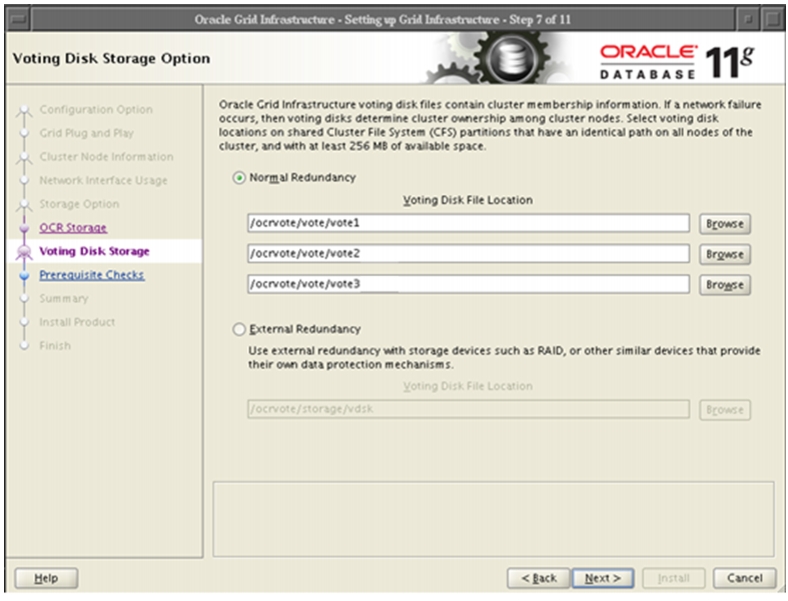

Oracle Database 11g Release 2 Grid Infrastructure with RAC Option Deployment

Installing Oracle RAC 11g Release 2

Workloads and Database Configuration

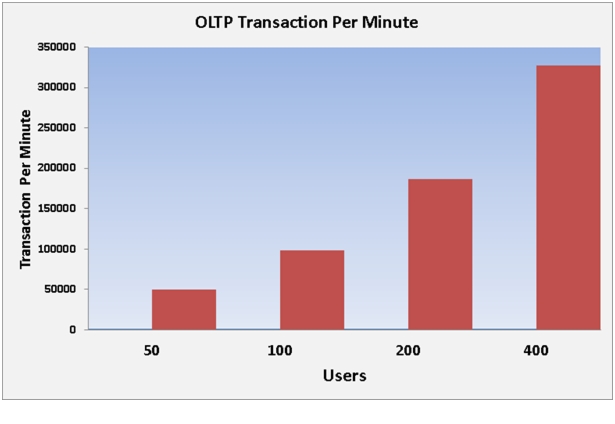

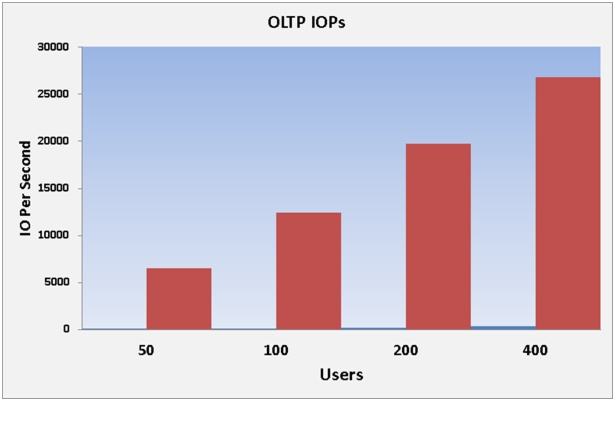

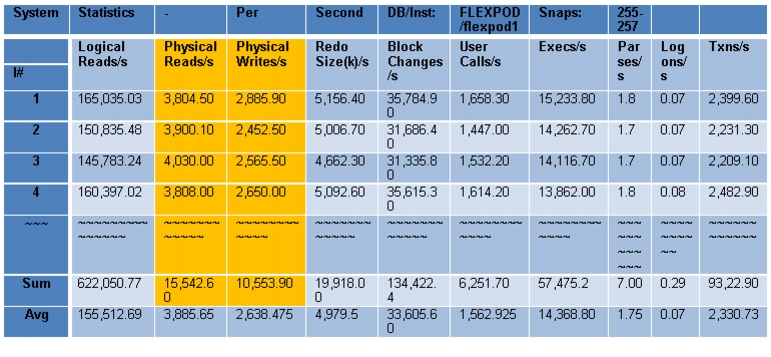

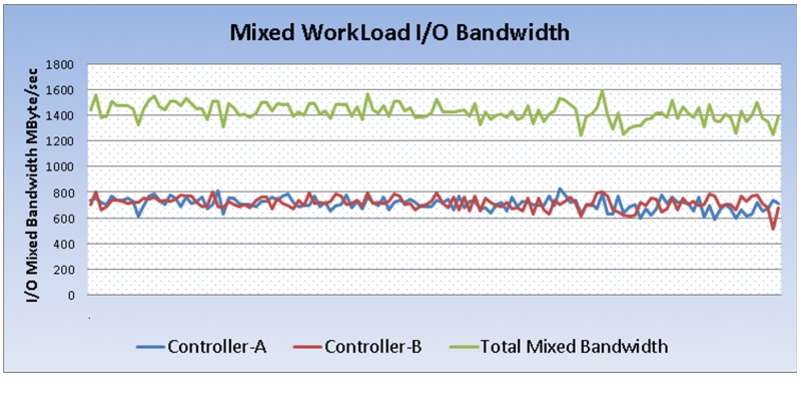

Performance Data from the Tests

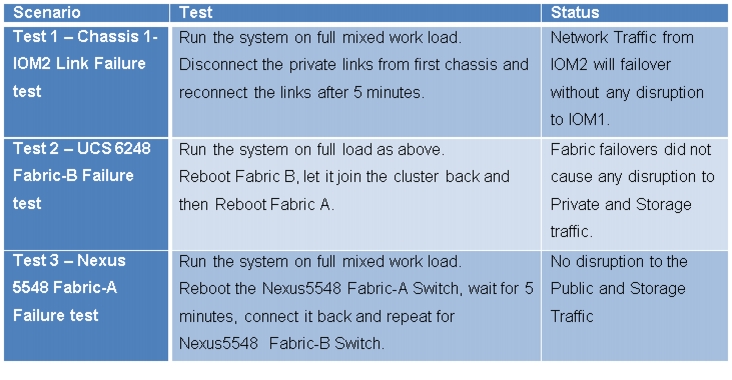

Destructive and Hardware failover Tests

Appendix A: Nexus 5548UP Configuration

Nexus 5548 Fabric A Configuration

Nexus 5548 Fabric B Configuration

Appendix B: Verify Oracle RAC Cluster Status Command Output

FlexPod Data Center with Oracle RAC on Oracle VM with 7-Mode

Deployment Guide for FlexPod with Oracle Database 11g Release 2 RAC on Oracle VM 3.1.1

November 22, 2013

Building Architectures to Solve Business Problems

About the Authors

Niranjan Mohapatra, Technical Marketing Engineer, SAVBU, Cisco Systems

Niranjan Mohapatra is a Technical Marketing Engineer in the Cisco Systems Data Center Group (DCG) and a specialist in Oracle RAC RDBMS. He has over 14 years of extensive experience with Oracle RAC Database and associated tools. Niranjan has worked as a TME and a DBA handling production systems in various organizations. He holds a Master of Science (MSc) degree in Computer Science and is also an Oracle Certified Professional (OCP-DBA) and a NetApp accredited storage architect. Niranjan also has a strong background in Cisco UCS, NetApp storage, and virtualization.

Acknowledgment

For their support and contribution to the design, validation, and creation of the Cisco Validated Design, I would like to thank:

• Siva Sivakumar, Cisco

• Vadiraja Bhatt, Cisco

• Tushar Patel, Cisco

• Ramakrishna Nishtala, Cisco

• John McAbel, Cisco

• Steven Schuettinger, NetApp

About Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information visit:

http://www.cisco.com/go/designzone

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California.

Cisco and the Cisco Logo are trademarks of Cisco Systems, Inc. and/or its affiliates in the U.S. and other countries. A listing of Cisco's trademarks can be found at http://www.cisco.com/go/trademarks. Third party trademarks mentioned are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (1005R)

Any Internet Protocol (IP) addresses and phone numbers used in this document are not intended to be actual addresses and phone numbers. Any examples, command display output, network topology diagrams, and other figures included in the document are shown for illustrative purposes only. Any use of actual IP addresses or phone numbers in illustrative content is unintentional and coincidental.

© 2013 Cisco Systems, Inc. All rights reserved.

FlexPod Data Center with Oracle RAC on Oracle VM

Executive Summary

Industry trends indicate a vast data center transformation toward shared infrastructures. Enterprise customers are moving away from silos of information toward shared infrastructures, then to virtualized environments, and eventually to the cloud, in order to increase agility and operational efficiency, optimize resource utilization, and reduce costs.

FlexPod is a pretested data center solution built on a flexible, scalable, shared infrastructure consisting of Cisco UCS servers with Cisco Nexus® switches and NetApp unified storage systems running Data ONTAP. The FlexPod components are integrated and standardized to help you eliminate the guesswork and achieve timely, repeatable, consistent deployments. FlexPod has been optimized with a variety of mixed application workloads and design configurations in various environments such as virtual desktop infrastructure and secure multitenancy environments.

One main benefit of the FlexPod architecture is the ability to customize the environment to suit a customer's requirements. This is why the reference architecture detailed in this document highlights the resiliency, cost benefit, and ease of deployment of an FCoE-based storage solution. A storage system capable of serving multiple protocols across a single interface gives customers a choice of protocol and protects their investment.

Large enterprises that are adopting virtualization have much higher I/O requirements. For them, FCoE is a better solution. Customers who have adopted Cisco® MDS 9000 family switches will probably prefer FCoE because it offers inherent coexistence with Fibre Channel, with no need to migrate existing Fibre Channel infrastructures. FCoE will take a large share of the SAN market. It will not make iSCSI obsolete, but it will reduce its potential market.

Virtualization started as a means of server consolidation, but IT needs are evolving as data centers become service providers. An isolated hypervisor cannot provide the speed and time to market required to deploy a complete application stack. To realize the full benefits of virtualization, Oracle offers integrated virtualization from the desktop to the data center and enables you to virtualize and manage your complete hardware and software stack.

Oracle Real Application Clusters (RAC) allows an Oracle Database to run any packaged or custom application, unchanged, across a pool of servers. This provides the highest levels of RAS (Reliability, Availability, and Scalability). If a server in the pool fails, the Oracle database continues to run on the remaining servers. When you need more processing power, simply add another server to the pool without taking users offline. Oracle Real Application Clusters provide a foundation for Oracle's Private Cloud Architecture. In addition, Oracle RAC 11g Release 2 enables customers to build a dynamic private cloud infrastructure.

FlexPod Data Center with Oracle RAC on Oracle VM includes NetApp storage, Cisco® networking, Cisco UCS, and Oracle virtualization software in a single package. This solution is deployed and tested on a defined set of hardware and software.

This Cisco Validated Design describes how the Cisco Unified Computing System™ can be used in conjunction with NetApp FAS storage systems to implement an optimized system to run Oracle Real Application Clusters (RAC) in Oracle VM.

Target Audience

This document is intended to assist solution architects, project managers, infrastructure managers, sales engineers, field engineers, and consultants in planning, designing, and deploying Oracle Database 11g Release 2 RAC hosted on FlexPod. This document assumes that the reader has an architectural understanding of the Cisco Unified Computing System, Oracle 11g Release 2 Grid Infrastructure, Oracle Real Application Clusters, Oracle VM, NetApp storage systems, and related software.

Purpose of this Guide

This FlexPod CVD demonstrates how enterprises can apply best practices to deploy Oracle Database 11g Release 2 RAC using Oracle VM, Cisco Unified Computing System, Cisco Nexus family switches, and NetApp FAS storage. This design solution shows the deployment and scaling of a four-node Oracle Database 11g Release 2 RAC in a virtualized environment using typical OLTP and DSS workloads to demonstrate stability, performance and resiliency design as demanded by mission critical data center deployments.

Business Needs

Business applications are moving into integrated stacks consisting of compute, network, and storage. This FlexPod solution helps to reduce the cost and complexity of a traditional Oracle Database 11g Release 2 RAC deployment. The following business needs for Oracle Database 11g Release 2 RAC deployment on Oracle VM are addressed by this solution:

• Increase DBA productivity through ease of provisioning and a simplified yet scalable architecture.

• Reduce risk with a solution that is tested for end-to-end interoperability of compute, storage, and network.

• Save costs, power, and lab space by reducing the number of physical servers.

• Enable a global virtualization policy.

• Create a balanced configuration that yields predictable purchasing guidelines at the computing, network, and storage tiers for a given workload.

• Achieve additional high availability by combining Oracle VM and Oracle RAC, which are complementary technologies.

• Oracle VM application-driven server virtualization is designed for rapid application deployment and ease of lifecycle management. Using Oracle VM Templates, entire application stacks can be deployed into your new FlexPod architecture in hours or minutes rather than days or weeks, helping to accelerate time to value while standardizing your application deployment process to ensure reliability and minimize risk.

• Oracle offers a complete applications-to-disk stack, and virtualization is fully integrated across all layers. Oracle can provision and manage applications, middleware, and databases.

• The benefits of using Oracle VM for Oracle RAC databases are sub-capacity licensing, server consolidation, rapid provisioning, and the ability to create a virtual cluster.

Solution Overview

Oracle Database 11g Release 2 RAC on FlexPod with Oracle Direct NFS Client

This solution provides an end-to-end architecture with Cisco UCS, Oracle, and NetApp technologies that demonstrates the implementation, capabilities, and advantages of Oracle Database 11g Release 2 RAC and Oracle VM on FlexPod.

The following infrastructure and software components are used for this solution:

• Cisco Unified Computing System*

• Cisco Nexus 5548UP switches

• NetApp storage components

• NetApp OnCommand® System Manager 2.1

• Oracle VM

• Oracle Database 11g Release 2 RAC

• Swingbench benchmark kit for OLTP and DSS workloads

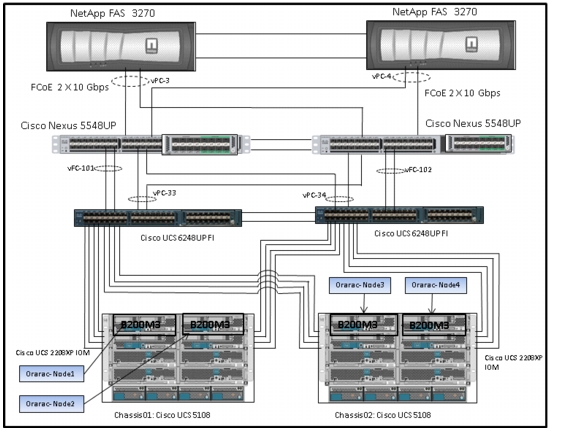

* Cisco Unified Computing System includes all the hardware and software components required for this deployment solution.

Figure 1 shows the architecture and the connectivity layout for this deployment model.

Figure 1 Solution Architecture

The following sections describe the individual components that define this architecture.

Technology Overview

Cisco Unified Computing System

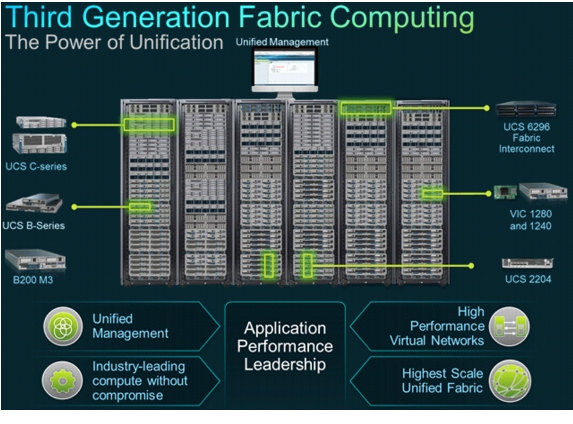

Figure 2 Cisco Unified Computing System

The Cisco Unified Computing System is a third-generation data center platform that unites computing, networking, storage access, and virtualization resources into a cohesive system designed to reduce TCO and increase business agility. The system integrates a low-latency, lossless 10 Gigabit Ethernet (10GbE) unified network fabric with enterprise-class, x86-architecture servers. The system is an integrated, scalable, multi-chassis platform in which all the resources participate in a unified management domain that is controlled and managed centrally.

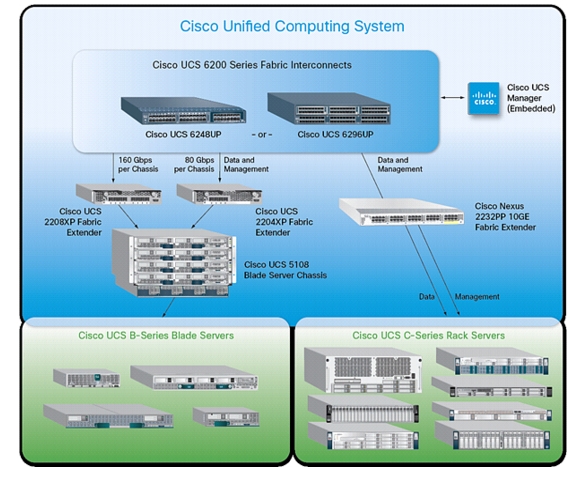

Figure 3 Cisco UCS Components

Figure 4 Cisco UCS Components

The main components of the Cisco UCS are:

• Compute

The system is based on an entirely new class of computing system that incorporates blade servers based on Intel Xeon® E5-2600 Series Processors. Cisco UCS B-Series Blade Servers work with virtualized and non-virtualized applications to increase performance, energy efficiency, flexibility, and productivity.

• Network

The system is integrated onto a low-latency, lossless, 80-Gbps unified network fabric. This network foundation consolidates LANs, SANs, and high-performance computing networks, which are separate networks today. The unified fabric lowers costs by reducing the number of network adapters, switches, and cables, and by decreasing the power and cooling requirements.

• Storage access

The system provides consolidated access to both storage area network (SAN) and network-attached storage (NAS) over the unified fabric. By unifying storage access, Cisco UCS can access storage over Ethernet, Fibre Channel, Fibre Channel over Ethernet (FCoE), and iSCSI. This gives customers a choice of storage access methods and provides investment protection. Additionally, server administrators can reassign storage-access policies for system connectivity to storage resources, thereby simplifying storage connectivity and management for increased productivity.

• Management

The system integrates all the system components, which enables the entire solution to be managed as a single entity by Cisco UCS Manager. Cisco UCS Manager has an intuitive graphical user interface (GUI), a command-line interface (CLI), and a robust application programming interface (API) to manage all the system configuration and operations.

The Cisco UCS is designed to deliver:

• A reduced Total Cost of Ownership (TCO), increased Return on Investment (ROI), and increased business agility.

• Increased IT staff productivity through just-in-time provisioning and mobility support.

• A cohesive, integrated system which unifies the technology in the data center. The system is managed, serviced, and tested as a whole.

• Scalability through a design for hundreds of discrete servers and thousands of virtual machines and the capability to scale I/O bandwidth to match demand.

• Industry standards supported by a partner ecosystem of industry leaders.

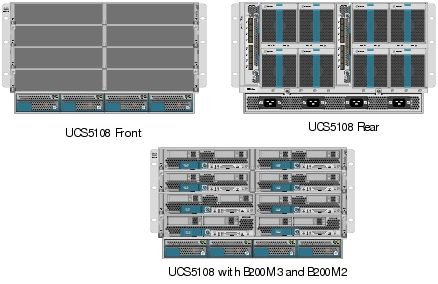

Cisco UCS Blade Chassis

The Cisco UCS 5100 Series Blade Server Chassis is a crucial building block of the Cisco Unified Computing System, delivering a scalable and flexible blade server chassis.

The Cisco UCS 5108 Blade Server Chassis is six rack units (6RU) high and can mount in an industry-standard 19-inch rack. A single chassis can house up to eight half-width Cisco UCS B-Series Blade Servers and can accommodate both half-width and full-width blade form factors.

Four single-phase, hot-swappable power supplies are accessible from the front of the chassis. These power supplies are 92 percent efficient and can be configured to support non-redundant, N+1 redundant, and grid-redundant configurations. The rear of the chassis contains eight hot-swappable fans, four power connectors (one per power supply), and two I/O bays for Cisco UCS 2208XP Fabric Extenders.
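The three power configurations differ in how many supplies must be installed for a given load. The following is a minimal Python sketch of that arithmetic, assuming the usual definitions (N is the number of supplies needed to carry the load, N+1 adds one spare, and grid redundancy installs N supplies on each of two power feeds). The function name and mode strings are hypothetical and are not part of any Cisco tool.

```python
CHASSIS_PSU_BAYS = 4  # the chassis described above has four power supply bays


def supplies_required(n_needed: int, mode: str) -> int:
    """Return how many supplies must be installed for the given mode.

    n_needed is the number of supplies required to carry the load with
    no redundancy at all; the planner must determine it separately.
    """
    if n_needed < 1:
        raise ValueError("at least one supply is needed to carry the load")
    if mode == "non-redundant":
        total = n_needed          # no spare capacity
    elif mode == "n+1":
        total = n_needed + 1      # survives the loss of one supply
    elif mode == "grid":
        total = 2 * n_needed      # survives the loss of one whole power feed
    else:
        raise ValueError(f"unknown mode: {mode}")
    if total > CHASSIS_PSU_BAYS:
        raise ValueError(
            f"{mode} with N={n_needed} needs {total} supplies, "
            f"but the chassis has only {CHASSIS_PSU_BAYS} bays"
        )
    return total
```

Under these assumptions, a fully populated four-supply chassis can run N+1 with N up to 3, or grid-redundant with N up to 2.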

A passive mid-plane provides up to 40 Gbps of I/O bandwidth per server slot and up to 80 Gbps of I/O bandwidth for two slots. The chassis is capable of supporting future 80 Gigabit Ethernet standards.

Figure 5 Cisco Blade Server Chassis (Front, Rear and Populated with Blades View)

Cisco UCS B200 M3 Blade Server

The Cisco UCS B200 M3 Blade Server is a half-width, two-socket blade server. The system uses two Intel Xeon® E5-2600 Series Processors, up to 384 GB of DDR3 memory, two optional hot-swappable small form factor (SFF) serial attached SCSI (SAS) disk drives, and two VIC adapters that provide up to 80 Gbps of I/O throughput. The server balances simplicity, performance, and density for production-level virtualization and other mainstream data center workloads.

Figure 6 Cisco UCS B200 M3 Blade Server

Cisco UCS Virtual Interface Card 1240

A Cisco innovation, the Cisco UCS VIC 1240 is a four-port 10 Gigabit Ethernet, FCoE-capable modular LAN on motherboard (mLOM) designed exclusively for the M3 generation of Cisco UCS B-Series Blade Servers. When used in combination with an optional port expander, the Cisco UCS VIC 1240 capabilities can be expanded to eight ports of 10 Gigabit Ethernet.

Cisco UCS 6248UP Fabric Interconnect

• The fabric interconnects provide a single point for connectivity and management for the entire system. Typically deployed as an active-active pair, the system's fabric interconnects integrate all the components into a single, highly available management domain controlled by Cisco UCS Manager. The fabric interconnects manage all I/O efficiently and securely at a single point, resulting in deterministic I/O latency regardless of a server or virtual machine's topological location in the system.

• Cisco UCS 6200 Series Fabric Interconnects support the system's 80-Gbps unified fabric with low-latency, lossless, cut-through switching that supports IP, storage, and management traffic using a single set of cables. The fabric interconnects feature virtual interfaces that terminate both physical and virtual connections equivalently, establishing a virtualization-aware environment in which blade servers, rack servers, and virtual machines are interconnected using the same mechanisms. The Cisco UCS 6248UP is a 1-RU fabric interconnect that features up to 48 universal ports that can support 10 Gigabit Ethernet, Fibre Channel over Ethernet, or native Fibre Channel connectivity.

Figure 7 Cisco UCS 6248UP Fabric Interconnect

Cisco UCS Manager

Cisco UCS Manager is an embedded, unified manager that provides a single point of management for Cisco UCS. Cisco UCS Manager can be accessed through an intuitive GUI, a command-line interface (CLI), or the comprehensive open XML API. It manages the physical assets of the server and storage and LAN connectivity, and it is designed to simplify the management of virtual network connections through integration with several major hypervisor vendors. It provides IT departments with the flexibility to allow people to manage the system as a whole, or to assign specific management functions to individuals based on their roles as managers of server, storage, or network hardware assets. It simplifies operations by automatically discovering all the components available on the system and enabling a stateless model for resource use.
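As an illustration of the XML API access path mentioned above, the following Python sketch builds a login request and a class query and parses a login reply. It assumes the `aaaLogin` and `configResolveClass` method names and the `inName`, `inPassword`, `outCookie`, `classId`, and `inHierarchical` attributes from the publicly documented UCS Manager XML API; verify these against the XML API reference for your release. The helper function names are hypothetical, no network call is made, and the sample reply in the usage note is illustrative rather than captured from a real system.

```python
import xml.etree.ElementTree as ET


def build_login_request(username: str, password: str) -> str:
    """Build the XML body for a login request (assumed method: aaaLogin)."""
    elem = ET.Element("aaaLogin", {"inName": username, "inPassword": password})
    return ET.tostring(elem, encoding="unicode")


def parse_login_response(xml_text: str) -> str:
    """Extract the session cookie from a login reply, or raise on error."""
    root = ET.fromstring(xml_text)
    if root.get("errorCode"):
        raise RuntimeError(root.get("errorDescr", "login failed"))
    cookie = root.get("outCookie")
    if not cookie:
        raise RuntimeError("reply did not contain a session cookie")
    return cookie


def build_class_query(cookie: str, class_id: str) -> str:
    """Build a query for all objects of one class (assumed: configResolveClass)."""
    elem = ET.Element(
        "configResolveClass",
        {"cookie": cookie, "classId": class_id, "inHierarchical": "false"},
    )
    return ET.tostring(elem, encoding="unicode")
```

For example, `parse_login_response('<aaaLogin response="yes" outCookie="abc123" />')` returns the cookie string, which would then be passed to `build_class_query(cookie, "computeBlade")` to list blades. Sending these bodies to the management endpoint is left out of the sketch.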

Some of the key elements managed by Cisco UCS Manager include:

• Cisco UCS Integrated Management Controller (IMC) firmware

• RAID controller firmware and settings

• BIOS firmware and settings, including server universally unique identifier (UUID) and boot order

• Converged network adapter (CNA) firmware and settings, including MAC addresses, worldwide names (WWNs), and SAN boot settings

• Virtual port groups used by virtual machines, using Cisco Data Center VM-FEX technology

• Interconnect configuration, including uplink and downlink definitions, MAC address and WWN pinning, VLANs, VSANs, quality of service (QoS), bandwidth allocations, Cisco Data Center VM-FEX settings, and EtherChannels to upstream LAN switches

Cisco UCS is designed from the start to be programmable and self-integrating. A server's entire hardware stack, ranging from server firmware and settings to network profiles, is configured through model-based management. With Cisco virtual interface cards (VICs), even the number and type of I/O interfaces is programmed dynamically, making every server ready to power any workload at any time.

With model-based management, administrators manipulate a desired system configuration and associate a model's policy-driven service profiles with hardware resources, and the system configures itself to match requirements. This automation accelerates provisioning and workload migration with accurate and rapid scalability. The result is increased IT staff productivity, improved compliance, and reduced risk of failures due to inconsistent configurations. This approach represents a radical simplification compared to traditional systems, reducing capital expenditures (CAPEX) and operating expenses (OPEX) while increasing business agility, simplifying and accelerating deployment, and improving performance.

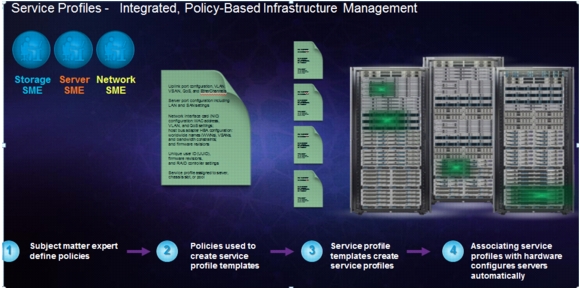

UCS Service Profiles

Figure 8 Traditional Provisioning Approach

A server's identity is made up of many properties, such as UUID, boot order, IPMI settings, BIOS firmware, BIOS settings, RAID settings, disk scrub settings, number of NICs, NIC speed, NIC firmware, MAC and IP addresses, number of HBAs, HBA WWNs, HBA firmware, FC fabric assignments, QoS settings, VLAN assignments, and remote keyboard/video/mouse (KVM) settings. This long list of configuration points must be set to give a server its identity and make it unique from every other server within the data center. Some of these parameters are kept in the hardware of the server itself (such as BIOS firmware version, BIOS settings, boot order, and FC boot settings), while others are kept on the network and storage switches (such as VLAN assignments, FC fabric assignments, QoS settings, and ACLs). This results in the following server deployment challenges:

Lengthy deployment cycles

• Every deployment requires coordination among server, storage, and network teams

• Need to ensure correct firmware and settings for hardware components

• Need appropriate LAN and SAN connectivity

Response time to business needs

• Tedious deployment process

• Manual, error-prone processes that are difficult to automate

• High OPEX costs and outages caused by human errors

Limited OS and application mobility

• Storage and network settings tied to physical ports and adapter identities

• Static infrastructure leads to over-provisioning and higher OPEX costs

Cisco UCS has uniquely addressed these challenges with the introduction of service profiles (see Figure 9), which enable integrated, policy-based infrastructure management. UCS Service Profiles hold the DNA for nearly all configurable parameters required to set up a physical server. A set of user-defined policies (rules) allows quick, consistent, repeatable, and secure deployments of UCS servers.

Figure 9 Service Profiles

UCS Service Profiles contain values for a server's property settings, including virtual network interface cards (vNICs), MAC addresses, boot policies, firmware policies, fabric connectivity, external management, and high availability information. Because these settings are abstracted from the physical server into a Cisco Service Profile, the Service Profile can be deployed to any physical compute hardware within the Cisco UCS domain. Furthermore, Service Profiles can, at any time, be migrated from one physical server to another. This logical abstraction of the server personality removes the dependency on the hardware type or model and is a result of Cisco's unified fabric model (rather than overlaying software tools on top).

This innovation is still unique in the industry despite competitors claiming to offer similar functionality. In most cases, these vendors must rely on several different methods and interfaces to configure these server settings. Furthermore, Cisco is the only hardware provider to offer a truly unified management platform, with UCS Service Profiles and hardware abstraction capabilities extending to both blade and rack servers.

Some of the key features and benefits of UCS service profiles are:

• Service Profiles and Templates

A service profile contains configuration information about the server hardware, interfaces, fabric connectivity, and server and network identity. The Cisco UCS Manager provisions servers utilizing service profiles. The UCS Manager implements a role-based and policy-based management focused on service profiles and templates. A service profile can be applied to any blade server to provision it with the characteristics required to support a specific software stack. A service profile allows server and network definitions to move within the management domain, enabling flexibility in the use of system resources.

Service profile templates are stored in the Cisco UCS 6200 Series Fabric Interconnects for reuse by server, network, and storage administrators. Service profile templates consist of server requirements and the associated LAN and SAN connectivity. Service profile templates allow different classes of resources to be defined and applied to a number of resources, each with its own unique identities assigned from predetermined pools.

The UCS Manager can deploy the service profile on any physical server at any time. When a service profile is deployed to a server, the Cisco UCS Manager automatically configures the server, adapters, Fabric Extenders, and Fabric Interconnects to match the configuration specified in the service profile. A service profile template parameterizes the UIDs that differentiate between server instances.

This automation of device configuration reduces the number of manual steps required to configure servers, Network Interface Cards (NICs), Host Bus Adapters (HBAs), and LAN and SAN switches.

• Programmatically Deploying Server Resources

Cisco UCS Manager provides centralized management capabilities, creates a unified management domain, and serves as the central nervous system of the Cisco UCS. Cisco UCS Manager is embedded device management software that manages the system from end-to-end as a single logical entity through an intuitive GUI, CLI, or XML API. Cisco UCS Manager implements role- and policy-based management using service profiles and templates. This construct improves IT productivity and business agility. Now infrastructure can be provisioned in minutes instead of days, shifting IT's focus from maintenance to strategic initiatives.

• Dynamic Provisioning

Cisco UCS resources are abstract in the sense that their identity, I/O configuration, MAC addresses and WWNs, firmware versions, BIOS boot order, and network attributes (including QoS settings, ACLs, pin groups, and threshold policies) all are programmable using a just-in-time deployment model. A service profile can be applied to any blade server to provision it with the characteristics required to support a specific software stack. A service profile allows server and network definitions to move within the management domain, enabling flexibility in the use of system resources. Service profile templates allow different classes of resources to be defined and applied to a number of resources, each with its own unique identities assigned from predetermined pools.
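The relationship between templates, identity pools, and service profiles described above can be sketched as a small data model. This is a conceptual illustration in Python only: the class names, fields, and sample addresses are hypothetical and do not reflect the actual Cisco UCS Manager object model.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class IdentityPool:
    """A predetermined pool of identities, such as MAC addresses or WWNs."""
    name: str
    free: List[str]

    def allocate(self) -> str:
        if not self.free:
            raise RuntimeError(f"pool {self.name} is exhausted")
        return self.free.pop(0)


@dataclass
class ServiceProfile:
    """The server identity, held separately from any physical blade."""
    name: str
    mac: str
    wwpn: str
    boot_order: Tuple[str, ...]
    blade: Optional[str] = None  # physical slot currently carrying this identity


@dataclass
class ServiceProfileTemplate:
    """Shared settings plus the pools that supply per-server identities."""
    boot_order: Tuple[str, ...]
    mac_pool: IdentityPool
    wwpn_pool: IdentityPool

    def instantiate(self, name: str) -> ServiceProfile:
        return ServiceProfile(
            name=name,
            mac=self.mac_pool.allocate(),
            wwpn=self.wwpn_pool.allocate(),
            boot_order=self.boot_order,
        )


def associate(profile: ServiceProfile, blade: str) -> None:
    """Bind the profile to a blade; the identity travels with the profile."""
    profile.blade = blade


# Illustrative values only.
template = ServiceProfileTemplate(
    boot_order=("san", "lan"),
    mac_pool=IdentityPool("mac", ["00:25:B5:00:00:01", "00:25:B5:00:00:02"]),
    wwpn_pool=IdentityPool("wwpn", ["20:00:00:25:B5:00:00:01", "20:00:00:25:B5:00:00:02"]),
)
node1 = template.instantiate("ovm-node1")
associate(node1, "chassis-1/blade-1")
associate(node1, "chassis-1/blade-5")  # migration: same MAC and WWPN, new hardware
```

The point of the sketch is that moving `node1` to another blade changes only the `blade` field. The MAC address, WWPN, and boot order stay with the profile, which is what allows SAN boot and network settings to follow the workload.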

Cisco Nexus 5548UP Switch

The Cisco Nexus 5548UP is a 1RU, 1 Gigabit and 10 Gigabit Ethernet switch offering up to 960 Gbps of throughput and scaling up to 48 ports. It offers 32 fixed unified ports, each usable as 1/10 Gigabit Ethernet/FCoE over enhanced Small Form-Factor Pluggable (SFP+) or as 1/2/4/8-Gbps native FC, and one expansion slot. The expansion slot accepts modules that provide Ethernet/FCoE ports, native FC ports, or a combination of both.

Figure 10 Cisco Nexus 5548UP switch

The Cisco Nexus 5548UP Switch delivers innovative architectural flexibility, infrastructure simplicity, and business agility, with support for networking standards. For traditional, virtualized, unified, and high-performance computing (HPC) environments, it offers a long list of IT and business advantages, including:

• Architectural Flexibility

– Unified ports that support traditional Ethernet, Fibre Channel (FC), and Fibre Channel over Ethernet (FCoE)

– Synchronizes system clocks with accuracy of less than one microsecond, based on IEEE 1588

– Supports secure encryption and authentication between two network devices, based on Cisco TrustSec IEEE 802.1AE

– Offers converged Fabric extensibility, based on emerging standard IEEE 802.1BR, with Fabric Extender (FEX) Technology portfolio, including Cisco Nexus 2000 FEX, Adapter FEX, and VM-FEX

• Infrastructure Simplicity

– Common high-density, high-performance, data-center-class, fixed-form-factor platform

– Consolidates LAN and storage

– Supports any transport over an Ethernet-based fabric, including Layer 2 and Layer 3 traffic

– Supports storage traffic, including iSCSI, NAS, FC, RoE, and IBoE

– Reduces management points with FEX Technology

• Business Agility

– Meets diverse data center deployments on one platform

– Provides rapid migration and transition for traditional and evolving technologies

– Offers performance and scalability to meet growing business needs

• Specifications at a Glance

– A 1-rack-unit, 1/10 Gigabit Ethernet switch

– 32 fixed Unified Ports on base chassis and one expansion slot totaling 48 ports

– The slot can support any of the three modules: Unified Ports, 1/2/4/8 native Fibre Channel, and Ethernet or FCoE

– Throughput of up to 960 Gbps
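The port count and throughput figures above are consistent with each other, as the following short Python check shows. It assumes that the throughput figure counts every port at 10 Gbps in both directions (full duplex), which is a common data sheet convention but is not stated in this document.

```python
FIXED_PORTS = 32        # fixed unified ports on the base chassis
TOTAL_PORTS = 48        # maximum with the expansion slot populated
PORT_SPEED_GBPS = 10    # line rate per port

# Ports contributed by the single expansion module.
expansion_ports = TOTAL_PORTS - FIXED_PORTS

# Assumption: aggregate throughput counts transmit and receive separately.
throughput_gbps = TOTAL_PORTS * PORT_SPEED_GBPS * 2

print(f"expansion ports: {expansion_ports}")
print(f"aggregate throughput: {throughput_gbps} Gbps")
```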

NetApp Storage Technologies and Benefits

The NetApp storage platform can handle different types of files and data from various sources, including user files, e-mail, and databases. Data ONTAP is the fundamental NetApp software platform that runs on all NetApp storage systems. Data ONTAP is a highly optimized, scalable operating system that supports mixed NAS and SAN environments and a range of protocols, including Fibre Channel, iSCSI, FCoE, NFS, and CIFS. The platform includes the Write Anywhere File Layout (WAFL®) file system and storage virtualization capabilities. By leveraging the Data ONTAP platform, the NetApp Unified Storage Architecture offers the flexibility to manage, support, and scale to different business environments by using a common knowledge base and tools. This architecture enables users to collect, distribute, and manage data from all locations and applications at the same time. This allows the investment to scale by standardizing processes, cutting management time, and increasing availability. Figure 11 shows the various NetApp Unified Storage Architecture platforms.

Figure 11 NetApp Unified Storage Architecture Platforms

The NetApp storage hardware platform used in this solution is the FAS3270A. The FAS3200 series is an excellent platform for primary and secondary storage for an Oracle Database 11g Release 2 Grid Infrastructure deployment.

A number of NetApp tools and enhancements are available to augment the storage platform. These tools assist in deployment, backup, recovery, replication, management, and data protection. This solution makes use of a subset of these tools and enhancements.

Storage Architecture

The storage design for any solution is a critical element that is typically responsible for a large percentage of the solution's overall cost, performance, and agility.

The basic architecture of the storage system's software is shown in the figure below. A collection of tightly coupled processing modules handles CIFS, FCP, FCoE, HTTP, iSCSI, and NFS requests. A request starts in the network driver and moves up through network protocol layers and the file system, eventually generating disk I/O, if necessary. When the file system finishes the request, it sends a reply back to the network. The administrative layer at the top supports a command line interface (CLI) similar to UNIX® that monitors and controls the modules below. In addition to the modules shown, a simple real-time kernel provides basic services such as process creation, memory allocation, message passing, and interrupt handling.

The networking layer is derived from the same Berkeley code used by most UNIX systems, with modifications made to communicate efficiently with the storage appliance's file system. The storage appliance provides transport-independent seamless data access using block- and file-level protocols from the same platform. The storage appliance provides block-level data access over an FC SAN fabric using FCP and over an IP-based Ethernet network using iSCSI. File access protocols such as NFS, CIFS, HTTP, or FTP provide file-level access over an IP-based Ethernet network.

Figure 12 Storage Architecture

RAID-DP

RAID-DP® is NetApp's implementation of double-parity RAID 6, which is an extension of NetApp's original Data ONTAP WAFL® RAID 4 design. Unlike other RAID technologies, RAID-DP provides the ability to achieve a higher level of data protection without any performance impact, while consuming a minimal amount of storage. For more information on RAID-DP, see: http://www.netapp.com/us/products/platform-os/raid-dp.html

Snapshot

NetApp Snapshot technology provides zero-cost, near-instantaneous backup and point-in-time copies of the volume or LUN by preserving the Data ONTAP WAFL consistency points.

Creating Snapshot copies incurs minimal performance effect because data is never moved, as it is with other copy-out technologies. The cost for Snapshot copies is at the rate of block-level changes and not 100% for each backup, as it is with mirror copies. Using Snapshot can result in savings in storage cost for backup and restore purposes and opens up a number of efficient data management possibilities.
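The difference between paying for changed blocks and paying for a full copy on every backup can be illustrated with a deliberately simplified model. The volume size, number of copies, and daily change rate below are hypothetical values chosen for illustration, and the model ignores metadata overhead and overlapping changes.

```python
def mirror_backup_space(volume_gb, copies):
    """Space used when every backup is a full copy of the volume."""
    return volume_gb * copies


def snapshot_backup_space(volume_gb, copies, change_rate):
    """Space used when each copy retains only the blocks changed since the last one."""
    return volume_gb * change_rate * copies


# Hypothetical example: 1 TB volume, 7 daily copies, 5% of blocks change per day.
volume_gb = 1000.0
copies = 7
change_rate = 0.05

full = mirror_backup_space(volume_gb, copies)
snap = snapshot_backup_space(volume_gb, copies, change_rate)
print(f"full copies: {full:.0f} GB, snapshot copies: {snap:.0f} GB")
```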

FlexVol

NetApp® FlexVol® storage-virtualization technology enables you to respond to changing storage needs fast, lower your overhead, avoid capital expenses, and reduce disruption and risk. FlexVol technology aggregates physical storage in virtual storage pools, so you can create and resize virtual volumes as your application needs change.

With FlexVol you can improve—even double—the utilization of your existing storage and save the expense of acquiring more disk space. In addition to increasing storage efficiency, you can improve I/O performance and reduce bottlenecks by distributing volumes across all the available disk drives.

NetApp Flash Cache

NetApp® Flash Cache uses controller-attached PCIe intelligent caching, instead of more hard disk drives (HDDs) or solid-state drives (SSDs), to optimize storage system performance.

Flash Cache speeds data access through intelligent caching of recently read user data or NetApp metadata. No setup or ongoing administration is needed, and operations can be tuned. Flash Cache works with all the NetApp storage protocols and software, enabling you to:

•

Increase I/O throughput by up to 75%

•

Use up to 75% fewer disk drives without compromising performance

•

Increase e-mail users by up to 67% without adding disk drives

For more information on Flash Cache, see: http://www.netapp.com/us/products/storage-systems/flash-cache/index.aspx

NetApp OnCommand System Manager 2.1

System Manager is a powerful management tool for NetApp storage that allows administrators to manage a single NetApp storage system as well as clusters, quickly and easily.

Some of the benefits of the System Manager Tool are:

•

Easy to install

•

Easy to manage from a Web browser

•

Does not require storage expertise

•

Increases storage productivity and response time

•

Cost effective

•

Leverages storage efficiency features such as thin provisioning and compression

Oracle VM 3.1.1

Oracle VM is a platform that provides a fully equipped environment with all the latest benefits of virtualization technology. Oracle VM enables you to deploy operating systems and application software within a supported virtualization environment. Oracle VM is a Xen-based hypervisor that runs at nearly bare-metal speeds.

Oracle VM Architecture

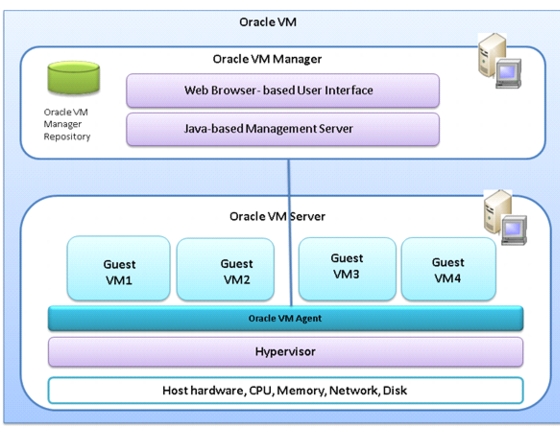

Figure 13 shows the Oracle VM architecture.

Figure 13 Oracle VM Architecture

The Oracle VM architecture has three main parts:

•

Oracle VM Manager

Provides the user interface, which is a standard ADF (Application Development Framework) web application, to manage Oracle VM Servers. Manages virtual machine lifecycle, including creating virtual machines from installation media or from a virtual machine template, deleting, powering off, uploading, deployment and live migration of virtual machines. Manages resources, including ISO files, virtual machine templates and sharable hard disks.

•

Oracle VM Server

A self-contained virtualization environment designed to provide a lightweight, secure, server-based platform for running virtual machines. Oracle VM Server is based upon an updated version of the underlying Xen hypervisor technology, and includes Oracle VM Agent.

•

Oracle VM Agent

Installed with Oracle VM Server. It communicates with Oracle VM Manager for management of virtual machines.

Advantage of Using Oracle VM for Oracle RAC Database

Oracle's virtualization technologies are an excellent delivery vehicle for Independent Software Vendors (ISVs) looking for a simple, easy-to-install and easy-to-support application delivery solution.

By combining Oracle VM, which provides a software-based virtualization infrastructure, with the market-leading high availability solution Oracle Real Application Clusters (RAC), Oracle now offers a highly available, grid-ready virtualization solution for your data center, combining all the benefits of a fully virtualized environment.

The combination of Oracle VM and Oracle RAC enables better server consolidation (RAC databases with underutilized CPU resources or peaky CPU utilization can often benefit from consolidation with other workloads using server virtualization), sub-capacity licensing, and rapid provisioning. Oracle RAC on Oracle VM also supports the creation of non-production virtual clusters on a single physical server for product demos and test/dev environments. This deployment combination permits dynamic changes to pre-configured database resources for agile responses to changing service level requirements common in consolidated environments.

Oracle VM is the only software-based virtualization solution that is fully supported and certified for Oracle Real Application Clusters.

There are several reasons why you may want to run Oracle RAC in an Oracle VM environment. The more common reasons are:

•

Server consolidation

Oracle RAC databases or Oracle RAC One Node databases with under utilized CPU resources or variable CPU utilization can often benefit from consolidation with other workloads using server virtualization. A typical use case for this scenario would be the consolidation of several Oracle databases (Oracle RAC, Oracle RAC One Node or Oracle single instance databases) into a single Oracle RAC database or multiple Oracle RAC databases where the hosting Oracle VM guests have pre-defined resource limits configured for each VM guest.

•

Sub-capacity licensing

The current Oracle licensing model requires the Oracle RAC database to be licensed for all CPUs on each server in the cluster. Sometimes customers wish to use only a subset of the CPUs on the server for a particular Oracle RAC database. Oracle VM can be configured in such way that it is recognized as a hard partition. Hard partitions allow customers to only license those CPUs used by the partition instead of licensing all CPUs on the physical server. More information on sub-capacity licensing using hard partitioning can be found in the Oracle partitioning paper. For more information on using hard partitioning with Oracle VM refer to the "Hard Partitioning with Oracle VM" white paper.

•

Create a virtual cluster

Oracle VM enables the creation of a virtual cluster on a single physical server. This use case is particularly interesting for product demos, educational settings, and test environments. This configuration should never be used to run production Oracle RAC environments. The following are valid deployments for this use case:

–

Test and development cluster

–

Demonstration cluster

–

Education cluster

•

Rapid provisioning

The provisioning time of a new application consists of the server (physical or virtual) deployment time, and the software install and configuration time. Oracle VM can help reduce the deployment time for both of these components. Oracle VM supports the ability to create deployment templates. These templates can then be used to rapidly provision new (Oracle RAC) systems.

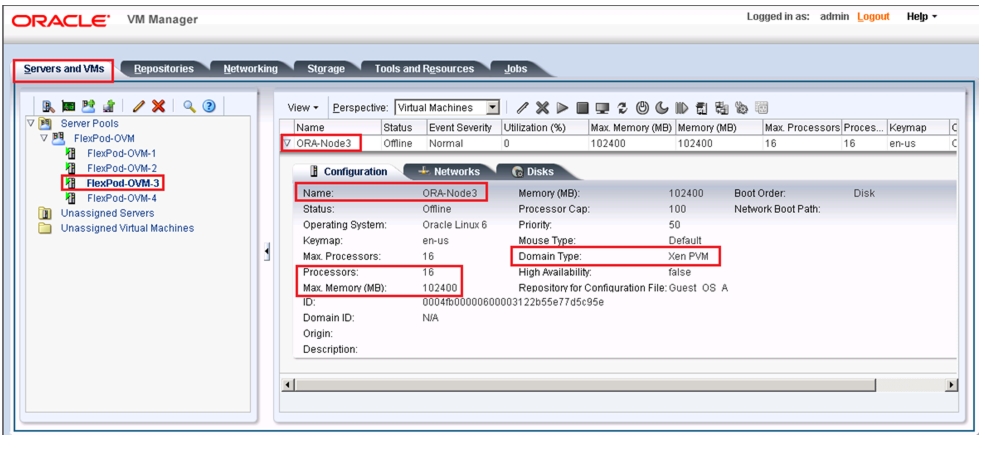

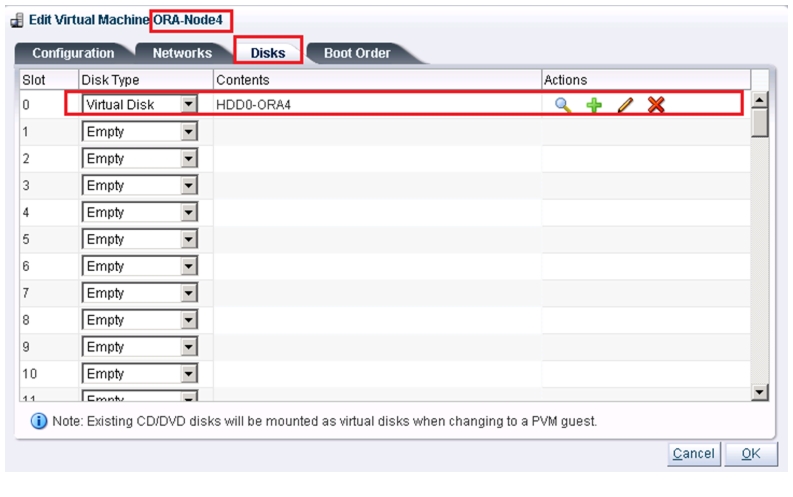

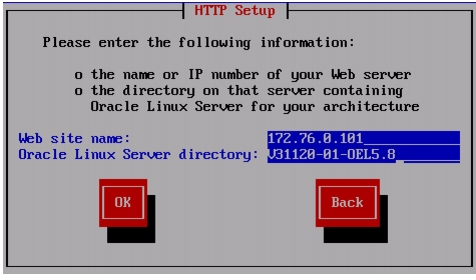

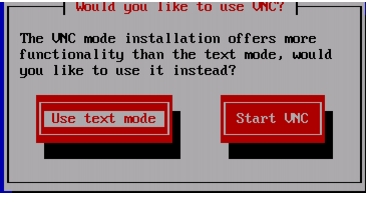

For Oracle RAC, currently only para-virtualized VM (PVM) mode is supported. Some of the advantages of using para-virtualized VM mode are described in the next sub-section.

Para-virtualized VM (PVM)

Guest virtual machines running on Oracle VM Server should be configured in para-virtualized mode. In this mode, the kernel of the guest operating system is modified so that it is aware it is running on a hypervisor instead of on the bare metal hardware. As a result, I/O actions and system clock timers in particular are handled more efficiently, as compared with non-para-virtualized systems where I/O hardware and timers have to be emulated in the operating system. Oracle VM supports PV kernels for Oracle Linux and Red Hat Enterprise Linux, offering better performance and scalability.

Oracle Database 11g Release 2 RAC

Oracle Database 11g Release 2 provides the foundation for IT to successfully deliver more information with higher quality of service, reduce the risk of change within IT, and make more efficient use of IT budgets.

Oracle Database 11g Release 2 Enterprise Edition provides industry-leading performance, scalability, security, and reliability on a choice of clustered or single servers with a wide range of options to meet user needs. Cloud computing relieves users from concerns about where data resides and which computer processes the requests. Users request information or computation and have it delivered, as much as they want, whenever they want it. For a DBA, the cloud is about resource allocation, information sharing, and high availability. Oracle Database with Real Application Clusters provides the infrastructure for your database cloud. Oracle Automatic Storage Management provides the infrastructure for a storage cloud. Oracle Enterprise Manager Cloud Control provides you with holistic management of your cloud.

Oracle Database 11g Direct NFS Client

Direct NFS client is an Oracle-developed, integrated, and optimized client that runs in user space rather than within the operating system kernel. This architecture provides for enhanced scalability and performance over traditional NFS v3 clients. Unlike traditional NFS implementations, Oracle supports asynchronous I/O across all operating system environments with Direct NFS client. In addition, performance and scalability are dramatically improved with its automatic link aggregation feature. This allows the client to scale across as many as four individual network pathways with the added benefit of improved resiliency when network connectivity is occasionally compromised. It also allows Direct NFS client to achieve near block-level performance. For more information on Direct NFS Client comparison to block protocols, see: http://media.netapp.com/documents/tr-3700.pdf.
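Direct NFS client reads its path definitions from an oranfstab file. As a minimal sketch, the helper below renders one server entry in that file's `server` / `local` / `path` / `export ... mount` layout and rejects more than the four paths mentioned above. The function name, server name, IP addresses, export, and mount point are all hypothetical and are not values from this solution.

```python
MAX_DNFS_PATHS = 4  # the text above cites up to four network paths


def render_oranfstab(server, paths, exports):
    """Return oranfstab-style text for one NFS server.

    paths   -- list of (local_ip, server_ip) tuples, one per network path
    exports -- list of (export_path, mount_point) tuples
    """
    if not 1 <= len(paths) <= MAX_DNFS_PATHS:
        raise ValueError(f"expected 1 to {MAX_DNFS_PATHS} paths, got {len(paths)}")
    lines = [f"server: {server}"]
    for local_ip, server_ip in paths:
        lines.append(f"local: {local_ip}")
        lines.append(f"path: {server_ip}")
    for export_path, mount_point in exports:
        lines.append(f"export: {export_path} mount: {mount_point}")
    return "\n".join(lines)


# Hypothetical two-path entry; addresses come from documentation ranges.
example = render_oranfstab(
    "netapp-storage1",
    [("192.0.2.101", "192.0.2.1"), ("198.51.100.101", "198.51.100.1")],
    [("/vol/oradata", "/u01/oradata")],
)
print(example)
```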

Design Topology

This section presents physical and logical high-level design considerations for Cisco UCS networking and computing on NetApp storage for Oracle Database 11g Release 2 RAC deployments.

Hardware and Software used for this Solution

Table 1 shows the software and hardware used for the Oracle Database 11g Release 2 Grid Infrastructure with the Oracle RAC option deployment.

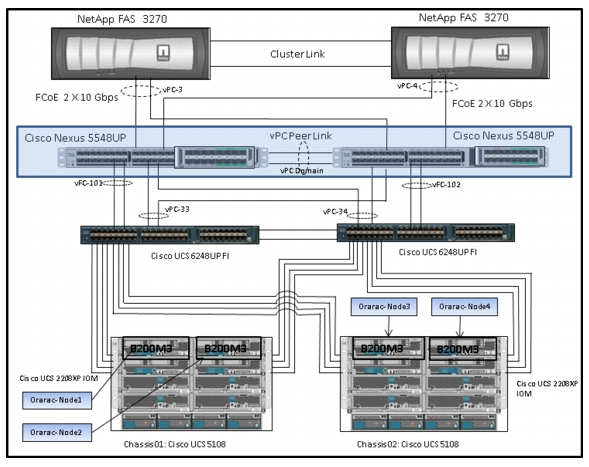

Cisco UCS Networking and NetApp NFS Storage Topology

This section explains Cisco UCS networking and computing design considerations when deploying Oracle Database 11g Release 2 RAC in an NFS storage design. In this design, the NFS traffic is isolated from the regular management and application data network using the same Cisco UCS infrastructure by defining logical VLAN networks to provide better data security. Figure 14 presents a detailed view of the physical topology and some of the main components of Cisco UCS in an NFS network design.

Figure 14 Cisco UCS Networking and NFS Storage Network Topology

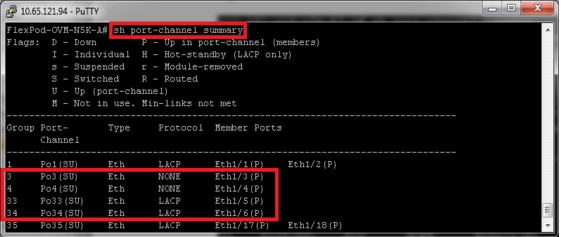

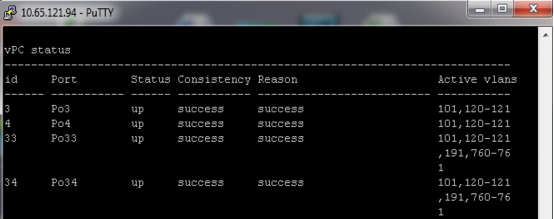

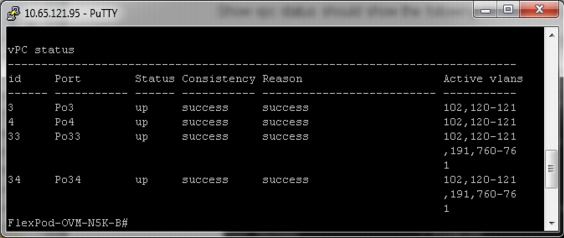

Table 2 vPC Details

| Network | vPC ID | VLAN IDs |
|---|---|---|
| Public | 33 | 760, 761, 191, 120, 121 |
| Private | 34 | 760, 761, 191, 120, 121 |
| NetApp-Storage1 | 3 | 120, 121 |
| NetApp-Storage2 | 4 | 120, 121 |

As shown in Figure 14, a pair of Cisco UCS 6248UP fabric interconnects carries both storage and network traffic from the blades with the help of the Cisco Nexus 5548UP switches. The 10-Gbps FCoE traffic leaves the UCS fabrics through the Nexus 5548 switches to the NetApp array. As larger enterprises adopt virtualization, their I/O requirements grow considerably, and FCoE boot is a better solution for handling these higher I/O requirements.

Both the fabric interconnect and the Cisco Nexus 5548UP switch are clustered with the peer link between them to provide high availability. Two virtual Port Channels (vPCs) are configured to provide public network, private network and storage access paths for the blades to northbound switches. Each vPC has VLANs created for application network data, NFS storage data, and management data paths. For more information about vPC configuration on the Cisco Nexus 5548UP Switch, see:

As illustrated in Figure 14, 8 (4 per chassis) links go to Fabric Interconnect A (ports 1 through 8). Similarly, 8 links go to Fabric Interconnect B. Fabric Interconnect A links are used for Oracle Public network and NFS Storage Network traffic and Fabric Interconnect B links are used for Oracle private interconnect traffic and NFS Storage network traffic.

Note

For an Oracle RAC configuration on UCS, we recommend keeping all private interconnects local on a single fabric interconnect. In that case, the private traffic stays local to that fabric interconnect and is not routed through the northbound network switch. In other words, all inter-blade (or Oracle RAC node private) communication is resolved locally at the fabric interconnect, which significantly reduces latency for Oracle Cache Fusion traffic.

Cisco UCS Manager Configuration Overview

High Level Steps for Cisco UCS Configuration

Given below are high level steps involved for a Cisco UCS configuration:

1.

Configuring Fabric Interconnects for Chassis and Blade Discovery

a.

Configure Global Policies

b.

Configuring Server Ports

2.

Configuring LAN and SAN on UCS Manager

a.

Configure and Enable Ethernet LAN uplink Ports

b.

Configure and Enable FC SAN uplink Ports

c.

Configure VLAN

d.

Configure VSAN

3.

Configuring UUID, MAC, WWNN and WWPN Pool

a.

UUID Pool Creation

b.

IP Pool and MAC Pool Creation

c.

WWNN Pool and WWPN Pool Creation

4.

Configuring vNIC and vHBA Template

a.

Create vNIC templates

b.

Create Public vNIC template

c.

Create Private vNIC template

d.

Create Storage vNIC template

e.

Create HBA templates

5.

Configuring Ethernet Uplink Port Channels

6.

Create Server Boot Policy for SAN Boot

Details for each step are discussed in the following sections.

Configuring Fabric Interconnects for Blade Discovery

Cisco UCS 6248UP Fabric Interconnects are configured for redundancy, which provides resiliency in case of failures. The first step is to establish connectivity between the blades and fabric interconnects.

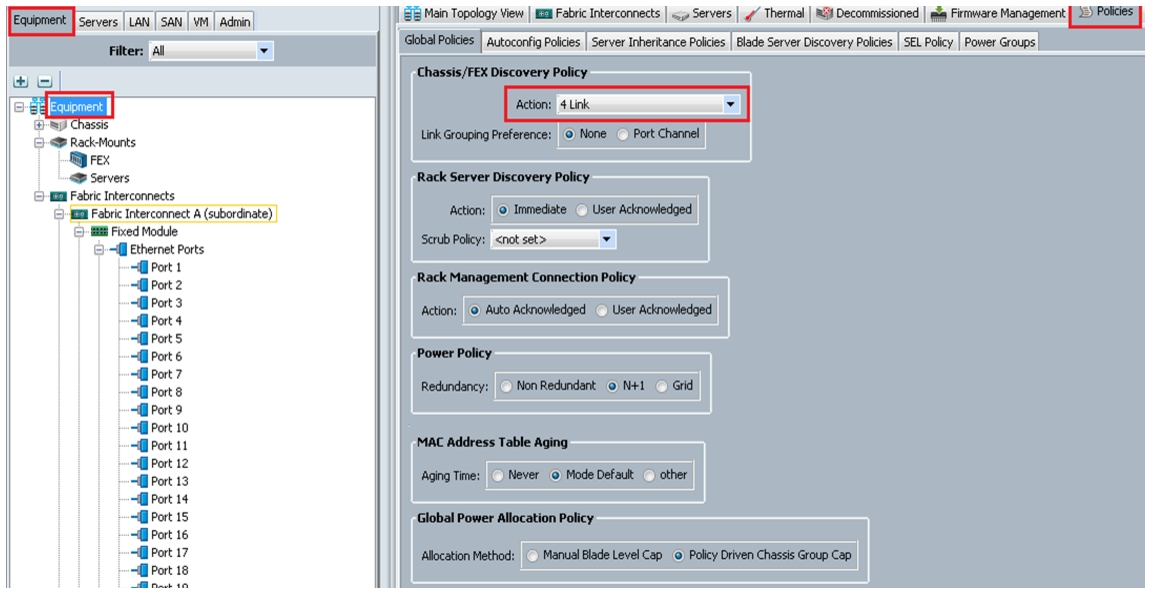

Configure Global Policies

To configure global policies, follow these steps:

1.

Log into UCS Manager.

2.

Click the Equipment tab in the navigation pane.

3.

Choose Equipment > Policies > Global Policies.

4.

In the Chassis/FEX Discovery Policy area, select 4-link from the Action drop-down list.

Figure 15 Configure Global Policy

Configuring Server Ports

To configure server ports, follow these steps:

1.

Log into UCS Manager.

2.

Click the Equipment tab in the navigation pane.

3.

Choose Equipment > Fabric Interconnects > Fabric Interconnect A > Fixed Module > Ethernet Ports.

4.

Select the desired number of ports by using the CTRL key and mouse click combination.

5.

Right-click and choose Configure as Server Port as shown in Figure 16.

Figure 16 Configuring Ethernet Ports as Server Ports

Figure 17 Configured Server Ports

Configuring LAN and SAN on UCS Manager

Perform LAN and SAN configuration steps in UCS Manager as shown in the figures below.

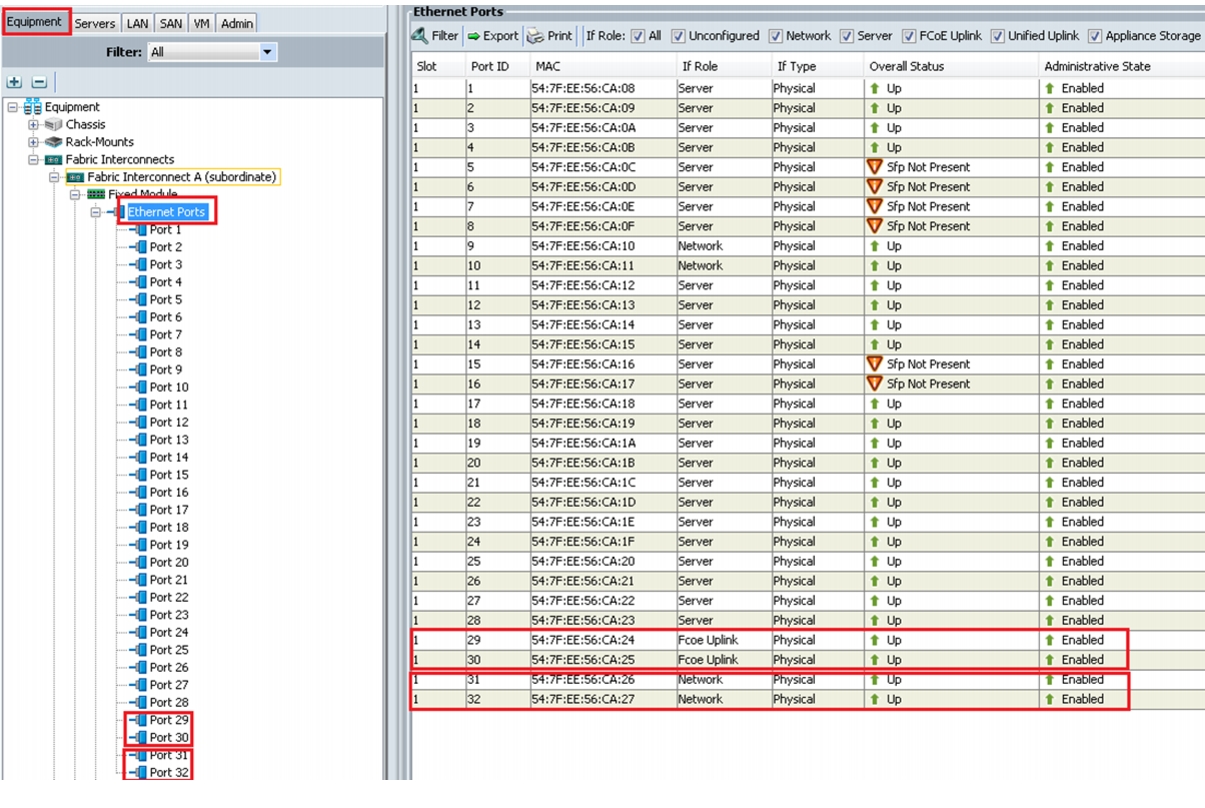

Configure and Enable Ethernet LAN Uplink Ports

To configure and enable Ethernet LAN uplink ports, follow these steps:

1.

Log into UCS Manager.

2.

Click the Equipment tab in the navigation pane.

3.

Choose Equipment > Fabric Interconnects > Fabric Interconnect A > Fixed Module > Ethernet Ports.

4.

Select the desired number of ports by using the CTRL key and mouse click combination.

5.

Right-click and choose Configure as Uplink Port as shown in Figure 18.

Figure 18 Configure Ethernet LAN Uplink Ports

As shown in Figure 18, we have selected Port 31 and 32 on Fabric interconnect A and configured them as Ethernet uplink ports. Repeat the same step on Fabric interconnect B to configure Port 31 and 32 as Ethernet uplink ports. We have selected Port 29 and Port 30 on both the fabrics and configured them as FCoE uplink ports for FCoE boot.

Note

You will use these ports to create port channels in later sections.

Important Oracle RAC Best Practices and Recommendations for VLANs and vNIC Configuration

•

For Direct NFS clients running on Linux, the best practice is to always configure multiple paths in separate subnets. If multiple paths are configured in the same subnet, the operating system invariably picks the first available path from the routing table. All the traffic then flows through this path, and load balancing and scaling do not work as expected. Please refer to Oracle metalink note 822481.1 for more details.

For this configuration, we have created VLAN 120 and VLAN 121 for storage access, and VSAN 101 and VSAN 102 for FCoE boot.

•

Oracle Grid Infrastructure can activate a maximum of four private network adapters for availability and bandwidth requirements. If you want to configure HAIP for Grid Infrastructure, you will need to create additional vNICs. We strongly recommend using a separate VLAN for each private vNIC. For Cisco UCS, a single UCS 10GE private vNIC configured with failover does not require HAIP configuration from a bandwidth and availability perspective. As a general best practice, it is a good idea to localize all the private interconnect traffic to a single fabric interconnect. For more information on Oracle HAIP, please refer to Oracle metalink note 1210883.1.
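The separate-subnet recommendation for Direct NFS paths can be checked before the interfaces are configured. The sketch below uses Python's standard `ipaddress` module; the function name and the addresses are hypothetical and only illustrate one storage interface per storage VLAN.

```python
import ipaddress
from itertools import combinations


def paths_in_distinct_subnets(interfaces):
    """Return True if no two interfaces (CIDR strings) share or overlap a subnet."""
    networks = [ipaddress.ip_interface(i).network for i in interfaces]
    return not any(a.overlaps(b) for a, b in combinations(networks, 2))


# Hypothetical addressing: one interface per storage VLAN.
good = ["192.168.120.101/24", "192.168.121.101/24"]
# Both paths in the same subnet: the OS would prefer a single route.
bad = ["192.168.120.101/24", "192.168.120.102/24"]

print(paths_in_distinct_subnets(good), paths_in_distinct_subnets(bad))
```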

Note

After deciding on the VLANs and vNICs, you can configure the VLANs for this setup.

Configure VLAN

To configure VLAN, follow these steps:

1.

Log into Cisco UCS Manager.

2.

Click the LAN tab in the navigation pane.

3.

Choose LAN > LAN Cloud > VLAN.

4.

Right-click and choose Create VLANs.

In this solution, we need to create five VLANs:

•

One for private (VLAN 191)

•

One for public network (VLAN 760)

•

Two for storage traffic (VLAN 120 and 121)

•

One for live migration (VLAN 761).

Note

These five VLANs will be used in the vNIC templates.

Figure 19 Create VLAN for Public Network

In Figure 19, we have highlighted VLAN 760 creation for public network. It is also very important that you create all VLANs as global across both fabric interconnects. This way, VLAN identity is maintained across the fabric interconnects in case of NIC failover.

Create VLANs for public, storage and live migration. If you are using the Oracle HAIP feature, you may have to configure additional VLANs to be associated with additional vNICs as well.

Here is the summary of VLANs once you complete VLAN creation.

•

VLAN ID 760 for public interfaces.

•

VLAN ID 191 for Oracle RAC private interconnect interfaces.

•

VLAN ID 120 and VLAN 121 for storage access.

•

VLAN ID 761 for live migration.
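One way to cross-check the VLAN summary against the vPC trunk membership listed in Table 2 is sketched below. The dictionary layout and the function name are illustrative assumptions; only the VLAN and vPC numbers come from this document.

```python
# VLAN IDs and their roles, as summarized above.
VLAN_PLAN = {
    760: "public",
    191: "Oracle RAC private interconnect",
    120: "storage access",
    121: "storage access",
    761: "live migration",
}

# VLANs carried by each vPC, as listed in Table 2.
VPC_VLANS = {
    "Public (vPC 33)": [760, 761, 191, 120, 121],
    "Private (vPC 34)": [760, 761, 191, 120, 121],
    "NetApp-Storage1 (vPC 3)": [120, 121],
    "NetApp-Storage2 (vPC 4)": [120, 121],
}


def undefined_vlans(vlan_plan, vpc_vlans):
    """Map each vPC to the VLAN IDs it carries that are missing from the plan."""
    missing = {}
    for vpc, vlans in vpc_vlans.items():
        unknown = sorted(set(vlans) - set(vlan_plan))
        if unknown:
            missing[vpc] = unknown
    return missing


print(undefined_vlans(VLAN_PLAN, VPC_VLANS))
```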

Note

Even though private VLAN traffic stays local within the UCS domain during normal operating conditions, it is necessary to configure entries for these private VLANs in the northbound network switch. This allows the switch to route interconnect traffic appropriately in case of partial link failures. These scenarios and traffic routing are discussed in detail in later sections.

Figure 20 summarizes all the VLANs for Public and Private network and Storage access.

Figure 20 VLAN Summary

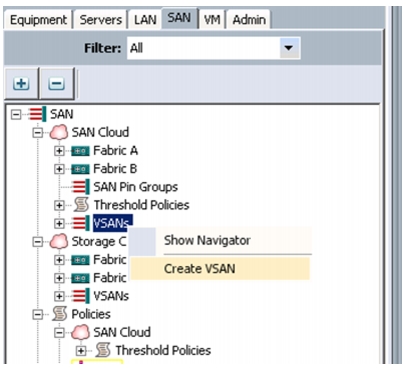

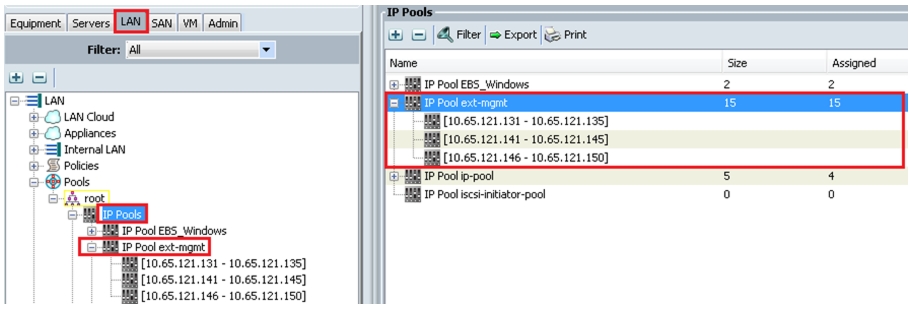

Configure VSAN

To configure VSAN, follow these steps:

1.

Log into Cisco UCS Manager.

2.

Click the SAN tab in the navigation pane.

3.

Choose SAN > SAN Cloud > VSANs.

4.

Right-click and choose Create VSAN. See Figure 21.

Note

In this study we created VSAN 101 and VSAN 102 for SAN Boot.

Figure 21 Configuring VSAN in UCS Manager

Figure 22 Creating VSAN for Fabric A

We have created a VSAN on both the Fabrics. For the VSAN on Fabric A the VSAN ID is 101 and FCoE VLAN ID is 101 and similarly, for Fabric B the VSAN ID is 102 and the FCoE VLAN ID is 102.
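A small sketch of the VSAN-to-FCoE-VLAN mapping follows. It assumes, as is commonly recommended, that FCoE VLAN IDs should not reuse any Ethernet VLAN ID in the same domain. The data layout and function name are illustrative; the IDs are the ones used in this setup.

```python
# Ethernet VLANs created earlier in this setup.
ETHERNET_VLANS = {760, 761, 191, 120, 121}

# Fabric -> (VSAN ID, FCoE VLAN ID), as created in this setup.
FCOE_VSANS = {"A": (101, 101), "B": (102, 102)}


def fcoe_vlan_conflicts(ethernet_vlans, fcoe_vsans):
    """Return the FCoE VLAN IDs that are also used as Ethernet VLANs."""
    fcoe_vlans = {vlan for _vsan, vlan in fcoe_vsans.values()}
    return sorted(fcoe_vlans & set(ethernet_vlans))


conflicts = fcoe_vlan_conflicts(ETHERNET_VLANS, FCOE_VSANS)
print(conflicts)
```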

Note

It is mandatory to specify an FCoE VLAN ID even if FCoE traffic for SAN storage is not used.

Figure 23 shows the created VSANs in UCS Manager.

Figure 23 VSAN Summary

Configure Pools

After VLANs and VSAN are created, configure pools for UUID, MAC Addresses, Management IP and WWN.

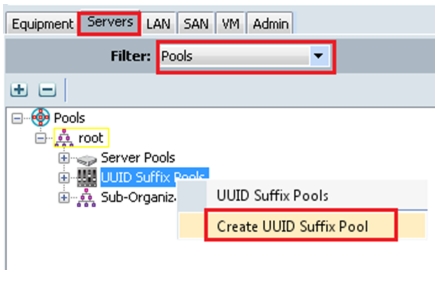

UUID Pool Creation

To create UUID pools, follow these steps:

1.

Log into Cisco UCS Manager.

2.

Click the Servers tab in the navigation pane.

3.

Choose Servers > Pools > UUID Suffix Pools.

4.

Right-click and choose Create UUID Suffix Pool, to create a new pool. See Figure 24.

Figure 24 Create UUID Pools

As shown in Figure 25, we have created Flexpod-OVM-UUID.

Figure 25 UUID Pool Summary

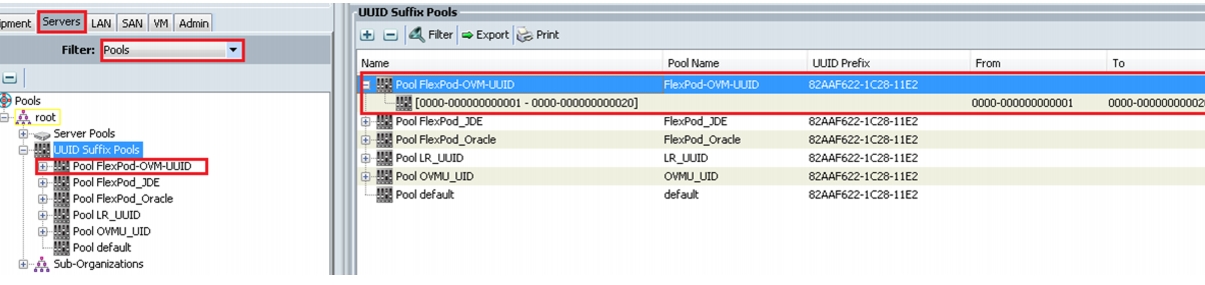

IP Pool and MAC Pool Creation

To create IP and MAC pools, follow these steps:

1.

Log into Cisco UCS Manager.

2.

Click the LAN tab in the navigation pane.

3.

Choose LAN > Pools > IP Pools.

4.

Right-click and choose Create IP Pool Ext-mgmt.

Figure 26 shows the creation of ext-mgmt IP pool.

Figure 26 Create IP Pool

To create MAC pools, follow these steps:

1.

Log into Cisco UCS Manager.

2.

Click the LAN tab in the navigation pane.

3.

Choose LAN > Pools > MAC Pools.

4.

Right-click and choose Create MAC Pools.

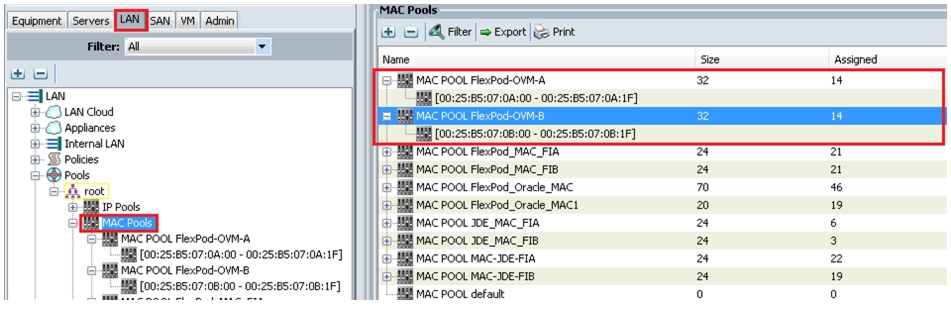

Figure 27 shows the creation of all the vNIC MAC pool addresses for Flexpod-OVM-A and Flexpod-OVM-B.

Figure 27 Create MAC Pool

Note

The IP pools will be used for console management, while MAC addresses will be used for the vNICs.
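A MAC pool is a contiguous block of addresses defined by a starting address and a size. The sketch below expands such a block so that the ranges for the two fabrics can be checked for overlap. The starting addresses (using the 00:25:B5 prefix commonly seen in UCS pools) and the block size are hypothetical; only the pool names come from this setup.

```python
def mac_block(first_mac, size):
    """Return `size` consecutive MAC addresses starting at `first_mac`."""
    start = int(first_mac.replace(":", ""), 16)
    if size < 1 or start + size - 1 > 0xFFFFFFFFFFFF:
        raise ValueError("block does not fit in the 48-bit MAC address space")
    block = []
    for offset in range(size):
        digits = f"{start + offset:012X}"
        block.append(":".join(digits[i:i + 2] for i in range(0, 12, 2)))
    return block


# Hypothetical blocks for the Flexpod-OVM-A and Flexpod-OVM-B pools.
pool_a = mac_block("00:25:B5:0A:00:00", 4)
pool_b = mac_block("00:25:B5:0B:00:00", 4)

print(pool_a)
print(set(pool_a).isdisjoint(pool_b))
```

Encoding the fabric in one byte of the address (0A and 0B here) is a convention that makes it easier to tell from a MAC address which fabric a vNIC belongs to.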

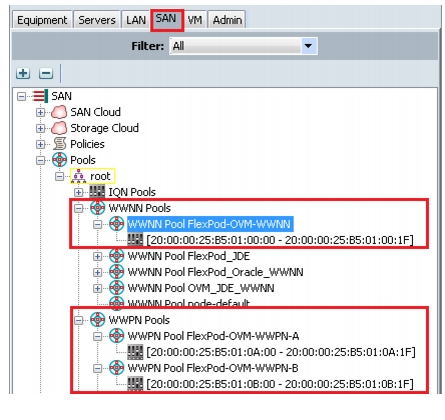

WWNN Pool and WWPN Pool Creation

To create WWNN and WWPN pools, follow these steps:

1.

Log into UCS Manager.

2.

Click the SAN tab in the navigation pane.

3.

Choose SAN > Pools > WWNN Pools.

4.

Right-click and choose Create WWNN Pools.

5.

Choose SAN > Pools > WWPN Pools.

6.

Right-click and choose Create WWPN Pools.

Note

The WWNN and WWPN entries will be used for Boot from SAN configuration.

Figure 28 shows the creation of Flexpod-OVM WWNN, and Flexpod-OVM-A WWPN and Flexpod-OVM-B WWPN.

Figure 28 Create WWNN and WWPN Pool

Note

This completes pool creation for this setup. Next, you need to create vNIC and vHBA templates.

Set Jumbo Frames in Both the Cisco UCS Fabrics

To configure jumbo frames and enable quality of service in the Cisco UCS Fabric, follow these steps:

1.

Log into Cisco UCS Manager.

2.

Click the LAN tab in the navigation pane.

3.

Choose LAN > LAN Cloud > QoS System Class.

4.

In the right pane, click the General tab.

5.

On the Best Effort row, enter 9216 in the box under the MTU column.

6.

Click Save Changes.

7.

Click OK.

Figure 29 Setting Jumbo Frame

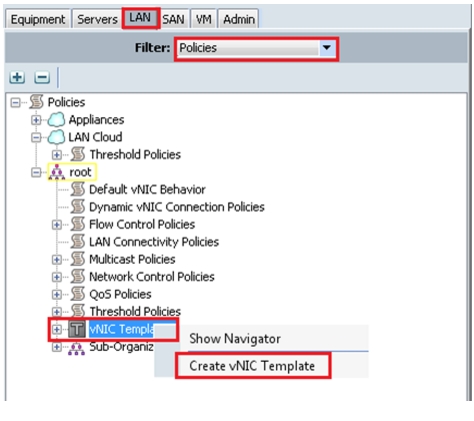
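Jumbo frames only help if every hop between the host and the storage controller accepts them. The sketch below finds the largest MTU usable end to end. Only the 9216 value for the UCS Best Effort class comes from the steps above; the other hop names and values, and the host MTU of 9000, are hypothetical.

```python
def max_usable_mtu(hop_mtus):
    """Return the largest frame size that every hop on the path can carry."""
    return min(hop_mtus.values())


# MTU configured at each hop; only the first value is taken from this section.
hops = {
    "ucs_best_effort_class": 9216,
    "nexus_5548_uplink": 9216,      # hypothetical
    "storage_interface": 9000,      # hypothetical
}
host_mtu = 9000                     # hypothetical guest/host interface MTU

usable = max_usable_mtu(hops)
print(usable, host_mtu <= usable)
```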

Configure vNIC and vHBA Templates

Create vNIC Templates

To create vNIC templates, follow these steps:

1.

Log into Cisco UCS Manager.

2.

Choose the LAN tab in the navigation pane.

3.

Choose LAN > Policies > vNIC Templates.

4.

Right-click and choose Create vNIC Template. See Figure 30.

Figure 30 Create vNIC Template

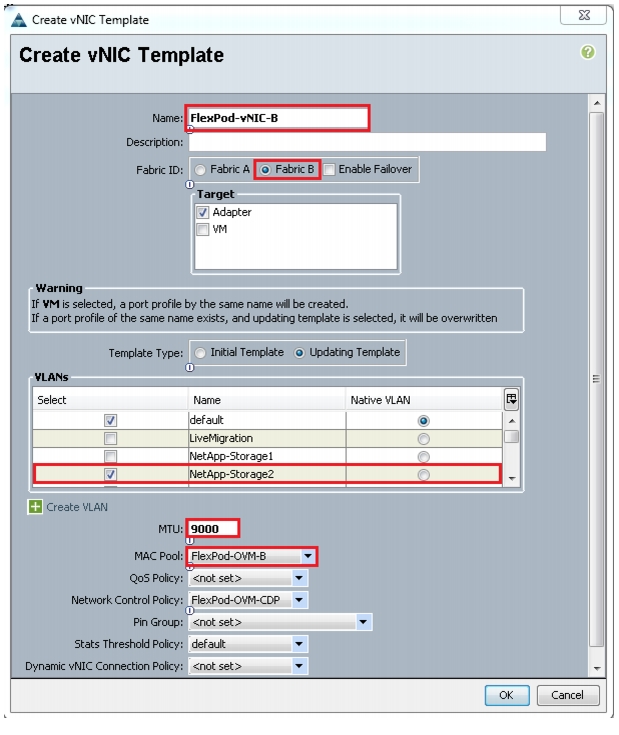

Figure 31 and Figure 32 show vNIC templates for Fabric A and Fabric B.

Figure 31 vNIC Template for Fabric A

Figure 32 vNIC Template for Fabric B

Figure 33 shows the vNIC template summary.

Figure 33 vNIC Template Summary

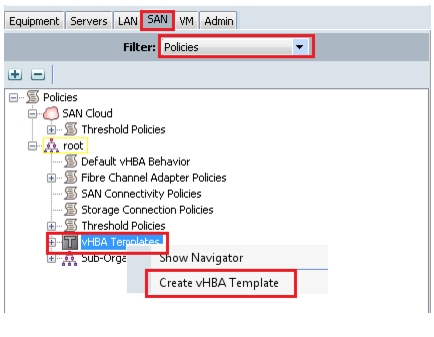

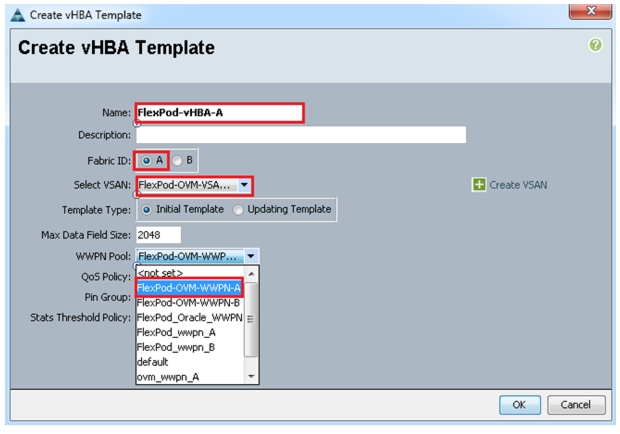

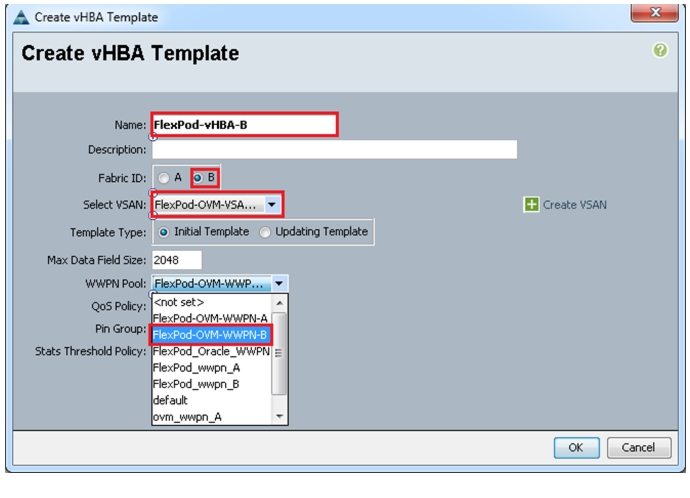

Create vHBA templates

To create vHBA templates, follow these steps:

1.

Log into Cisco UCS Manager.

2.

Click the SAN tab in the navigation pane.

3.

Choose SAN > Policies > vHBA Templates.

4.

Right-click and choose Create vHBA Template. See Figure 34.

Figure 34 Create vHBA Templates

Figure 35 vHBA Template for Fabric A

Figure 36 vHBA Template for Fabric B

Figure 35 and Figure 36 show two vHBA templates created, HBA Template Flexpod-vHBA-A, and HBA Template Flexpod-vHBA-B.

Next, we will configure Ethernet uplink port channels.

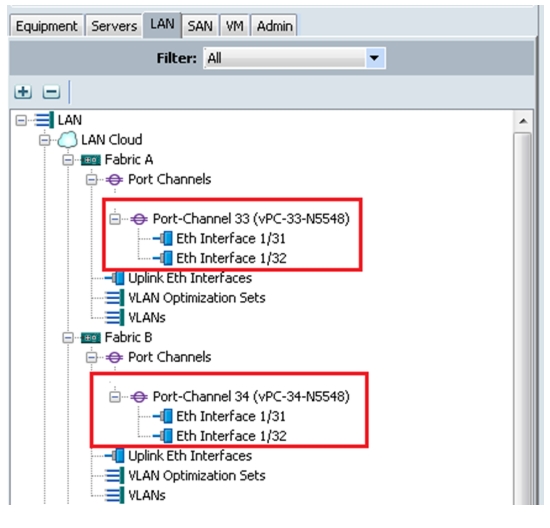

Configure Ethernet Uplink Port Channels

To configure Ethernet uplink port channels, follow these steps:

1.

Log into Cisco UCS Manager.

2.

Choose the LAN tab in the navigation pane.

3.

Choose LAN > LAN Cloud > Fabric A > Port Channels.

4.

Right-click and choose Create Port-Channel.

5.

Select the desired Ethernet Uplink ports configured earlier for Channel A.

6.

Choose LAN > LAN Cloud > Fabric B > Port Channels.

7.

Right-click and choose Create Port-Channel.

8.

Select the desired Ethernet Uplink ports configured earlier for Channel B.

Note

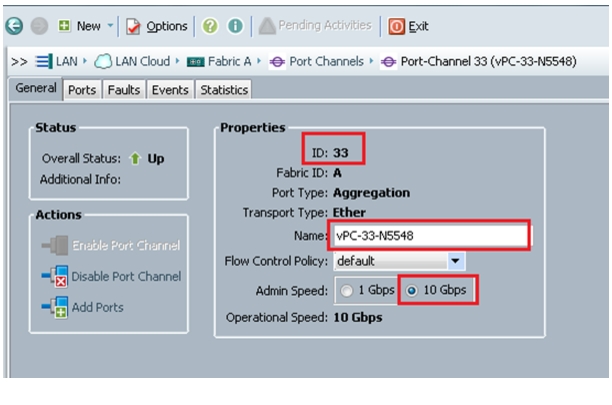

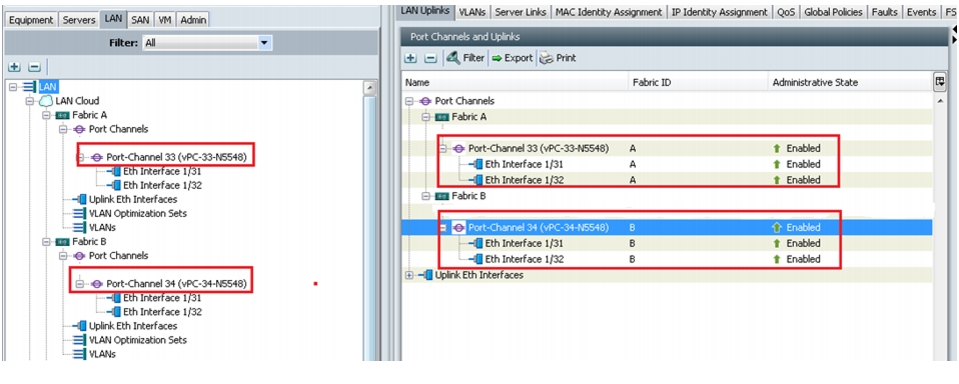

In the current setup, we have used ports 31 and 32 on Fabric A and configured as port channel 33. Similarly, ports 31 and 32 on Fabric B are configured to create port channel 34.

Figure 37 and Figure 38 show the configuration of port channels for Fabric A and Fabric B.

Figure 37 Configuring Port Channels

Figure 38 Fabric A Ethernet Port-Channel Details

Figure 39 shows the configured port-channels on Fabric A and Fabric B.

Figure 39 Port-Channels on Fabric A and Fabric B

Once the above preparation steps are complete, we are ready to create a service profile template from which the service profiles can be easily derived.

Create Local Disk Configuration Policy (Optional)

A local disk configuration for the Cisco UCS environment is necessary if the servers in the environment do not have a local disk.

Note

This policy should not be used on servers that contain local disks.

To create a local disk configuration policy, follow these steps:

1.

Log into Cisco UCS Manager.

2.

Click the Servers tab in the navigation pane.

3.

Choose Policies > root.

4.

Right-click Local Disk Config Policies.

5.

Choose Create Local Disk Configuration Policy.

6.

Enter SAN-Boot as the local disk configuration policy name.

7.

Change the mode to No Local Storage.

8.

Click OK to create the local disk configuration policy. See Figure 40.

Figure 40 Creating Local Disk Configuration Policy

Create FCoE Boot Policies

This procedure applies to a Cisco UCS environment in which the storage FCoE ports are configured in the following ways:

•

The FCoE ports 5a on storage controllers 1 and 2 are connected to the Cisco Nexus 5548 switch A.

•

The FCoE ports 5b on storage controllers 1 and 2 are connected to the Cisco Nexus 5548 switch B.

Two boot policies are configured in this procedure:

•

The first configures the primary target to be FCoE port 5a on storage controller 1.

•

The second configures the primary target to be FCoE port 5b on storage controller 1.
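The target layout that the procedure builds for the first policy, Boot-FCoE-OVM-A, can be summarized as a small data structure. This is an illustrative sketch rather than a UCS Manager API: the class and function names are hypothetical, and WWPNs are deliberately omitted because they must be read from the storage controllers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BootTarget:
    role: str        # "primary" or "secondary"
    controller: int  # storage controller number
    port: str        # FCoE port on that controller
    lun: int = 0     # boot target LUN


# vHBA name -> boot targets, in the order the procedure adds them.
BOOT_FCOE_OVM_A = {
    "Fabric-A": [BootTarget("primary", 1, "5a"), BootTarget("secondary", 2, "5a")],
    "Fabric-B": [BootTarget("primary", 1, "5b"), BootTarget("secondary", 2, "5b")],
}

# Ports 5a connect to Nexus switch A and ports 5b to switch B.
PORT_FOR_VHBA = {"Fabric-A": "5a", "Fabric-B": "5b"}


def check_policy(policy):
    """Raise ValueError if a vHBA has the wrong target roles or the wrong port."""
    for vhba, targets in policy.items():
        if [t.role for t in targets] != ["primary", "secondary"]:
            raise ValueError(f"{vhba}: expected one primary then one secondary target")
        for target in targets:
            if target.port != PORT_FOR_VHBA[vhba]:
                raise ValueError(f"{vhba}: target uses port {target.port}")
    return True


print(check_policy(BOOT_FCOE_OVM_A))
```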

To create boot policies for the Cisco UCS environment, follow these steps:

1.

In Cisco UCS Manager, click the Servers tab in the navigation pane.

2.

Choose Policies > root.

3.

Right-click Boot Policies and choose Create Boot Policy.

4.

Enter Boot-FCoE-OVM-A as the name of the boot policy.

5.

Enter a description for the boot policy. This field is optional.

6.

Uncheck the Reboot on Boot Order Change check box.

7.

Expand the Local Devices drop-down menu and choose Add CD-ROM.

8.

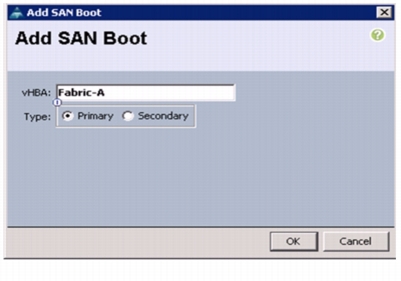

Expand the vHBAs drop-down menu and choose Add SAN Boot.

9.

In the Add SAN Boot dialog box, enter Fabric-A in the vHBA field.

10.

Select the Primary radio button as the SAN boot type.

11.

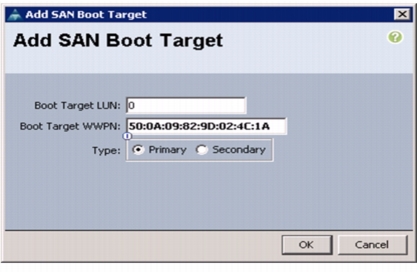

Click OK to add the SAN boot initiator. See Figure 41.

Figure 41 Adding SAN Boot Initiator for Fabric A

12.

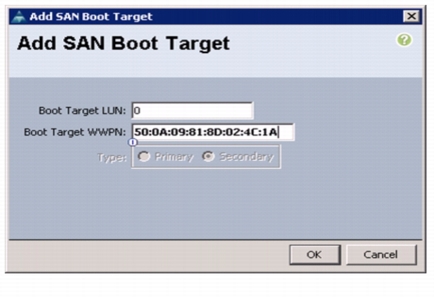

From the vHBA drop-down menu, choose Add SAN Boot Target.

13.

Keep 0 as the value for Boot Target LUN.

14.

Enter the WWPN for FCoE port 5a on storage controller 1.

Note

To obtain this information, log in to storage controller 1 and run the fcp show adapters command. Ensure you enter the port name and not the node name.

15.

Select the Primary radio button as the SAN boot target type.

16.

Click OK to add the SAN boot target. See Figure 42.

Figure 42 Adding SAN Boot Target for Fabric A

17.

From the vHBA drop-down menu, choose Add SAN Boot Target.

18.

Enter 0 as the value for Boot Target LUN.

19.

Enter the WWPN for FCoE port 5a on storage controller 2.

Note

To obtain this information, log in to storage controller 2 and run the fcp show adapters command. Ensure you enter the port name and not the node name.

20.

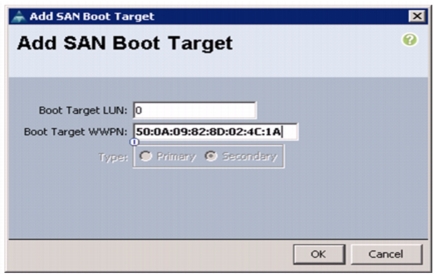

Click OK to add the SAN boot target. See Figure 43.

Figure 43 Adding Secondary SAN Boot Target for Fabric A

21.

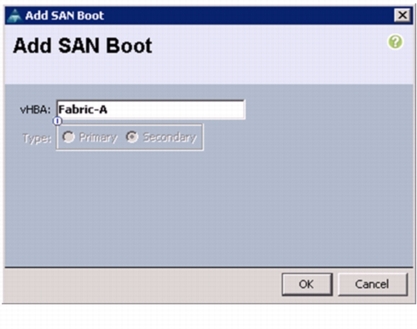

From the vHBA drop-down menu, choose Add SAN Boot.

22.

In the Add SAN Boot dialog box, enter Fabric-B in the vHBA box.

23.

The SAN boot type should automatically be set to Secondary, and the Type option should be greyed out and unavailable.

24.

Click OK to add the SAN boot initiator. See Figure 44.

Figure 44 Adding SAN Boot Initiator for Fabric B

25.

From the vHBA drop-down menu, choose Add SAN Boot Target.

26.

Keep 0 as the value for Boot Target LUN.

27.

Enter the WWPN for FCoE port 5b on storage controller 1.

Note

To obtain this information, log in to storage controller 1 and run the fcp show adapters command. Ensure you enter the port name and not the node name.

28.

Select the Primary radio button as the SAN boot target type.

29.

Click OK to add the SAN boot target. See Figure 45.

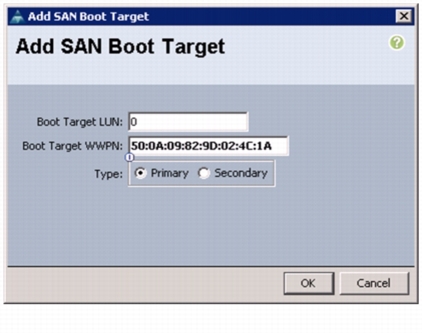

Figure 45 Adding Primary SAN Boot Target for Fabric B

30.

From the vHBA drop-down menu, choose Add SAN Boot Target.

31.

Enter 0 as the value for Boot Target LUN.

32.

Enter the WWPN for FCoE port 5b on storage controller 2.

Note

To obtain this information, log in to storage controller 2 and run the fcp show adapters command. Ensure you enter the port name and not the node name.

33.

Click OK to add the SAN boot target. See Figure 46.

Figure 46 Adding Secondary SAN Boot Target

34.

Click OK, and then OK again to create the boot policy.

35.

Right-click Boot Policies, and choose Create Boot Policy.

36.

Enter Boot-FCoE-OVM-B as the name of the boot policy.

37.

Enter a description of the boot policy. This field is optional.

38.

Uncheck the Reboot on Boot Order Change check box.

39.

From the Local Devices drop-down menu choose Add CD-ROM.

40.

From the vHBA drop-down menu choose Add SAN Boot.

41.

In the Add SAN Boot dialog box, enter Fabric-B in the vHBA box.

42.

Select the Primary radio button as the SAN boot type.

43.

Click OK to add the SAN boot initiator. See Figure 47.

Figure 47 Adding SAN Boot Initiator for Fabric B

44.

From the vHBA drop-down menu, choose Add SAN Boot Target.

45.

Enter 0 as the value for Boot Target LUN.

46.

Enter the WWPN for FCoE port 5b on storage controller 1.

Note

To obtain this information, log in to storage controller 1 and run the fcp show adapters command. Ensure you enter the port name and not the node name.

47.

Select the Primary radio button as the SAN boot target type.

48.

Click OK to add the SAN boot target. See Figure 48.

Figure 48 Adding Primary SAN Boot Target for Fabric B

49.

From the vHBA drop-down menu, choose Add SAN Boot Target.

50.

Enter 0 as the value for Boot Target LUN.

51.

Enter the WWPN for FCoE port 5b on storage controller 2.

Note

To obtain this information, log in to storage controller 2 and run the fcp show adapters command. Ensure you enter the port name and not the node name.

52.

Click OK to add the SAN boot target. See Figure 49.

Figure 49 Adding Secondary SAN Boot Target for Fabric B

53.

From the vHBA menu, choose Add SAN Boot.

54.

In the Add SAN Boot dialog box, enter Fabric-A in the vHBA box.

55.

The SAN boot type should automatically be set to Secondary, and the Type option should be greyed out and unavailable.

56.

Click OK to add the SAN boot initiator. See Figure 50.

Figure 50 Adding SAN Boot for Fabric A

57.

From the vHBA menu, choose Add SAN Boot Target.

58.

Enter 0 as the value for Boot Target LUN.

59.

Enter the WWPN for FCoE port 5a on storage controller 1.

Note

To obtain this information, log in to storage controller 1 and run the fcp show adapters command. Ensure you enter the port name and not the node name.

60.

Select the Primary radio button as the SAN boot target type.

61.

Click OK to add the SAN boot target. See Figure 51.

Figure 51 Adding Primary SAN Boot Target for Fabric A

62.

From the vHBA drop-down menu, choose Add SAN Boot Target.

63.

Enter 0 as the value for Boot Target LUN.

64.

Enter the WWPN for FCoE port 5a on storage controller 2.

Note

To obtain this information, log in to storage controller 2 and run the fcp show adapters command. Ensure you enter the port name and not the node name.

65.

Click OK to add the SAN boot target. See Figure 52.

Figure 52 Adding Secondary SAN Boot Target for Fabric A

66.

Click OK, and then click OK again to create the boot policy.
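Several of the steps above require entering a WWPN by hand. As a minimal sketch, and not part of the validated procedure, the following Python helper checks that a string has the usual form of eight colon-separated hexadecimal byte pairs before it is typed into the boot policy. The function name and the sample values are hypothetical, and the check is purely syntactic: it cannot tell a port name from a node name, so the output of fcp show adapters still has to be read carefully.

```python
import re

# Eight colon-separated hexadecimal byte pairs, e.g. 50:0a:09:81:00:00:00:01.
# Hypothetical helper: a syntax check only, it cannot distinguish a WWPN
# (port name) from a WWNN (node name).
_WWN_PATTERN = re.compile(r"^[0-9a-fA-F]{2}(:[0-9a-fA-F]{2}){7}$")


def is_well_formed_wwn(value: str) -> bool:
    """Return True if value looks like a colon-separated 64-bit WWN."""
    return bool(_WWN_PATTERN.match(value.strip()))


if __name__ == "__main__":
    # Made-up sample values, for illustration only.
    for candidate in ("50:0a:09:81:00:00:00:01", "50:0a:09:81:00:00:00"):
        print(candidate, is_well_formed_wwn(candidate))
```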

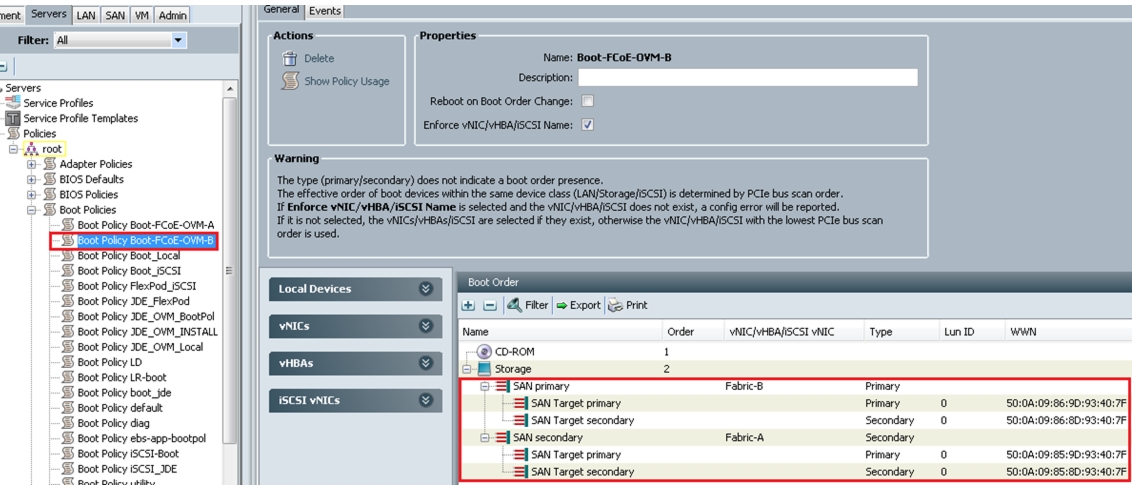

After creating the FCoE boot policies for Fabric A and Fabric B, you can view the boot order in the UCS Manager GUI. To view the boot order, navigate to Servers > Policies > Boot Policies. Select Boot Policy Boot-FCoE-OVM-A to view the boot order for Fabric A in the right pane of the UCS Manager. Similarly, select Boot Policy Boot-FCoE-OVM-B to view the boot order for Fabric B in the right pane of the UCS Manager. Figure 53 and Figure 54 show the boot policies for Fabric A and Fabric B respectively in the UCS Manager.

Figure 53 Boot Policy for Fabric A

Figure 54 Boot Policy for Fabric B
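The two boot policies mirror each other: each lists the same four storage paths and differs only in which fabric is tried first. The sketch below records that layout as plain Python data, taken from the steps in this section, so the symmetry can be checked at a glance. It does not call any Cisco UCS Manager API; the variable and function names are hypothetical.

```python
# Layout of the two boot policies as described in the steps of this section.
# Plain data only; this does not call any Cisco UCS Manager API.
BOOT_POLICIES = {
    "Boot-FCoE-OVM-A": [
        # (vHBA, SAN boot type, [(target type, storage controller, FCoE port)])
        ("Fabric-A", "primary", [("primary", 1, "5a"), ("secondary", 2, "5a")]),
        ("Fabric-B", "secondary", [("primary", 1, "5b"), ("secondary", 2, "5b")]),
    ],
    "Boot-FCoE-OVM-B": [
        ("Fabric-B", "primary", [("primary", 1, "5b"), ("secondary", 2, "5b")]),
        ("Fabric-A", "secondary", [("primary", 1, "5a"), ("secondary", 2, "5a")]),
    ],
}


def boot_paths(policy_name):
    """List (vHBA, controller, port) tuples in the order the policy lists them."""
    paths = []
    for vhba, _boot_type, targets in BOOT_POLICIES[policy_name]:
        for _target_type, controller, port in targets:
            paths.append((vhba, controller, port))
    return paths


if __name__ == "__main__":
    for name in BOOT_POLICIES:
        print(name)
        for vhba, controller, port in boot_paths(name):
            print(f"  {vhba} -> storage controller {controller}, FCoE port {port}")
```

Both policies cover the same set of paths, so a blade can still reach its boot LUN through the other fabric if the first one is unavailable.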

Service Profile creation and Association to UCS Blades

Service profile templates enable policy-based server management, which helps ensure consistent server resource provisioning for predefined workload needs.

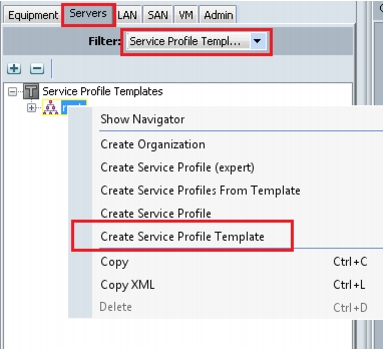

Create Service Profile Template

To create a service profile template, follow these steps:

1.

Log in to Cisco UCS Manager.

2.

Click the Servers tab in the navigation pane.

3.

Choose Servers > Service Profile Templates > root.

4.

Right-click on root and choose Create Service Profile Template.

Figure 55 Create Service Profile Template

5.

Enter the template name and select the UUID pool that was created earlier. See Figure 56.

6.

Click Next.

Figure 56 Creating Service Profile Template - Identify

7.

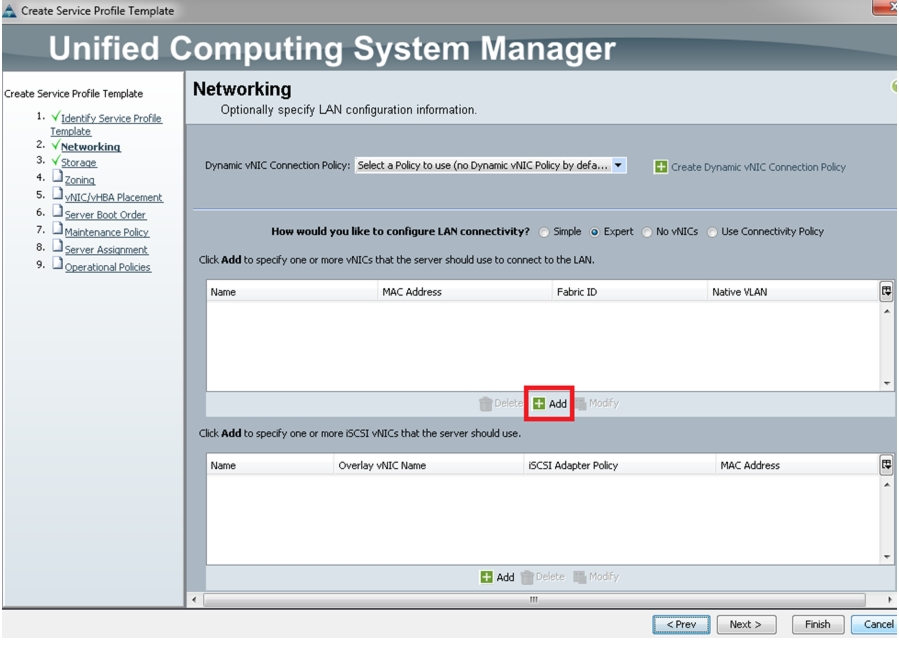

In the Networking window, select the Dynamic vNIC that was created earlier and move on to the next window. See Figure 57.

Figure 57 Creating Service Profile Template - Networking

8.

On the Networking page, create vNICs, one on each fabric, and associate them with the VLAN policies created earlier.

9.

Select Expert Mode, and click Add to add one or more vNICs that the server should use to connect to the LAN.

10.

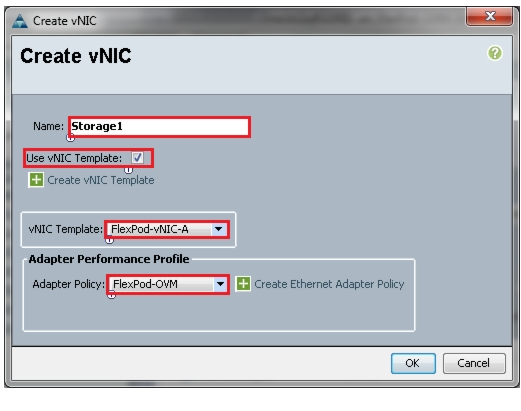

In the Create vNIC page, select Use vNIC template and adapter policy as Flexpod-OVM.

11.

Enter vNIC Storage1 as the vNIC name.

Figure 58 Creating Service Profile Template - Create vNIC

12.

Similarly, create vNIC Storage2 and the vNICs for Public, Private, and Live Migration on sides A and B, with the appropriate vNIC template mapping for each vNIC.

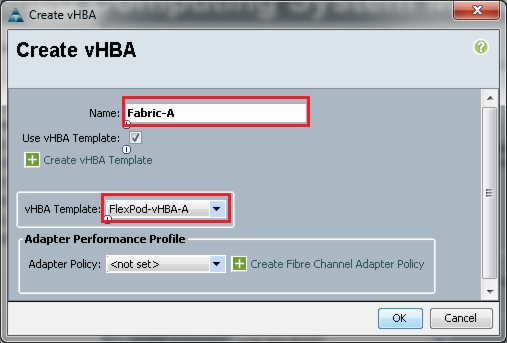

Once vNICs are created, we need to create vHBAs.

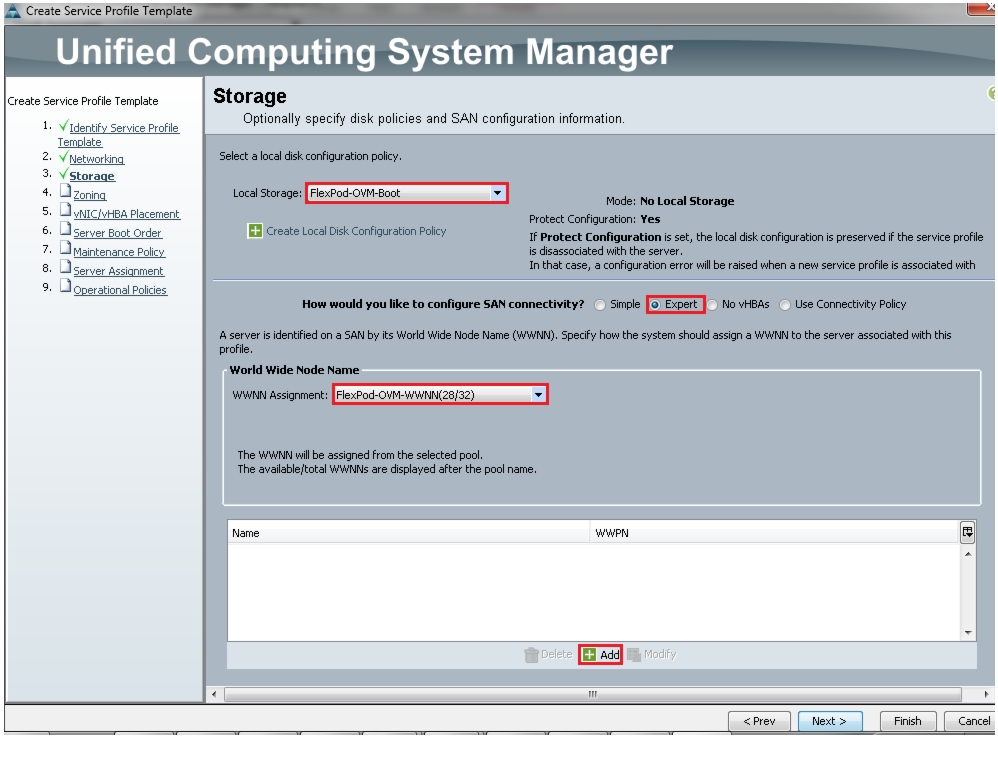

On the Storage page, select Expert Mode, choose the WWNN pool created earlier, and click Add to create vHBAs.

Figure 59 Creating Service Profile Template - Storage

We have created two vHBAs that are shown below.

•

Fabric-A using template Flexpod-vHBA-A.

•

Fabric-B using template Flexpod-vHBA-B.

Figure 60 Creating Service Profile Template - Create vHBA

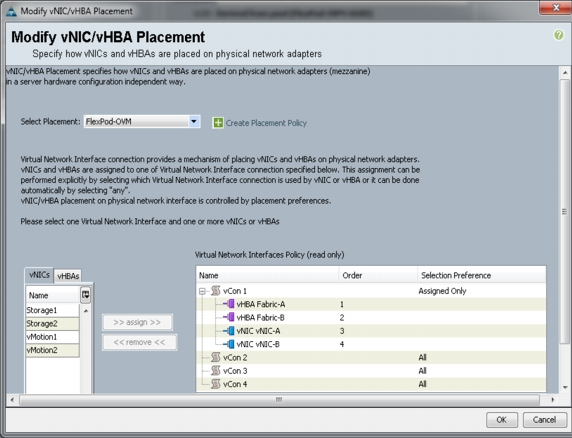

For this Flexpod configuration, we used Nexus 5548UP for zoning, so we will skip the zoning section and use default vNIC/vHBA placement.

Figure 61 Creating Service Profile Template - vNIC/vHBA Placement

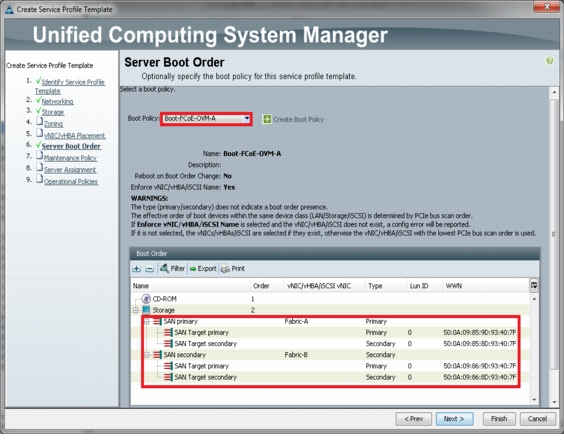

Server Boot Policy

In the Server Boot Order page, choose the Boot Policy we created for SAN boot and click Next.

Figure 62 Configure Server Boot Policy

The Maintenance and Assignment policies are kept at their defaults in our configuration. However, they may vary from site to site depending on your workloads, best practices, and policies.

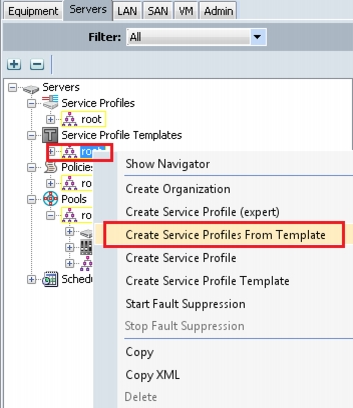

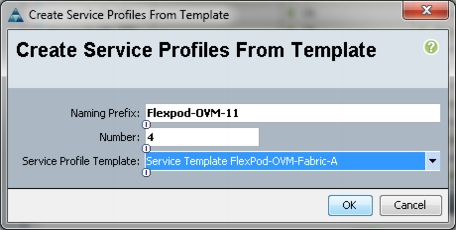

Create Service Profiles from Service Profile Templates

To create service profiles from service profile templates, follow these steps:

1.

Log in to Cisco UCS Manager.

2.

Click the Servers tab in the navigation pane.

3.

Choose Servers > Service Profile Templates.

4.

Right-click the template and choose Create Service Profiles from Template. See Figure 63.

Figure 63 Create Service Profile from Service Profile Template

Figure 64 Create Service Profile from Service Profile Template

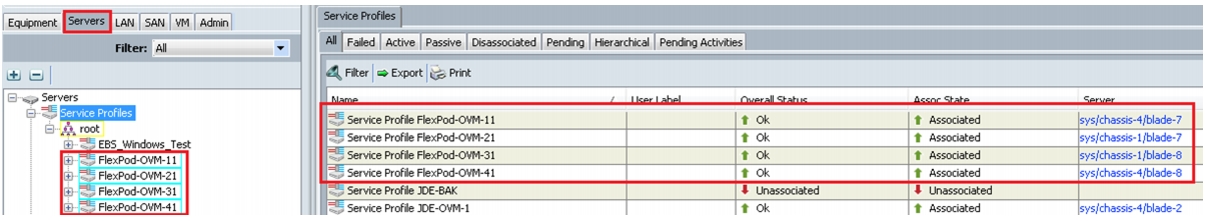

We have created four service profiles:

•

Flexpod-OVM-11

•

Flexpod-OVM-21

•

Flexpod-OVM-31

•

Flexpod-OVM-41

Associating Service Profile to Servers

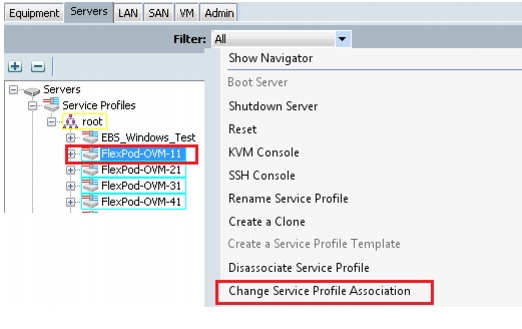

Now that the service profiles are created, we are ready to associate them with the servers. To associate service profiles with servers, follow these steps:

1.

Log in to Cisco UCS Manager.

2.

Click the Servers tab in the navigation pane.

3.

Under the Servers tab, select the desired service profile, and select Change Service Profile Association. See Figure 65.

Figure 65 Associating Service Profile to UCS Blade Servers

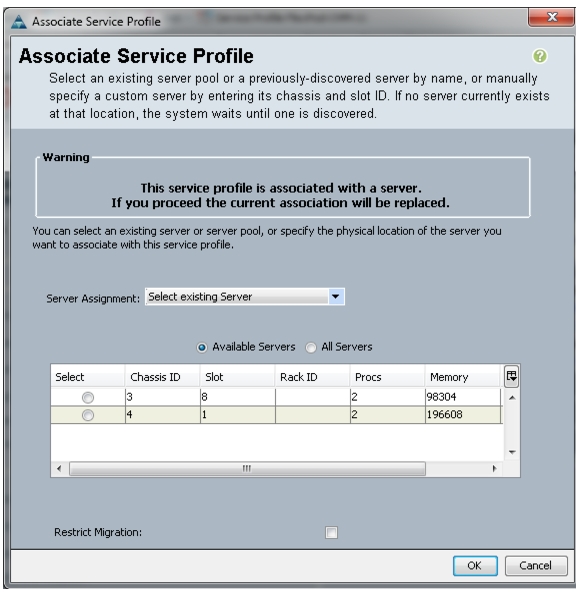

4.

On the Change Service Profile Association page, from the Server Assignment drop-down list, select the existing server that you would like to assign.

5.

Click OK. See Figure 66.

Figure 66 Changing Service Profile Association

6.

Repeat the same steps to associate the remaining three service profiles with their respective blade servers. Ensure that all the service profiles are associated as shown in Figure 67.

Figure 67 Associated Service Profiles Summary

Nexus 5548UP Configuration for FCoE Boot and NFS Data Access

Enable Licenses

Cisco Nexus A

To license the Cisco Nexus A switch on <<var_nexus_A_hostname>>, follow these steps:

1.

Log in as admin.

2.

Run the following commands:

config t

feature fcoe

feature npiv

feature lacp

feature vpc

Cisco Nexus B

To license the Cisco Nexus B switch on <<var_nexus_B_hostname>>, follow these steps:

1.

Log in as admin.

2.

Run the following commands:

config t

feature fcoe

feature npiv

feature lacp

feature vpc

Set Global Configurations

Cisco Nexus 5548 A and Cisco Nexus 5548 B

To set global configurations and jumbo frames in QoS, follow these steps on both switches:

1.

Log in as the admin user.

2.

Run the following commands:

conf t

spanning-tree port type network default

spanning-tree port type edge bpduguard default

port-channel load-balance ethernet source-dest-port

policy-map type network-qos jumbo

class type network-qos class-default

mtu 9216

exit

class type network-qos class-fcoe

pause no-drop

mtu 2158

exit

exit

system qos

service-policy type network-qos jumbo

exit

copy run start

Create VLANs

Cisco Nexus 5548 A and Cisco Nexus 5548 B

To create the necessary virtual local area networks (VLANs), follow these steps on both switches:

1.

Log in as the admin user.

2.

Run the following commands:

conf t

vlan 760

name Public-VLAN

exit

vlan 191

name Private-VLAN

exit

vlan 120

name Storage1-VLAN

exit

vlan 121

name Storage2-VLAN

exit

vlan 761

name LM-VLAN

exit
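Because the same VLANs must be defined identically on both switches, it can help to keep the VLAN table in one place and render the commands from it. The following Python sketch does that for the five VLANs listed above. It only prints text to paste into a switch session and does not connect to any device; the names VLANS and render_vlan_commands are hypothetical.

```python
# VLAN IDs and names exactly as listed in the commands above.
VLANS = {
    760: "Public-VLAN",
    191: "Private-VLAN",
    120: "Storage1-VLAN",
    121: "Storage2-VLAN",
    761: "LM-VLAN",
}


def render_vlan_commands(vlans):
    """Return the configuration lines that create and name each VLAN."""
    lines = ["conf t"]
    for vlan_id, name in vlans.items():
        lines += [f"vlan {vlan_id}", f"name {name}", "exit"]
    return lines


if __name__ == "__main__":
    # Print one command per line, ready to paste on either switch.
    print("\n".join(render_vlan_commands(VLANS)))
```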

Add Individual Port Descriptions for Troubleshooting

Cisco Nexus 5548 A

To add individual port descriptions for troubleshooting activity and verification for switch A, follow these steps:

1.

Log in as the admin user.

2.

Run the following commands:

conf t

interface Eth1/1

description Nexus5k-B-Cluster-Interconnect

exit

interface Eth1/2

description Nexus5k-B-Cluster-Interconnect

exit

interface Eth1/3

description NetApp_Storage1:e5a

exit

interface Eth1/4

description NetApp_Storage2:e5a

exit

interface Eth1/5

description Fabric_Interconnect_A:1/31

exit

interface Eth1/6

description Fabric_Interconnect_B:1/31

exit

interface eth1/17

description FCoE_FI_A:1/29

exit

interface eth1/18

description FCoE_FI_A:1/30

exit
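The port descriptions above double as a cabling record for switch A. As a small illustration, the Python sketch below keeps the same interface-to-description mapping in a dictionary and looks up the ports attached to a given peer, which is the kind of question that comes up during troubleshooting. The mapping is copied from the commands above with interface names normalized to the Eth1/N form; the function name is hypothetical, and nothing here talks to the switch.

```python
# Switch A port descriptions copied from the commands above.
SWITCH_A_PORTS = {
    "Eth1/1": "Nexus5k-B-Cluster-Interconnect",
    "Eth1/2": "Nexus5k-B-Cluster-Interconnect",
    "Eth1/3": "NetApp_Storage1:e5a",
    "Eth1/4": "NetApp_Storage2:e5a",
    "Eth1/5": "Fabric_Interconnect_A:1/31",
    "Eth1/6": "Fabric_Interconnect_B:1/31",
    "Eth1/17": "FCoE_FI_A:1/29",
    "Eth1/18": "FCoE_FI_A:1/30",
}


def ports_matching(port_map, keyword):
    """Return interfaces whose description contains keyword (case-insensitive)."""
    needle = keyword.lower()
    return [port for port, desc in port_map.items() if needle in desc.lower()]


if __name__ == "__main__":
    # Which switch A ports face the NetApp storage controllers?
    print(ports_matching(SWITCH_A_PORTS, "netapp"))
```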

Cisco Nexus 5548 B

To add individual port descriptions for troubleshooting activity and verification for switch B, follow these steps:

1.

Log in as the admin user.

2.

Run the following commands:

conf t

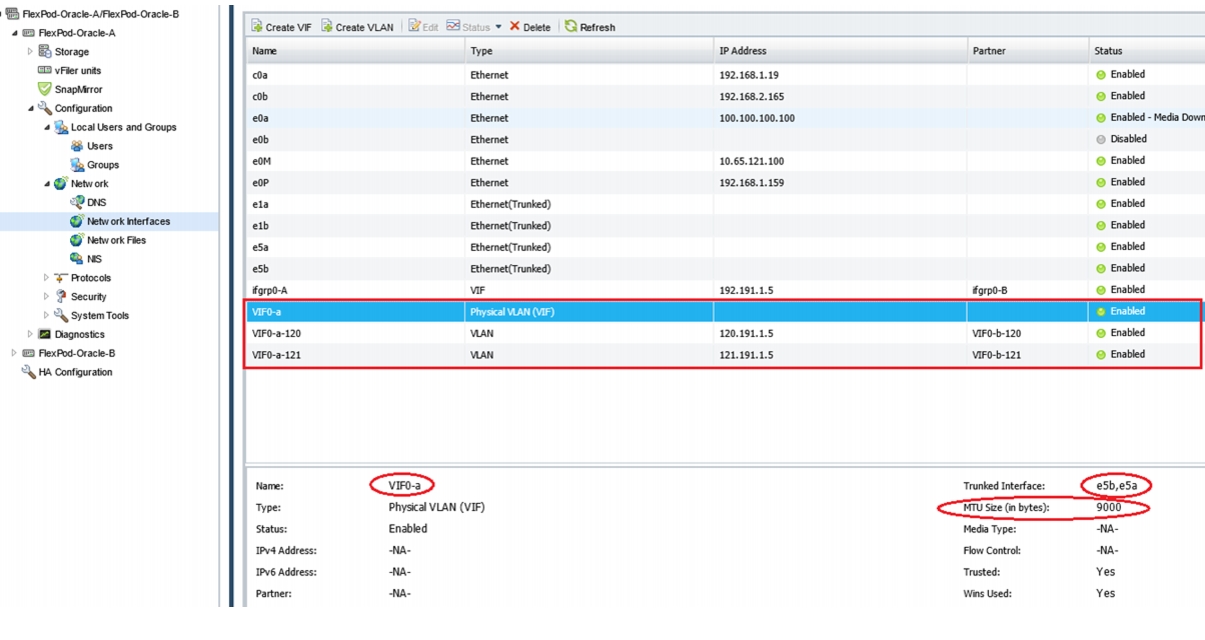

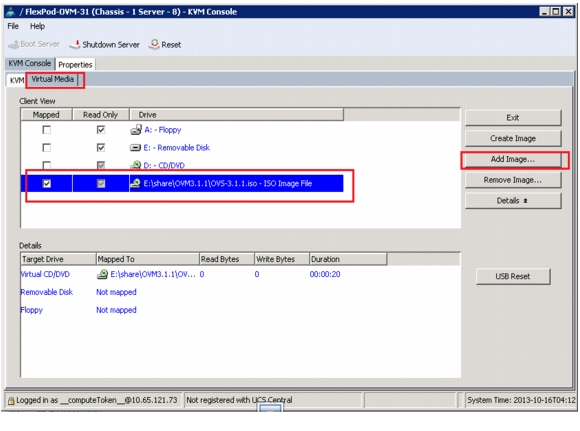

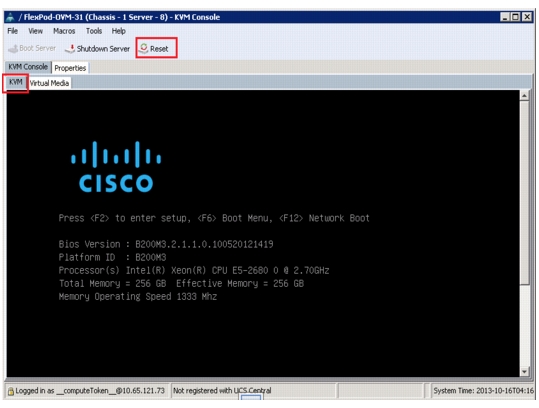

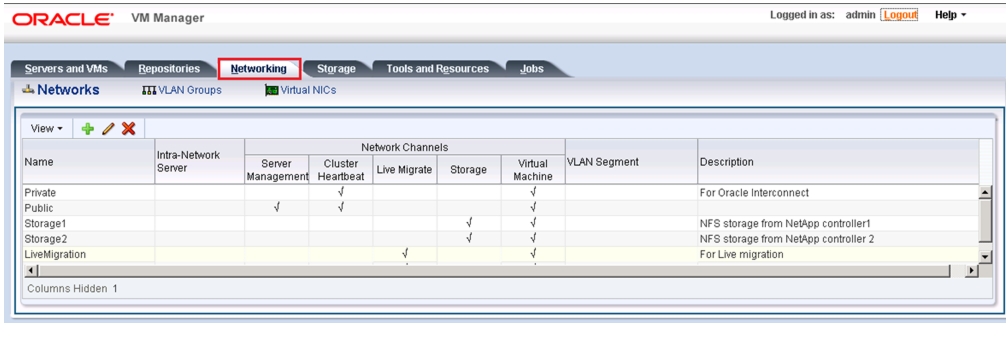

interface Eth1/1