Table Of Contents

About Cisco Validated Design (CVD) Program

FlexPod for VMware Deployment Model

FlexPod for VMware Architecture

FlexPod for VMware Configuration Deployment

NetApp FAS3210A Deployment Procedure—Part I

Cisco Nexus 5548 Deployment Procedure—Part I

Cisco Unified Computing System Deployment Procedure

Cisco Nexus 5548 Deployment Procedure—Part II

NetApp FAS3210A Deployment Procedure—Part II

VMware ESXi Deployment Procedure

VMware vCenter Server Deployment Procedure

Cisco Nexus 1010 and 1000V Deployment Procedure

NetApp Virtual Storage Console Deployment Procedure

NetApp Operations Manager Deployment Procedure

Appendix—FlexPod for VMware Configuration Information

Global Configuration Information

NetApp Configuration Information

Cisco Configuration Information

VMware Configuration Information

NetApp FAS3200 Sample Configuration

Filer Sample Interface Configuration

Sample Startup Information Configuration

Sample Initiator Group (igroup) Information

Sample List of Defined ipspaces and Interface Assignment

Sample vFiler Context Route Configuration

Sample vFiler Context Exported Directories and Files

Cisco Nexus 5548 Sample Running Configuration

Cisco Nexus 1010 Sample Running Configuration

Cisco Nexus 1000v Sample Running Configuration

Cisco Unified Computing System Configuration Extracts

Sample Chassis Discovery Policy Configuration

Create a Network Control Policy

Define QoS Policies and Jumbo Frames

Create Uplink Port-Channels to the Cisco Nexus 5548 Switches

Create Service Profile Templates

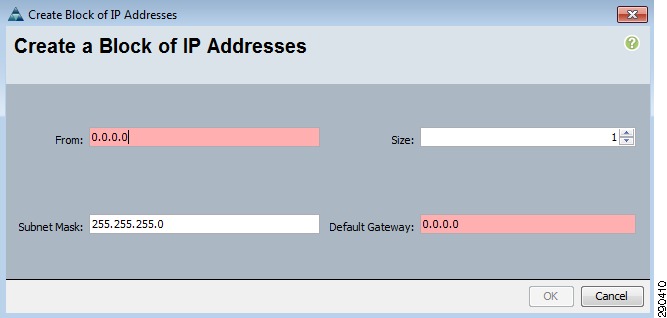

Add a Block of IP Addresses for KVM Access

FlexPod for VMware Deployment Model

Last Updated: February 24, 2011

Building Architectures to Solve Business Problems

About the Authors

Chris O'Brien, Solutions Architect, Systems Architecture and Strategy, Cisco Systems

Chris O'Brien is a Solutions Architect for data center technologies in Cisco's Systems Architecture and Strategy group. He is currently focused on data center design validation and application optimization. Previously, O'Brien was an application developer and has been working in the IT industry for more than 15 years.

John George, Reference Architect, Infrastructure and Cloud Enablement, NetApp

John George is a Reference Architect in NetApp's Infrastructure and Cloud Engineering group and is focused on developing, validating, and supporting cloud infrastructure solutions that include NetApp products. Before his current role, he supported and administered Nortel's worldwide training network and VPN infrastructure. John holds a Master's Degree in Computer Engineering from Clemson University.

Mike Flannery, Manager, High Velocity Technical Sales, NetApp

Mike Flannery leads the High Velocity Technical Sales group. Mike and his team focus on architecting, sizing, and validating NetApp storage configurations for customers in the Midsized Enterprise space. Mike has been with NetApp for 6 years. Before joining NetApp, he worked at Informix Software and IBM, where he focused on Database and Data Management solutions.

Mark Hayakawa, Technical Sales Architect, High Velocity Sales, NetApp

Mark Hayakawa is a Technical Sales Architect in the High Velocity Sales group. His primary duty is the creation, validation, and documentation of storage system configurations for the Mid Size Enterprise. Mark has been with NetApp for 10 years. In prior roles, he was a Technical Marketing Engineer supporting DB2, SAS, Lotus, SnapLock, and Storage Efficiency as well as a Field Technical Lead supporting archive and compliance.

Dustin Schoenbrun, Systems Architect, Infrastructure and Cloud Enablement, NetApp

Dustin Schoenbrun is a Systems Architect in NetApp's Infrastructure and Cloud Engineering team who tests, validates, and documents solutions based on NetApp and various other vendor technologies. Before working for NetApp, he worked for the University of New Hampshire InterOperability Laboratory (UNH-IOL) where he tested next generation storage devices and developed test tools for FCoE devices.

Mike Zimmerman, Reference Architect, Infrastructure and Cloud Enablement, NetApp

Mike Zimmerman is a Reference Architect in NetApp's Infrastructure and Cloud Engineering team. He focuses on the implementation, compatibility, and testing of various vendor technologies to develop innovative end-to-end cloud solutions for customers. Zimmerman started his career at NetApp as an architect and administrator of Kilo Client, NetApp's internal cloud infrastructure, where he gained extensive knowledge and experience building end-to-end shared architectures based upon server, network, and storage virtualization.

Wen Yu, Senior Infrastructure Technologist, VMware

Wen Yu is a Sr. Infrastructure Technologist at VMware, with a focus on partner enablement and evangelism of virtualization solutions. Wen has been with VMware for six years, four of which were spent providing engineering level escalation support for customers. Wen specializes in virtualization products for continuous availability, backup recovery, disaster recovery, desktop, and vCloud. Wen Yu is VMware, Red Hat, and ITIL certified.

Alan Crouch, Technical Architect, Enterprise Infrastructure Management Solutions, NetApp

Alan Crouch is a Technical Architect in the Enterprise Infrastructure Management Solutions team, based out of NetApp's Sunnyvale Corporate Headquarters. He is focused on developing integrated storage management and automated provisioning solutions utilizing NetApp and partner technologies.

Alan is a seasoned IT professional with a career that spans nineteen years, in which time he has developed systems for a wide variety of businesses. As an experienced systems engineer, Alan has designed, built, documented, and managed application delivery solutions for companies in several industries including healthcare, ecommerce, network infrastructure, marketing, and high technology.

Ganesh Kamath, Technical Architect, Enterprise Infrastructure Management Solutions, NetApp

Ganesh Kamath is a Technical Architect in NetApp's Enterprise Infrastructure Management Solutions team and is based in NetApp Bangalore. His focus is on storage infrastructure management solutions that include NetApp software as well as partner orchestration solutions. Ganesh's diverse experiences at NetApp include working as a Technical Marketing Engineer as well as a member of NetApp's Rapid Response Engineering team qualifying specialized solutions for our most demanding customers.

About Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California.

Cisco and the Cisco Logo are trademarks of Cisco Systems, Inc. and/or its affiliates in the U.S. and other countries. A listing of Cisco's trademarks can be found at http://www.cisco.com/go/trademarks. Third party trademarks mentioned are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (1005R)

Any Internet Protocol (IP) addresses and phone numbers used in this document are not intended to be actual addresses and phone numbers. Any examples, command display output, network topology diagrams, and other figures included in the document are shown for illustrative purposes only. Any use of actual IP addresses or phone numbers in illustrative content is unintentional and coincidental.

© 2011 Cisco Systems, Inc. All rights reserved.

FlexPod for VMware Deployment Model

FlexPod for VMware Overview

Industry trends indicate a vast data center transformation toward shared infrastructures. Enterprise customers are moving away from silos of information toward shared infrastructures, then to virtualized environments, and eventually to the cloud, in order to increase agility and reduce costs.

FlexPod™ is a predesigned, base configuration that is built on the Cisco® Unified Computing System™ (UCS), Cisco Nexus® data center switches, NetApp® FAS storage components, and a range of software partners. FlexPod can scale up for greater performance and capacity or it can scale out for environments that need consistent, multiple deployments. FlexPod is a baseline configuration, but also has the flexibility to be sized and optimized to accommodate many different use cases.

Cisco, NetApp, and VMware® have developed FlexPod for VMware as a platform that can address current virtualization needs and simplify the evolution to an ITaaS infrastructure. FlexPod for VMware is built on the FlexPod infrastructure stack with added VMware components, including VMware vSphere™ and vCenter™, for virtualized application workloads.

FlexPod for VMware serves as a base infrastructure layer for a variety of IT solutions. A detailed study of six practical solutions deployed on FlexPod for VMware, including VDI with VMware View™ and Enhanced Secure Multi-tenancy, can be found in FlexPod for VMware Solutions Guide at: http://media.netapp.com/documents/tr-3884.pdf.

NetApp partners can access the FlexPod Implementation Guide at: https://fieldportal.netapp.com/viewcontent.asp?qv=1&docid=30428.

Audience

This document describes the basic architecture of FlexPod for VMware as well as the general procedures for deploying a base FlexPod for VMware configuration. The intended audience of this document includes, but is not limited to, sales engineers, field consultants, professional services, IT managers, partner engineering, and customers who want to deploy the core FlexPod for VMware architecture.

Note

For more detailed deployment information, Cisco, NetApp, and VMware partners should contact their local account teams or visit http://www.netapp.com.

FlexPod for VMware Architecture

As the name suggests, the FlexPod architecture is highly modular, or "pod"-like. While each customer's FlexPod may vary in its exact configuration, once a FlexPod unit is built it can easily be scaled as requirements and demand change. This includes scaling both up (adding resources within a FlexPod unit) and out (adding FlexPod units).

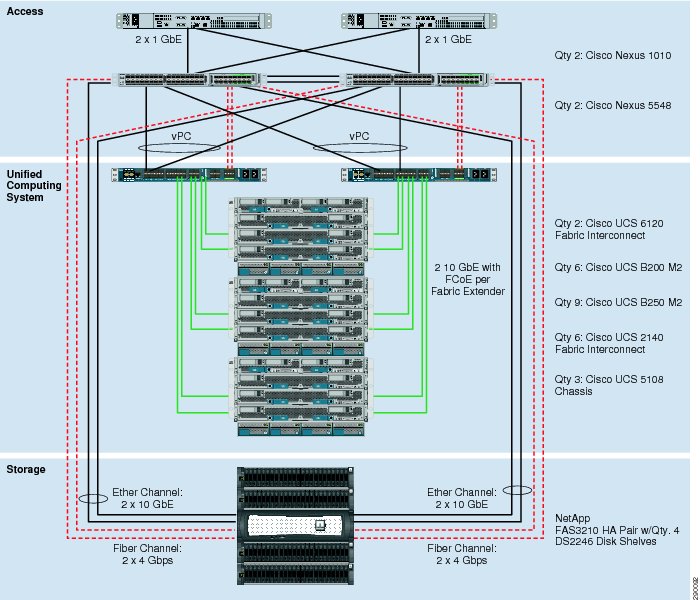

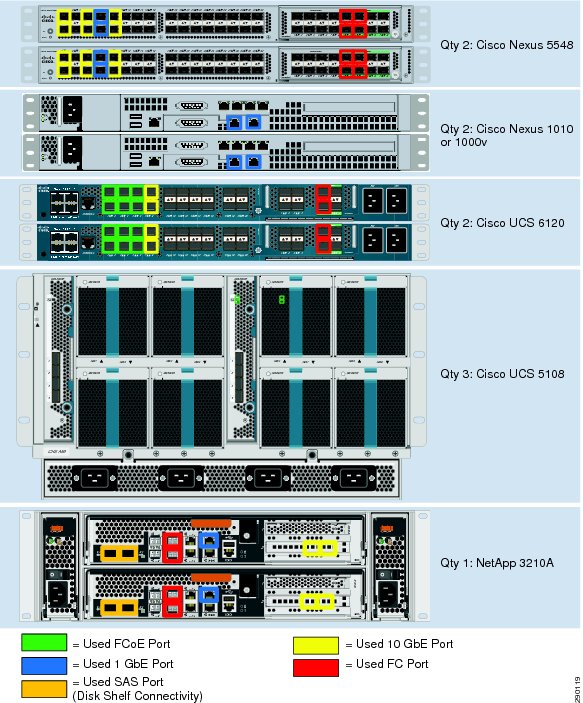

Specifically, FlexPod is a defined set of hardware and software that serves as an integrated building block for all virtualization solutions. FlexPod for VMware includes NetApp storage, Cisco networking, the Cisco Unified Computing System (Cisco UCS), and VMware virtualization software in a single package in which the computing and storage fit in one data center rack with the networking residing in a separate rack. Due to port density the networking components can accommodate multiple FlexPod for VMware configurations. Figure 1 shows the FlexPod for VMware components.

Figure 1 FlexPod for VMware Components

The default hardware involved includes two Cisco Nexus 5548 switches, two Cisco UCS 6120 fabric interconnects, and three chassis of Cisco UCS blades with two fabric extenders per chassis. Storage is provided by a NetApp FAS3210CC (HA-configuration within a single chassis) with accompanying disk shelves. All systems and fabric links feature redundancy, providing for end-to-end high availability. For server virtualization, the deployment includes VMware vSphere Enterprise Plus with vCenter Standard. While this is the default base design, each of the components can be scaled flexibly to support the specific business requirements in question. For example, more (or different) blades and chassis could be deployed to increase compute capacity, additional disk shelves could be deployed to improve IO capacity and throughput, or special hardware or software features could be added to introduce new features (such as NetApp FlashCache for dedupe-aware caching or VMware View for VDI deployments).

The remainder of this document will guide the reader through the steps necessary to deploy the base architecture as shown above. This includes everything from physical cabling to compute and storage configuration to configuring virtualization with VMware vSphere.

FlexPod for VMware Configuration Deployment

The following section provides detailed information on configuring all aspects of a base FlexPod for VMware environment. As the FlexPod for VMware architecture is flexible, the exact configuration detailed below may vary from customer implementations depending on specific requirements. While customer implementations may deviate from the information that follows, the practices, features, and configurations below should still be used as a reference to building a customized FlexPod for VMware architecture.

Cabling Information

The following information is provided as a reference for cabling the physical equipment in a FlexPod for VMware environment. The tables include both local and remote device and port locations in order to simplify cabling requirements.

Note

The following tables are for the prescribed and supported configuration of the FAS3210 running Data ONTAP 7.3.5. This configuration leverages the onboard FC storage target ports, a dual-port 10GbE add-on adapter, and the onboard SAS ports for disk shelf connectivity. For any modifications of this prescribed architecture, consult the currently available Interoperability Matrix Tool (IMT): http://now.netapp.com/matrix.

Note

The FlexPod for VMware deployment guide assumes that out-of-band management ports are plugged into existing management infrastructure at the deployment site.

Note

Cable the equipment exactly as detailed below. The deployment procedures that follow reference specific port locations, so any deviation in cabling requires corresponding changes to those procedures.

Note

It is possible to order a FAS3210A system in a different configuration than what is prescribed below. Make sure that your configuration matches what is described in the tables and diagrams below before starting.

Table 1 FlexPod for VMware Ethernet Cabling Information

| Local Device | Local Port | Connection | Remote Device | Remote Port |
|---|---|---|---|---|
| Cisco Nexus 5548 A¹ | Eth1/1 | 10GbE | NetApp Controller A | e2a |
| | Eth1/2 | 10GbE | NetApp Controller B | e2a |
| | Eth1/5 | 10GbE | Cisco Nexus 5548 B | Eth1/5 |
| | Eth1/6 | 10GbE | Cisco Nexus 5548 B | Eth1/6 |
| | Eth1/7 | 1GbE | Cisco Nexus 1010 A | Eth1 |
| | Eth1/8 | 1GbE | Cisco Nexus 1010 B | Eth1 |
| | Eth1/9 | 10GbE | Cisco UCS Fabric Interconnect A | Eth1/7 |
| | Eth1/10 | 10GbE | Cisco UCS Fabric Interconnect B | Eth1/7 |
| | MGMT0 | 100MbE | 100MbE Management Switch | Any |
| Cisco Nexus 5548 B¹ | Eth1/1 | 10GbE | NetApp Controller A | e2b |
| | Eth1/2 | 10GbE | NetApp Controller B | e2b |
| | Eth1/5 | 10GbE | Cisco Nexus 5548 A | Eth1/5 |
| | Eth1/6 | 10GbE | Cisco Nexus 5548 A | Eth1/6 |
| | Eth1/7 | 1GbE | Cisco Nexus 1010 A | Eth2 |
| | Eth1/8 | 1GbE | Cisco Nexus 1010 B | Eth2 |
| | Eth1/9 | 10GbE | Cisco UCS Fabric Interconnect A | Eth1/8 |
| | Eth1/10 | 10GbE | Cisco UCS Fabric Interconnect B | Eth1/8 |
| | MGMT0 | 100MbE | 100MbE Management Switch | Any |
| NetApp Controller A | e0M | 100MbE | 100MbE Management Switch | Any |
| | e0P | 1GbE | SAS shelves | ACP port |
| | e2a | 10GbE | Nexus 5548 A | Eth1/1 |
| | e2b | 10GbE | Nexus 5548 B | Eth1/1 |
| NetApp Controller B | e0M | 100MbE | 100MbE Management Switch | Any |
| | e0P | 1GbE | SAS shelves | ACP port |
| | e2a | 10GbE | Nexus 5548 A | Eth1/2 |
| | e2b | 10GbE | Nexus 5548 B | Eth1/2 |
| Cisco UCS Fabric Interconnect A | Eth1/7 | 10GbE | Nexus 5548 A | Eth1/9 |
| | Eth1/8 | 10GbE | Nexus 5548 B | Eth1/9 |
| | Eth1/1 | 10GbE/FCoE | Chassis 1 FEX A | port 1 |
| | Eth1/2 | 10GbE/FCoE | Chassis 1 FEX A | port 2 |
| | Eth1/3 | 10GbE/FCoE | Chassis 2 FEX A | port 1 |
| | Eth1/4 | 10GbE/FCoE | Chassis 2 FEX A | port 2 |
| | Eth1/5 | 10GbE/FCoE | Chassis 3 FEX A | port 1 |
| | Eth1/6 | 10GbE/FCoE | Chassis 3 FEX A | port 2 |
| | MGMT0 | 100MbE | 100MbE Management Switch | Any |
| | L1 | 1GbE | UCS Fabric Interconnect B | L1 |
| | L2 | 1GbE | UCS Fabric Interconnect B | L2 |
| Cisco UCS Fabric Interconnect B | Eth1/7 | 10GbE | Nexus 5548 A | Eth1/10 |
| | Eth1/8 | 10GbE | Nexus 5548 B | Eth1/10 |
| | Eth1/1 | 10GbE/FCoE | Chassis 1 FEX B | port 1 |
| | Eth1/2 | 10GbE/FCoE | Chassis 1 FEX B | port 2 |
| | Eth1/3 | 10GbE/FCoE | Chassis 2 FEX B | port 1 |
| | Eth1/4 | 10GbE/FCoE | Chassis 2 FEX B | port 2 |
| | Eth1/5 | 10GbE/FCoE | Chassis 3 FEX B | port 1 |
| | Eth1/6 | 10GbE/FCoE | Chassis 3 FEX B | port 2 |
| | MGMT0 | 100MbE | 100MbE Management Switch | Any |
| | L1 | 1GbE | UCS Fabric Interconnect A | L1 |
| | L2 | 1GbE | UCS Fabric Interconnect A | L2 |
| Nexus 1010 A | Eth1 | 1GbE | Nexus 5548 A | Eth1/7 |
| | Eth2 | 1GbE | Nexus 5548 B | Eth1/7 |
| Nexus 1010 B | Eth1 | 1GbE | Nexus 5548 A | Eth1/8 |
| | Eth2 | 1GbE | Nexus 5548 B | Eth1/8 |

¹ The Cisco Nexus 1010 virtual appliances require the use of two 1GbE Copper SFP+'s (GLC-T=).

Figure 2 FlexPod Cabling
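Because Table 1 records each inter-device link from both ends, a cabling plan transcribed from it can be checked for consistency before any cable is run. The following is a minimal Python sketch of such a check, not part of the validated procedure. The short device labels (`N5K-A`, `FAS-A`, `FI-A`, `N1010-A`, and so on) are shorthand invented for this example, and the fabric interconnect A/B assignment is inferred from the remote ports listed in the table. Management, ACP, and FEX links are omitted because the table lists them from one end only.

```python
# (local device, local port) -> (remote device, remote port), from Table 1.
# Device labels are hypothetical shorthand used only in this sketch.
LINKS = {
    ("N5K-A", "Eth1/1"): ("FAS-A", "e2a"),
    ("N5K-A", "Eth1/2"): ("FAS-B", "e2a"),
    ("N5K-A", "Eth1/5"): ("N5K-B", "Eth1/5"),
    ("N5K-A", "Eth1/6"): ("N5K-B", "Eth1/6"),
    ("N5K-A", "Eth1/7"): ("N1010-A", "Eth1"),
    ("N5K-A", "Eth1/8"): ("N1010-B", "Eth1"),
    ("N5K-A", "Eth1/9"): ("FI-A", "Eth1/7"),
    ("N5K-A", "Eth1/10"): ("FI-B", "Eth1/7"),
    ("N5K-B", "Eth1/1"): ("FAS-A", "e2b"),
    ("N5K-B", "Eth1/2"): ("FAS-B", "e2b"),
    ("N5K-B", "Eth1/5"): ("N5K-A", "Eth1/5"),
    ("N5K-B", "Eth1/6"): ("N5K-A", "Eth1/6"),
    ("N5K-B", "Eth1/7"): ("N1010-A", "Eth2"),
    ("N5K-B", "Eth1/8"): ("N1010-B", "Eth2"),
    ("N5K-B", "Eth1/9"): ("FI-A", "Eth1/8"),
    ("N5K-B", "Eth1/10"): ("FI-B", "Eth1/8"),
    ("FAS-A", "e2a"): ("N5K-A", "Eth1/1"),
    ("FAS-A", "e2b"): ("N5K-B", "Eth1/1"),
    ("FAS-B", "e2a"): ("N5K-A", "Eth1/2"),
    ("FAS-B", "e2b"): ("N5K-B", "Eth1/2"),
    ("FI-A", "Eth1/7"): ("N5K-A", "Eth1/9"),
    ("FI-A", "Eth1/8"): ("N5K-B", "Eth1/9"),
    ("FI-A", "L1"): ("FI-B", "L1"),
    ("FI-A", "L2"): ("FI-B", "L2"),
    ("FI-B", "Eth1/7"): ("N5K-A", "Eth1/10"),
    ("FI-B", "Eth1/8"): ("N5K-B", "Eth1/10"),
    ("FI-B", "L1"): ("FI-A", "L1"),
    ("FI-B", "L2"): ("FI-A", "L2"),
    ("N1010-A", "Eth1"): ("N5K-A", "Eth1/7"),
    ("N1010-A", "Eth2"): ("N5K-B", "Eth1/7"),
    ("N1010-B", "Eth1"): ("N5K-A", "Eth1/8"),
    ("N1010-B", "Eth2"): ("N5K-B", "Eth1/8"),
}


def find_asymmetric_links(links):
    """Return local endpoints whose remote end does not point back at them."""
    return sorted(
        local for local, remote in links.items() if links.get(remote) != local
    )


if __name__ == "__main__":
    problems = find_asymmetric_links(LINKS)
    print("cabling plan is symmetric" if not problems else problems)
```

An empty result means every listed link is described identically from both ends. Editing a single endpoint in a site-specific copy of the table would cause that link to be reported from both sides.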

NetApp FAS3210A Deployment Procedure—Part I

This section describes the procedures for configuring the NetApp FAS3210A for use in a FlexPod for VMware environment. This section has the following objectives:

• Establishment of a functional Data ONTAP 7.3.5 failover cluster with proper licensing

• Creation of data aggregates

• Creation of flexible volumes

• Configuration of NFS exports

• Creation of infrastructure vFiler units

The following measures should be taken to meet these objectives:

• Assign the Controller Disk Ownership.

• Downgrade from Data ONTAP 8.0.1 to 7.3.5.

Note

This step is not necessary if Data ONTAP 7.3.5 is already installed on your storage controllers.

• Set up Data ONTAP 7.3.5.

• Install Data ONTAP to the Onboard Flash Storage.

• Install Required Licenses.

• Start FCP service and ensure proper FC port configuration.

• Enable Active-Active Configuration Between the two Storage Systems.

• Create the data aggregate "aggr1".

• Enable 802.1q VLAN trunking and add the NFS VLAN.

• Harden Storage System Logins and Security.

• Create SNMP Requests role and assign SNMP Login privileges.

• Create SNMP Management group and assign SNMP Request role to it.

• Create SNMP user and assign to SNMP Management group.

• Enable SNMP on the storage controllers.

• Delete SNMP v1 communities from storage controllers.

• Set SNMP contact information for each of the storage controllers.

• Set SNMP location information for each of the storage controllers.

• Establish SNMP Trap Destinations.

• Re-Initialize SNMP on the storage controllers.

• Enable FlashCache.

• Create the necessary infrastructure volumes (Flexible Volumes).

• Create the Infrastructure IP Space.

• Create the Infrastructure vFiler units.

• Map the necessary infrastructure volumes to the infrastructure vFiler.

• Export the infrastructure volumes to the ESXi servers over NFS.

• Set the Priority Levels for the Volumes.

Cisco Nexus 5548 Deployment Procedure—Part I

This section describes the procedures for deploying the Cisco Nexus 5548 platforms for use in a FlexPod for VMware environment and achieves the following objectives:

• Establish a functional pair of Cisco Nexus 5548 switches with proper licensing and features enabled.

• Establish connectivity between FlexPod elements, including through traditional and virtual port channels.

• Establish connectivity to existing data center infrastructure.

The following actions are necessary to configure the Cisco Nexus 5548 switches for use in a FlexPod for VMware environment:

• Execute the Cisco Nexus 5548 setup script.

• Enable the appropriate Cisco Nexus features and licensing.

• Set Global Configurations.

• Create Necessary VLANs including NFS, management, vMotion, Nexus 1000v control and packet, as well as VM data VLANs.

• Add individual port descriptions for troubleshooting.

• Create Necessary Port-Channels including the vPC peer-link.

• Add Port-Channel Configurations.

• Configure Virtual Port-Channels (vPCs) to UCS fabric interconnects and NetApp controllers.

• Configure uplinks into existing network infrastructure, preferably via vPC.

• Configure trunk ports for the Cisco Nexus 1010 virtual appliances.

• Save the configuration.

Cisco Unified Computing System Deployment Procedure

This section provides the procedure for configuring the Cisco Unified Computing System for use in a FlexPod for VMware environment. This workflow should achieve the following goals:

• Create a functional Cisco UCS fabric cluster

• Create the logical building blocks for the UCS management model, including MAC, WWNN, WWPN, UUID and server pools, vNIC and vHBA templates, and VLANs and VSANs via UCSM

• Define policies enforcing inventory discovery, network control, and server boot rules via UCSM

• Create Service Profile templates

• Instantiate Service Profiles by associating templates with physical blades

The following process should be followed for proper configuration:

• Execute the initial setup of the Cisco UCS 6100 Fabric Interconnects.

• Log into the Cisco UCS Manager via Web browser.

• Edit the Chassis Discovery Policy to reflect the number of links from the chassis to the fabric interconnects.

• Enable Fibre Channel Server and Uplink Ports.

• Create an Organization which manages the FlexPod infrastructure and owns the logical building blocks.

• Create MAC Address Pools under infrastructure Organization.

• Create global VLANs, including NFS, vMotion, Nexus 1000v control and packet, as well as VM data VLANs.

• Create a Network Control Policy under infrastructure Organization.

• Create vNIC Template under infrastructure Organization using previously defined pools.

• Create Uplink Port-Channels to the Cisco Nexus 5548 Switches.

• Create WWNN Pool under infrastructure Organization.

• Create WWPN Pools under infrastructure Organization.

• Create global VSANs.

• Create vHBA Templates for Fabric A and B under infrastructure Organization.

• Create Boot Policies under infrastructure Organization.

• Create Server Pools under infrastructure Organization.

• Create UUID Suffix Pools under infrastructure Organization.

• Create Service Profile Templates under infrastructure Organization.

• Create Service Profiles under infrastructure Organization.

• Add a block of IP Addresses for KVM access.
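To illustrate the MAC address pool step, the following minimal Python sketch expands a pool definition (first address plus block size) into the individual addresses that service profiles would draw from. The `00:25:B5` prefix is the one Cisco UCS Manager suggests for MAC pools. The `0A`/`0B` fabric byte, the block size of 32, and the choice of one pool per fabric are assumptions made for this example, not values taken from this guide.

```python
def mac_block(first_mac, size):
    """Return `size` consecutive MAC addresses starting at `first_mac`."""
    start = int(first_mac.replace(":", ""), 16)
    macs = []
    for offset in range(size):
        raw = f"{start + offset:012X}"  # 12 hex digits, zero padded
        macs.append(":".join(raw[i:i + 2] for i in range(0, 12, 2)))
    return macs


# Hypothetical pools: one per fabric, so a MAC address reveals its fabric.
FABRIC_A_POOL = mac_block("00:25:B5:0A:00:00", 32)
FABRIC_B_POOL = mac_block("00:25:B5:0B:00:00", 32)

if __name__ == "__main__":
    print(FABRIC_A_POOL[0], "to", FABRIC_A_POOL[-1])
    print(FABRIC_B_POOL[0], "to", FABRIC_B_POOL[-1])
```

Encoding the fabric in the address is a common convention that makes it easier to tell from a MAC table which fabric a vNIC belongs to. Any non-overlapping scheme works equally well.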

Gather Necessary Information

Once the Cisco UCS Service Profiles have been created, each infrastructure blade in the environment has a unique configuration. To proceed with the FlexPod for VMware deployment, specific information must be gathered from each Cisco UCS blade as well as from the NetApp controllers. Table 3 and Table 4 detail the information that is needed for later use.

Note

On each NetApp controller, use the "fcp show adapters" command to gather the above information.

Cisco Nexus 5548 Deployment Procedure—Part II

This section describes the procedures for additional Fibre Channel functionality on the Cisco Nexus 5548 platforms within the FlexPod for VMware environment and achieves the following objectives:

• Creates dedicated VSANs for each Fibre Channel fabric

• Allocates ports as Fibre Channel resources

• Defines Fibre Channel aliases for Service Profiles and NetApp controller ports

• Establishes Fibre Channel Zoning and working sets

The following measures should be taken on each Nexus platform:

• Create VSANs for fabric "A" or "B" on respective Nexus platform.

• Assign the appropriate FC interfaces to the VSAN.

• Create device aliases on each Cisco Nexus 5548 for each service profile using corresponding fabric PWWN.

• Create device aliases on each Cisco Nexus 5548 for each NetApp controller using corresponding fabric PWWN.

• Create Zones for each service profile and assign devices as members via Fibre Channel aliases.

• Activate the zoneset.

• Save the configuration.
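The alias, zone, and zoneset steps above follow a regular pattern, so the switch commands can be generated from a list of names and PWWNs. The following Python sketch builds NX-OS command lines for one fabric, with one zone per service profile containing that initiator and the NetApp target ports. It is an illustration only: the alias names, PWWNs, VSAN ID, and zoneset name are hypothetical placeholders, and the real values come from the information gathered earlier.

```python
def zoning_commands(vsan_id, zoneset, initiators, targets):
    """Build NX-OS lines for device aliases and single-initiator zones.

    `initiators` and `targets` map alias names to PWWNs for ONE fabric.
    """
    lines = ["device-alias database"]
    for alias, pwwn in {**initiators, **targets}.items():
        lines.append(f"  device-alias name {alias} pwwn {pwwn}")
    lines.append("device-alias commit")

    # One zone per service profile: the initiator plus every target port.
    for alias in initiators:
        lines.append(f"zone name {alias} vsan {vsan_id}")
        lines.append(f"  member device-alias {alias}")
        for target in targets:
            lines.append(f"  member device-alias {target}")

    lines.append(f"zoneset name {zoneset} vsan {vsan_id}")
    for alias in initiators:
        lines.append(f"  member {alias}")
    lines.append(f"zoneset activate name {zoneset} vsan {vsan_id}")
    return lines


# Hypothetical fabric A inputs; none of these values come from this guide.
EXAMPLE = zoning_commands(
    vsan_id=101,
    zoneset="flexpod-fabric-a",
    initiators={"esxi-host1-a": "20:00:00:25:b5:00:0a:00"},
    targets={
        "fas-ctrl-a-0c": "50:0a:09:81:00:00:00:01",
        "fas-ctrl-b-0c": "50:0a:09:81:00:00:00:02",
    },
)

if __name__ == "__main__":
    print("\n".join(EXAMPLE))
```

The generated lines are meant to be reviewed and then pasted in configuration mode on the Nexus 5548 that serves the matching fabric. The same function would be called a second time with fabric B values for the other switch.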

NetApp FAS3210A Deployment Procedure—Part II

This section describes additional procedures necessary on the NetApp controllers to provide UCS stateless boot functionality. At the end of this workflow the following objectives should be met:

• Fibre Channel target ports defined

• Fibre Channel Initiator Groups (igroups) defined for each service profile

• Boot LUNs allocated for each Cisco UCS service profile

• Boot LUN mapped to associated Cisco UCS service profile

The following process outlines the steps necessary:

• Create igroups.

• Create LUNs for the Service Profiles.

• Map LUNs to igroups.
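The three steps above repeat once per service profile, which makes them a natural fit for a small generator. The Python sketch below builds the Data ONTAP 7-Mode command lines for one host: an FCP igroup containing the host's two vHBA WWPNs, a boot LUN, and a mapping at LUN ID 0. The host name, WWPNs, volume name `esxi_boot`, and the 10 GB size are hypothetical values chosen for illustration, so adjust them to the environment before use.

```python
def boot_lun_commands(host, wwpns, volume="esxi_boot", size="10g"):
    """Return 7-Mode commands that create and map a boot LUN for one host.

    `volume` and `size` are illustrative defaults, not values from this guide.
    """
    path = f"/vol/{volume}/{host}"
    return [
        # -f selects an FCP igroup; -t sets the initiator OS type.
        f"igroup create -f -t vmware {host} {' '.join(wwpns)}",
        f"lun create -s {size} -t vmware {path}",
        # LUN ID 0 is the conventional ID for a SAN boot LUN.
        f"lun map {path} {host} 0",
    ]


# Hypothetical service profile with one vHBA per fabric.
EXAMPLE = boot_lun_commands(
    "esxi-host1",
    ["20:00:00:25:b5:00:0a:00", "20:00:00:25:b5:00:0b:00"],
)

if __name__ == "__main__":
    print("\n".join(EXAMPLE))
```

Naming the igroup and the LUN after the service profile keeps the mapping between a blade's identity and its boot LUN easy to audit.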

VMware ESXi Deployment Procedure

This section describes the installation of ESXi on the Cisco UCS and should result in the following:

• A functional ESXi host

• NFS and vMotion network connectivity

• Availability of NFS datastores to the ESXi host

The following outlines the process for installing VMware ESXi within a FlexPod for VMware environment.

• Deploy VMware ESXi via the UCSM KVM Console.

There are multiple methods for installing ESXi within such an environment. In this case, an ISO image is mounted via the KVM console to make ESXi accessible to the blade.

• Set up the ESXi Host's Administration Password.

• Set up the ESXi Host's Management Networking.

• Set up the management VLAN.

• Set up DNS.

• Set up the NFS and vMotion VMkernel ports with Jumbo Frames MTU.

• Access the ESXi host via Web browser and download VMware vSphere Client.

• Log into VMware ESXi Host using VMware vSphere Client.

• Set up the vMotion VMkernel Port on the Virtual Switch for individual hosts.

• Change VLAN ID for default VM-Traffic Port-group called "VM-Network".

• Mount the Required datastores for individual hosts.

• Set NTP time configuration for individual hosts.

• Move the swapfile from local to NFS export location.

VMware vCenter Server Deployment Procedure

The following section describes the installation of VMware vCenter within a FlexPod for VMware environment and results in the following:

• A running VMware vCenter virtual machine

• A running SQL virtual machine acting as the vCenter database server

• A vCenter DataCenter with associated ESXi hosts

• VMware DRS and HA functionality enabled

The deployment procedures necessary to achieve these objectives include:

• Log into VMware ESXi Host using VMware vSphere Client.

• Build a SQL Server VM using Windows Server 2008 R2 x64 image.

• Create the required databases and database users. Use the script provided in the vCenter installation directory.

Note

VMware vCenter can use one of a number of vendor Databases. This deployment guide assumes Microsoft SQL Server 2008. If a database server already exists and it is compatible with vCenter you can create the required database instance for vCenter and skip this step.

• Build a vCenter virtual machine on another Windows Server 2008 R2 virtual machine instance.

• Install SQL Server 2008 R2 Native Client on the vCenter virtual machine.

• Create Data Source Name referencing the SQL instance on the vCenter machine.

• Install VMware vCenter Server referencing the SQL server data source previously established.

• Create a vCenter Datacenter.

• Create a new management cluster with DRS and HA enabled.

• Add Hosts to the management cluster.

Cisco Nexus 1010 and 1000V Deployment Procedure

The following section outlines the procedures to deploy the Cisco Nexus 1010 and 1000v platforms within a FlexPod for VMware environment. At the completion of this section the following should be in place:

• A clustered pair of Cisco Nexus 1010s

• An active/standby pair of Nexus 1000v virtual supervisor modules (VSM)

• The Nexus 1000v acting as the virtual distributed switching platform for vSphere supporting VM, NFS and vMotion traffic types

The following procedures are required to meet these objectives:

• Log into Cisco Nexus 1010 virtual appliance console.

• Configure the CIMC or "out-of-band" management interface.

• Execute the Cisco Nexus 1010 Virtual Appliances setup.

• Create and install the Cisco Nexus 1000V VSM on a Nexus 1010 virtual service blade.

• Register the Cisco Nexus 1000V as a vCenter Plug-in.

• Configure Networking on the Cisco Nexus 1000V, including:

– Management, NFS, vMotion and virtual machine data traffic VLANs

– vCenter connectivity

– Port profiles

• Install the Nexus 1000V VEMs on each ESXi host.

• Replace the default virtual switch with the Cisco Nexus 1000V and add uplink ports to Cisco Nexus 1000V.

• Enable Jumbo Frames in the Nexus 1000V.

NetApp Virtual Storage Console Deployment Procedure

The following presents the general procedures for installing the NetApp Virtual Storage Console for use in a FlexPod for VMware environment.

• Install the NetApp Virtual Storage Console on a dedicated virtual machine running Microsoft Windows Server 2008 R2 x64 with 4 GB of RAM, 30 GB of storage, and two network interfaces for management and NFS traffic.

Note

The VSC download is available at: http://now.netapp.com.

Note

This machine may also host the NetApp DataFabric Manager.

• Configure the VSC plug-in to register with vCenter.

• Configure the VSC via vCenter NetApp tab to work with the FlexPod vFilers.

• Set the recommended values for ESXi hosts via NetApp best practices for HBA/CNA, MPIO, and NFS.

NetApp Operations Manager Deployment Procedure

The following section provides the general procedures for configuring the NetApp Operations Manager which is part of the DataFabric Manager (DFM) 4.0 suite for use in a FlexPod for VMware environment. After completing this section the following should be available:

• A Microsoft Windows 2008 virtual machine running NetApp DataFabric Manager Suite including:

– Operations Manager

– Provisioning Manager

– Protection Manager

• NetApp Operations Manager monitoring both FlexPod for VMware storage controllers

The following procedures configure NetApp Operations Manager for use in a FlexPod for VMware environment:

• Install DFM on the same Windows virtual machine hosting the Virtual Storage Console via Web browser (Windows).

Note

DFM is available at: http://now.netapp.com/NOW/download/software/dfm_win/Windows/.

• Generate a secure SSL key for the DFM HTTPS server.

• Enable HTTPS.

• Add a license in DFM server.

• Enable SNMP v3 configuration.

• Configure AutoSupport information.

• Run diagnostics to verify DFM communication with FlexPod controllers.

• Configure an SNMP Trap Host.

• Configure Operations Manager to generate and send e-mails for every event of Critical or higher severity.

Appendix—FlexPod for VMware Configuration Information

The following tables outline the information which needs to be available to complete the setup and deployment of FlexPod for VMware.

Global Configuration Information

This information is used throughout the deployment across multiple layers in the environment.

NetApp Configuration Information

The information in Table 6 through Table 9 is specific to the NetApp portion of the deployment only.

Cisco Configuration Information

The information in Table 10 through Table 12 is specific to the Cisco portion of the deployment only.

VMware Configuration Information

The information in Table 13 is specific to the VMware portion of the deployment only.

NetApp FAS3200 Sample Configuration

Filer Sample Interface Configuration

ntap3200-1a> ifconfig -a
c0a: flags=0x354a867<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 9000 PRIVATE
    inet 192.168.1.85 netmask-or-prefix 0xffffff00 broadcast 192.168.1.255
    ether 00:a0:98:13:d2:d0 (auto-unknown-enabling) flowcontrol full
c0b: flags=0x3d4a867<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 9000 PRIVATE
    inet 192.168.2.135 netmask-or-prefix 0xffffff00 broadcast 192.168.2.255
    ether 00:a0:98:13:d2:d1 (auto-10g_kr-fd-up) flowcontrol full
e0M: flags=0x694c867<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM,NOWINS> mtu 1500
    inet 10.61.185.144 netmask-or-prefix 0xffffff00 broadcast 10.61.185.255
    partner e0M (not in use)
    ether 00:a0:98:13:d2:d2 (auto-100tx-fd-up) flowcontrol full
e0P: flags=0x2d4c867<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500
    inet 192.168.2.48 netmask-or-prefix 0xfffffc00 broadcast 192.168.3.255 noddns
    ether 00:a0:98:13:d2:d3 (auto-100tx-fd-up) flowcontrol full
e0a: flags=0x250c866<BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500
    ether 00:a0:98:13:d2:ce (auto-unknown-cfg_down) flowcontrol full
e0b: flags=0x250c866<BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 1500
    ether 00:a0:98:13:d2:cf (auto-unknown-cfg_down) flowcontrol full
e2a: flags=0x8bd0a867<BROADCAST,RUNNING,MULTICAST,TCPCKSUM,VLAN> mtu 9000
    ether 02:a0:98:13:d2:d0 (auto-10g_sr-fd-up) flowcontrol full
    trunked vif0
e2b: flags=0x8bd0a867<BROADCAST,RUNNING,MULTICAST,TCPCKSUM,VLAN> mtu 9000
    ether 02:a0:98:13:d2:d0 (auto-10g_sr-fd-up) flowcontrol full
    trunked vif0
lo: flags=0x1948049<UP,LOOPBACK,RUNNING,MULTICAST,TCPCKSUM> mtu 8160
    inet 127.0.0.1 netmask-or-prefix 0xff000000 broadcast 127.0.0.1
    ether 00:00:00:00:00:00 (RNIC Provider)
vif0: flags=0xa3d0a863<BROADCAST,RUNNING,MULTICAST,TCPCKSUM,VLAN> mtu 9000
    ether 02:a0:98:13:d2:d0 (Enabled virtual interface)
vif0-900: flags=0x394a863<UP,BROADCAST,RUNNING,MULTICAST,TCPCKSUM> mtu 9000
    inet 192.168.90.144 netmask-or-prefix 0xffffff00 broadcast 192.168.90.255
    partner vif0-900 (not in use)
    ether 02:a0:98:13:d2:d0 (Enabled virtual interface)

Sample Startup Information Configuration

ntap3200-1a> rdfile /etc/rc
hostname ntap3200-1a
vif create lacp vif0 -b ip e1a e1b
vlan create vif0 3150 900
ifconfig e0M `hostname`-e0M netmask 255.255.255.0 mtusize 1500 -wins flowcontrol full partner e0M
route add default 10.61.185.1 1
routed on
options dns.domainname rtp.netapp.com
options dns.enable on
options nis.enable off
savecore
vlan create vif0 900
ifconfig vif0-900 mtusize 9000
ifconfig vif0-900 partner vif0-900
ifconfig vif0-900 192.168.90.144 netmask 255.255.255.0
vlan add vif0 3150
ifconfig vif0-3150 `hostname`-vif0-3150 netmask 255.255.255.0 mtusize 1500 -wins partner vif0-3150
ifconfig vif0-3150 192.168.150.1 netmask 255.255.255.0

Sample Volume Information

ntap3200-1a> vol status
Volume                      State   Status         Options
infrastructure_root         online  raid_dp, flex  guarantee=none,
                                                   fractional_reserve=0
vol0                        online  raid_dp, flex  root
infrastructure_datastore_1  online  raid_dp, flex  guarantee=none,
                                    sis            fractional_reserve=0
esxi_boot_A                 online  raid_dp, flex  guarantee=none,
                                    sis            fractional_reserve=0

Sample LUN Information

ntap3200-1a> lun show -m
LUN path                     Mapped to     LUN ID  Protocol
-----------------------------------------------------------------------
/vol/esxi_boot_A/ucs2b-1-sc  ucs2b-1-sc_A  0       FCP
                             ucs2b-1-sc_B  0       FCP

Sample Initiator Group (igroup) Information

ntap3200-1a> igroup show
  ucs2b-1-sc_A (FCP) (ostype: vmware):
    20:00:00:25:b5:00:0a:9f (logged in on: 0c)
  ucs2b-1-sc_B (FCP) (ostype: vmware):
    20:00:00:25:b5:00:0b:df (logged in on: 0d)

Sample vFiler Structure

ntap3200-1a> vfiler status
vfiler0 running
infrastructure_1_vfiler running

Sample List of Defined ipspaces and Interface Assignment

ntap3200-1a> ipspace list
Number of ipspaces configured: 3
default-ipspace (e0M e0P e0a e0b )
infrastructure (vif0-900 )

Sample vFiler Context Route Configuration

infrastructure_1_vfiler@ntap3200-1a> route -s
Routing tables

Internet:
Destination      Gateway            Flags  Refs  Use   Interface
192.168.90       link#12            UC     0     0     vif0-900
192.168.90.109   0:50:56:70:f8:9a   UHL    2     409   vif0-900
192.168.90.110   0:50:56:77:8a:ac   UHL    2     5181  vif0-900
192.168.90.111   0:50:56:70:c0:80   UHL    2     9     vif0-900
192.168.90.112   0:50:56:7b:df:f9   UHL    2     9     vif0-900
192.168.90.117   0:50:56:a0:0:0     UHL    0     18    vif0-900

Sample vFiler Context Exported Directories and Files

infrastructure_1_vfiler@ntap3200-1a> exportfs
/vol/infrastructure_datastore_1 -sec=sys,rw=192.168.90.109:192.168.90.110:192.168.90.111:192.168.90.112:192.168.95.10,root=192.168.90.109:192.168.90.110:192.168.90.111:192.168.90.112:192.168.95.10
/vol/infrastructure_root -sec=sys,rw,anon=0

Cisco Nexus 5548 Sample Running Configuration

version 5.0(2)N2(1)
feature fcoe
feature npiv
feature telnet
cfs ipv4 distribute
cfs eth distribute
feature lacp
feature vpc
feature lldp
username admin password 5 $1$L3ZfgcnE$jVX7X6bkIQiIr32esCZ2O. role network-admin
ip domain-lookup
ip domain-lookup
switchname n5k-2
system jumbomtu 9000
logging event link-status default
ip access-list classify_COS_4
  10 permit ip 192.168.91.0/24 any
  20 permit ip any 192.168.91.0/24
ip access-list classify_COS_5
  10 permit ip 192.168.90.0/24 any
  20 permit ip any 192.168.90.0/24
class-map type qos class-fcoe
class-map type qos match-all Silver_Traffic
  match access-group name classify_COS_4
class-map type qos match-all Platinum_Traffic
  match access-group name classify_COS_5
class-map type queuing class-all-flood
  match qos-group 2
class-map type queuing class-ip-multicast
  match qos-group 2
policy-map type qos Global_Classify
  class Platinum_Traffic
    set qos-group 2
  class Silver_Traffic
    set qos-group 4
class-map type network-qos class-all-flood
  match qos-group 2
class-map type network-qos Silver_Traffic_NQ
  match qos-group 4
class-map type network-qos class-ip-multicast
  match qos-group 2
class-map type network-qos Platinum_Traffic_NQ
  match qos-group 2
policy-map type network-qos Setup_QOS
  class type network-qos Platinum_Traffic_NQ
    set cos 5
    mtu 9000
  class type network-qos Silver_Traffic_NQ
    set cos 4
    mtu 9000
  class type network-qos class-fcoe
    pause no-drop
    mtu 2158
  class type network-qos class-default
system qos
  service-policy type network-qos Setup_QOS
  service-policy type qos input Global_Classify
snmp-server user admin network-admin auth md5 0xbc83a1f2e2679352248d184bc5580243 priv 0xbc83a1f2e2679352248d184bc5580243 localizedkey
snmp-server enable traps entity fru
vrf context management
  ip route 0.0.0.0/0 10.61.185.1
vlan 1
vlan 185
  name MGMT_VLAN
vlan 900
  name NFS_VLAN
vlan 901
  name vMotion_VLAN
vlan 950
  name Packet_Control_VLAN
spanning-tree port type edge bpduguard default
spanning-tree port type edge bpdufilter default
spanning-tree port type network default
vpc domain 23
  role priority 20
  peer-keepalive destination 10.61.185.69 source 10.61.185.70
vsan database
  vsan 102 name "Fabric_B"
device-alias database
  device-alias name ucs2b-1_B pwwn 20:00:00:25:b5:00:0b:df
  device-alias name ucs2b-2_B pwwn 20:00:00:25:b5:00:0b:ff
  device-alias name ntap3200-1a_0d pwwn 50:0a:09:82:8d:dd:93:e8
  device-alias name ntap3200-1b_0d pwwn 50:0a:09:82:9d:dd:93:e8
device-alias commit
fcdomain fcid database
  vsan 102 wwn 20:41:00:05:9b:79:07:80 fcid 0x9e0000 dynamic
  vsan 102 wwn 50:0a:09:82:00:05:5c:71 fcid 0x9e0001 dynamic
  vsan 102 wwn 50:0a:09:82:00:05:5c:b1 fcid 0x9e0002 dynamic
  vsan 102 wwn 20:00:00:25:b5:00:0b:df fcid 0x9e0003 dynamic
  ! [ucs2b-1_B]
  vsan 102 wwn 20:00:00:25:b5:00:0b:ff fcid 0x9e0004 dynamic
  ! [ucs2b-2_B]
  vsan 102 wwn 50:0a:09:82:9d:dd:93:e8 fcid 0x9e0005 dynamic
  ! [ntap3200-1b_0d]
  vsan 102 wwn 50:0a:09:82:8d:dd:93:e8 fcid 0x9e0006 dynamic
  ! [ntap3200-1a_0d]
interface port-channel10
  description vPC Peer-Link
  switchport mode trunk
  vpc peer-link
  switchport trunk native vlan 2
  spanning-tree port type network
interface port-channel11
  description ntap3200-1a
  switchport mode trunk
  vpc 11
  switchport trunk native vlan 2
  switchport trunk allowed vlan 900
  spanning-tree port type edge trunk
interface port-channel12
  description ntap3200-1b
  switchport mode trunk
  vpc 12
  switchport trunk native vlan 2
  switchport trunk allowed vlan 900
  spanning-tree port type edge trunk
interface port-channel13
  description ucsm-2-A
  switchport mode trunk
  vpc 13
  switchport trunk allowed vlan 185,900-901,950
  spanning-tree port type edge trunk
interface port-channel14
  description ucsm-2-B
  switchport mode trunk
  vpc 14
  switchport trunk allowed vlan 185,900-901,950
  spanning-tree port type edge trunk
interface port-channel20
  description mgmt-1 uplink
  switchport mode trunk
  vpc 20
  switchport trunk native vlan 2
  switchport trunk allowed vlan 185
  spanning-tree port type network
vsan database
  vsan 102 interface fc2/1
  vsan 102 interface fc2/2
  vsan 102 interface fc2/3
interface fc2/1
  no shutdown
interface fc2/2
  no shutdown
interface fc2/3
  no shutdown
interface fc2/4
interface Ethernet1/1
  description ntap3200-1a:e1b
  switchport mode trunk
  switchport trunk native vlan 2
  switchport trunk allowed vlan 900
  channel-group 11 mode active
interface Ethernet1/2
  description ntap3200-1b:e1b
  switchport mode trunk
  switchport trunk native vlan 2
  switchport trunk allowed vlan 900
  channel-group 12 mode active
interface Ethernet1/3
interface Ethernet1/4
interface Ethernet1/5
  description n5k-1:Eth1/5
  switchport mode trunk
  switchport trunk native vlan 2
  channel-group 10 mode active
interface Ethernet1/6
  description n5k-1:Eth1/6
  switchport mode trunk
  switchport trunk native vlan 2
  channel-group 10 mode active
interface Ethernet1/7
  description n1010-1:Eth2
  switchport mode trunk
  switchport trunk allowed vlan 185,950
  spanning-tree port type edge trunk
  speed 1000
interface Ethernet1/8
  description n1010-2:Eth2
  switchport mode trunk
  switchport trunk allowed vlan 185,950
  spanning-tree port type edge trunk
  speed 1000
interface Ethernet1/9
  description ucsm-2-A:Eth1/8
  switchport mode trunk
  switchport trunk allowed vlan 185,900-901,950
  channel-group 13 mode active
interface Ethernet1/10
  description ucsm-2-B:Eth1/8
  switchport mode trunk
  switchport trunk allowed vlan 185,900-901,950
  channel-group 14 mode active
interface Ethernet1/20
  description mgmt-1:Eth1/13
  switchport mode trunk
  switchport trunk native vlan 2
  switchport trunk allowed vlan 185
  channel-group 20 mode active
interface Ethernet2/1
interface Ethernet2/2
interface Ethernet2/3
interface Ethernet2/4
interface mgmt0
  ip address 10.61.185.70/24
line console
line vty
boot kickstart bootflash:/n5000-uk9-kickstart.5.0.2.N2.1.bin
boot system bootflash:/n5000-uk9.5.0.2.N2.1.bin
interface fc2/1
interface fc2/2
interface fc2/3
interface fc2/4
!Full Zone Database Section for vsan 102
zone name ucs2b-1_B vsan 102
  member pwwn 20:00:00:25:b5:00:0b:df
  ! [ucs2b-1_B]
  member pwwn 50:0a:09:82:8d:dd:93:e8
  ! [ntap3200-1a_0d]
zone name ucs2b-2_B vsan 102
  member pwwn 20:00:00:25:b5:00:0b:ff
  ! [ucs2b-2_B]
  member pwwn 50:0a:09:82:9d:dd:93:e8
  ! [ntap3200-1b_0d]
zoneset name flexpod vsan 102
  member ucs2b-1_B
  member ucs2b-2_B
zoneset activate name flexpod vsan 102

Cisco Nexus 1010 Sample Running Configuration

version 4.0(4)SP1(1)
username admin password 5 $1$EVg2LPBC$EX8pjL9GBayKAaUmwjLjD. role network-admin
ntp server 10.61.185.9
ip domain-lookup
ip host n1010-1 10.61.185.165
kernel core target 0.0.0.0
kernel core limit 1
system default switchport
snmp-server user admin network-admin auth md5 0x7ccf323f71b74c6cf1cba6d255e9ded9 priv 0x7ccf323f71b74c6cf1cba6d255e9ded9 localizedkey
snmp-server enable traps license
vrf context management
  ip route 0.0.0.0/0 10.61.185.1
switchname n1010-1
vlan 1,162,950
vlan 902
  name data
vdc n1010-1 id 1
  limit-resource vlan minimum 16 maximum 513
  limit-resource monitor-session minimum 0 maximum 64
  limit-resource vrf minimum 16 maximum 8192
  limit-resource port-channel minimum 0 maximum 256
  limit-resource u4route-mem minimum 32 maximum 80
  limit-resource u6route-mem minimum 16 maximum 48
network-uplink type 3
virtual-service-blade drs1-vsm1
  virtual-service-blade-type name VSM-1.0
  interface control vlan 950
  interface packet vlan 950
  ramsize 2048
  disksize 3
  no shutdown
virtual-service-blade drs2-vsm1
  virtual-service-blade-type name VSM-1.0
  interface control vlan 950
  interface packet vlan 950
  ramsize 2048
  disksize 3
  no shutdown
virtual-service-blade drs3-vsm1
  virtual-service-blade-type name VSM-1.0
  interface control vlan 950
  interface packet vlan 950
  ramsize 2048
  disksize 3
  no shutdown
virtual-service-blade NAM
  virtual-service-blade-type name NAM-1.0
  interface data vlan 902
  ramsize 2048
  disksize 53
  no shutdown primary
interface mgmt0
  ip address 10.61.185.165/16
interface control0
logging logfile messages 6
boot kickstart bootflash:/nexus-1010-kickstart-mz.4.0.4.SP1.1.bin
boot system bootflash:/nexus-1010-mz.4.0.4.SP1.1.bin
boot kickstart bootflash:/nexus-1010-kickstart-mz.4.0.4.SP1.1.bin
boot system bootflash:/nexus-1010-mz.4.0.4.SP1.1.bin
svs-domain
  domain id 51
  control vlan 950
  management vlan 162

Cisco Nexus 1000v Sample Running Configuration

version 4.0(4)SV1(3b)
username admin password 5 $1$hgzMSZ3F$NCCbwTw4Z8QU5yjIo7Me11 role network-admin
ssh key rsa 2048
ntp server 10.61.185.3
ip domain-lookup
ip host n1010-1-vsm 10.61.185.137
kernel core target 0.0.0.0
kernel core limit 1
system default switchport
vem 3
  host vmware id 737ff954-0de3-11e0-0000-000000000001
vem 4
  host vmware id 737ff954-0de3-11e0-0000-000000000002
snmp-server user admin network-admin auth md5 0xfe02f063cf936282f39c604c06e628df priv 0xfe02f063cf936282f39c604c06e628df localizedkey
snmp-server enable traps license
vrf context management
  ip route 0.0.0.0/0 10.61.185.1
hostname n1010-1-vsm
vlan 1
vlan 185
  name MGMT-VLAN
vlan 900
  name NFS-VLAN
vlan 901
  name vMotion-VLAN
vlan 950
  name VM-Traffic-VLAN
vdc n1010-1-vsm id 1
  limit-resource vlan minimum 16 maximum 513
  limit-resource monitor-session minimum 0 maximum 64
  limit-resource vrf minimum 16 maximum 8192
  limit-resource port-channel minimum 0 maximum 256
  limit-resource u4route-mem minimum 32 maximum 80
  limit-resource u6route-mem minimum 16 maximum 48
port-profile type vethernet MGMT-VLAN
  vmware port-group
  switchport mode access
  switchport access vlan 185
  no shutdown
  system vlan 185
  state enabled
port-profile type vethernet NFS-VLAN
  vmware port-group
  switchport mode access
  switchport access vlan 900
  no shutdown
  system vlan 900
  state enabled
port-profile type ethernet Unused_Or_Quarantine_Uplink
  description Port-group created for Nexus1000V internal usage. Do not use.
  vmware port-group
  shutdown
  state enabled
port-profile type vethernet Unused_Or_Quarantine_Veth
  description Port-group created for Nexus1000V internal usage. Do not use.
  vmware port-group
  shutdown
  state enabled
port-profile type vethernet VM-Traffic-VLAN
  vmware port-group
  switchport mode access
  switchport access vlan 950
  no shutdown
  system vlan 950
  state enabled
port-profile type ethernet system-uplink
  description system profile for blade uplink ports
  vmware port-group
  switchport mode trunk
  switchport trunk allowed vlan 185,900-901,950
  system mtu 9000
  channel-group auto mode on mac-pinning
  no shutdown
  system vlan 185,900-901,950
  state enabled
port-profile type vethernet vMotion-VLAN
  vmware port-group
  switchport mode access
  switchport access vlan 901
  no shutdown
  system vlan 901
  state enabled
interface port-channel1
  inherit port-profile system-uplink
  mtu 9000
interface port-channel2
  inherit port-profile system-uplink
  mtu 9000
interface Ethernet3/1
  inherit port-profile system-uplink
  mtu 9000
interface Ethernet3/2
  inherit port-profile system-uplink
  mtu 9000
interface Ethernet4/1
  inherit port-profile system-uplink
  mtu 9000
interface Ethernet4/2
  inherit port-profile system-uplink
  mtu 9000
interface mgmt0
  ip address 10.61.185.137/24
interface Vethernet1
  inherit port-profile MGMT-VLAN
  description VMware VMkernel, vmk0
  vmware dvport 35
interface Vethernet2
  inherit port-profile NFS-VLAN
  description VMware VMkernel, vmk1
  vmware dvport 67
interface Vethernet3
  inherit port-profile vMotion-VLAN
  description VMware VMkernel, vmk2
  vmware dvport 130
interface control0
boot kickstart bootflash:/nexus-1000v-kickstart-mz.4.0.4.SV1.3b.bin sup-1
boot system bootflash:/nexus-1000v-mz.4.0.4.SV1.3b.bin sup-1
boot kickstart bootflash:/nexus-1000v-kickstart-mz.4.0.4.SV1.3b.bin sup-2
boot system bootflash:/nexus-1000v-mz.4.0.4.SV1.3b.bin sup-2
svs-domain
  domain id 10
  control vlan 950
  packet vlan 950
  svs mode L2
svs connection vCenter
  protocol vmware-vim
  remote ip address 10.61.185.114 port 80
  vmware dvs uuid "2d 5b 20 50 21 69 05 64-2c 68 d0 b3 63 bf b2 9f" datacenter-name FlexPod_DC_1
  connect

Cisco Unified Computing System Configuration Extracts

All configurations in this section occur after the initial UCS cluster setup scripts have completed and the UCS Manager is accessible to the administrator. Use the configuration information described above to execute the setup script and complete the deployment required in your environment.

For more information on the initial setup of Cisco UCS Manager, go to: http://www.cisco.com/en/US/products/ps10281/products_installation_and_configuration_guides_list.html and select the appropriate release of the "System Configuration" documentation.

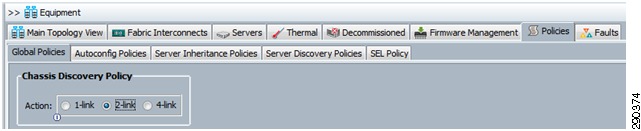

Sample Chassis Discovery Policy Configuration

Define the Chassis Discovery Policy to reflect the number of links from the chassis to the fabric interconnects. FlexPod requires a minimum of two links.

Figure 3 Chassis Discovery Policy Screen

Define and enable Fibre Channel, Server, and Uplink Ports.

Figure 4 Fibre Channel Server and Uplink Ports Screen

The physical display after completing this procedure is shown in Figure 5.

Figure 5 Physical Display after Procedure Completion

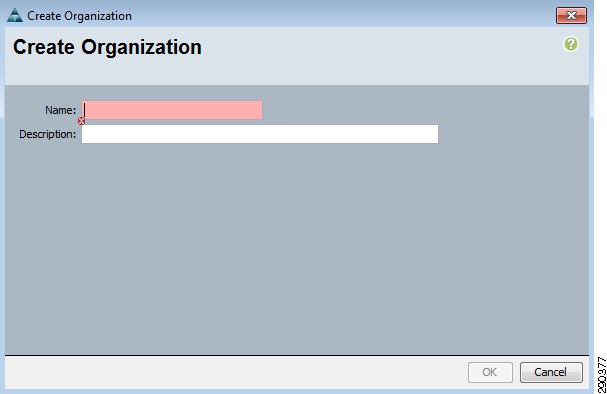

Create an Organization

Organizations allow the physical UCS resources to be logically divided. Each organization can have its own policies, pools, and quality of service definitions. Organizations are hierarchical, so a sub-organization can either inherit characteristics from the organizations above it or establish its own policies, pools, and service definitions.
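The inheritance behavior can be pictured as a lookup that starts at a sub-organization and walks toward the root until a definition is found. The following Python sketch models only that idea and is not how UCS Manager is implemented. The `Org` class, the `resolve` method, and the policy names are hypothetical; only the `prod-drs1` organization name appears in this document.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Org:
    """A node in a hypothetical organization tree."""
    name: str
    parent: Optional["Org"] = None
    policies: Dict[str, str] = field(default_factory=dict)

    def resolve(self, policy: str) -> Optional[str]:
        """Return the nearest definition of `policy`, searching upward."""
        org: Optional["Org"] = self
        while org is not None:
            if policy in org.policies:
                return org.policies[policy]
            org = org.parent
        return None


# The root defines a default; one sub-organization overrides it.
root = Org("root", policies={"network-control": "default"})
prod = Org("prod-drs1", parent=root, policies={"network-control": "cdp-enabled"})
other = Org("other-org", parent=root)

if __name__ == "__main__":
    print(prod.resolve("network-control"))   # local override wins
    print(other.resolve("network-control"))  # inherited from root
```

A sub-organization that defines nothing locally falls back to whatever its ancestors define, which is the behavior the paragraph above describes.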

To create an organization, open the New drop-down menu in the Main panel and select Create Organization. This organization manages the FlexPod infrastructure and owns the logical building blocks.

Figure 6 Create Organization Screen

Create MAC Address Pools

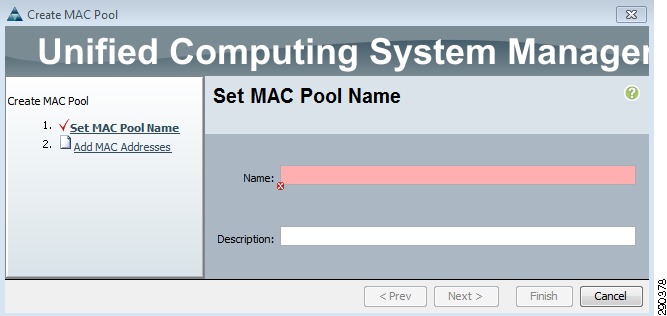

In the Navigation pane, select the LAN tab, click Pools, and select the proper sub-organization. On the Main panel, select Create MAC Pool and complete the form.

Figure 7 Create MAC Pool Screen

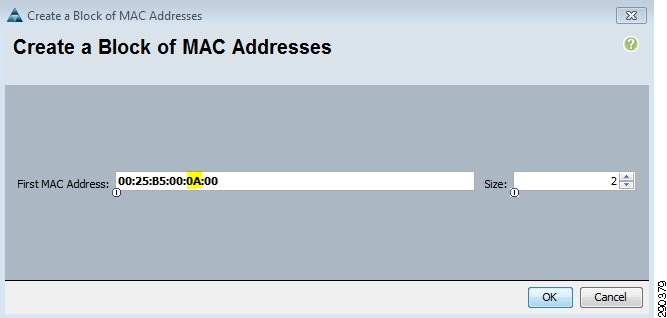

Click Next and complete the form. The second octet position of the MAC address should indicate whether the MAC pool is used in fabric A or fabric B. Set the size to 2.

Figure 8 Create Block of MAC Addresses Screen

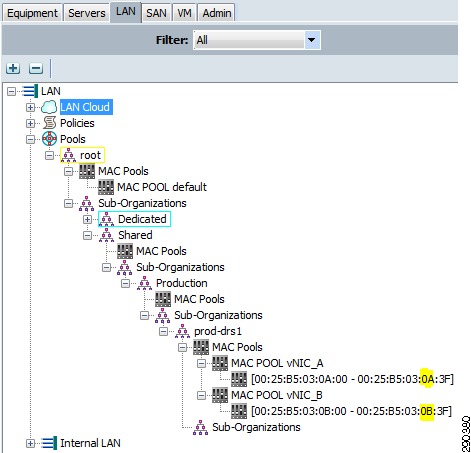

In the example below, the "prod-drs1" organization defines two MAC pools. Each pool uses a distinct hex value in its MAC addresses to show whether the addresses belong to fabric A or fabric B. The alignment of organizations and resources is a fundamental feature of the Cisco UCS.

Figure 9 MAC Pool Examples

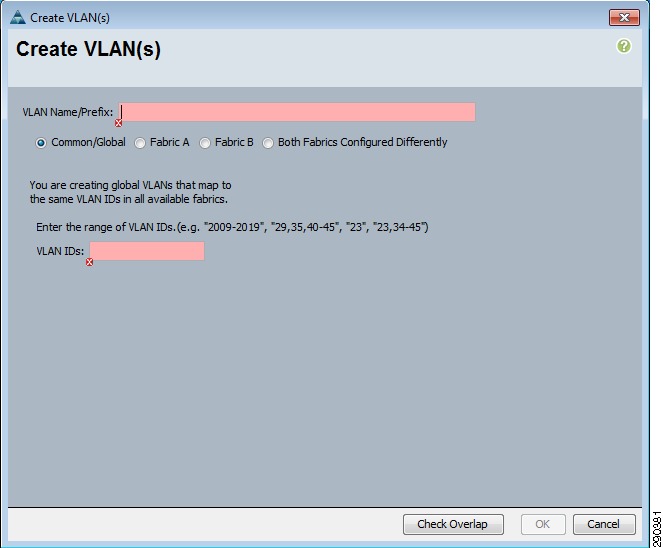
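To make the pool layout concrete, the following Python sketch expands a starting MAC address and a block size into the addresses such a block would contain. The starting addresses are hypothetical. They place 0A or 0B in the fourth octet as the fabric marker, which is an assumption about where the marker sits; use the values from your own planning tables.

```python
from typing import List


def mac_block(start: str, size: int) -> List[str]:
    """Return `size` consecutive MAC addresses beginning at `start`."""
    first = int(start.replace(":", ""), 16)
    if size < 1 or first + size - 1 > 0xFFFFFFFFFFFF:
        raise ValueError("block does not fit in the 48-bit MAC address space")
    block = []
    for offset in range(size):
        raw = f"{first + offset:012X}"  # 12 hex digits, zero padded
        block.append(":".join(raw[i:i + 2] for i in range(0, 12, 2)))
    return block


# Hypothetical starting addresses, one block of size 2 per fabric.
POOL_A = mac_block("00:25:B5:0A:00:00", 2)
POOL_B = mac_block("00:25:B5:0B:00:00", 2)

if __name__ == "__main__":
    print("fabric A:", POOL_A)
    print("fabric B:", POOL_B)
```

Keeping the fabric marker in a fixed octet means the two pools can never overlap, and a MAC address seen on the network can be traced back to its fabric at a glance.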

Create Global VLAN Pools

In the Navigation pane, select the LAN tab, select LAN Cloud, and then select VLANs. On the main panel, click New and select Create VLAN(s).

Figure 10 Create VLANs Screen

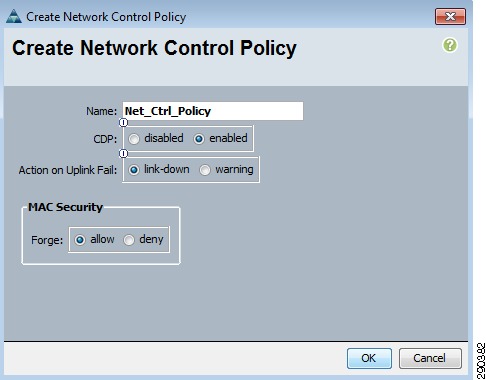

Create a Network Control Policy

In the Navigation pane, select the LAN tab, select LAN Cloud, and then select Policies. Select the appropriate organization for the new network control policy. In the work pane, click the General tab and select Create Network Control Policy. Provide a name for the policy and select the Enabled radio button for CDP.

Figure 11 Create Network Control Policy Screen

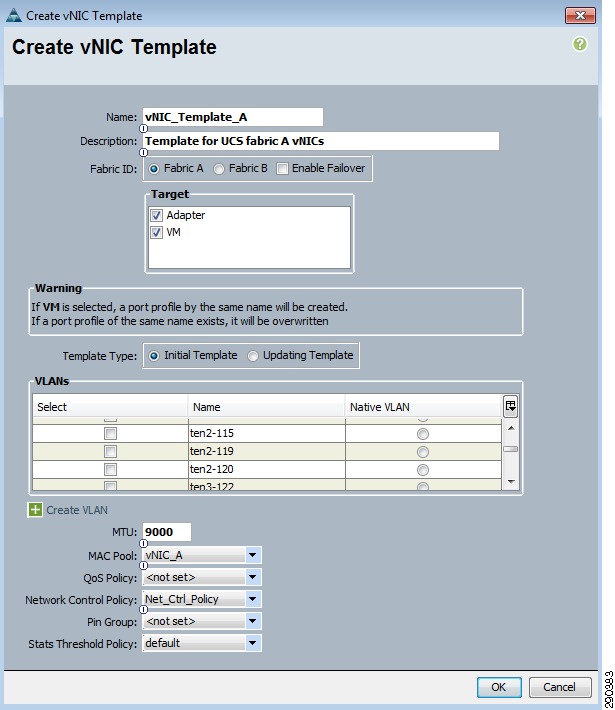

Create vNIC Template

In the Navigation pane, select the LAN tab, select LAN Cloud, and then select Policies. Select the organization requiring a new vNIC Template. In the work pane, click the General tab and select Create vNIC Template. Complete the form and be sure to employ the previously-defined global VLANs, MAC pools, and Network Control Policy. The MTU should be set to 9000.

Create two vNIC templates, one for use in fabric a and one for use in fabric b. The only differences are the name, description, and MAC pool referenced.

Figure 12 Create vNIC Template Screen
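The relationship between the two templates can be sketched as data. The following Python example builds a pair of template descriptions and confirms that they differ only in name, description, and MAC pool. It is an illustration, not a UCS Manager API call: the function names, dictionary keys, template names, pool names, and the `Enable_CDP` policy name are all hypothetical. The VLAN IDs are the ones trunked to the fabric interconnects in the sample Nexus 5548 configuration.

```python
from typing import Dict, List


def vnic_template(fabric: str, mac_pool: str) -> Dict[str, object]:
    """Describe a vNIC template for one fabric (hypothetical field names)."""
    fabric = fabric.upper()
    if fabric not in ("A", "B"):
        raise ValueError("fabric must be 'A' or 'B'")
    return {
        "name": f"vNIC_Template_{fabric}",
        "description": f"vNIC template for fabric {fabric}",
        "mac_pool": mac_pool,
        "mtu": 9000,                             # jumbo frames, as stated above
        "network_control_policy": "Enable_CDP",  # hypothetical policy name
        "vlans": (185, 900, 901, 950),
    }


def differing_fields(a: Dict[str, object], b: Dict[str, object]) -> List[str]:
    """Return the sorted keys whose values differ between two templates."""
    return sorted(key for key in a if a[key] != b.get(key))


TEMPLATE_A = vnic_template("A", "MAC_Pool_A")
TEMPLATE_B = vnic_template("B", "MAC_Pool_B")

if __name__ == "__main__":
    print(differing_fields(TEMPLATE_A, TEMPLATE_B))
```

A comparison like this is a quick way to confirm that the second template was not created with a different MTU or VLAN list by mistake.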

Define QoS Policies and Jumbo Frames

In the Navigation pane, select the LAN tab, select LAN Cloud, and then select QoS System Class. Set the Best Effort QoS system class to 9000 MTU.

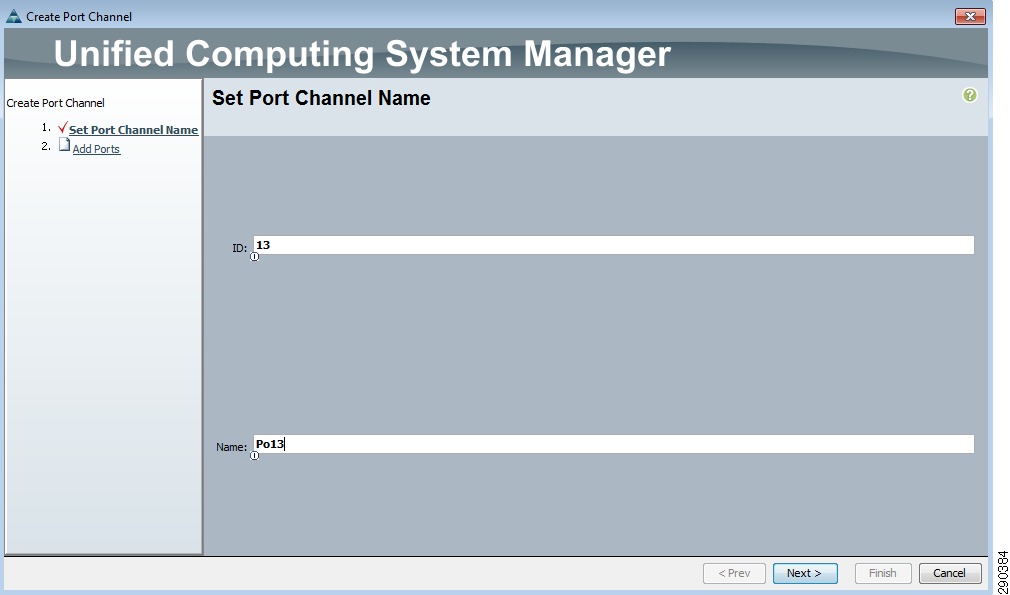

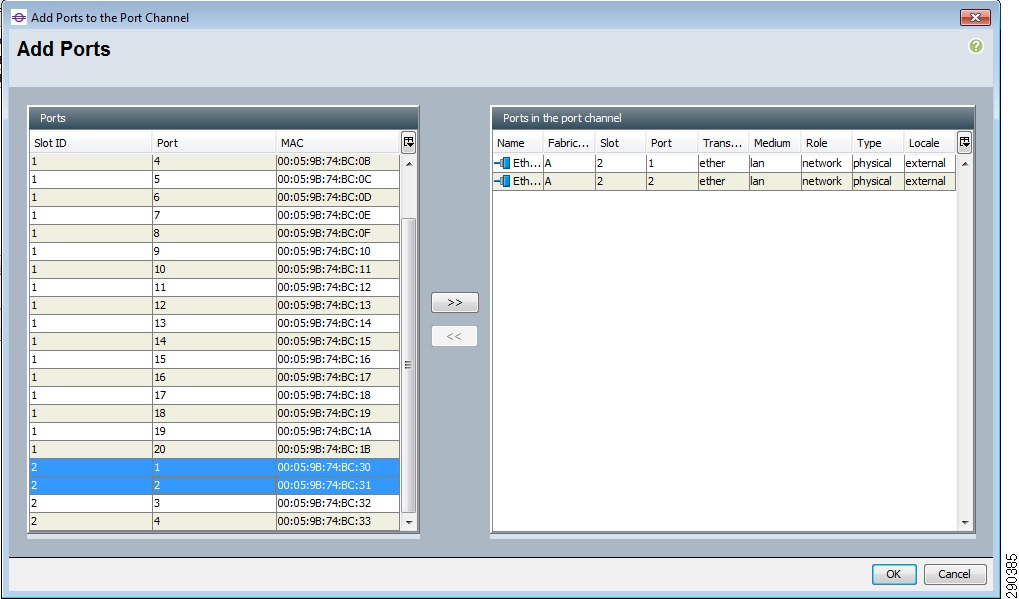
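Jumbo frames only help when every hop on the path carries the same MTU. The following Python sketch checks a list of per-hop MTU values against the 9000-byte target used in this deployment. The hop labels are informal names chosen for this example, and the values are the MTU settings shown in the sample configurations in this appendix.

```python
from typing import Dict, List

REQUIRED_MTU = 9000

# MTU per hop on the NFS path, taken from the sample configurations above.
PATH_MTUS: Dict[str, int] = {
    "NetApp vif0-900": 9000,
    "Nexus 5548 network-qos class": 9000,
    "UCS Best Effort system class": 9000,
    "UCS vNIC template": 9000,
    "Nexus 1000V system-uplink": 9000,
}


def undersized_hops(mtus: Dict[str, int], required: int = REQUIRED_MTU) -> List[str]:
    """Return the hops whose MTU is below the required value."""
    return sorted(name for name, mtu in mtus.items() if mtu < required)


def effective_mtu(mtus: Dict[str, int]) -> int:
    """The usable end-to-end MTU is the smallest MTU on the path."""
    return min(mtus.values())


if __name__ == "__main__":
    bad = undersized_hops(PATH_MTUS)
    print("effective MTU:", effective_mtu(PATH_MTUS))
    print("undersized hops:", bad if bad else "none")
```

If a single hop is left at 1500, the effective MTU drops to 1500 for the whole path, which is why the QoS system class must be changed in addition to the vNIC templates.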

Create Uplink Port-Channels to the Cisco Nexus 5548 Switches

In the Navigation pane, select the LAN tab, select LAN Cloud, and then select Fabric A. Right-click the Port Channels item and select Create Port Channel. Complete the form and click Next. Select uplink ports Ethernet slot 2 ports 1 and 2 and click OK.

Figure 13 Create Port Channel Screen

Figure 14 Add Ports Screen

Create WWNN Pool

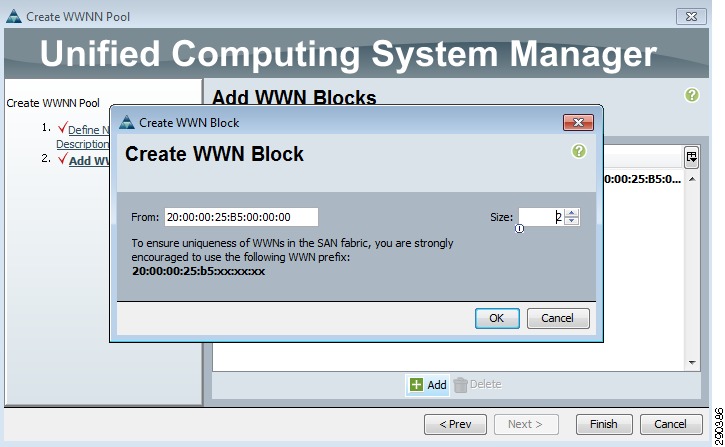

In the Navigation pane, select the SAN tab, select Pools, and select the appropriate sub-organization. Right-click on the WWNN Pools of that sub-organization and select the Create WWNN Pool item. A wizard will launch to create a WWNN Pool. Complete the first form using WWNN_Pool as the name. Click Next and then Add a WWN Block with a size of 2. Click OK, then click Finish.

Figure 15 Create WWNN Pool Screen

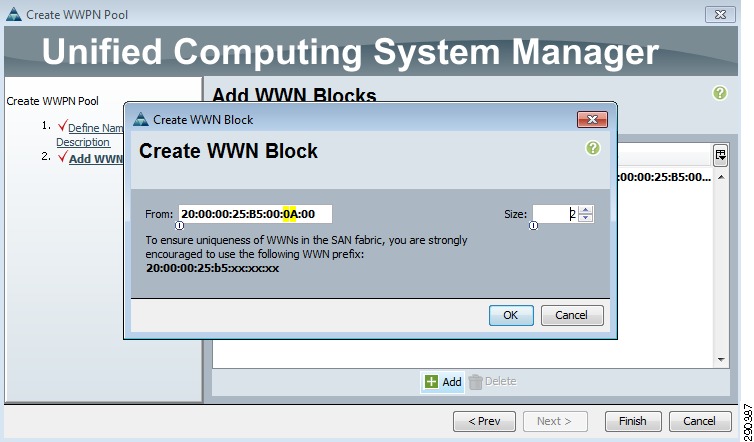

Create WWPN Pools

In the Navigation pane, select the SAN tab, select Pools, and select the appropriate sub-organization. Right-click on the WWPN Pools of that sub-organization and select the Create WWPN Pool item. A wizard will launch to create a WWPN Pool. Complete the first form using WWNN_Pool_A as the name. Click Next and then Add a WWN Block with a size of 2 and a block indicating the fabric assignment in the second octet. Click OK, then click Finish. Repeat this process to create another WWPN pool named WWNN_Pool_B.

Figure 16 Create WWPN Pool Screen

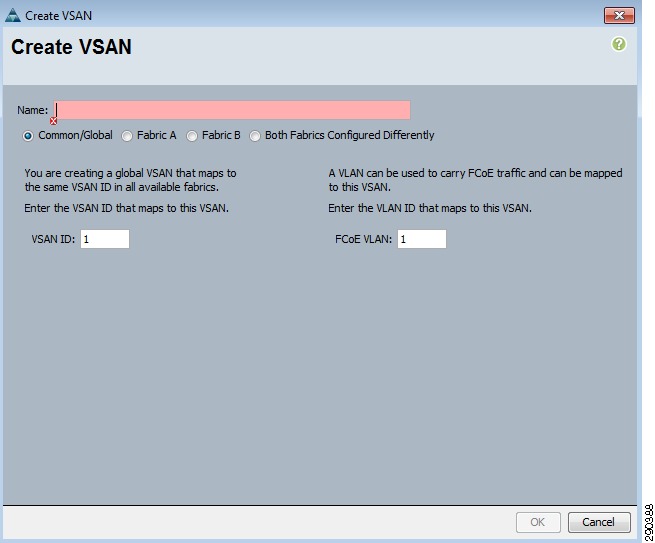
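Encoding the fabric in a fixed octet makes it possible to tell from a WWPN alone which fabric an initiator belongs to. The following Python sketch reads that marker. It assumes the marker is the seventh octet (second from the right) with the values 0a and 0b, which is how the sample initiator WWPNs in this appendix are laid out; `fabric_of` and `FABRIC_MARKERS` are hypothetical names.

```python
from typing import Dict

# Assumed marker values, matching the sample WWPNs in this appendix.
FABRIC_MARKERS: Dict[int, str] = {0x0A: "A", 0x0B: "B"}


def fabric_of(wwpn: str, octet_index: int = 6) -> str:
    """Return 'A' or 'B' based on the fabric marker octet of a WWPN."""
    octets = wwpn.split(":")
    if len(octets) != 8:
        raise ValueError("a WWPN has eight colon-separated octets")
    marker = int(octets[octet_index], 16)
    if marker not in FABRIC_MARKERS:
        raise ValueError(f"octet {octets[octet_index]} is not a known fabric marker")
    return FABRIC_MARKERS[marker]


if __name__ == "__main__":
    # Initiator WWPNs from the sample igroup listing.
    for wwpn in ("20:00:00:25:b5:00:0a:9f", "20:00:00:25:b5:00:0b:df"):
        print(wwpn, "-> fabric", fabric_of(wwpn))
```

The same check is useful when zoning: an initiator whose marker is 0b should only appear in zones on the fabric B switch.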

Create Global VSANs

In the Navigation pane, select the SAN tab, select SAN Cloud, and then select VSANs. On the main panel, click New, then select Create VSAN. Create two VSANs, one for fabric a and one for fabric b.

Figure 17 Create VSAN Screen

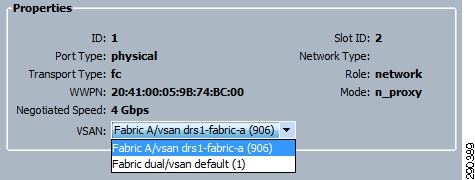

The VSANs must then be associated with the appropriate Fibre Channel uplink ports. To associate the VSANs with the UCS Fibre Channel uplinks, select the Equipment tab in the Navigation pane. Select Fabric Interconnect A and Expansion Module 2. Select Uplink FC Ports, select FC Port 1 uplink, and assign the previously created fabric A VSAN to the port by selecting it from the VSAN drop-down list on the work panel. Repeat this process for FC Port 2 on Fabric Interconnect A and for Fabric Interconnect B FC ports 1 and 2.

Figure 18 VSAN Properties Screen

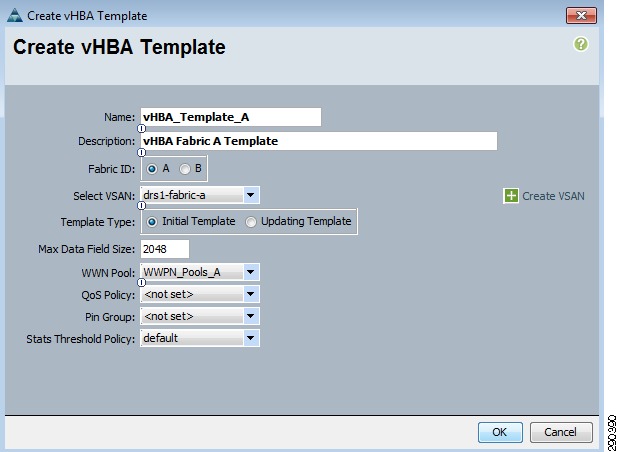
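The resulting assignment is a small table: every FC uplink on a fabric interconnect carries that fabric's VSAN. The following Python sketch builds the table so it can be compared against what is configured. VSAN 102 is the fabric B VSAN from the sample Nexus 5548 configuration; VSAN 101 for fabric A is an assumption made for this example, and `fc_uplink_vsans` is a hypothetical helper.

```python
from typing import Dict, Sequence, Tuple


def fc_uplink_vsans(
    vsan_a: int, vsan_b: int, ports: Sequence[int] = (1, 2)
) -> Dict[Tuple[str, int], int]:
    """Map (fabric interconnect, FC uplink port) to the VSAN it should carry."""
    if vsan_a == vsan_b:
        raise ValueError("fabric A and fabric B need separate VSANs")
    return {
        (fabric, port): vsan
        for fabric, vsan in (("A", vsan_a), ("B", vsan_b))
        for port in ports
    }


# 101 is assumed for fabric A; 102 is named Fabric_B in the sample configuration.
ASSIGNMENTS = fc_uplink_vsans(vsan_a=101, vsan_b=102)

if __name__ == "__main__":
    for (fabric, port), vsan in sorted(ASSIGNMENTS.items()):
        print(f"Fabric Interconnect {fabric} FC port {port} -> VSAN {vsan}")
```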

Create vHBA Templates

In the SAN tab on the Navigation pane, select Policies and the appropriate sub-organization. In the work panel, select Create vHBA Template; a wizard will launch. Name the template vHBA_Template_A, select the fabric A VSAN, and set the WWN Pool to the WWPN pool previously defined for fabric A. Repeat this process for fabric B using a similar naming standard and the appropriate selections.

Figure 19 Create vHBA Template Screen

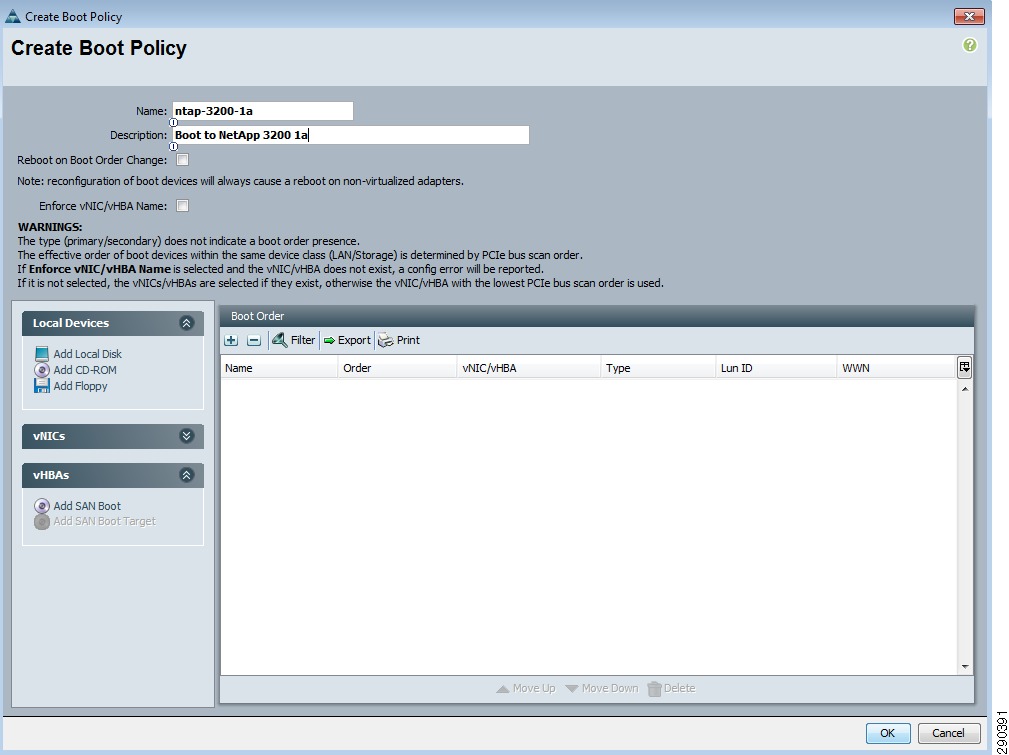

Create Boot Policies

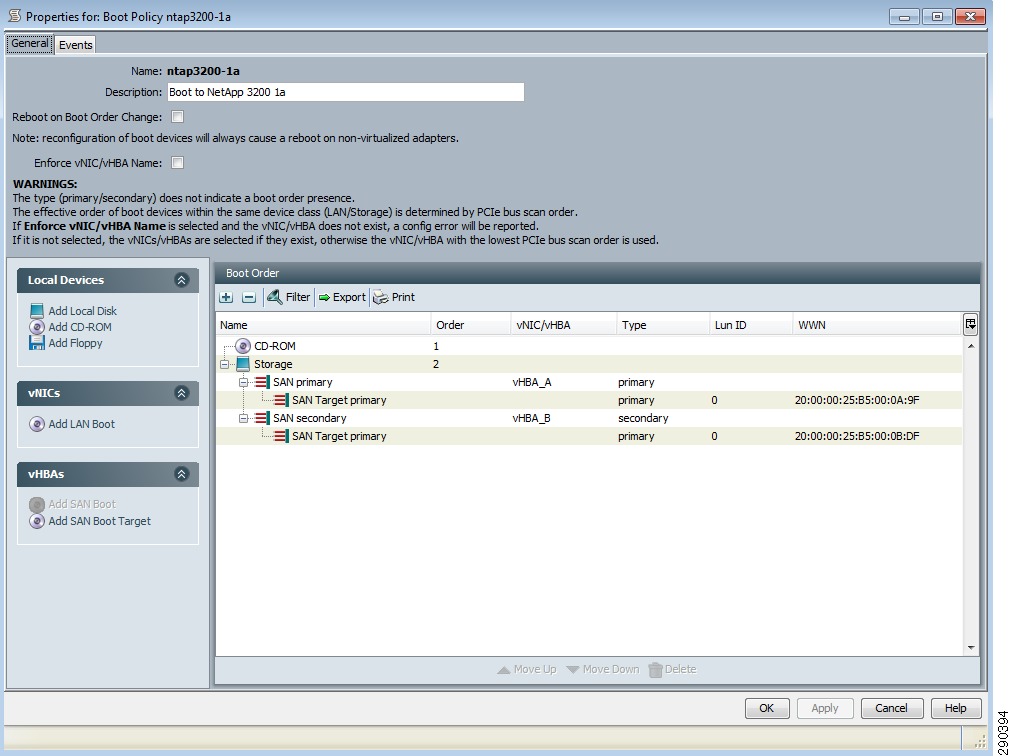

Navigate to the Servers tab in the Navigation pane and select Policies and the appropriate sub-organization. Select Create Boot Policy in the work pane. A wizard window will launch. Name the boot policy after the NetApp controller it will target and provide an optional description of the policy. Leave Reboot on Boot Order Change and Enforce vNIC/vHBA Name unchecked. Select Add CD-ROM under the Local Devices menu.

Figure 20 Create Boot Policy Screen

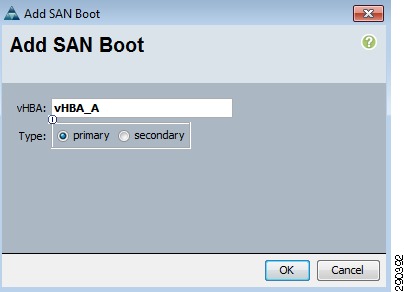

Select Add SAN Boot under the vHBAs menu.

Figure 21 Add SAN Boot Screen

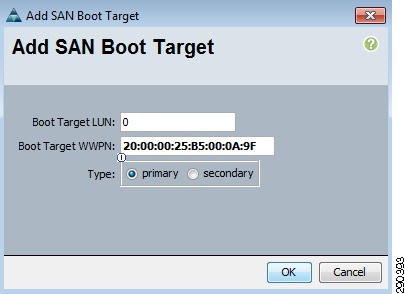

Select Add SAN Boot Target under the vHBAs menu.

Figure 22 Add SAN Boot Target Screen

Note that the Boot Target WWPN matches the NetApp filer defined earlier.

Figure 23 is a complete view of the Create Boot Policy workspace. Repeat this process for the secondary filer using similar naming conventions.

Figure 23 Properties for Boot Policy Screen

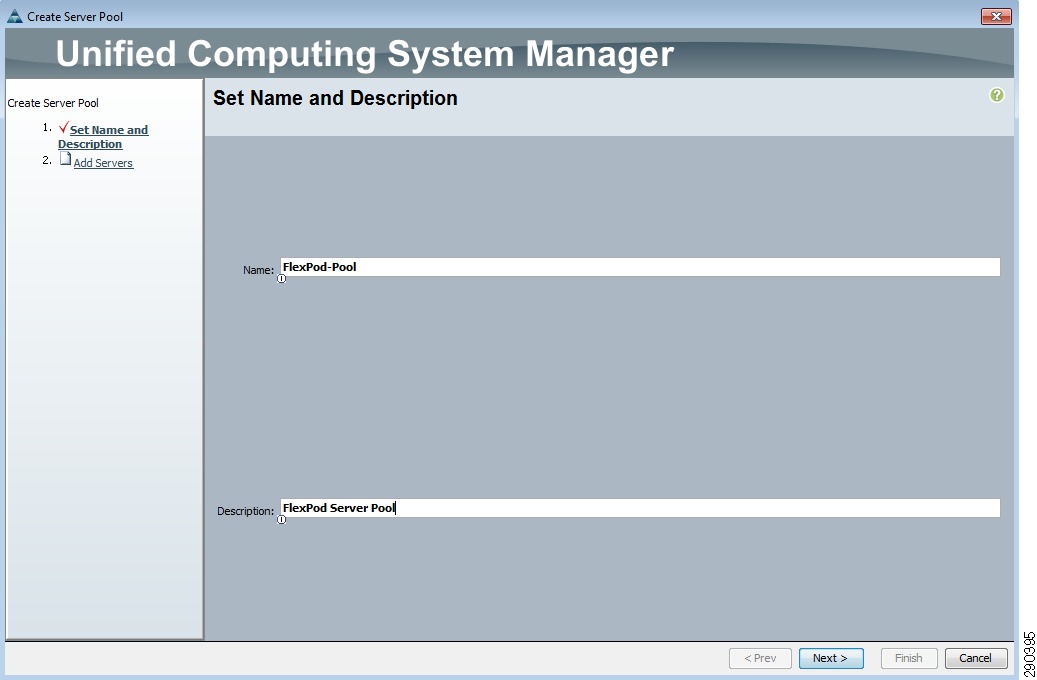

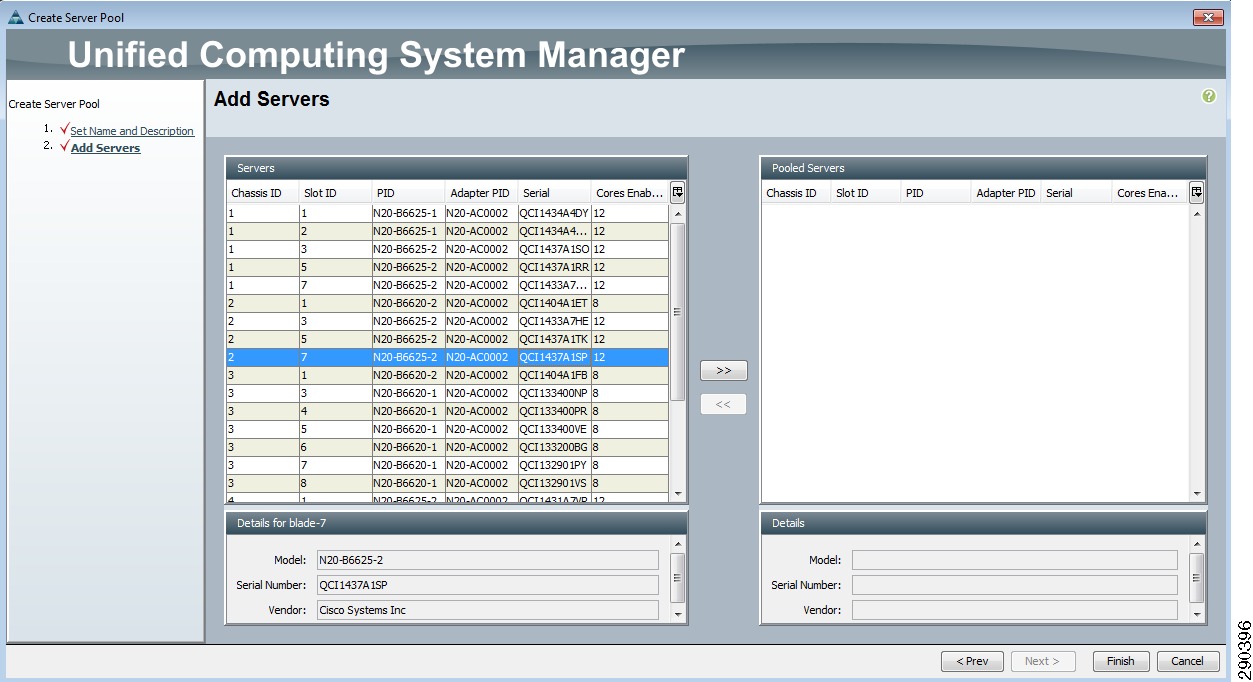

Create Server Pools

Navigate to the Servers tab in the Navigation pane and select Pools and the appropriate sub-organization. In the work pane, select Create Server Pool to launch the Server Pool wizard application. Complete the forms and migrate the appropriate physical server blade resources into the pool. Click Finish.

Figure 24 Create Server Pool Screen

Figure 25 Create Server Pool—Add Servers Screen

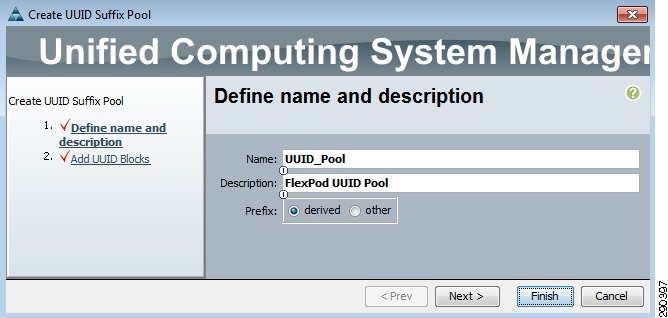

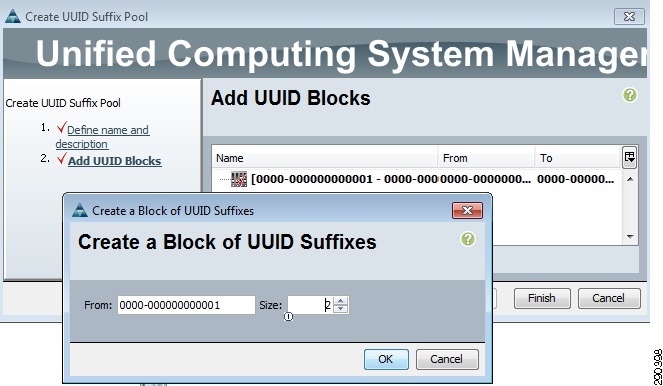

Create UUID Suffix Pools

Navigate to the Servers tab in the Navigation pane and select Pools and the appropriate sub-organization. In the work pane, select Create UUID Suffix Pool to launch the associated wizard application. Complete the associated forms and click Finish.

Figure 26 Create UUID Suffix Pool Screen 1

Figure 27 Create UUID Suffix Pool Screen 2

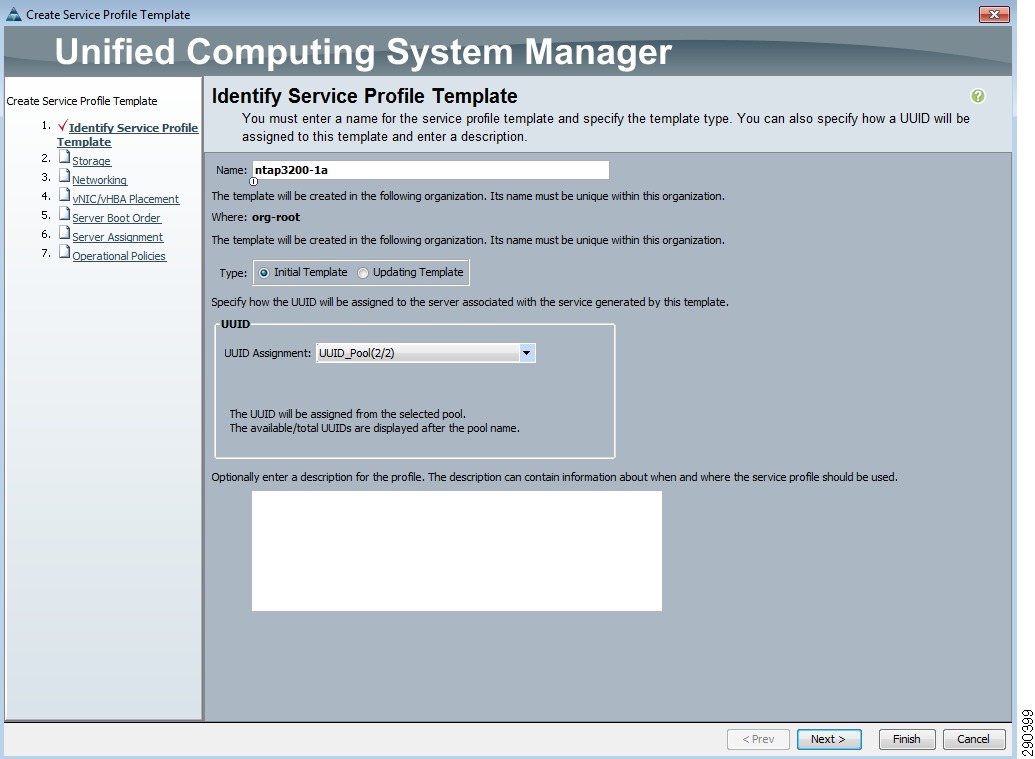

Create Service Profile Templates

In the Navigation pane, select the Servers tab and select the Service Profile Templates and the appropriate sub-organization under this item. In the work pane, select Create Service Profile Template; the Create Service Profile Template wizard is launched.

Provide a name for the service profile template; the name should reflect the NetApp controller used to boot service profiles based on this template. Select the UUID Suffix Pool previously defined. Click Next.

Figure 28 Create Service Profile Template—Identify Service Profile Template Screen

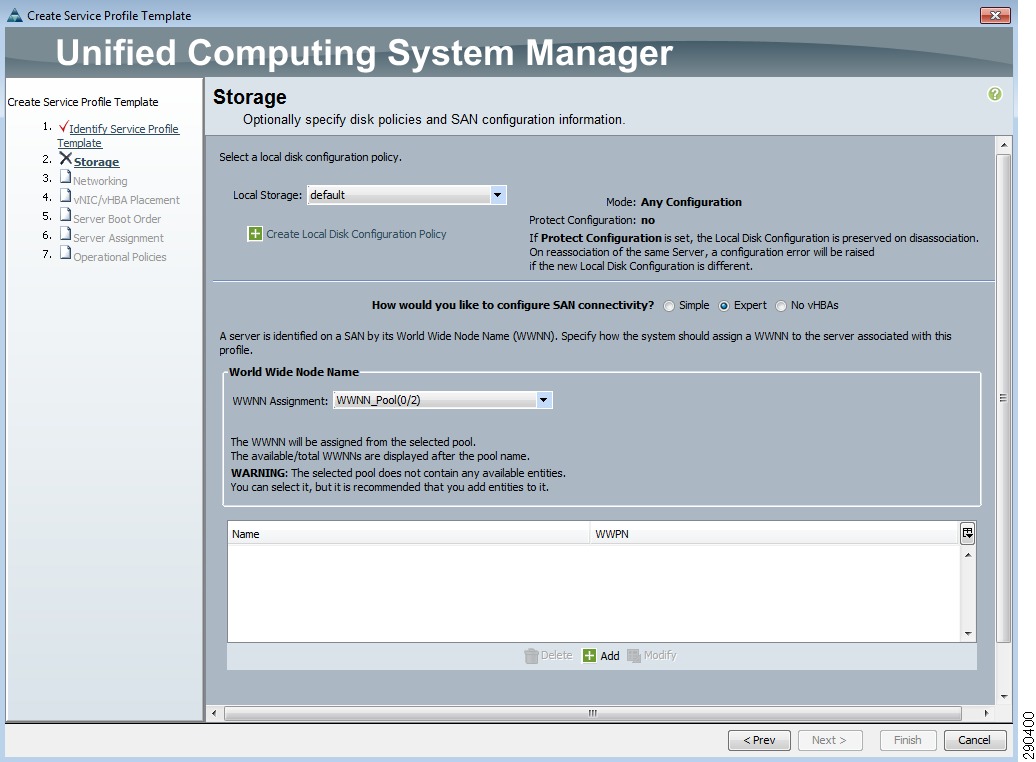

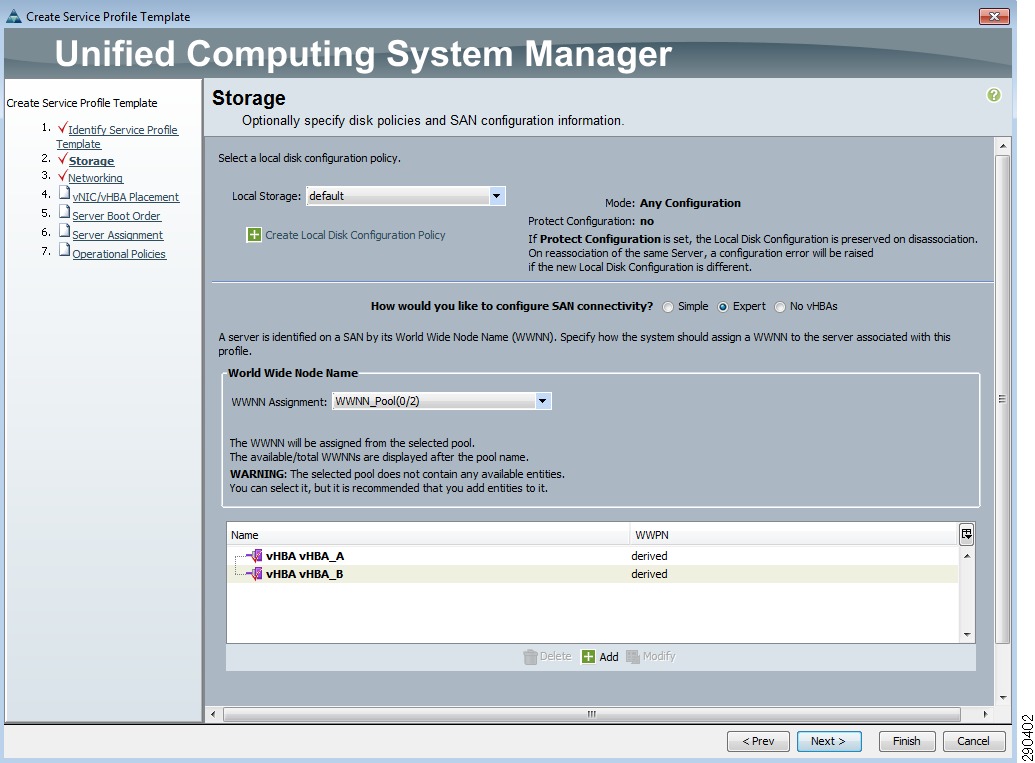

In the work pane, select default for Local Storage, select Expert SAN configuration mode, and select the WWNN Pool previously defined. Click Add.

Figure 29 Create Service Profile Template—Storage Screen 1

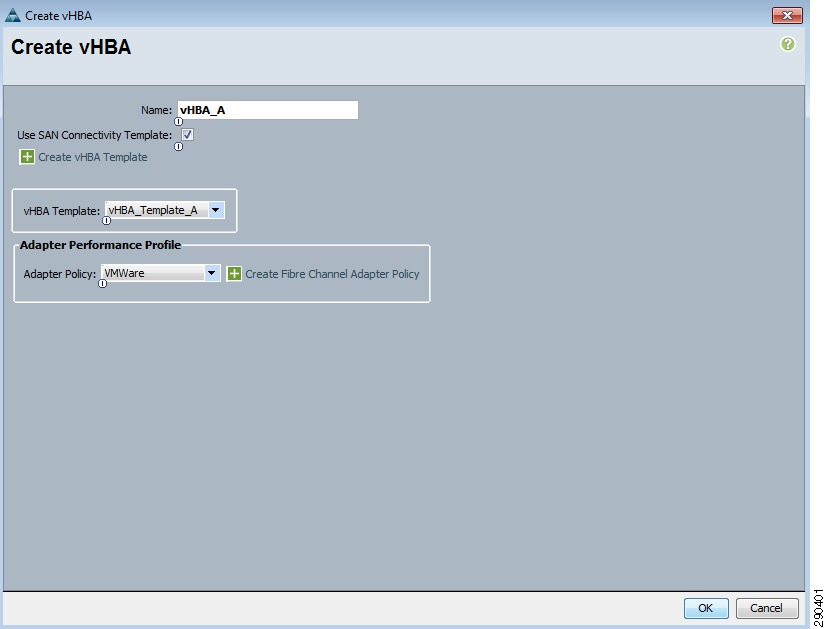

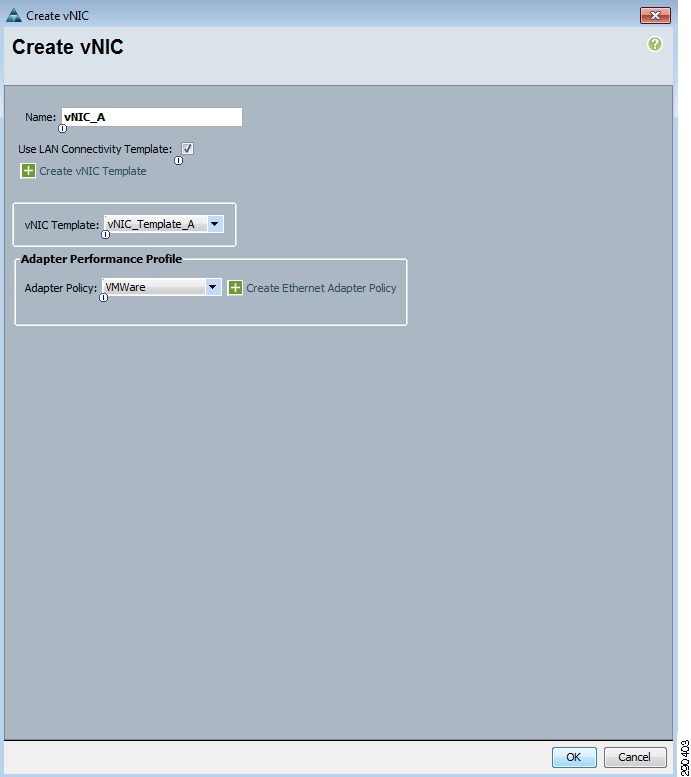

Type a name for the vHBA; it is considered best practice to include the fabric the vHBA is using. Select Use SAN Connectivity Template and then select the vHBA Template previously defined that is associated with the fabric. The Adapter Policy should be set to VMWare.

Figure 30 Create vHBA Screen

Repeat the previous step using similar naming standards, but reference the fabric B vHBA Template. After returning to the Create Service Profile Template Storage panel, click Next.

Figure 31 Create Service Profile Template—Storage Screen 2

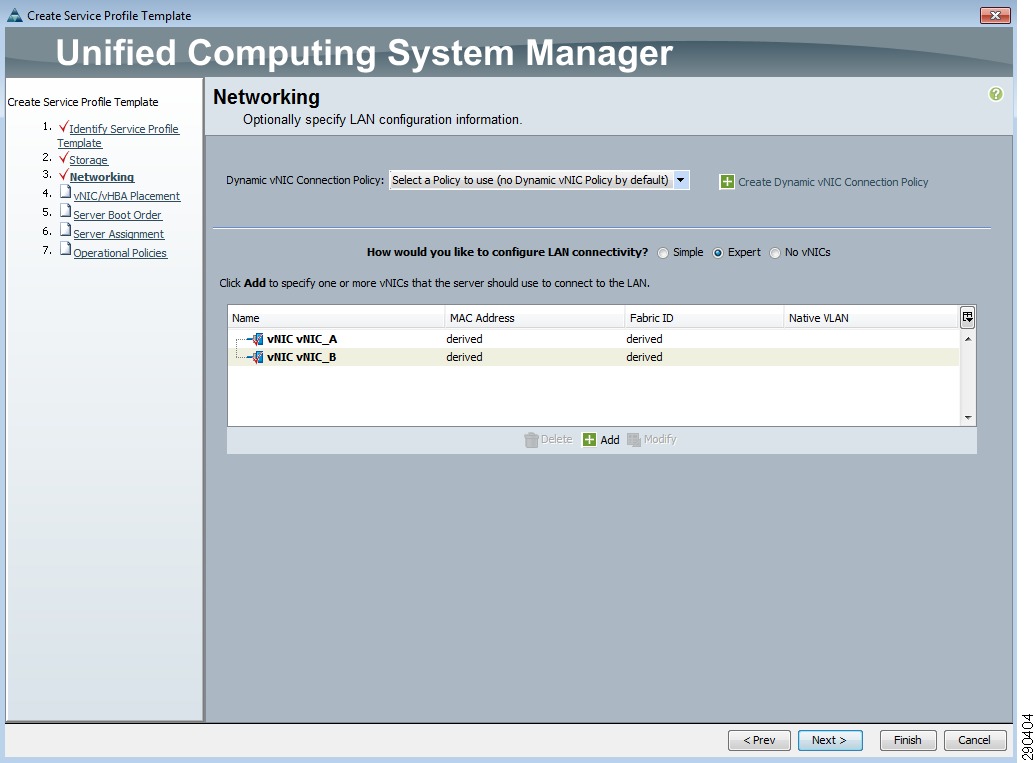

Type a name for the vNIC and select Use LAN Connectivity Template. Select the vNIC Template associated with fabric A that was previously created and select the VMWare adapter policy. Click Next to complete this phase. Repeat this process for the vNIC instantiation on fabric b.

Figure 32 Create vNIC Screen

Click Next after successfully completing the Network phase of the Service Profile Template creation process.

Figure 33 Create Service Profile Template—Networking Screen

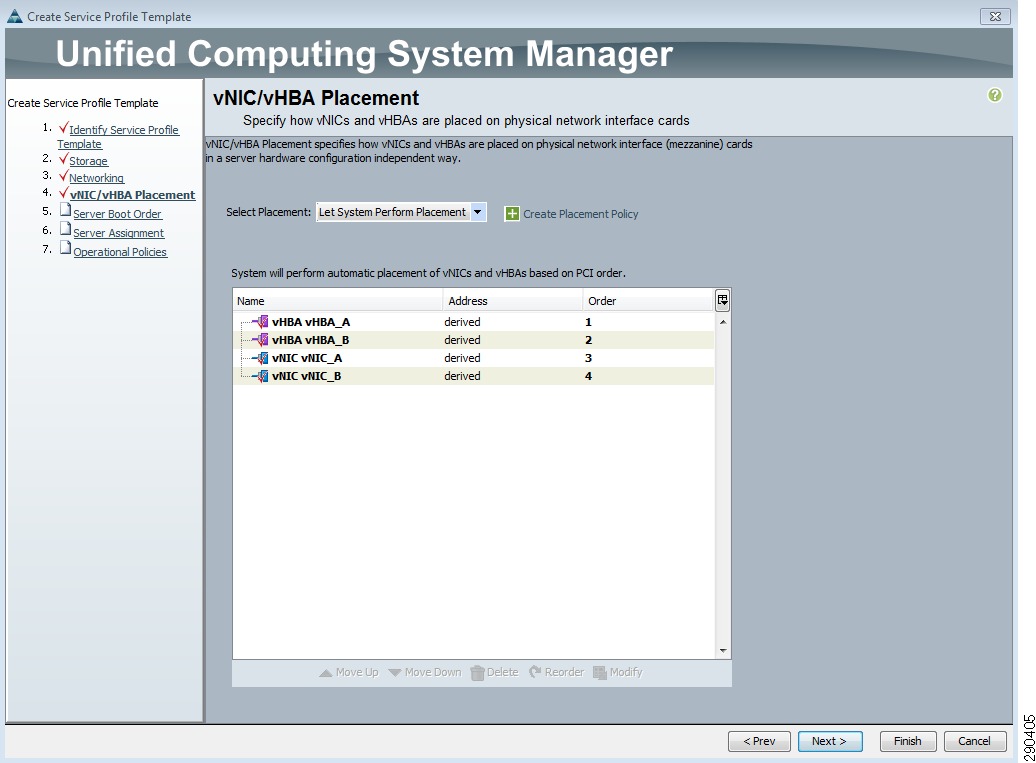

Click Next to accept the default placement by the system.

Figure 34 Create Service Profile Template—vNIC/vHBA Placement Screen

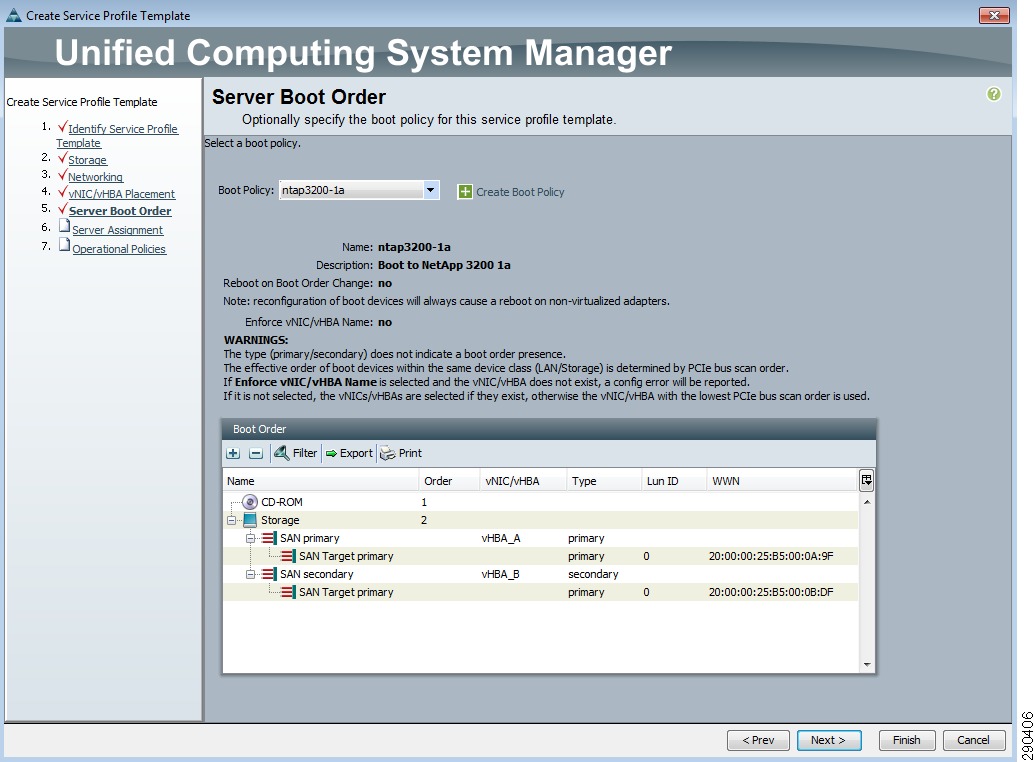

Select the previously defined boot policy for the filer supporting fabric A. Verify the order, adapters, and targets. Click Next.

Figure 35 Create Service Profile Template—Server Boot Order Screen

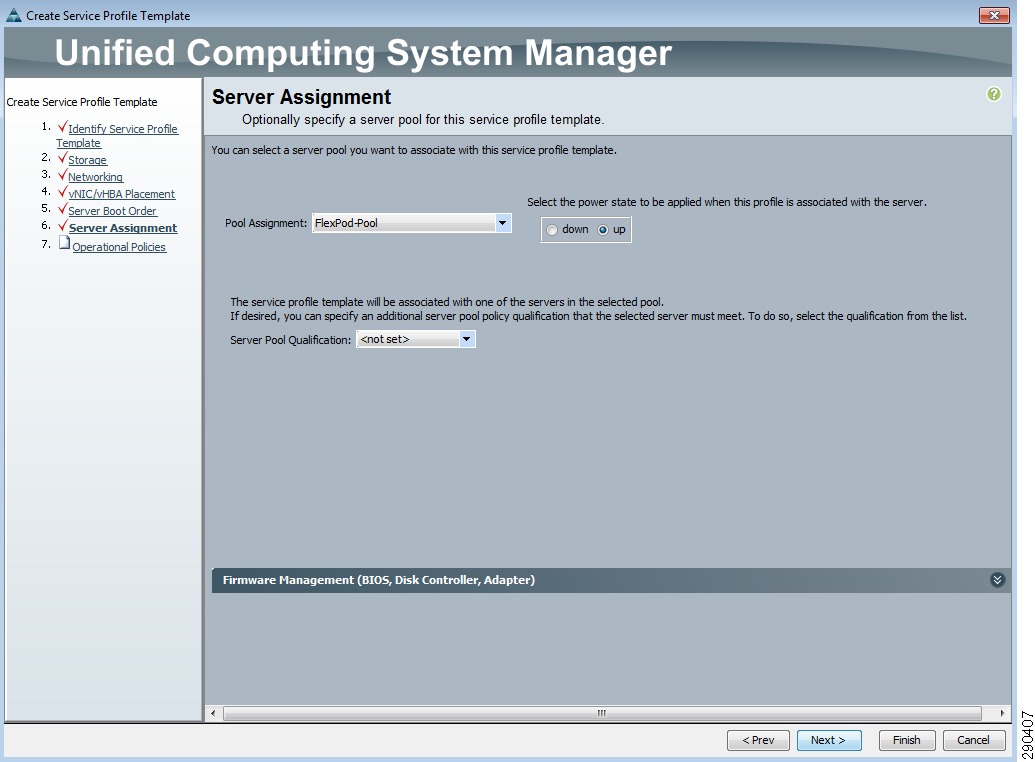

Select the previously-defined server pool associated with this sub-organization. Do not set Server Pool Qualifications. Click Next.

Figure 36 Service Profile Template—Server Assignment Screen

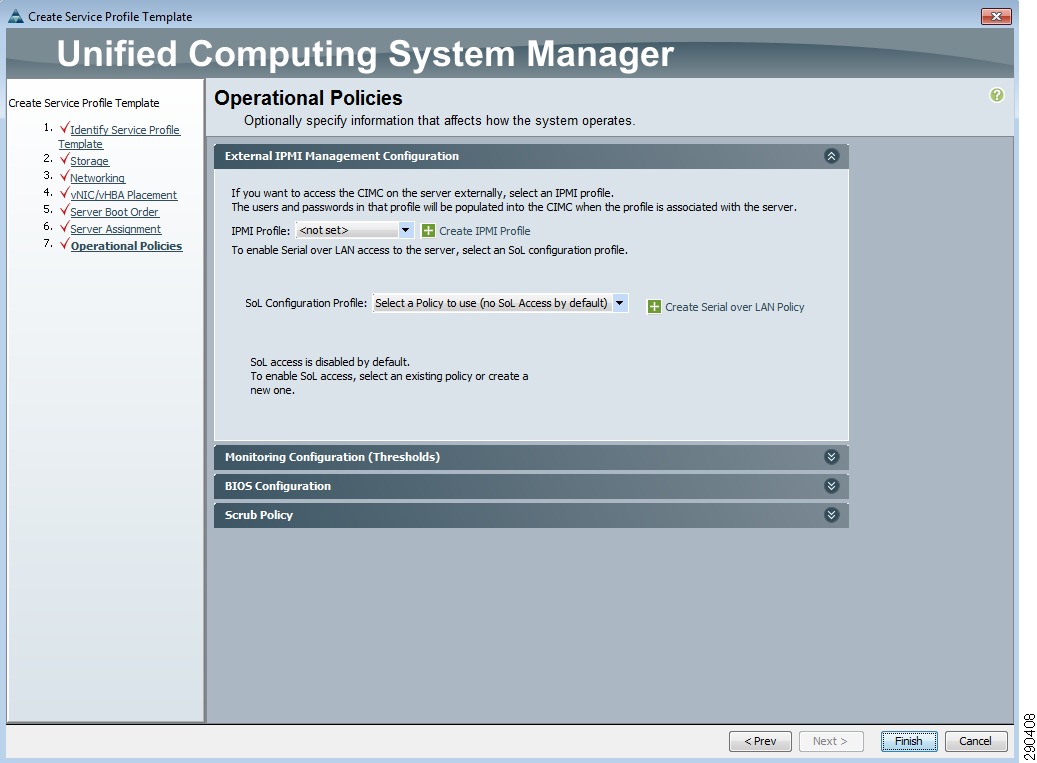

Keep the default operational policies and click Finish.

Figure 37 Service Profile Template—Operational Policies Screen

Create Service Profile

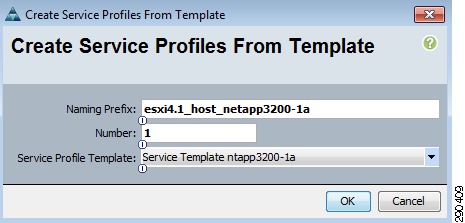

In the Navigation pane, select the Servers tab and the appropriate sub-organization. In the work pane, select Create Service Profiles From Template. The Create Service Profiles From Template wizard will launch. Name the service profile to reflect the operating system, instance, and storage target. Set the Number to 1 and use the service profile template previously configured.

Figure 38 Create Service Profiles From Template Screen

Add a Block of IP Addresses for KVM Access

In the Navigation pane, select Communication Management and then Management IP Pool to create a pool of KVM IP addresses. In the work pane, click Create Block of IP Addresses to launch a form-based wizard. Complete the form using the values related to your environment.

Figure 39 Create a Block of IP Addresses Screen
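Before entering the block in the form, it can help to confirm that every address falls inside the management subnet and does not collide with the gateway. The following Python sketch does this with the standard `ipaddress` module. The subnet 10.61.185.0/24 and gateway 10.61.185.1 come from the sample configurations in this appendix; the starting address and block size are hypothetical, and `kvm_block` is a helper written for this example.

```python
import ipaddress
from typing import List


def kvm_block(first: str, size: int, network: str, gateway: str) -> List[str]:
    """Return `size` consecutive addresses, validating each against the subnet."""
    net = ipaddress.ip_network(network)
    gw = ipaddress.ip_address(gateway)
    start = ipaddress.ip_address(first)
    block = []
    for offset in range(size):
        addr = start + offset
        if addr not in net:
            raise ValueError(f"{addr} is outside {net}")
        if addr in (net.network_address, net.broadcast_address):
            raise ValueError(f"{addr} is reserved in {net}")
        if addr == gw:
            raise ValueError(f"{addr} is the default gateway")
        block.append(str(addr))
    return block


if __name__ == "__main__":
    # Hypothetical block of four KVM addresses in the management subnet.
    for ip in kvm_block("10.61.185.200", 4, "10.61.185.0/24", "10.61.185.1"):
        print(ip)
```

Size the block to at least the number of blades that need KVM access, since each blade draws one address from the pool.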

References

• Cisco Nexus 1010 Virtual Services Appliance: http://www.cisco.com/en/US/products/ps10785/index.html

• Cisco Nexus 5548 Switch: http://www.cisco.com/en/US/products/ps11215/index.html

• Cisco Unified Computing System (UCS): http://www.cisco.com/en/US/netsol/ns944/index.html

• NetApp FAS3210 Storage Controller: http://now.netapp.com/NOW/knowledge/docs/hardware/hardware_index.shtml#Storage%20appliances%20and%20V-series%20systems/gFilers

• NetApp On The Web (NOW) Site: http://now.netapp.com

• VMware vSphere: http://www.vmware.com/products/vsphere/