
Table Of Contents

Cisco Virtual Workspace (VXI) Smart Solution 2.7 with Citrix XenDesktop 5.6

Cisco Virtual Workspace (VXI) Smart Solution Vision

Document Audience and Objectives

What is New in Cisco Virtual Workspace (VXI) Smart Solution 2.7

Cisco Virtual Workspace (VXI) Smart Solution Advantages

Cisco Virtual Workspace (VXI) Smart Solution Deployment Models

Virtualized Data Center Architecture

Compute Subsystem Design and Best Practices

Compute Subsystem Building Blocks

Connectivity for Cisco UCS Servers

Deploying Cisco UCS Fabric Interconnects

Server Topology Recommendations

Determining Cisco UCS BIOS Settings for Desktop Virtualization

Virtualization Design and Best Practices

Storage Design and Best Practices

Storage Capacity Best Practices

VLANs for the Virtualized Data Center

Virtualized Data Center Management

Cisco Network Deployment Models

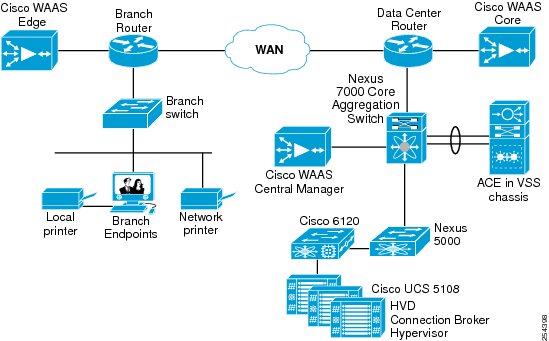

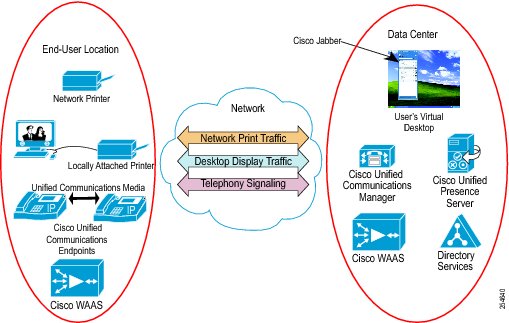

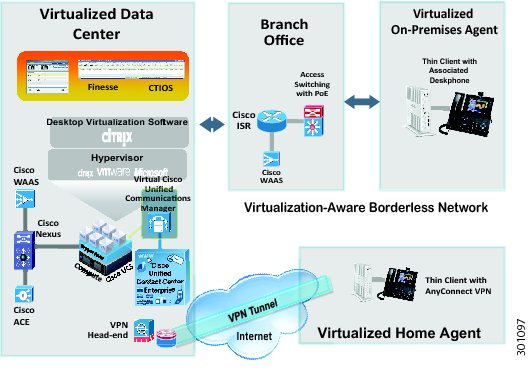

Cisco Wide Area Application Services

Endpoint Access Services in the Branch Office

Cisco Virtual Workspace Data Center Edge

Cisco Trustsec for Cisco Virtual Workspace (VXI) Smart Solution

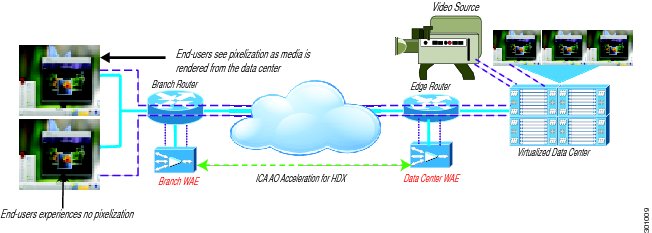

WAN Optimization for Virtual Workspace

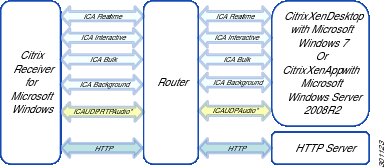

Citrix Multi-stream ICA (HDX Multi-Stream)

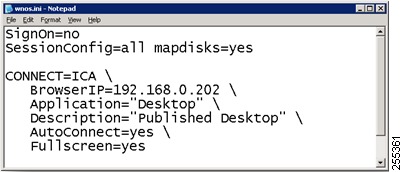

Configuring Cisco WAAS in a Cisco Virtual Workspace Environment

Configuring Traffic Interception for Cisco WAAS Using WCCP

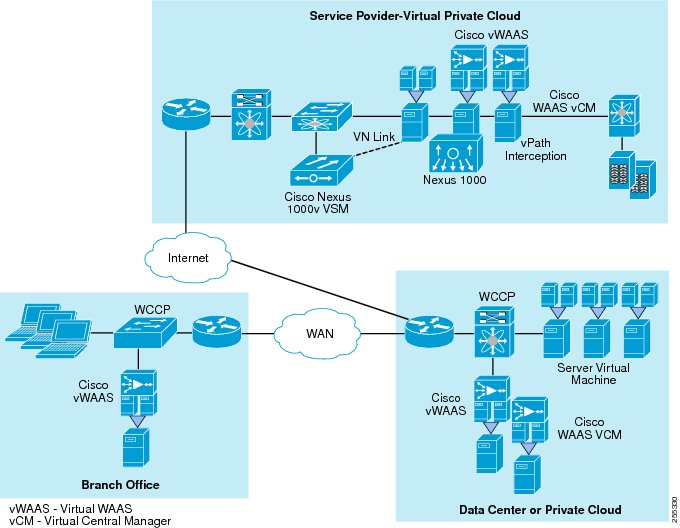

Virtual Machine-Based Network Optimization: Cisco vWAAS

WAN Stability and Path Optimization

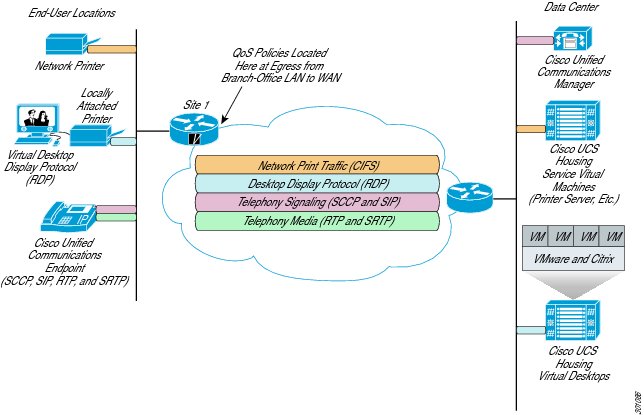

QoS for Multi-stream ICA (HDX Multi-Stream)

QoS for multi-stream ICA UDP Streams

Managing the Cisco Virtual Workspace Network

Cisco Virtual Workspace Clients

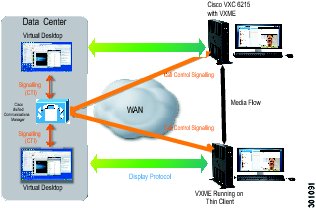

Cisco VXC 6215 Media Termination Capability

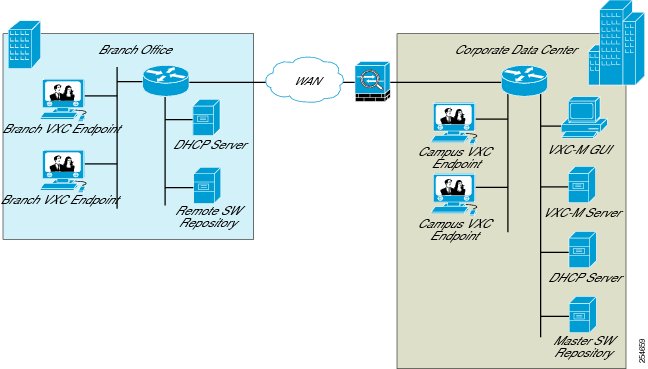

Design Considerations for the Cisco VXC Manager

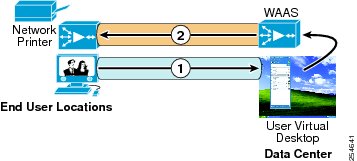

Desktop Virtualization Endpoint Printing using Network Printer

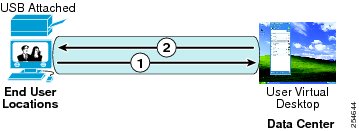

Desktop Virtualization Endpoint Access to USB-attached Peripherals (Storage or Printing)

Rich Media, Collaboration and User Experience

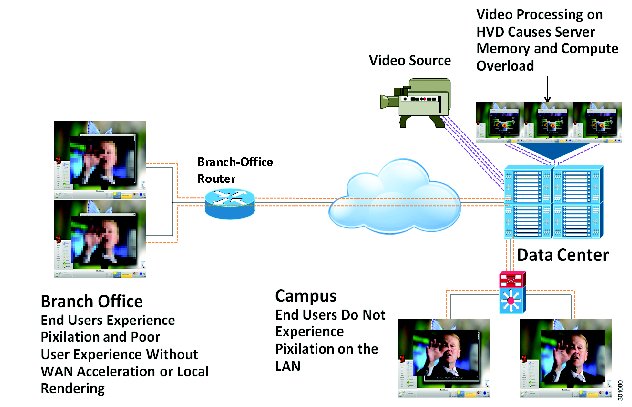

Rich Media and Collaboration in Traditional Virtual Desktop Infrastructure Environments

Rich Media and Collaboration in Cisco Virtual Workspace (VXI) Smart Solution

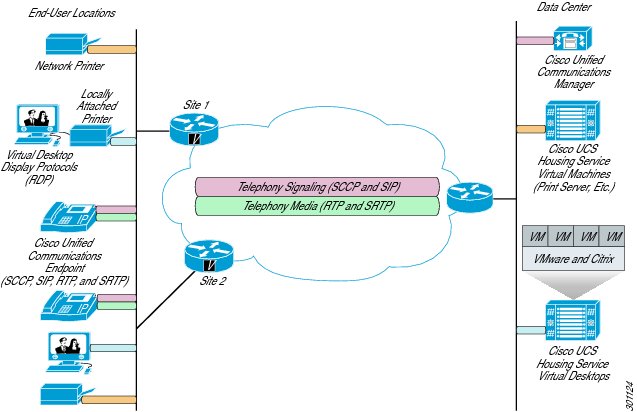

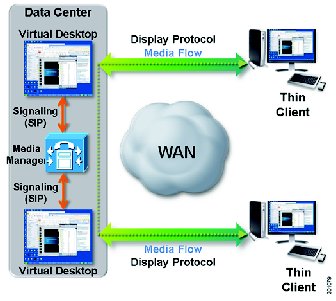

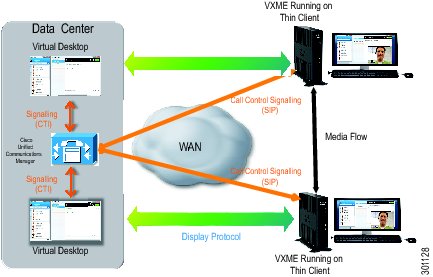

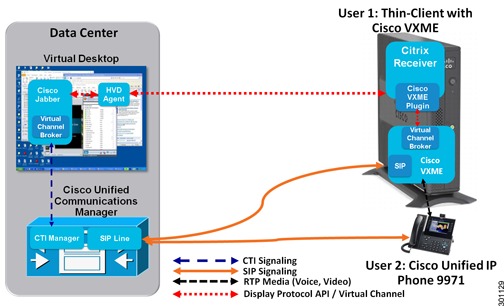

IP Telephony and Interactive Voice and Video Traffic Separation

Display Protocol Traffic Separation

Contact Center Applications in Cisco Virtual Workspace (VXI) Smart Solution

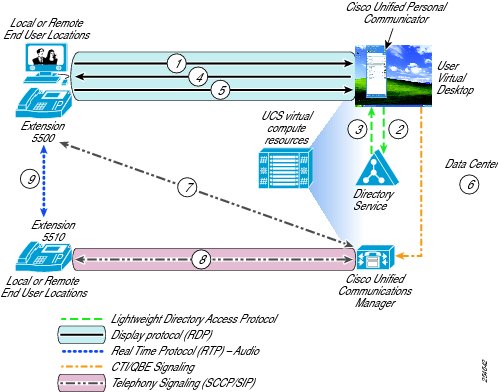

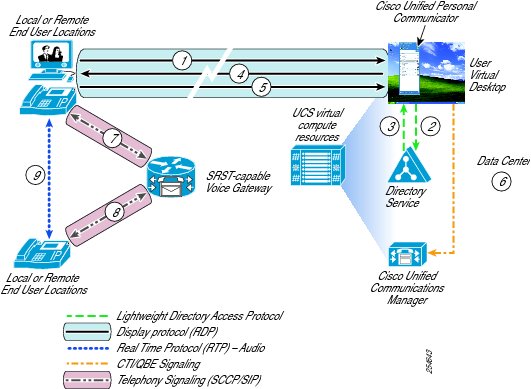

Cisco Unified Communications Applications

Unified Communications Endpoint Single Sign-On

Cisco Unified Communications Applications, HVD, and Cisco Unified SRST

Cisco Virtual Workspace (VXI) and BYOD Smart Solutions

Unified Communications Enabled Accessories

Securing Cisco Virtual Workspace

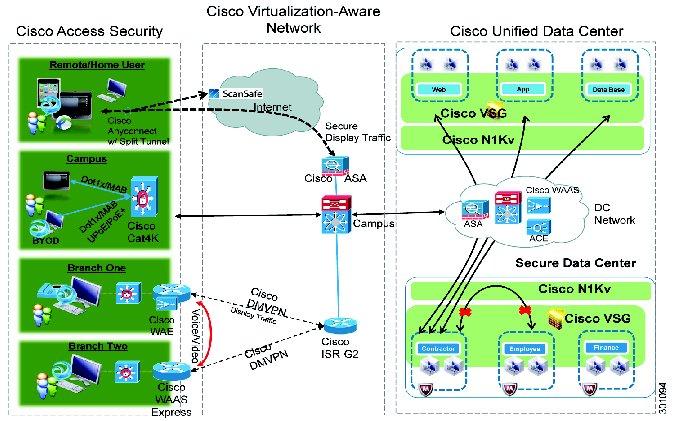

Cisco Virtual Workspace End-to-End Security Architecture

Secure Unified Workspace: Design and Best Practices

Secure Access to the Enterprise

Secure Borderless Networks: Design and Best Practices

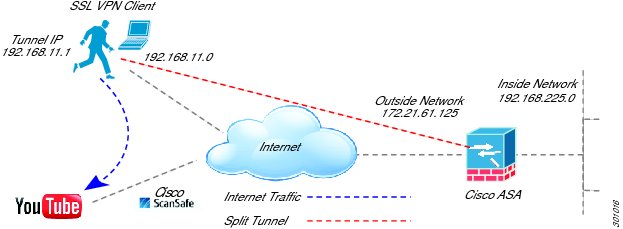

Secure Access for Fixed Teleworker and Home-User Environments

Secure Remote Access for Mobile Environments

Data Center Security - Design and Best Practices

User and Data Security for the Virtual Desktop

Securing the Virtual Desktop within the Data Center

Capacity Planning for Hosted Virtual Desktops: Computing and Storage

Resource Use in the Current Environment

Estimating Resource Requirements in a Virtualized Environment

Estimating Memory Requirements

Estimating Storage Requirements

Design Considerations: Computing

Power Management Considerations

High-Availability Considerations: Computing

Design Considerations: Storage

Thin Provisioning vs. Thick Provisioning

Storage Optimization Technologies

Cisco Knowledge Worker+ Profile

Validation Methodology and Results

Workload Profile: Cisco Knowledge Worker+

Cisco Nexus 1000V Series Switches

Cisco Virtual Security Gateway

Cisco Application Control Engine (ACE)

Cisco Adaptive Security Appliance (ASA)

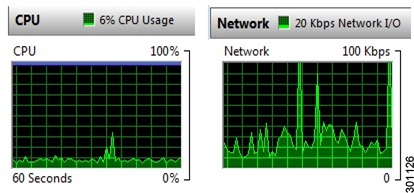

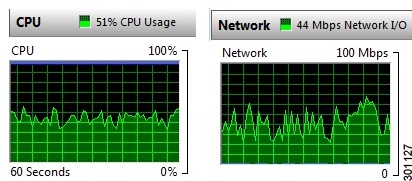

Cisco Wide Area Application Services (WAAS)

Network - WAN Capacity Planning

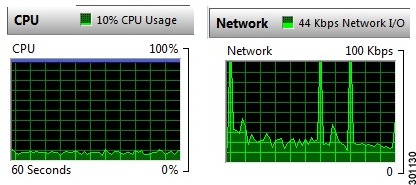

Network Characterization Results

Scaling Cisco Virtual Workspace using XenApp Hosted Shared Desktops

Design Considerations for Management Tools

Cisco Virtual Workspace (VXI) Smart Solution Management Tool Summary

Cisco Virtualization Experience Clients

Citrix XenDesktop 5.6 Management Suite

Guidelines for Using Citrix XenDesktop Management suite

Desktop Monitoring and Assessment

Cisco Virtual Workspace (VXI) Smart Solution 2.7 with Citrix XenDesktop 5.6

April 2, 2013


About Cisco Validated Design (CVD) Program

The CVD program consists of systems and solutions designed, tested, and documented to facilitate faster, more reliable, and more predictable customer deployments. For more information visit http://www.cisco.com/go/designzone.

ALL DESIGNS, SPECIFICATIONS, STATEMENTS, INFORMATION, AND RECOMMENDATIONS (COLLECTIVELY, "DESIGNS") IN THIS MANUAL ARE PRESENTED "AS IS," WITH ALL FAULTS. CISCO AND ITS SUPPLIERS DISCLAIM ALL WARRANTIES, INCLUDING, WITHOUT LIMITATION, THE WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THE DESIGNS, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES.

THE DESIGNS ARE SUBJECT TO CHANGE WITHOUT NOTICE. USERS ARE SOLELY RESPONSIBLE FOR THEIR APPLICATION OF THE DESIGNS. THE DESIGNS DO NOT CONSTITUTE THE TECHNICAL OR OTHER PROFESSIONAL ADVICE OF CISCO, ITS SUPPLIERS OR PARTNERS. USERS SHOULD CONSULT THEIR OWN TECHNICAL ADVISORS BEFORE IMPLEMENTING THE DESIGNS. RESULTS MAY VARY DEPENDING ON FACTORS NOT TESTED BY CISCO.

The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB's public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California.

Cisco and the Cisco Logo are trademarks of Cisco Systems, Inc. and/or its affiliates in the U.S. and other countries. A listing of Cisco's trademarks can be found at http://www.cisco.com/go/trademarks. Third party trademarks mentioned are the property of their respective owners. The use of the word partner does not imply a partnership relationship between Cisco and any other company. (1005R)

Any Internet Protocol (IP) addresses and phone numbers used in this document are not intended to be actual addresses and phone numbers. Any examples, command display output, network topology diagrams, and other figures included in the document are shown for illustrative purposes only. Any use of actual IP addresses or phone numbers in illustrative content is unintentional and coincidental.

Cisco Virtual Workspace (VXI) Smart Solution

© 2013 Cisco Systems, Inc. All rights reserved.

Introduction

Executive Summary

This Cisco® Validated Design Guide provides design considerations and guidelines for deploying an end-to-end Cisco Virtual Workspace (VXI) Smart Solution. Cisco Virtual Workspace (VXI) Smart Solution is a service-optimized desktop virtualization system that spans the Cisco Data Center, Cisco Borderless Networks, and Cisco Collaboration architectures to deliver superior collaboration and a high-quality multimedia user experience in a fully integrated, open, and validated desktop virtualization solution. This validated design enables organizations to accelerate the successful implementation of desktop and application virtualization with a unified collaborative workspace. The Cisco Virtual Workspace (VXI) Smart Solution helps reduce risks and costs, and delivers a superior user experience.

This guide discusses the solution as a whole, and describes subsystems in separate chapters. The chapters describe major functional groups such as data center, network, and endpoints, as well as pervasive system functions such as management and security.

Cisco Virtual Workspace (VXI) Smart Solution Vision

Cisco Virtual Workspace (VXI) Smart Solution delivers the new virtual workspace that unifies virtual desktops, voice and video, enabling IT to provide exceptionally flexible, secure workspace services with an uncompromised user experience.

Document Audience and Objectives

This guide is intended for use by IT engineers and architects considering the implementation of Cisco Virtual Workspace (VXI) Smart Solution, and anyone who wants to understand the design principles underlying the solution using Citrix XenDesktop and XenApp. This document provides design considerations and guidelines for deploying an end-to-end Cisco Virtual Workspace (VXI) Smart Solution. This solution places the user's computing environment in the data center, allowing it to be accessed through a variety of endpoints, integrating it with collaboration tools, and helping ensure a high-quality user experience.

Cisco Virtual Workspace (VXI) Smart Solution is based on many subsystems including the virtualized data center, the virtualization-aware network, and the virtualized workspace; for many customers, many of these subsystems are already deployed. This document focuses on design guidance specific to Cisco Virtual Workspace (VXI) Smart Solution needed to augment an existing infrastructure for desktop and application virtualization.

This document does not cover all the foundational technologies and reference designs for routing, switching, security, storage, and virtualization. For more information, see the Cisco Introduction to End to End Desktop Virtualization, which refers to detailed documents that discuss those technologies and reference designs. The Cisco Virtual Workspace (VXI) Smart Solution 2.7 As-Deployed Reference Guide presents detailed configurations for validated devices. It lists specific software and hardware versions and includes complete device configurations and diagrams showing the topology used in testing.

What is New in Cisco Virtual Workspace (VXI) Smart Solution 2.7

• Integration of Cisco UCS Storage Accelerator with 768 GB of disk space for desktop storage optimization

• UCS Central Manager: a manager for multiple instances of UCS Manager

• VMware Hypervisor ESXi 5.1 with Citrix XenDesktop 5.6 U1 and XenApp 6.5

• Cisco WAAS 5.1 with optimization for Citrix HDX Multi-Stream

• Cisco Virtual Experience Media Engine and Jabber within the HVD

• Native Jabber applications

• TrustSec 3.0 with Nexus 1000V

• Cisco BYOD Smart Solution alignment

Note

For detailed information on Citrix XenDesktop 5.6 and XenApp 6.5 capabilities, please refer to the appropriate Citrix product documentation at: http://support.citrix.com/proddocs/topic/xendesktop/xd-library-wrapper.html

http://support.citrix.com/proddocs/topic/xenapp/ps-library-wrapper.html

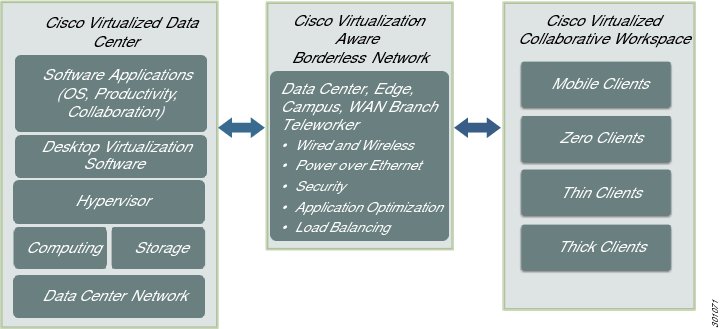

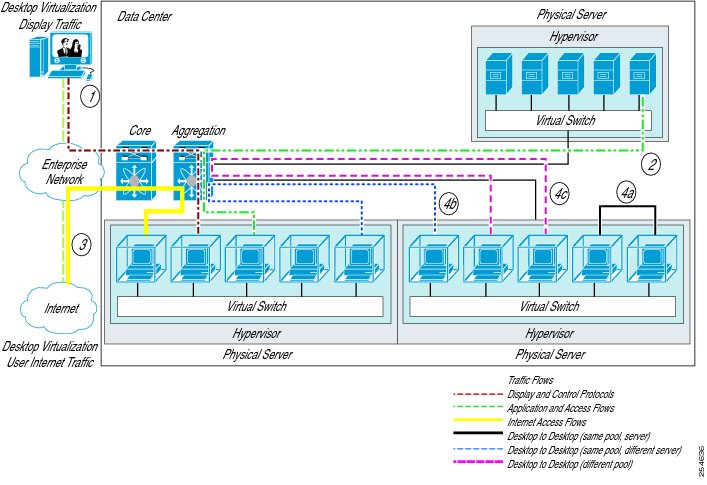

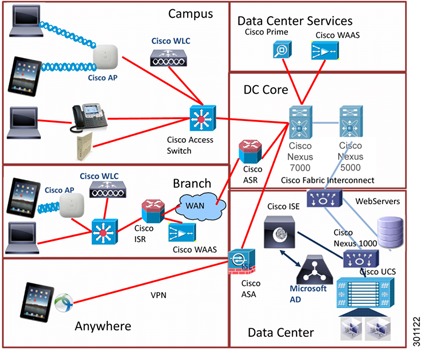

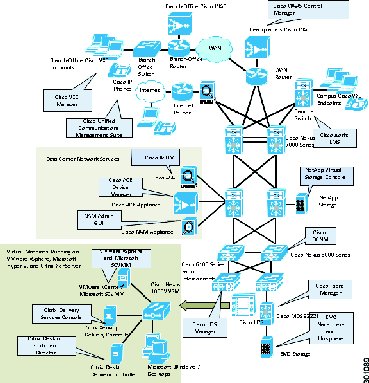

Overview

The Cisco Virtual Workspace (VXI) Smart Solution provides an optimized end-to-end infrastructure for desktop virtualization deployments. This system architecture consists of three fundamental building blocks: Cisco Virtualized Data Center, Virtualization-Aware Network, and Virtualized Collaborative Workspace (Figure 1).

The Cisco Virtualized Data Center is based on the Cisco Unified Data Center architecture, which creates data centers that are efficient, agile, and transformative. Cisco's Virtualized Data Center provides the computing, switching, storage, and virtualization capabilities needed to support a hosted virtual desktop solution from Citrix.

The Cisco Virtualization-Aware Network is based on the Cisco Borderless Networks architecture, which reduces operational complexity and provides the services needed to connect anyone, anywhere, on any device to their workspace. The Cisco Virtualization-Aware Network connects data centers, enterprise campuses, branch offices, and teleworkers to help ensure that traffic flowing between end users and their hosted desktops is transported securely, reliably, and efficiently. To achieve these goals, the network employs bandwidth optimization, load balancing, quality of service (QoS), security, and other technologies from Cisco's industry-leading portfolio.

The Cisco Virtualized Collaborative Workspace builds on the Cisco Collaboration architecture, extending the reach of the virtual desktop to a wide range of end points while supporting critical collaboration capabilities hosted in the data center. End points can be zero clients, thin clients, mobile devices or thick clients, and can include USB-based print and storage capabilities. The Cisco Virtualized Collaborative Workspace includes unique capabilities for integrating Cisco Unified Communications endpoints with hosted virtual desktops, including the control of Cisco IP Phones from virtual desktops.

Cisco Virtual Workspace (VXI) Smart Solution also supports management tools for both Cisco and ecosystem partner products, as well as a rich services portfolio that helps enterprises make the most of their virtualization investments.

Figure 1 Cisco Virtual Workspace (VXI) Smart Solution Architecture

Citrix Desktop and Application Virtualization for Cisco Virtual Workspace (VXI) Smart Solution

This Cisco Virtual Workspace (VXI) Smart Solution validated design is based on Citrix XenDesktop and XenApp virtualization solutions. Citrix XenDesktop is a desktop virtualization solution that delivers Windows desktops as an on-demand service to any user, anywhere. Citrix XenDesktop provides users with the flexibility to access their desktop on any device, anytime, with a high definition user experience. With XenDesktop, the IT department can manage single instances of each OS, application, and user profile and dynamically assemble these to increase business agility and greatly simplify desktop management. XenDesktop's open architecture enables customers to easily adopt desktop virtualization using any hypervisor, storage, or management infrastructure. This design guide validates the use of Citrix XenDesktop 5.6 for desktop virtualization.

Citrix XenDesktop includes a broad range of options for delivering virtualized Windows applications and desktops to the various types of users found within any given organization. These capabilities are grouped together under the term "FlexCast". XenDesktop FlexCast gives the customer the flexibility to choose the right type of virtualized desktop or application solution for each user type (Power, Mobile, Task, etc.) and use case found in the environment. This choice also helps reduce the overall cost of the solution, because it provides less expensive alternatives to the "one size fits all" approach found in VDI-only offerings. FlexCast includes:

• Hosted Virtual Desktops for users needing access to data center-hosted desktops

• Hosted Shared environment for delivering desktops or applications through session sharing in a single server OS instance (that is, XenApp)

• RemotePC capabilities for accessing physical machines on users' desks through Citrix HDX

• XenClient to provide power users with physical laptops running a type 1 hypervisor, with one or more VMs running on top that are under the control of IT

• Desktop Streaming capabilities to enable the on-demand delivery of an entire desktop image to a user's machine

This Cisco Virtual Workspace (VXI) Smart Solution validates the use of Hosted Virtual (HVD) and Hosted Shared (XenApp delivered) desktops. Visit the Citrix FlexCast site for more information on those options: http://flexcast.citrix.com

Citrix XenApp, which is included as part of the XenDesktop license, is an on-demand application delivery solution that enables Windows® applications to be virtualized, centralized, and instantly delivered as a service to users anywhere on any device.

Cisco Virtual Workspace (VXI) Smart Solution Advantages

The validated design delivers the following critical advantages:

• Unified Workspace: Cisco Virtual Workspace (VXI) Smart Solution supports a comprehensive ecosystem of endpoints that include unified communications and multimedia capabilities. Endpoints supported by this Smart Solution include industry-leading capabilities such as Power over Ethernet (PoE), hardware and software form factors, mobility support, and native unified communication media engines.

• Integration with Cisco Unified Communications: Users can connect to Hosted Virtual Desktops to make and receive voice or video calls from Cisco Jabber, which controls the user's desk phone or Virtual Experience Media Engine (VXME). The control plane is integrated into the virtual desktop. The media plane remains outside the virtual desktop display protocol, which enables the network to perform quality-of-service (QoS) functions such as packet prioritization, call admission control, and path optimization.

• Simplified Configuration: The Cisco Unified Computing System™ (Cisco UCS®) integrates the computing, virtualization, hypervisor, fabric-interconnect, and storage functions in the Cisco Virtualized Data Center. Cisco UCS Manager simplifies configuration and improves manageability for all aspects of the Cisco UCS domain. The Cisco Nexus® 1000V Series Switches provide switching, traffic isolation, and policy-insertion capabilities for virtualized environments, extending full networking capabilities to the virtual machine level.

• Network Optimization: Cisco Wide Area Application Services (Cisco WAAS) technologies can improve application response times by optimizing bandwidth consumption. For instance, remote print operations can be launched from a user's virtual desktop within the data center to a network printer at a remote branch office. Cisco WAAS can automatically recognize and compress the printing traffic, and spool the resulting print file at the remote location. This capability provides a superior user experience while improving WAN efficiency.

• Security: Network connectivity can be controlled at the access layer, using industry-standard IEEE 802.1X for port-level authentication. Cisco access switches can thus enforce a security policy at the physical device level and user level by interacting with the credentials-based access control integrated with directory services such as Microsoft Active Directory. Teleworkers, both mobile users with laptop computers and fixed users such as home-based teleworkers, can use Cisco's award-winning VPN technology to connect to the enterprise network across the Internet. The user's virtual desktop data is fully protected as it traverses the Internet in an encrypted VPN tunnel. This technology can also be deployed for traffic traversing a managed WAN.

• End-to-End Integration and Validation: Cisco Virtual Workspace (VXI) Smart Solution is an end-to-end system, integrating network and collaboration functions into the virtual desktop experience. The system has been designed and tested as an integrated whole, and mitigates the system integration investment typically required when deploying desktop virtualization and related technologies. The design guidelines and best practices provided in this document reduce the risks associated with desktop virtualization deployments.

• Services: Complementing Cisco solutions, Cisco Desktop Virtualization Services deliver rich, expert-based services end-to-end that can help you rapidly realize a desktop virtualization solution of your choice anywhere, with any device, over any medium. These services also help provide the right fit with your existing investments and align your IT and business strategies. Our services can help you plan, build, and manage a secure desktop virtualization solution. These include:

Plan

• Desktop Virtualization Strategy Service: Develop a comprehensive business case and solution strategy for desktop virtualization. Assess operational and mobility services readiness. Create an architecture that may include desktop virtualization, collaboration, and innovation.

• Desktop Virtualization Assessment Service: Conduct a comprehensive feasibility study and total cost of ownership (TCO) analysis for desktop virtualization.

• Desktop Virtualization Planning and Design Service: Design a reliable desktop virtualization infrastructure that fits your IT strategy and user requirements.

Build

• Desktop Virtualization Pre-Production Pilot Service: Validate specific technical requirements for your proposed desktop virtualization design prior to full production.

• Desktop Virtualization Implementation Service: Smoothly implement your desktop virtualization solution, including creating an implementation plan and migrating users.

Manage

• Desktop Virtualization Optimization Service: Understand the performance and utilization of your desktop environment and evolve your VDI or Virtual Workspace solution to assure operational excellence as you expand.

• Cisco Solution Support Service for Virtual Workspace: Rapidly resolve operational issues with solution support that provides a single point of contact.

Market Trends

Multiple trends are influencing the deployment of desktop virtualization: IT is seeking more virtualization and savings in the data center through cloud computing, IT is seeking better security and control in remote locations, and end users are seeking multimedia access from multiple devices and locations.

• Cloud computing and software as a service (SaaS): As businesses move toward cloud computing and SaaS-based applications, desktop virtualization offers a better way to use computing resources and enables a single endpoint to access multiple clouds, while providing the required security, domain isolation, and network integrity. The combined Cisco and Citrix solution provides customers with a path toward a Desktop Cloud deployment within their data center environment.

• Mobility: Mobile devices such as smartphones and tablets are increasingly being used to access work applications. Desktop virtualization enables users to access work applications without taxing computing resources at endpoints. High-speed connectivity available on smartphones allows a high-quality experience for end users who access their desktops on smartphones with keyboard and mouse attachments.

• Globalization: Off-shoring and expansion into new geographic locations is a prime desktop virtualization deployment scenario. Benefits include data security that facilitates compliance with privacy regulations for data crossing other countries, cost savings through centralized storage and computing resources, and increased productivity through access to data from anywhere at any time.

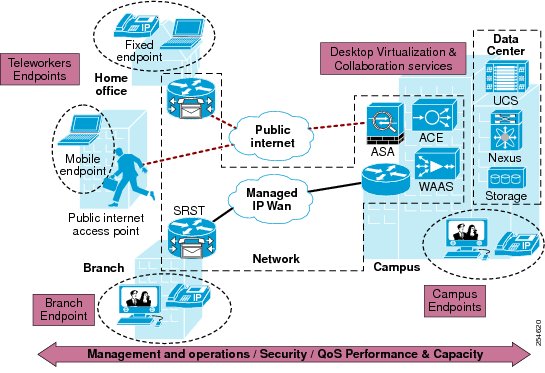

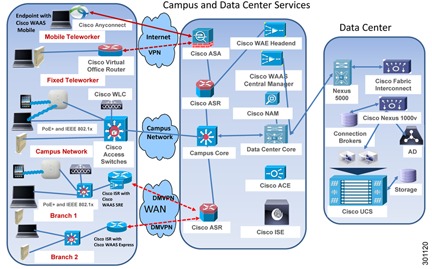

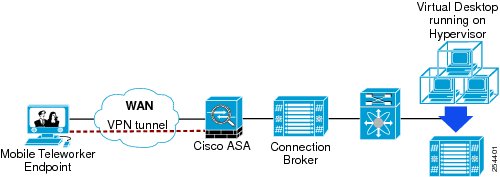

Cisco Virtual Workspace (VXI) Smart Solution Deployment Models

The Cisco Virtual Workspace (VXI) Smart Solution is fundamentally based on the centralization of the user's computing environment in the data center. The computing environment is virtualized and hosts the desktop virtualization (DV) and collaboration subsystems. Together, these subsystems provide the user community with desktop and telephony services delivered through the network to the various endpoints. These endpoints can be located on the enterprise campus, in branch or regional offices, home offices, or anywhere the user's mobile device has a network connection. Teleworkers are supported with both fixed and mobile clients. Figure 2 shows a typical deployment in which a wide range of clients access virtual desktops across the enterprise network. The term "enterprise network" refers to both the enterprise's own networking infrastructure, as well as its virtual private network (VPN) based extensions into the Internet, and other various forms of externally-managed commercial networks.

Figure 2 Top-Down View

Validation Goals and Summary

The collective sum of all components and sub-systems that make up the system is designed, deployed and tested in an integrated, end-to-end fashion that is reflective of a customer deployment. Design guidance provided throughout this document is based on this validation. Enterprises can leverage this guidance that includes best practices, caveats, scale, performance and other characterization data to plan and guide their own deployment. This information is primarily in this document but also in the following documents that serve as addendums to this document:

• Cisco Virtual Workspace (VXI) Smart Solution Performance and Capacity Validation Results Guide 2.6 for Citrix
http://www.cisco.com/en/US/docs/solutions/Enterprise/Data_Center/VXI/CVD/VXI_PCV_C.pdf

• Cisco Virtual Workspace (VXI) Smart Solution 2.7 As-Deployed Reference Guide

• Cisco Virtual Workspace (VXI) Smart Solution 2.7 Release Notes
http://www.cisco.com/en/US/customer/docs/solutions/Enterprise/Data_Center/VXI/VXI_RN_CPE.pdf

The following tables provide a high-level summary of the scalability, performance, and other characterization testing performed in Cisco's labs. The testing provides key data needed for sizing a deployment and spans important aspects of the end-to-end system, specifically compute, storage, collaboration applications, rich media, and network. Please refer to the Results Guide listed above for the actual results and data.

Compute and Storage Sizing

A series of scale and performance tests was run on the Cisco Virtual Workspace (VXI) Smart Solution to provide sizing data for common deployment profiles on different models of Cisco UCS servers. The tests cover both the compute and storage aspects of desktop virtualization. Table 1 below summarizes these validation efforts.

Note

All testing presented here is based on the Cisco Knowledge Worker+ (KW+) workload. See the Scaling and High Availability chapter for a definition of this workload.

Table 1 Validation Goals and Summary

| Objective | Server | Storage | Virtualization software | Desktop configuration |
|---|---|---|---|---|
| Scale and Performance characterization of Cisco UCS B200 M3 with Citrix XenApp Hosted Shared Desktops using a Cisco KW+ workload without antivirus | Cisco UCS B200 M3 with 384 GB of memory | NFS on NetApp FAS 3170 | Citrix XenApp 6.5 on ESXi 5.1 | Microsoft Windows 7 32-bit with 2 GB of memory and 24 GB disk; Persistent |
| Scale and Performance characterization of Cisco UCS B200 M3 server with Citrix XenDesktop (FlexPod) | Cisco UCS B200 M3 with 384 GB of memory | NFS on NetApp FAS 3170 | Citrix XenDesktop 5.6 FP1 (MCS) on ESXi 5.1 | Microsoft Windows 7 32-bit with 2 GB of memory and 24 GB disk; Persistent |
| Impact of deploying Citrix PVS write cache on local SSDs | Cisco UCS B200 M3 with 384 GB of memory | NFS on NetApp FAS 3170 | Citrix XenDesktop 5.6 FP1 (PVS 6.1) on ESXi 5.0 U1 | Microsoft Windows 7 32-bit with 1.5 GB of memory and 24 GB disk; Non-persistent |
| Scale and Performance characterization of Cisco UCS B250 M2 server (FlexPod) | Cisco UCS B250 M2 with 192 GB of memory | NFS on NetApp FAS 3170 | Citrix XenDesktop 5 on ESXi 5.0; ICA | Microsoft Windows 7 32-bit with 1.5 GB of memory and 24 GB disk; Persistent |
| Scale and Performance characterization of Cisco UCS B230 M2 server (FlexPod) | Cisco UCS B230 M2 with 256 GB of memory | NFS on NetApp FAS 3170 | Citrix XenDesktop 5.5 on ESXi 5.0; ICA | Microsoft Windows 7 32-bit with 1.5 GB of memory and 24 GB disk; Persistent |
| Scale and Performance characterization of Cisco UCS B250 M2 server: impact of Hyper-V Dynamic Memory | Cisco UCS B250 M2 with 192 GB of memory | NFS on NetApp FAS 3170 | Citrix XenDesktop 5.5 on Microsoft Hyper-V 2008 R2 SP1 | Microsoft Windows 7 32-bit with 1.5 GB of memory and 24 GB disk; Persistent |
| Storage Optimization (IOPS Offload) using Citrix IntelliCache | Cisco UCS B230 M2 with 256 GB of memory | NFS on NetApp FAS 3170 | Citrix XenDesktop 5.5 on XS 6.0* | Microsoft Windows 7 32-bit with 1.5 GB of memory and 24 GB disk; Persistent |

Note

Please refer to Citrix Product Documentation and Cisco Virtual Workspace (VXI) Smart Solution Release Notes for support-related information and caveats:

http://support.citrix.com/proddocs/topic/xendesktop-als/cds-installing-xd5fp1.html

http://www.cisco.com/en/US/docs/solutions/Enterprise/Data_Center/VXI/VXI_RN.pdf
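As a rough illustration of how the memory figures in Table 1 relate to desktop density, the following sketch computes a memory-only ceiling on desktops per server. It is not part of the validated design: the function name, the hypervisor reserve parameter, and the assumption that memory is not overcommitted are all illustrative. Validated densities also depend on CPU, storage, and workload, and are reported in the Results Guide.

```python
import math


def memory_bound_desktops(host_memory_gb, desktop_memory_gb, hypervisor_reserve_gb=0.0):
    """Return the largest number of desktops that fit in host memory.

    Assumes no memory overcommit. hypervisor_reserve_gb is a hypothetical
    allowance for the hypervisor itself; the source does not specify one.
    """
    usable_gb = host_memory_gb - hypervisor_reserve_gb
    if usable_gb <= 0 or desktop_memory_gb <= 0:
        raise ValueError("usable host memory and desktop memory must be positive")
    return math.floor(usable_gb / desktop_memory_gb)


# (host memory in GB, per-desktop memory in GB) as listed in Table 1.
table_1_configs = {
    "UCS B200 M3, 2 GB desktops": (384, 2.0),
    "UCS B200 M3, 1.5 GB desktops": (384, 1.5),
    "UCS B250 M2, 1.5 GB desktops": (192, 1.5),
    "UCS B230 M2, 1.5 GB desktops": (256, 1.5),
}

for name, (host_gb, desktop_gb) in table_1_configs.items():
    ceiling = memory_bound_desktops(host_gb, desktop_gb)
    print(f"{name}: at most {ceiling} desktops by memory alone")
```

The result is only an upper bound; any hypervisor reserve, or a CPU or storage bottleneck, lowers the practical figure.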

Application Characterization

The goal of application characterization is to measure the performance impact of an application when it runs as a standalone application in the workload across all user desktops deployed on a server. An enterprise planning to deploy a Cisco Collaboration application for its virtual desktop users can use the performance data to understand the incremental impact of that application as more users run it concurrently. The impact falls on server resources, and it can change the number of users that can be deployed on that server once the new application is rolled out. Table 2 summarizes the application characterization results.
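The relationship between an application's incremental resource use and server density can be sketched as follows. This is a minimal illustration only: the function name, the resource names, and every number are hypothetical placeholders, not measured results. Real planning should use the characterization data in the Results Guide.

```python
def supported_users(capacity, baseline_per_user, app_per_user=None):
    """Return how many users fit on a server across all listed resources.

    capacity, baseline_per_user, and app_per_user map a resource name to an
    integer amount. The most constrained resource determines the result.
    """
    app_per_user = app_per_user or {}
    limits = []
    for resource, total in capacity.items():
        per_user = baseline_per_user[resource] + app_per_user.get(resource, 0)
        limits.append(total // per_user)
    return min(limits)


# Hypothetical values chosen only to make the arithmetic easy to follow.
server = {"cpu_mhz": 40000, "memory_mb": 393216}
baseline = {"cpu_mhz": 250, "memory_mb": 2048}
collab_app = {"cpu_mhz": 150, "memory_mb": 256}

before = supported_users(server, baseline)
after = supported_users(server, baseline, collab_app)
print(f"Users per server before rollout: {before}")
print(f"Users per server after rollout:  {after}")
print(f"Reduction in density:            {before - after}")
```

In this made-up case CPU is the limiting resource both before and after the rollout, so the application's CPU increment alone determines the loss in density.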

Table 2 Application Characterization

Network Characterization

The goal here is to characterize different network aspects of desktop virtualization, including network services, optimizations, and other data needed for a successful deployment. A summary of these efforts, tested across a Cisco Virtual Workspace (VXI) Smart Solution, is shown in Table 3.

Table 3 Network Characterization

Virtualized Data Center

Overview

The Virtualized Data Center unifies processor and storage resources, hosts virtual machines and desktops, and provides a network switching fabric that interconnects these resources to the rest of the enterprise network. It is based on Cisco Unified Data Center architecture, which creates data centers that are efficient, agile, and transformative. It helps enable enterprises to consolidate data center infrastructure, reduce energy costs, improve workforce productivity, and ensure business continuity. The Cisco data center is based on three pillars of innovation:

• Cisco Unified Fabric: Cisco uses an innovative fabric-based architecture to unify computing, networking, storage, virtualization, and management into a single data center platform. This approach helps to ensure consistent delivery of highly available and secure IT services.

• Cisco Unified Computing: The Cisco Unified Computing System™ (Cisco UCS®) combines industry-standard blade and rack servers, networking, and management into a single, centrally managed, and massively scalable system. Infrastructure can be automatically provisioned to speed deployment of enterprise applications.

• Cisco Unified Management: To simplify data center management and support delivery of virtual desktops, Cisco offers centralized management of physical, virtual, and cloud-based resources. Cisco Unified Management solutions enable automatic provisioning, policy-based management, creation of an IT service catalog and self-service user portal, and pay-per-use tracking.

The Cisco Virtualized Data Center is highly scalable, and can be adopted in an incremental, granular fashion to help ensure a graceful evolution in response to enterprise needs. The virtualized data center is designed to provide optimum levels of performance, scalability, availability, and security. This section provides design guidance and best practices for achieving those goals in a deployment. The main topics include:

• Virtualized data center architecture

• Compute design and best practices

• Virtualization design and best practices

• Storage design and best practices

• Data center network design and best practices

• Management tools for the virtualized data center

What Is New In Release 2.7

Release 2.7 validates the Cisco UCS Storage Accelerator for use with desktop virtualization deployments. The Cisco UCS Storage Accelerator is a PCIe Flash-based caching solution that resides on the Cisco UCS B200 M3 blade server. It supports extremely high numbers of I/O requests and enables organizations to significantly reduce storage costs.

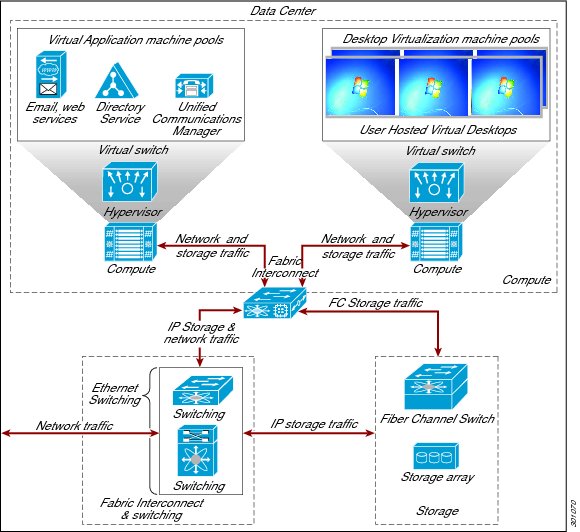

Virtualized Data Center Architecture

The Cisco Virtualized Data Center architecture consists of the computing, virtualization, storage, and networking subsystems needed to deliver an effective desktop virtualization solution. This structured approach to data center design provides outstanding flexibility as needs change, while helping ensure world-class performance, security, and resilience. The architecture tightly integrates Cisco and partner products, services, and best practices to provide a simplified, secure, and scalable solution (Figure 3).

Figure 3 Virtualized Data Center Architecture

Note

The solution also supports the Vblock™, VSPEX™ and FlexPod™ prepackaged infrastructure platforms. See http://www.vce.com/solutions/ for more information on the Vblock architecture. See www.cisco.com/go/vspex for more information on VSPEX. See http://www.netapp.com/us/technology/flexpod/ and http://www.cisco.com/ for more information on FlexPod.

Compute Subsystem Design and Best Practices

The Compute subsystem is based on Cisco UCS components. The system combines Cisco UCS B-Series Blade Servers and C-Series Rack Servers with networking and storage access in a single converged system that simplifies management and delivers greater cost efficiency and agility with increased visibility and control. The Cisco UCS B-Series and C-Series servers support Cisco Unified Fabric, which connects computing, LAN, and storage networks through a single medium. Cisco UCS servers are designed to reduce energy consumption, with highly efficient power supplies and Intel Xeon processors that match power consumption with workloads. Each server contains the processor, RAM, and I/O resources needed to support a virtual desktop environment. Cisco UCS servers are managed by Cisco UCS Manager, which implements role-based and policy-based management using service profiles and templates. This section discusses the following important elements of the data center design:

•

Compute subsystem building blocks

•

Server selection and validation

•

Connectivity for Cisco UCS servers

•

Fabric Interconnects

•

Server Topology

•

Server BIOS Settings

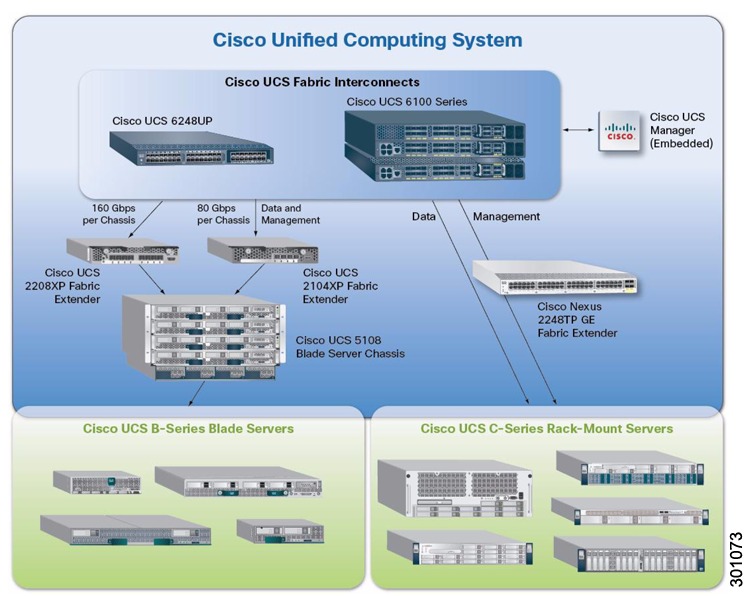

Compute Subsystem Building Blocks

•

Cisco UCS B-Series Blade Servers: Cisco UCS B-Series servers are based on Intel Xeon processors and offer exceptional memory capacity. Each blade server's front panel provides direct access to video connections, USB ports, and console connections. Cisco has validated several models of the Cisco UCS B-Series Blade Server, and in this phase it continues validating the Cisco UCS B200 M3. The blade servers connect to the chassis by means of converged network adapter (CNA) cards, such as the Cisco VIC 1240 and 1280. The UCS B200 M3 blade server now supports the Cisco UCS Storage Accelerator, an on-blade Flash-based caching solution that offloads IOPS processing and reduces storage costs. Refer to the Storage section of this chapter for more information.

•

Cisco UCS 5100 Series Blade Server Chassis: The chassis provides an enclosure for Cisco UCS B-Series Blade Servers. It is six rack units (6RU) high, can mount in an industry-standard 19-inch rack, and uses standard front-to-back cooling. Each chassis can accommodate up to eight half-width or four full-width Cisco UCS B-Series Blade Servers. The chassis also supports up to four single-phase, hot-swappable power supplies.

•

Cisco UCS C-Series Rack Servers: The Cisco UCS C-Series extends Cisco UCS innovations to an industry-standard rack-mount form factor. The Cisco UCS C-Series servers can operate both in standalone environments and as part of the Cisco Unified Computing System. The Cisco UCS C-Series servers can be deployed incrementally according to an organization's timing and budget. Cisco UCS C-Series servers interface with the Cisco Unified Computing System through network adapters such as the Cisco UCS P81E VIC. This card is a dual-port 10 Gigabit Ethernet PCI Express (PCIe) adapter that provides dynamically configurable virtual interfaces.

•

Cisco fabric extenders: The Cisco UCS 2100 and 2200 Series Fabric Extenders reside in the Cisco UCS 5100 Series Blade Server Chassis and provide 10 Gigabit Ethernet connections between servers and fabric interconnects. The fabric extenders function as distributed line cards and are managed as extensions of the fabric interconnects. The Cisco Nexus® 2000 Series Fabric Extenders connect rack-mount servers to the fabric interconnects. Like the Cisco UCS fabric extenders, the Cisco Nexus fabric extenders function as line cards for the parent switch.

•

Cisco UCS 6100 and 6200 Series Fabric Interconnects: Typically deployed in pairs to provide highly available network connectivity and management capabilities for Cisco UCS, the fabric interconnects offer line-rate, low-latency, lossless 10 Gigabit Ethernet, Fibre Channel over Ethernet (FCoE), and Fibre Channel functions. All chassis and servers attached to the fabric interconnects become part of a single, highly available management domain. The fabric interconnects provide connectivity between Cisco UCS and the rest of the data center network.

•

Cisco UCS Manager: Cisco UCS Manager provides embedded management of all software and hardware components of Cisco UCS across multiple chassis and rack-mount servers and thousands of virtual machines. It manages the system as a single entity through an intuitive GUI, a command-line interface (CLI), or an XML API. Cisco UCS Manager is embedded on a pair of Cisco UCS 6100 or 6200 Series Fabric Interconnects using a clustered, active-standby configuration for high availability. The manager participates not only in server provisioning, but also in device discovery, inventory, configuration, diagnostics, monitoring, fault detection, auditing, and statistics collection.
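As a rough planning aid, the chassis capacity stated above (up to eight half-width or four full-width blades per Cisco UCS 5100 Series chassis) can be turned into a chassis count. The following Python sketch is illustrative only. The function name is hypothetical, and the treatment of mixed configurations (one full-width blade taking the space of two half-width slots) is an assumption that is not stated in this guide.

```python
import math

# From the chassis description above: eight half-width slots per chassis.
HALF_WIDTH_SLOTS_PER_CHASSIS = 8


def chassis_needed(half_width_blades: int, full_width_blades: int = 0) -> int:
    """Return the minimum number of chassis for the given blade counts.

    Assumption (not from this guide): a full-width blade occupies the
    space of two half-width slots.
    """
    if half_width_blades < 0 or full_width_blades < 0:
        raise ValueError("blade counts must be non-negative")
    slots = half_width_blades + 2 * full_width_blades
    return math.ceil(slots / HALF_WIDTH_SLOTS_PER_CHASSIS)


if __name__ == "__main__":
    # Example inputs are arbitrary illustration values.
    print(chassis_needed(20))
    print(chassis_needed(4, 3))
```

The result is a lower bound on chassis count. Availability practices, such as spreading hosts across chassis, may call for more.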

Figure 4 Cisco Unified Computing System

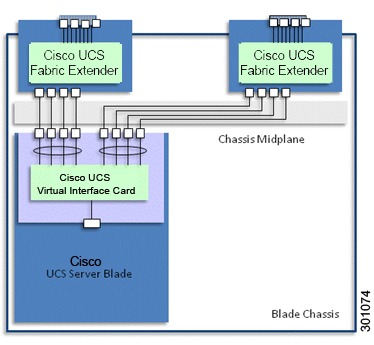

Connectivity for Cisco UCS Servers

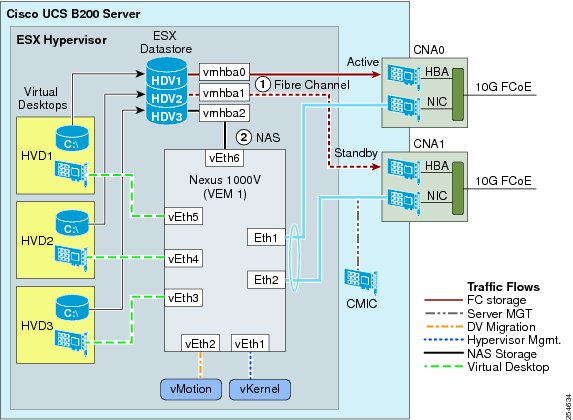

Cisco UCS B-Series Blade Servers connect to the chassis mid-plane by means of VICs installed as mezzanine cards (Figure 5). These cards provide virtual Ethernet network interface cards (NICs) or host bus adapters (HBAs) to the server operating system, and high-speed FCoE ports for connecting to Cisco UCS 2100 and 2200 Series Fabric Extenders located on the back of each chassis. The fabric extenders aggregate traffic from the interface cards and pass this traffic to an upstream pair of Cisco UCS 6100 or 6200 Series Fabric Interconnects (not shown).

Cisco UCS C-Series Rack Servers provide PCIe slots for network adapters (such as the VIC). These network adapters connect to Cisco Nexus 2200 Series Fabric Extenders, which in turn connect to the fabric interconnects. Alternatively, Cisco UCS C-Series servers can be connected directly to a switch such as the Cisco Nexus 5500 switching platform.

Cisco UCS VICs can support advanced technologies such as Cisco Data Center Virtual Machine Fabric Extender (VM-FEX), which implements Cisco VN-Link in hardware. Cisco Data Center VM-FEX collapses physical and virtual switching layers, reduces the number of management points, and extends the network all the way to the virtual machine. Cisco Data Center VM-FEX is especially appropriate in environments in which hardware-based performance is more critical than high virtual machine density or virtualized service availability.

Cisco validated the VIC as a standard adapter without Cisco Data Center VM-FEX, using Cisco's software-based implementation of its VN-Link technology, the Cisco Nexus 1000V Series switches. The Cisco Nexus distributed virtual switches scale to very high densities, and support virtualized services such as the Cisco Virtual Security Gateway (VSG), as well as virtual firewalling and load balancing. The Cisco Nexus 1000V Series is discussed in greater detail in the networking portion of this chapter.

Figure 5 Cisco UCS B-Series Blade Server Connectivity

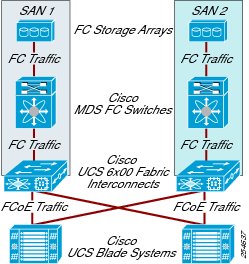

Deploying Cisco UCS Fabric Interconnects

The Cisco UCS 6100 and 6200 Series Fabric Interconnects are top-of-rack (ToR) controllers that provide network connectivity and management for Cisco UCS servers (Figure 6). Cisco fabric interconnects also function as parent switches for the fabric extenders, which act as distributed line cards. These fabric interconnects offer line-rate, low-latency, lossless 10 Gigabit Ethernet, Fibre Channel, and FCoE capabilities. The Cisco UCS 6100 and 6200 Series deliver high-performance unified fabric, centralized unified management with Cisco UCS Manager, and virtual machine-optimized services with support for Cisco VN-Link technology.

Fabric interconnects terminate FCoE traffic flows from the fabric extenders. Ethernet traffic is separated and forwarded to the data center switch fabric (composed of Cisco Nexus 5000 and 7000 Series Switches). Fibre Channel traffic is forwarded using Fibre Channel uplinks to the Fibre Channel SAN. The latest generation of fabric interconnects offers support for unified ports, which can be configured as either Ethernet or Fibre Channel ports.

Embedded Cisco UCS Manager facilitates management of the entire Cisco UCS domain. Using Cisco UCS Manager in combination with desktop virtualization management software, administrators can deploy virtual machines, perform software upgrades, migrate virtual machines between physical servers, and extend computing resource control over thousands of virtual desktops. For virtual environments, fabric interconnect design considerations include:

•

Paired deployment: By design the fabric interconnects are deployed in redundant pairs to provide uniform reliable access to both network and storage resources. A virtual IP address is created to link the two switches and provide a single point of management.

•

Operating mode: The fabric interconnect operates in either of two modes: switch mode or end-host mode. In switch mode, it acts as a normal Layer 2 switch with spanning tree enabled (which results in the blocking of upstream links). In end-host mode, server virtual NICs (vNICs) are pinned to a specific uplink port with no uplink switching (which obviates the need for spanning tree, and enables active-active uplinks). End-host mode, which is the default mode, provides the most predictable results for desktop virtualization environments, and therefore is preferred for deployments.

•

Static or dynamic pinning: Server ports can be dynamically pinned to a given uplink or Port Channel, or they can be statically pinned. With static pinning, specific pin groups are created and associated with an adapter. Static pinning provides deterministic traffic flows and enables traffic management if desired, and is the method used in testing.
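The difference between dynamic and static pinning can be illustrated with a small model. This Python sketch is not Cisco UCS Manager code. The function names, the vNIC and uplink labels, and the round-robin policy used to stand in for dynamic pinning are all illustrative assumptions.

```python
from typing import Dict, List


def pin_dynamic(vnics: List[str], uplinks: List[str]) -> Dict[str, str]:
    """Spread vNICs across uplinks round-robin.

    Round-robin is an assumed policy for illustration; the actual
    dynamic pinning behavior is decided by the fabric interconnect.
    """
    if not uplinks:
        raise ValueError("at least one uplink is required")
    return {vnic: uplinks[i % len(uplinks)] for i, vnic in enumerate(vnics)}


def pin_static(vnics: List[str], pin_groups: Dict[str, str]) -> Dict[str, str]:
    """Pin each vNIC to the uplink named in its pin group.

    Every vNIC must have an entry, so the traffic path is deterministic.
    """
    missing = [v for v in vnics if v not in pin_groups]
    if missing:
        raise KeyError(f"no pin group defined for: {missing}")
    return {vnic: pin_groups[vnic] for vnic in vnics}


if __name__ == "__main__":
    vnics = ["vnic-a", "vnic-b", "vnic-c"]  # hypothetical names
    print(pin_dynamic(vnics, ["uplink-1", "uplink-2"]))
    print(pin_static(vnics, {"vnic-a": "uplink-1",
                             "vnic-b": "uplink-1",
                             "vnic-c": "uplink-2"}))
```

In the static case the administrator chooses the mapping, which is what makes traffic flows predictable. In the dynamic case the mapping depends on the order and number of vNICs and uplinks.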

Figure 6 Cisco UCS 6x00 Fabric Interconnects

Server Topology Recommendations

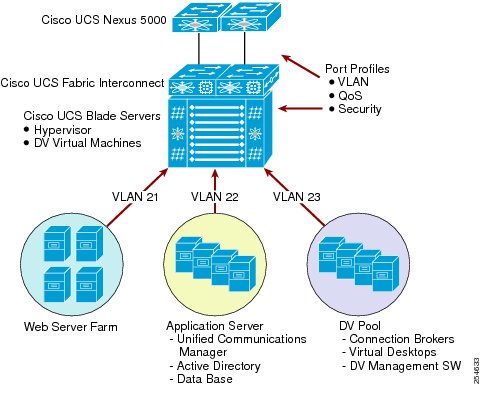

The validation process segregated infrastructure-oriented servers from servers that host virtual desktops. Infrastructure servers are those that provide critical services to a broad user population, such as application servers, license servers, unified communications, and Microsoft Active Directory. Grouping physical servers according to function and load helps ensure that resources are used more efficiently, and may protect infrastructure services from potential aggregate load effects and sudden surges. This approach also may insulate desktop users from demand peaks that might be associated with infrastructure servers. Figure 7 shows a deployment in which servers are grouped by function.

Figure 7 Grouping Servers by Function

Determining Cisco UCS BIOS Settings for Desktop Virtualization

Cisco UCS BIOS settings define basic server behavior during boot-up time. Some of these BIOS settings can directly affect system performance, especially in virtualized environments. Many of the most important parameters can be configured through Cisco UCS Manager. Table 4 shows the main BIOS parameters and the settings used for validation.

Table 4 Cisco UCS BIOS Settings

Note

Testing was conducted with Intel SpeedStep enabled. This feature saves power when a host is running a reduced load. Some organizations disable Intel SpeedStep for virtual machines that experience poor performance. This condition typically occurs on lightly loaded servers running Intel EX processors, when CPU use is lower. Rather than disabling Intel SpeedStep, the recommended solution is to disable the C1E halt state in the BIOS.

Virtualization Design and Best Practices

The virtualization subsystem includes the various solutions required to virtualize servers, desktops, users, and applications in the system. The virtualization subsystem includes the following elements:

•

Hypervisor: The hypervisor abstracts physical processor, memory, storage, and networking resources into virtual machines. Hypervisors enable virtual machines to be created, managed, optimized, and migrated.

•

Desktop virtualization: Desktops can be Hosted Virtual Desktops or Hosted Shared Desktops. With Hosted Virtual Desktops, applications and operating systems are hosted on a virtual desktop and accessed by remote users. Hosted Virtual Desktops can be persistent (tied to a particular user) or pooled and non-persistent. Hosted Shared Desktops allow multiple users to share the same underlying guest operating system. This design guide is based on Citrix XenDesktop for hosted virtual desktops, and Citrix XenApp for hosted shared desktops.

•

Application virtualization: Application virtualization permits packaging and on-demand delivery of applications to desktop users from a central repository.

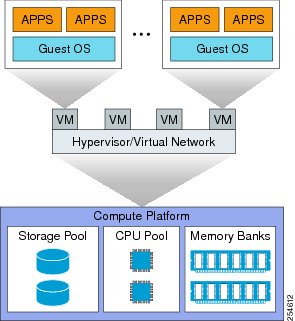

Hypervisor

The hypervisor abstracts the processor, memory, storage, and networking resources of its physical server into multiple virtual machines, and helps ensure that each virtual machine receives its appropriate share of these resources (Figure 8). The hypervisor is installed on each Cisco UCS server to allow virtualized desktops and servers to run as independent virtual machines. Cisco has validated the following hypervisors for use with Citrix XenDesktop and XenApp:

•

VMware vSphere

•

Citrix XenServer

•

Microsoft Hyper-V

Figure 8 Hypervisor

The hypervisor bridges the virtual and physical environments by interfacing virtual desktops with VICs on the blade servers. The VICs give the server (and thus the hypervisor) access to Ethernet NICs and Fibre Channel HBAs. Each card combines the Ethernet and Fibre Channel traffic on a unified fabric in the form of FCoE traffic. The hypervisor maps the physical NICs to vNICs as part of the virtual switch. Physical HBAs for Fibre Channel traffic are bridged directly to virtual HBAs (vHBAs).

Hypervisors include advanced tools for managing servers and associated virtual machines in high-density production environments. These tools enable IT administrators to identify overcommitted host machines, move virtual machines among pooled hosts, manage power-up sequences, consolidate active virtual machines on the fewest possible hosts, and more.

Many factors influence the successful deployment of a hypervisor, and a detailed discussion is beyond the scope of this document. Table 5 lists the main questions to consider when designing a hypervisor implementation for a Cisco environment.

Table 5 Main Questions When Designing a Hypervisor Installation

Virtual Machine topology definition

Physical and virtual resources can be grouped according to functions.

Management and infrastructure server applications are installed on dedicated Cisco UCS blades. Dedicated database servers host the VMware vCenter and VMware View databases. The VMware vSphere Enterprise Plus License is deployed on the VMware vCenter server.

Virtual resource provisioning

Virtualization enables a certain degree of over-provisioning, but too much over-provisioning can have diminishing returns. For example, provisioning a virtual machine with more virtual CPUs (vCPUs) than it can use will increase resource consumption and may actually reduce performance under some circumstances.

Virtual machines running a Microsoft Windows 7 or XP guest OS are provisioned with 1.5 to 2.0 GB RAM (with memory over-commit off) and 20 GB of disk space. See the Cisco Virtual Workspace (VXI) Smart Solution 2.7 Performance and Capacity Results Guide for Citrix for sizing virtual resource requirements.

Storage types

Storage can be physically located on the Cisco UCS blade server or network attached. To support virtual machine migration, clustering, distributed resource scheduling and other advanced virtualization features, shared storage is typically deployed. User preferences vary widely, and some deployments may use hybrid shared and local solutions.

The system includes support for shared storage solutions (network-attached storage [NAS] and SAN) from multiple ecosystem partners. Cisco has also validated hybrid storage solutions that blend both local and centralized share resources.

VM boot source

Booting from centralized shared storage allows for off-the-shelf server replacements as well as centralized configuration management of the hypervisor software.

The Cisco validated design is configured to boot from SAN, as recommended by the hypervisor vendor guidelines.

Disable unused physical devices

Hypervisor vendors typically recommend that unused devices such as COM, USB, and LPT1 ports, and optical drives be disabled. These devices consume resources even when not used. Some devices are polled even though inactive, or reserve blocks of memory that will not be used.

Cisco recommends disabling unused ports and drives so that these do not needlessly consume resources.

Advanced hypervisor configuration parameters (VMware)

Hypervisors may provide the capability to fine-tune CPU and memory resources in virtual machines, which can increase virtual machine density per server. In early versions of VMware vSphere, for example, tuning of CPU fairness algorithms and memory reclamation features was a manual process, performed only with the guidance of technical support. Newer (VMware vSphere 5.0 and later) versions have default values optimized for large deployments.

Cisco recommends deploying the most recent versions of these hypervisors to use optimized default settings.

Advanced hypervisor configuration parameters (Citrix)

Hypervisors increasingly provide caching features that cache temporary files and non-persistent virtual desktops on server local disks. This caching capability enables some virtual machine read and write operations to be executed on lower cost server storage, which conserves critical shared storage resources.

Citrix IntelliCache, along with Citrix Machine Creation Services (MCS) for thin provisioning, may reduce the load on centralized NAS and/or SAN resources.

Advanced hypervisor configuration parameters (Microsoft)

Microsoft Hyper-V provides a dynamic memory management capability that balances memory use among running virtual machines.

With Microsoft Hyper-V, virtual machines can be configured with a memory range, rather than just a fixed memory allocation. Administrators can set memory priorities for virtual machines so that memory can be removed from low priority machines and reallocated to higher priority machines. This capability can increase the number of virtual machines that can be supported on each server.

Compatibility issues between VMware ESXi 5 Update 1 and Citrix XenDesktop (5.0 SP1, 5.5, 5.6, 5.6 FP1)

Users should be aware of issues and workarounds involving these combinations.

System synchronization

System-wide synchronization is critical to help ensure the accuracy of all logged information.

Cisco recommends synchronizing the hypervisor clock to an external Network Time Protocol (NTP) server, and then synchronizing the virtual desktop operating system clocks to the hypervisor clock.

High availability

Hosts can be clustered to provide fault tolerance and high availability.

A system best practice is to distribute hosts across multiple Cisco UCS chassis to provide better availability in the event of a chassis failure; at the same time, all hosts are grouped in a single high-availability cluster.
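The virtual resource provisioning figures in Table 5 (1.5 to 2.0 GB of RAM per Windows desktop with memory over-commit off, and 20 GB of disk) lend themselves to a first-pass density estimate. The Python sketch below is only a back-of-the-envelope aid. The host memory size and hypervisor reserve used in the example are hypothetical values, and actual sizing should come from the Performance and Capacity Results Guide.

```python
import math


def desktops_per_host(host_ram_gb: float, vm_ram_gb: float,
                      hypervisor_reserve_gb: float = 0.0) -> int:
    """Desktops that fit in host RAM when memory over-commit is off."""
    if vm_ram_gb <= 0:
        raise ValueError("vm_ram_gb must be positive")
    usable = host_ram_gb - hypervisor_reserve_gb
    return max(0, math.floor(usable / vm_ram_gb))


def disk_required_gb(desktops: int, disk_per_vm_gb: float = 20.0) -> float:
    """Total disk if every desktop is fully provisioned.

    Ignores thin provisioning and caching, which would lower the figure.
    """
    return desktops * disk_per_vm_gb


if __name__ == "__main__":
    # Hypothetical host: 256 GB RAM with 16 GB held back for the hypervisor.
    for vm_ram in (1.5, 2.0):
        count = desktops_per_host(256, vm_ram, hypervisor_reserve_gb=16)
        print(vm_ram, count, disk_required_gb(count))
```

Memory is only one constraint. CPU, storage IOPS, and network capacity can each cap density below the figure this estimate produces.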

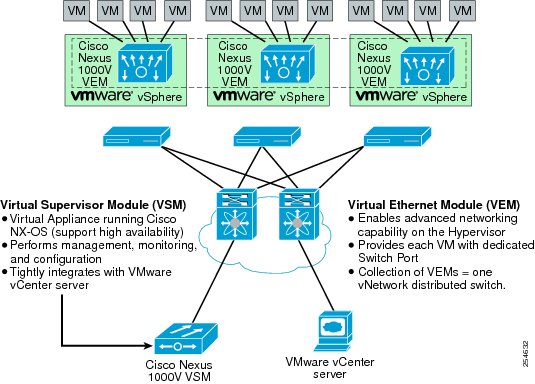

Virtual Switching for Hypervisors: Cisco Nexus 1000V Switch

The Cisco Nexus 1000V Switch is a virtual machine access switch that runs inside a hypervisor. The switch uses Cisco VN-Link server virtualization technology to deliver policy-based virtual machine connectivity, mobile virtual machine security and network policy, and a nondisruptive operation model. The Cisco Nexus 1000V provides administrators with a consistent networking feature set and provisioning process from the virtual machine access layer to the data center network core. Virtual servers can use the same network configuration, security policy, diagnostic tools, and operation models as their physical server counterparts.

A single Cisco Nexus 1000V Switch can encompass several physical servers. Targeted port profiles can be created for the specific requirements associated with each type of user and virtual desktop. Cisco Nexus 1000V profiles contain information such as VLAN assignment, quality-of-service (QoS) policies, and security access control lists (ACLs). The port profile is linked to the virtual machine profile, so that if the hypervisor migrates a particular virtual desktop, the associated profile also migrates.

Troubleshooting of connectivity problems is enhanced through the built-in Cisco Switched Port Analyzer (SPAN). Increased security is implemented by the use of several additional features such as VLANs, private VLANs, port security, and security ACLs. The Cisco Nexus 1000V also provides a foundation for other virtual networking solutions such as the Cisco VSG and Cisco Virtual Wide Area Application Services (vWAAS). The Cisco Nexus 1000V is currently supported on VMware vSphere hypervisors with Enterprise Plus licenses (Figure 9).

Figure 9 VMware ESX/ESXi and Cisco Nexus 1000V Series Integration

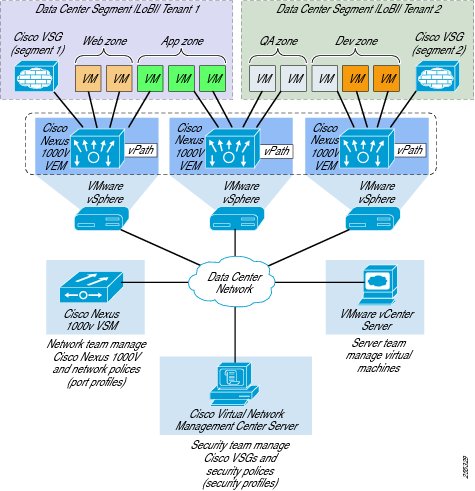

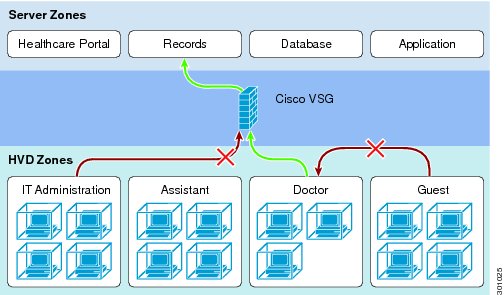

Virtual Machine-Based Security Zones: The Cisco Virtual Security Gateway

Cisco VSG for Cisco Nexus 1000V Series Switches is a virtual appliance that controls and monitors access to trust zones in enterprise and cloud provider environments (Figure 10). Cisco VSG provides secure segmentation of virtualized data center virtual machines using detailed, zone-based control and monitoring with context-aware security policies. Controls are applied across organizational zones, lines of business, or multitenant environments. Context-based access logs are generated with network and virtual machine activity levels. Trust zones and security templates can be provisioned on demand as virtual machines are created. Refer to the Securing Cisco Virtual Workspace chapter of this guide for more information on creating and deploying security zones.

Cisco VSG employs Cisco Virtual Network Service Data Path (vPath) technology embedded in the Cisco Nexus 1000V Series Virtual Ethernet Module (VEM). Cisco vPath steers traffic to the designated Cisco VSG for initial policy evaluation and enforcement. Subsequent policy enforcement is off-loaded directly to Cisco vPath. Cisco VSG can provide protection across multiple physical servers. It can be transparently inserted in one-arm mode, and it offers an active-standby mode for high availability.

Cisco VSG can be deployed across multiple virtual machine zones and virtualized applications. It requires a virtual 1.5-GHz CPU, 2 GB of RAM, a 3-GB hard drive, and three network interfaces (data, management, and high availability). It also requires VMware vSphere and vCenter Release 4.0 or later, Cisco Nexus 1000V VEMs and VSMs, and Cisco Virtual Network Management Center (VNMC).
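The Cisco VSG resource requirements listed above can be captured in a simple pre-deployment check. This is an illustrative Python sketch. The `VmSpec` type and the `vsg_shortfalls` function are hypothetical names and are not part of any Cisco tool. Only the minimum values come from the text above.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VmSpec:
    cpu_ghz: float
    ram_gb: float
    disk_gb: float
    network_interfaces: int


# Minimums taken from the requirements paragraph above.
VSG_MINIMUM = VmSpec(cpu_ghz=1.5, ram_gb=2.0, disk_gb=3.0, network_interfaces=3)


def vsg_shortfalls(spec: VmSpec, minimum: VmSpec = VSG_MINIMUM) -> List[str]:
    """Return a list of unmet requirements; an empty list means none."""
    checks = [
        ("cpu_ghz", spec.cpu_ghz, minimum.cpu_ghz),
        ("ram_gb", spec.ram_gb, minimum.ram_gb),
        ("disk_gb", spec.disk_gb, minimum.disk_gb),
        ("network_interfaces", spec.network_interfaces,
         minimum.network_interfaces),
    ]
    return [f"{name}: have {have}, need {need}"
            for name, have, need in checks if have < need]


if __name__ == "__main__":
    # Hypothetical candidate VM with too few network interfaces.
    print(vsg_shortfalls(VmSpec(2.0, 4.0, 10.0, 2)))
```

The check covers only the virtual hardware. The software prerequisites (VMware vSphere and vCenter, Cisco Nexus 1000V VEMs and VSMs, and Cisco VNMC) still need to be verified separately.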

Figure 10 Cisco Virtual Security Gateway

Virtual Machine-Based Network Optimization: Cisco vWAAS

Cisco vWAAS is a virtual appliance that accelerates business applications delivered from private and virtual private clouds. Cisco vWAAS runs on Cisco UCS servers and the VMware vSphere hypervisor, using policy-based configuration in the Cisco Nexus 1000V Switch. Cisco vWAAS can be associated with application server virtual machines as these are instantiated or moved. With Cisco vWAAS, cloud providers can rapidly provision WAN optimization services with little to no configuration or disruption.

For more information on Cisco vWAAS, see the Virtualization Aware Network and Scaling and High Availability chapters in this guide.

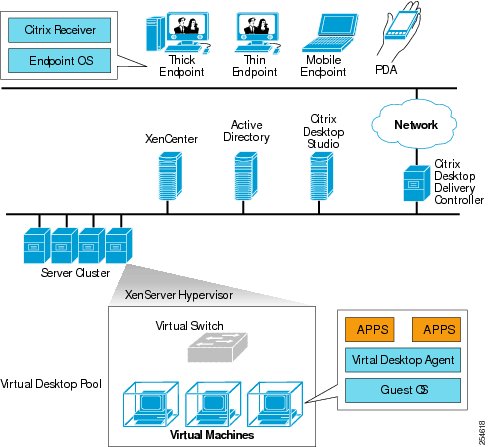

Desktop Virtualization

This Cisco Validated Design guide describes the implementation of this solution with the Citrix XenDesktop solution for desktop virtualization. With Citrix XenDesktop, organizations can create and run virtual desktops in the data center, and deliver these desktops to employees using a wide range of endpoints. Users access their virtual desktops from zero, thin, or thick clients by means of the Citrix Independent Computing Architecture (ICA) remote display protocol. Figure 11 shows a typical desktop virtualization implementation based on Citrix XenDesktop.

Figure 11 Citrix XenDesktop Desktop Virtualization Deployment

Hosted Virtual Desktop

Hosted virtual desktops (HVDs) in a Citrix XenDesktop environment can be deployed in multiple modes, such as dedicated, pooled, streamed, shared, or personal vDisks (streamed or pooled). These modes are differentiated based on the way that end users access and associate with the virtual desktop. Another way to differentiate HVDs is by the state of the virtual desktop across multiple user logins: persistent (one-to-one) or nonpersistent. Persistent desktops are tied to a specific user and generally have customizations and user data preserved through the life of the desktop. Nonpersistent desktop pools allow better scalability by creating an oversubscribed pool of desktops shared across users. These desktops are assigned to the user at login. Customizations and user data, if present in a separate data store, are applied, and when the user logs off, the desktops are released to the pool for other users. Nonpersistent desktops are recommended for task workers who require a temporary computing platform to access various enterprise applications and are not expected to store user data locally.
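The nonpersistent model described above (assign a desktop at login, return it to the pool at logoff) can be sketched as a small data structure. This Python example is a conceptual illustration only and does not reflect how Citrix XenDesktop implements brokering. The class and method names are hypothetical.

```python
from typing import Dict, Iterable, List


class NonPersistentPool:
    """Toy model of a pool of interchangeable desktops shared by users."""

    def __init__(self, desktops: Iterable[str]) -> None:
        self._free: List[str] = list(desktops)
        self._assigned: Dict[str, str] = {}

    def login(self, user: str) -> str:
        """Assign a free desktop to the user at login."""
        if user in self._assigned:
            return self._assigned[user]
        if not self._free:
            raise RuntimeError("no free desktops in pool")
        desktop = self._free.pop(0)
        self._assigned[user] = desktop
        return desktop

    def logoff(self, user: str) -> None:
        """Release the user's desktop back to the pool."""
        desktop = self._assigned.pop(user)
        self._free.append(desktop)

    @property
    def free_count(self) -> int:
        return len(self._free)


if __name__ == "__main__":
    # Two desktops serve three users who are not all logged in at once.
    pool = NonPersistentPool(["desktop-1", "desktop-2"])
    print(pool.login("alice"), pool.login("bob"))
    pool.logoff("alice")
    print(pool.login("carol"))
```

The example shows why an oversubscribed pool scales: the pool is sized for concurrent sessions rather than for the total user count.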

Citrix Virtual Desktop Agent

Citrix Virtual Desktop Agent (VDA) is installed on each HVD. The agent makes the desktop available to the endpoint client. The agent receives ICA connection requests on port 1494, prepares the desktop, and streams it to the client. It also registers the HVD with the desktop delivery controller (DDC). In the background, the Citrix VDA communicates with the DDC to update session status and provide usage statistics. The Citrix VDA uses port 8080 to communicate with DDC.
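When troubleshooting connectivity, it can help to confirm that the ports named above are reachable through any intervening firewalls. The following Python sketch uses only the standard library to test whether a TCP port accepts connections. The function name is hypothetical, and the sketch assumes plain TCP connectivity is a sufficient first check. It does not speak ICA or validate the Citrix services themselves.

```python
import socket

ICA_PORT = 1494      # ICA connection requests to the VDA (from the text above)
VDA_DDC_PORT = 8080  # VDA-to-DDC communication (from the text above)


def tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution errors.
        return False
```

For example, `tcp_port_open("hvd01.example.com", ICA_PORT)` (with a placeholder host name) would be run from a client network segment. The port 8080 check should be run from whichever side initiates that connection in the deployment.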

Citrix Online Plug-in

Citrix provides multiple client software options for use with the Citrix VDA. Depending on the endpoint hardware, the Citrix Receiver, the Online Plug-in or the Self-service plug-in can be used. The Citrix Online Plug-in provides toolbar functions, enabling users to pan and scale virtual desktops from their local desktop. Users can work with more than one desktop by using multiple Citrix Online Plug-in sessions on the same device. See Citrix product documentation for details.

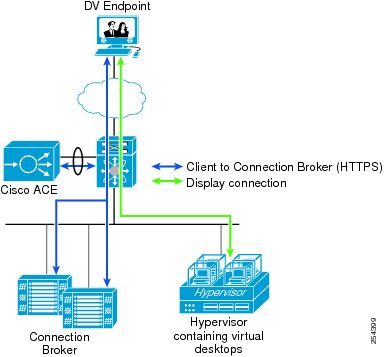

Citrix XenDesktop Controller

Citrix XenDesktop Controller is the central piece of a Citrix XenDesktop environment, and among other functions it includes a connection broker service that performs user authentication, virtual machine power management, and so on. On session establishment, it also makes Citrix HDX policy decisions and enforces any licenses, as needed. After the connection between the client and the agent is established, Citrix XenDesktop does out-of-band monitoring with assistance from the agent. Citrix XenDesktop can scale to handle many thousands of desktops on a single installed instance.

The server also performs data collection functions that can be valuable for auditing and for making desktop virtualization deployment adjustments. Administrators can create Citrix XenDesktop sites in which each controller is assigned a specialized function, such as data collection and infrastructure control. For high availability, multiple Citrix XenDesktop host servers can be deployed running broker services in the environment, preferably behind a load balancer such as the Cisco Application Control Engine (ACE) Module. Citrix XenDesktop requires that all site members be part of the same Microsoft Active Directory domain. The solution now integrates a virtual machine management server that can also create and provision virtual desktops in real time. For environments in which persistent desktops are not needed, the provisioning services can significantly optimize the storage use.

Citrix Provisioning Server

The Citrix Provisioning Server is a component that creates and provisions virtual desktops from a single desktop image on demand, optimizing storage utilization and providing a pristine virtual desktop to each user every time they log on. Desktop provisioning with the Citrix Provisioning Server also simplifies desktop images, provides outstanding flexibility, and offers fewer points of desktop management for both applications and desktops.

Citrix Personal vDisk Technology

Citrix Personal vDisk technology allows for reduced storage costs by enabling the customer to leverage a single base operating system image across multiple virtual desktop users while still providing those users with a personal, virtual disk on which to store their profile, preferences, data and even user-installed applications. This provides the benefits found in pooled desktop image management (i.e. a single OS image to manage for multiple desktop users) while maintaining a level of persistent personalization of the desktop for each particular user. With Citrix Personal vDisk technology administrators can update, patch and otherwise manage the underlying OS while the user's settings, applications and other customizations persist across updates to the layers underneath. Personal vDisks provide this separation by redirecting all changes made on the user's VM to a separate disk (i.e. the personal vDisk) attached to the user's VM. The content of the personal vDisk is blended at runtime with the content from the base VM to provide a unified image experience. In this way, users can still access applications provisioned by their administrator in the base VM. Please refer to Citrix documentation on this topic for more details: http://support.citrix.com/proddocs/topic/xendesktop-ibi/cds-about-personal-vdisks-ibi.html

Citrix Desktop Director

The Citrix Desktop Director provides a single console that IT administrators can use to monitor, troubleshoot, and assist users with virtual desktops and applications. This console can be used to assist users with Citrix XenDesktop-delivered virtual desktops, Citrix XenApp applications, and server hosted desktops.

Hosted Shared Desktops

Hosted Shared Desktops (HSDs) allow multiple users to share the same underlying guest operating system. HSDs are one of the most scalable ways to provide desktop services to users. Sharing is based on Microsoft Remote Desktop Services so the users are not completely isolated from each other. A large enterprise will typically use a mix of HVDs and HSDs. HSDs are published by Citrix XenApp 6.5. A farm of servers running the required desktops is created with XenApp running on each server. After the farm is created and all necessary applications are installed, the farm can be published on the existing Citrix XenDesktop web interface, or it can be published with a new web interface. Detailed deployment and configuration information for various Citrix XenApp deployment modes and an integration checklist can be found in the Citrix knowledge base.

To determine when to use HSDs or HVDs, Citrix recommends performing user segmentation analysis, categorizing the user base according to the applications needed for work, the resource intensiveness of those applications, and use patterns. Because hosted shared desktops share the underlying OS environment, a resource-intensive action performed by one user can affect resource availability for all other users. HSDs provide the maximum benefit to task-based workers for whom personalized desktops are not required.

Application Virtualization

Application virtualization "containerizes" and insulates applications from the guest operating system. This process permits a single virtualized application instance to run across different Microsoft Windows OS versions. It also helps ensure that malware infecting a virtualized application will not escape the container to infect the OS or other applications. Cisco has validated Citrix XenApp for application virtualization. Citrix XenApp centralizes the installation, storage, and delivery of applications within the data center. These applications can be delivered transparently on demand. Refer to the Cisco VXI Management and Operations chapter of this document as well as http://support.citrix.com/proddocs/topic/xenapp/ps-library-wrapper.html for more information.

Storage Design and Best Practices

Storage is one of the most critical elements of the virtualized data center. Analysts estimate that storage represents anywhere from 40% to 80% of the cost of a desktop virtualization investment, so designing an effective storage solution is essential to achieving Return-On-Investment goals. The storage system also has a major impact on desktop performance and user experience. With traditional desktop PCs, users have enjoyed fast, direct access to dedicated disks. With desktop virtualization, these storage resources become centralized and designers must consider the effects of latency and resource contention on the user population. Storage system design also influences the ability to scale to accommodate new users, as pilot programs expand to full-scale deployments. More users generate greater demand for storage resources, higher levels of I/Os per Second (IOPS), and higher latency. In short, the storage architecture is a significant factor in determining the success or failure of a virtualized desktop implementation.

Designing storage for desktop virtualization presents significant challenges in terms of cost and performance. It can be difficult to balance costs with capacity and IO access requirements. Organizations often over-provision their storage arrays, adding more disks than are needed to ensure sufficient levels of IO access. This tactic may preserve the user experience, but at great cost. Storage design is also complicated by the episodic nature of user access. Steady state operation, for example, may require only eight or ten IOPS per desktop. During a login storm, when large numbers of users log in at about the same time, IOPS may burst to very high levels. Deploying sufficient storage to handle peak loads results in excess capacity during steady state operations. On the other hand, insufficient IOPS during peak load situations can cause significant delays for end users, as they are unable to access their desktops.
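The trade-off between sizing for steady state and sizing for peak load can be made concrete with a rough calculation. The sketch below uses the upper steady-state figure quoted above (ten IOPS per desktop); the desktop count and the login-storm figure are hypothetical values chosen for illustration and should be replaced with measured data.

```python
def required_iops(desktops: int, iops_per_desktop: float) -> float:
    """Aggregate IOPS demand for a population of desktops."""
    return desktops * iops_per_desktop

DESKTOPS = 1000           # hypothetical deployment size
STEADY_STATE_IOPS = 10    # upper steady-state figure from the text
LOGIN_STORM_IOPS = 50     # hypothetical peak figure, not from the guide

steady = required_iops(DESKTOPS, STEADY_STATE_IOPS)
peak = required_iops(DESKTOPS, LOGIN_STORM_IOPS)

# Fraction of peak-sized IOPS capability that sits unused in steady state.
unused_fraction = 1 - steady / peak

print(f"steady-state demand: {steady:.0f} IOPS")
print(f"login-storm demand:  {peak:.0f} IOPS")
print(f"unused at steady state if sized for peak: {unused_fraction:.0%}")
```

Under these assumed numbers, an array sized for the login storm would leave most of its IOPS capability idle during normal operation, which is the over-provisioning cost the paragraph describes.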

In virtualized environments, IO also tends to be highly random. The Windows operating system is designed to optimize IO operations so that blocks are written to (or read from) disk sequentially. This sequential operation minimizes the time required for disk reads/writes and improves performance. In the virtual world, hypervisors tend to produce smaller random blocks of IO (also known as the blender effect). These random IO operations have a significant impact on disk performance, and may introduce unacceptable levels of latency.
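The blender effect can be shown with a toy model. In the sketch below, each virtual machine issues perfectly sequential block requests within its own region of a shared disk, and the hypervisor is assumed to interleave them in strict round-robin order. Real hypervisor scheduling is not this regular; the VM count, request count, and region size are hypothetical.

```python
def sequential_fraction(blocks: list[int]) -> float:
    """Fraction of requests that target the block right after the previous one."""
    if len(blocks) < 2:
        return 1.0
    hits = sum(1 for prev, cur in zip(blocks, blocks[1:]) if cur == prev + 1)
    return hits / (len(blocks) - 1)

VM_COUNT = 4         # hypothetical number of desktops on one host
IOS_PER_VM = 8       # hypothetical requests issued by each desktop
DISK_REGION = 1000   # hypothetical spacing between the VMs' virtual disks

# Each VM reads consecutive blocks inside its own region of the shared disk.
per_vm_streams = [
    [vm * DISK_REGION + i for i in range(IOS_PER_VM)]
    for vm in range(VM_COUNT)
]

# Assumed round-robin interleaving of the per-VM streams by the hypervisor.
blended = [block for group in zip(*per_vm_streams) for block in group]

for vm, stream in enumerate(per_vm_streams):
    print(f"VM {vm}: {sequential_fraction(stream):.0%} sequential")
print(f"blended stream: {sequential_fraction(blended):.0%} sequential")
```

Each guest sees its own IO as fully sequential, but the stream that reaches the shared disk jumps between regions on every request, which is why the array experiences the workload as random.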

The goal of the storage system designer, then, is to implement a solution that is cost-effective, predictable, and responsive to sudden changes in workload. To that end, the storage architecture is designed to integrate a wide range of storage technologies and optimization techniques. Cisco design goals include:

• Flexibility: validate multiple approaches so that customers can confidently deploy solutions tailored to their environments. Support NAS- and SAN-based central, shared storage, as well as a range of local storage options (server memory, local HDDs, SSDs, and UCS Storage Accelerator).

• Efficiency: protect customer Return-On-Investment (ROI) by ensuring that storage resources are optimized. Blend Cisco and ecosystem partner capabilities such as local storage, thin provisioning, caching, non-persistent pooled desktops, and other approaches that improve the efficiency of the overall storage system.

• Performance: ensure a superior end user experience by validating Cisco and ecosystem partner solutions such as SSDs, UCS Storage Accelerator, IOPS offloading, and other approaches that can help provide predictable performance.

Storage Deployment Models

This Smart Solution has validated three major approaches to implementing storage in a Citrix desktop virtualization deployment. These models include: (1) central shared storage, (2) local server-based SSD with central shared storage, and (3) local server-based Cisco UCS Storage Accelerator with central shared storage.

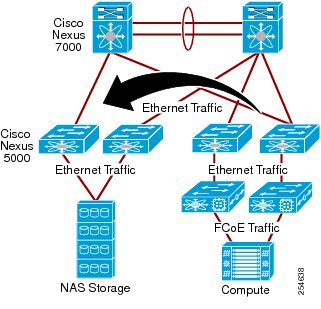

Central Shared Storage

Traditionally, virtualization vendors recommended centralized, shared storage systems for virtual desktop deployments. In this deployment model, the shared storage system contains the user's machine environment, including the operating system, user profiles, and user data (see Figure 12). These systems, based on high capacity storage arrays, offer centralized security, high performance, and resource sharing. Centralized shared storage also facilitates the use of advanced hypervisor features and aids in desktop migration.

The solution supports both Network-Attached Storage (NAS) and Storage Area Networks (SAN). Shared storage systems are provided by ecosystem partners EMC and NetApp. Each partner offers solutions that support both NAS and SAN. In the test environment, the EMC VNX system was deployed for SAN-based block storage, and the NetApp FAS system was configured for file-based NAS.

The EMC Unified Storage solution provides advanced failover and fully automated storage tiering to virtual desktop environments. EMC Unified Storage solutions can connect to multiple storage networks through NAS, Small Computer System Interface over IP (iSCSI), and Fibre Channel SANs. Cisco system testing validated the EMC Unified Storage solutions for SAN deployments. Hosts were configured to boot from the SAN.

The NetApp FAS Series is a unified storage solution that supports both SAN and NAS deployments. NetApp storage arrays are highly optimized for the intensive read and write workload typical of virtual desktop environments (see the product documentation for more information). NetApp storage runs the Data ONTAP operating system, which provides SAN (FCoE, Fibre Channel, and iSCSI), NAS (Common Internet File System [CIFS] and Network File System [NFS]), primary storage, and secondary storage on a single platform so that all virtual desktop components (virtual machine OS, user persona, user data, applications, etc.) can be hosted on the same unified storage array.

Deployment Notes

• In this model, the VMs are configured to boot from the SAN system in accordance with hypervisor recommendations.

• Virtual machines, desktop images, and user data are stored centrally in the shared arrays.

• Advanced storage features integral to the partner systems such as compression, de-duplication, caching, tiering, and other optimizations are enabled.

• Storage optimization appliances that offload IOPS processing, perform in-line de-duplication, and deliver sequential IO can provide substantial benefits in this model. Refer to Cisco Design Zone for documentation on optimization products.

Figure 12 Centralized, Shared Storage

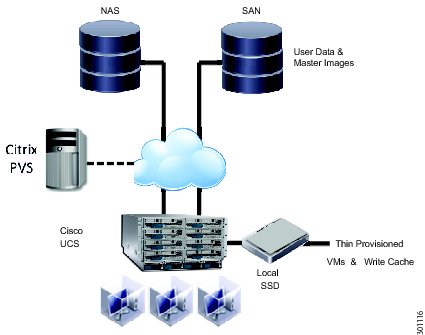

Local SSD and Central Shared Storage

This model takes advantage of solid-state drives (SSDs) on Cisco UCS servers to extend and complement the shared storage system. In this approach, non-persistent files and thin-provisioned VMs are stored locally on the Cisco UCS server SSDs. The SSDs also provide a local write cache for the Citrix Provisioning Server (see Figure 13). User data and master images are maintained on shared storage. The objective is to reduce demand on the shared storage system by servicing many requests from the local SSD. This hybrid design is intended to reduce overall storage costs.

During steady state operations (normal desktop usage), Write IO tends to be the predominant workload for storage systems. Write IO is also more expensive than Read IO in terms of resource consumption. In production-class desktop virtualization environments, large numbers of random Write operations can negatively impact storage performance. By moving the Write cache to the server SSDs, these Write operations are offloaded and addressed locally for improved performance.

Multiple SSDs can be deployed per server to scale the solution and further reduce the amount of traffic bound for the shared storage system. Cisco UCS B Series servers support a maximum of two SSDs, while some UCS C Series servers can support up to 24.

Deployment Notes

• A best practice is to enable Write Cache on the RAID controller (which should have battery backup).

• Recommended for use with non-persistent desktop deployments only.

• This model introduces a higher risk of data loss in the event of a failure. RAID 1 is recommended where possible, although mirroring reduces usable capacity.

Figure 13 Local SSD and Shared Storage

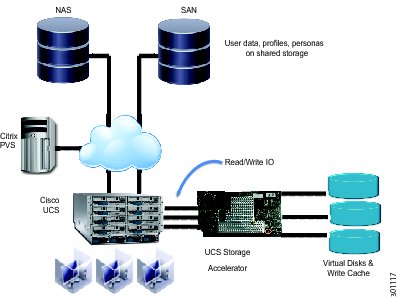

Cisco UCS Storage Accelerator and Central Shared Storage

The UCS Storage Accelerator is a Flash-based caching solution that resides on a Cisco UCS B200 M3 blade server. The accelerator is a 785 GB mezzanine card that delivers much higher levels of IOPS than a typical shared storage system. IO requests for a particular server are contained within that server, which reduces latency compared to traditional approaches. This enables network administrators to support a guaranteed number of users at a lower cost, and with predictable performance.

In this model, a golden master image and all associated clone images are hosted on the Cisco UCS Storage Accelerator, which is installed on the blade server. A central copy of the golden master image can be maintained on the shared storage system. User data, profiles, and personas are also saved on the shared system. This approach also improves scalability by enabling support for large numbers of users, while providing faster boot-up times.

Deployment Notes

• The hypervisor should be configured to store non-persistent desktop images on the server-based accelerator.

• Store persistent information such as user data on the shared storage system.

• If a server fails, users simply re-connect to another desktop through their connection broker.

• VMware vSphere 5.1 supports live migration of virtual desktops without requiring shared storage.

Figure 14 Cisco UCS Accelerator and Shared Storage

Storage Capacity Best Practices

Storage capacity is the amount of disk space needed to accommodate the virtual machines used for desktop virtualization. Capacity requirements can be roughly estimated by multiplying the number of virtual machines by the hard-disk space allocated per virtual machine. For example, 100 virtual machines that are each assigned 30 GB of disk space require 100 x 30 GB = 3 TB of capacity. Refer to the Scaling and High Availability chapter of this guide for information on storage capacity planning. Cisco also recommends working closely with both storage and virtualization vendors to help ensure that storage resources are used efficiently.
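The rough estimate described above amounts to a single multiplication. The sketch below reproduces the example from the text; the function name is illustrative, and the result is a raw figure that ignores thin provisioning, deduplication, RAID overhead, and growth.

```python
def raw_capacity_gb(vm_count: int, disk_gb_per_vm: float) -> float:
    """Rough capacity estimate: number of VMs times allocated disk per VM."""
    return vm_count * disk_gb_per_vm

# Example from the text: 100 virtual machines with 30 GB each.
total_gb = raw_capacity_gb(vm_count=100, disk_gb_per_vm=30)
total_tb = total_gb / 1000  # decimal terabytes, matching the text's 3 TB

print(f"{total_gb:.0f} GB = {total_tb:.0f} TB")
```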

The storage arrays support various forms of thin provisioning. Thin provisioning is a form of over-subscription that makes storage available as needed. This capability enables higher storage use, and reduces the need to overprovision and preallocate physical disk capacity.
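The over-subscription behind thin provisioning can be sketched as a pool that tracks logical allocations separately from physical consumption. The class, the pool size, and the per-desktop write volume below are hypothetical and do not represent any vendor's implementation.

```python
from dataclasses import dataclass

@dataclass
class ThinPool:
    """Toy thin-provisioned pool: space is promised up front, consumed on write."""
    physical_gb: float
    allocated_gb: float = 0.0  # logical capacity promised to VMs
    written_gb: float = 0.0    # physical capacity actually consumed

    def provision(self, size_gb: float) -> None:
        # Provisioning only records the promise; no physical space is reserved.
        self.allocated_gb += size_gb

    def write(self, size_gb: float) -> None:
        # Physical space is consumed only when data is written.
        if self.written_gb + size_gb > self.physical_gb:
            raise RuntimeError("thin pool is out of physical space")
        self.written_gb += size_gb

    @property
    def oversubscription(self) -> float:
        return self.allocated_gb / self.physical_gb

pool = ThinPool(physical_gb=1500)  # hypothetical physical capacity
for _ in range(100):
    pool.provision(30)  # each desktop is promised 30 GB
    pool.write(10)      # hypothetical: each desktop actually writes 10 GB

print(f"allocated {pool.allocated_gb:.0f} GB, written {pool.written_gb:.0f} GB, "
      f"over-subscription {pool.oversubscription:.1f}x")
```

The `RuntimeError` path marks the risk that comes with over-subscription: if desktops write more than the physical pool holds, allocations that looked valid can no longer be honored, so consumption needs to be monitored.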

Storage arrays also typically provide the capability to identify and eliminate duplicate data from disks. With deduplication enabled, only unique data blocks are stored. Duplicate blocks are discarded, and their associated disk space is reclaimed. The deduplication process can be scheduled to run at specific intervals. A best practice is to run deduplication at off-peak intervals to reduce impact on application performance.
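A minimal sketch of block-level deduplication follows. It assumes fixed-size blocks identified by a SHA-256 fingerprint; production arrays use their own block sizes, fingerprinting schemes, and metadata structures, so this only illustrates the principle that duplicate blocks are stored once.

```python
import hashlib

BLOCK_SIZE = 4096  # hypothetical block size

def deduplicate(data: bytes, block_size: int = BLOCK_SIZE):
    """Split data into fixed-size blocks and keep one copy of each unique block."""
    store = {}   # fingerprint -> block contents (stored once)
    recipe = []  # ordered fingerprints needed to rebuild the original data
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        fingerprint = hashlib.sha256(block).hexdigest()
        store.setdefault(fingerprint, block)
        recipe.append(fingerprint)
    return store, recipe

def restore(store: dict, recipe: list) -> bytes:
    """Rebuild the original data from the unique blocks and the recipe."""
    return b"".join(store[fingerprint] for fingerprint in recipe)

# Ten blocks, nine of them identical (for example, common OS files across desktops).
os_block = b"A" * BLOCK_SIZE
user_block = b"B" * BLOCK_SIZE
data = os_block * 9 + user_block

store, recipe = deduplicate(data)
print(f"logical blocks: {len(recipe)}, unique blocks stored: {len(store)}")
```

Virtual desktops cloned from the same image contain many identical blocks, which is why deduplication tends to be effective in this environment.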

Storage requirements also can be reduced with features provided by the desktop virtualization solution. Citrix MCS, Citrix Provisioning Services (PVS), and Citrix Personal vDisk (PVD) all start from a single master image and thus can further reduce the amount of disk space consumed.

IOPS Best Practices

IOPS loads for virtualized environments can vary greatly. For example, when virtual machines boot, large amounts of data must be read from disk. Other operations (such as logins and logoffs) generate many disk write operations. IOPS loads are subject to large peaks, such as when many users start using their virtual desktops at roughly the same time. Scheduled tasks such as virus scans and file index searching also can cause peaks in IOPS load.

To optimize read IOPS, master images that will be read by many virtual machines should be placed in a fast-access data store. Both EMC and NetApp storage solutions have been validated and provide technologies to cache the master image in the first and fastest level of cache on the storage array.